Motivated by the success of masked language modeling (MLM) in pre-training natural language processing models, the authors propose w2v-BERT, which explores MLM for self-supervised speech representation learning.

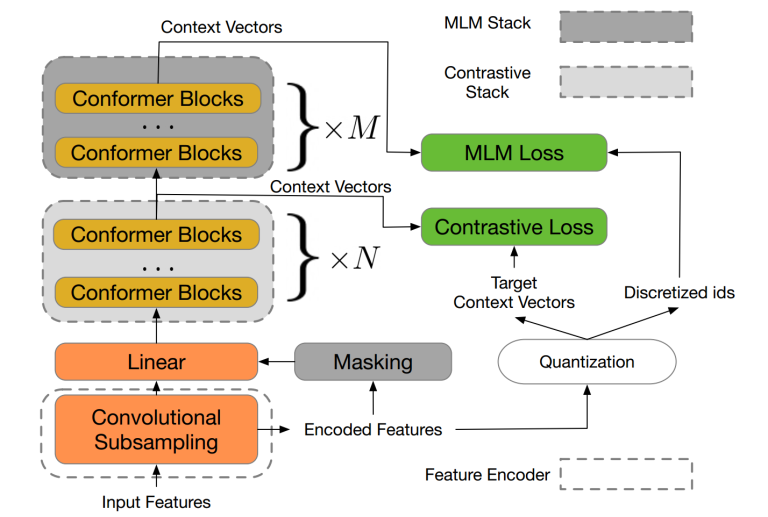

w2v-BERT is a framework that combines contrastive learning and MLM. The former trains the model to discretize continuous input speech signals into a finite set of discriminative speech tokens, and the latter trains the model to learn contextualized speech representations by solving a masked prediction task that consumes the discretized tokens.
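To make the two roles concrete, here is a minimal sketch of how continuous frames could become discrete tokens that then serve as masked-prediction targets. It is an illustration only: the nearest-neighbor lookup stands in for the learned quantizer trained by the contrastive task, and the names `quantize`, `masked_targets`, the toy `codebook`, and the chosen mask positions are all assumptions, not taken from the paper.

```python
def quantize(frames, codebook):
    """Map each frame to the index of its nearest codebook vector.

    Assumption: a plain nearest-neighbor lookup, used here as a stand-in
    for the learned quantization module.
    """
    def sq_dist(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))

    return [
        min(range(len(codebook)), key=lambda k: sq_dist(frame, codebook[k]))
        for frame in frames
    ]


def masked_targets(token_ids, masked_positions):
    """Collect the token IDs the model must predict at masked positions."""
    return {t: token_ids[t] for t in masked_positions}


# Toy data (hypothetical): four 2-dimensional frames and a 2-entry codebook.
frames = [[0.1, 0.0], [0.9, 1.1], [0.0, 0.2], [1.0, 0.8]]
codebook = [[0.0, 0.0], [1.0, 1.0]]

token_ids = quantize(frames, codebook)       # [0, 1, 0, 1]
targets = masked_targets(token_ids, [1, 2])  # {1: 1, 2: 0}
```

The masked prediction task then asks the model to recover `targets` from the surrounding unmasked context.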

In contrast to existing MLM-based speech pre-training frameworks such as HuBERT, which relies on an iterative re-clustering and re-training process, or vq-wav2vec, which concatenates two separately trained modules, w2v-BERT can be optimized end to end by solving the two self-supervised tasks (the contrastive task and MLM) simultaneously.
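Solving both tasks simultaneously amounts to minimizing one combined objective per training step. The sketch below shows the idea under stated assumptions: an InfoNCE-style contrastive term, a cross-entropy MLM term, and a weighted sum with both weights defaulting to 1.0. The function names, the `temperature` value, and the weights are illustrative choices, not values confirmed by this article.

```python
import math


def _log_softmax_nll(logits, target_index):
    """Negative log-likelihood of `target_index` under softmax(logits)."""
    m = max(logits)  # subtract the max for numerical stability
    log_z = m + math.log(sum(math.exp(l - m) for l in logits))
    return log_z - logits[target_index]


def _cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def contrastive_loss(context, target, distractors, temperature=0.1):
    """InfoNCE-style loss: pick the true quantized target among distractors."""
    candidates = [target] + list(distractors)
    logits = [_cosine(context, c) / temperature for c in candidates]
    return _log_softmax_nll(logits, 0)  # the true target sits at index 0


def mlm_loss(token_logits, token_id):
    """Cross-entropy for predicting the discrete token at a masked position."""
    return _log_softmax_nll(token_logits, token_id)


def joint_loss(l_contrastive, l_mlm, w_contrastive=1.0, w_mlm=1.0):
    """Weighted sum of the two self-supervised losses (weights are assumed)."""
    return w_contrastive * l_contrastive + w_mlm * l_mlm


# Toy example (hypothetical values) for a single masked position.
l_c = contrastive_loss([1.0, 0.0], [1.0, 0.0], [[0.0, 1.0], [-1.0, 0.0]])
l_m = mlm_loss([0.0, 0.0, 0.0, 0.0], token_id=2)
total = joint_loss(l_c, l_m)
```

Because a single scalar `total` drives the update, there is no separate clustering or second-stage training loop, which is the contrast with HuBERT and vq-wav2vec drawn above.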

The experiments show that w2v-BERT achieves competitive results compared to current state-of-the-art pre-trained models on the LibriSpeech benchmarks when using the Libri-Light 60k corpus as the unsupervised data.

In particular, when compared to published models such as conformer-based wav2vec 2.0 and HuBERT, the presented model shows a 5% to 10% relative WER reduction on the test-clean and test-other subsets. When applied to Google's Voice Search traffic dataset, w2v-BERT outperforms the authors' internal conformer-based wav2vec 2.0 by more than 30% relatively.
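For readers unfamiliar with the term, a relative WER reduction measures the improvement as a fraction of the baseline word error rate rather than in absolute points. The short sketch below shows the arithmetic; the WER values are hypothetical placeholders chosen for illustration, not results from the paper.

```python
def relative_wer_reduction(baseline_wer, new_wer):
    """Fraction by which `new_wer` improves on `baseline_wer`."""
    if baseline_wer <= 0:
        raise ValueError("baseline_wer must be positive")
    return (baseline_wer - new_wer) / baseline_wer


# Hypothetical numbers: a baseline at 2.0% WER and a new model at 1.8% WER.
reduction = relative_wer_reduction(2.0, 1.8)
print(f"{reduction:.1%} relative WER reduction")
```

With these placeholder values, a 0.2-point absolute drop corresponds to roughly a 10% relative reduction.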

You can view the full article here.

There is also a tutorial video on YouTube.