Can computers think? Can AI models be conscious? These and similar questions often come up in discussions of the recent AI progress achieved by natural language models such as GPT-3, LaMDA, and other transformers. They remain controversial and border on paradox, because there are usually many hidden assumptions and misconceptions about how the brain works and what thinking means. There is no other way forward than to state these assumptions explicitly and then explore how human information processing could be replicated by machines.

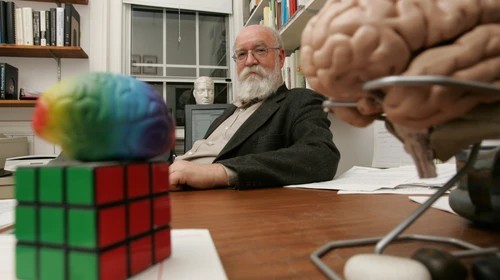

Recently a team of AI scientists undertook an interesting experiment. They took the popular GPT-3 neural model by OpenAI and fine-tuned it on the full corpus of the works of Daniel Dennett, an American philosopher, writer, and cognitive scientist whose research centers on the philosophy of mind and science. The aim, as stated by the researchers, was to see whether the AI model could answer philosophical questions the way the philosopher himself would. Dennett took part in the experiment and answered ten philosophical questions, which were then fed into the fine-tuned version of the GPT-3 transformer model.

The experimental setup was simple and straightforward. Ten questions were posed to both the philosopher and the computer. An example question is: “Do human beings have free will? What kind or kinds of freedom are worth having?” The AI was prompted with the same questions, augmented with context that framed each question as part of an interview with Dennett. The answers from the computer were then filtered by the following algorithm: 1) each answer was truncated to roughly the same length as the human’s response; 2) answers containing revealing words (such as “interview”) were dropped. Four AI-generated answers were obtained for every question, and no cherry-picking or editing was done.
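The two filtering steps described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the researchers' actual code: the function names are hypothetical, length is assumed to be measured in words, and the list of revealing words contains only the one example the article gives.

```python
import re

# Hypothetical list: "interview" is the only revealing word named in the article.
REVEALING_WORDS = ("interview",)


def truncate_to_length(answer: str, reference: str) -> str:
    """Cut `answer` to at most as many words as `reference`.

    Assumption: "roughly the same length" is measured in words.
    """
    limit = len(reference.split())
    return " ".join(answer.split()[:limit])


def contains_revealing_word(answer: str, words=REVEALING_WORDS) -> bool:
    """Return True if any revealing word appears as a whole word (case-insensitive)."""
    lowered = answer.lower()
    return any(
        re.search(rf"\b{re.escape(word.lower())}\b", lowered) for word in words
    )


def filter_answers(candidates, human_answer, words=REVEALING_WORDS):
    """Apply step 1 (truncate) and then step 2 (drop revealing answers)."""
    truncated = [truncate_to_length(c, human_answer) for c in candidates]
    return [a for a in truncated if not contains_revealing_word(a, words)]
```

Calling `filter_answers(generated, dennett_answer)` on a list of generated strings returns the shortened answers that survive both steps. How the real pipeline measured length or chose where to cut is not stated in the article.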

How were the results evaluated? The reviewers were presented with a quiz in which the aim was to pick the “correct” answer from a batch of five, where the other four came from the artificial intelligence. The quiz is available online for anyone who wants to test their detective skills, and we recommend trying it to see whether you can fare better than the experts:

https://ucriverside.az1.qualtrics.com/jfe/form/SV_9Hme3GzwivSSsTk

The result was not entirely unexpected. “Even knowledgeable philosophers who are experts on Dan Dennett’s work have substantial difficulty distinguishing the answers created by this language generation program from Dennett’s own answers,” said the research leader. The participants’ scores were not much better than random guessing on some of the questions and a bit better on the others.
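To make the "random guess" comparison concrete, here is a minimal sketch of the chance baseline for this quiz format. It uses only the numbers given in the article (five options per question, ten questions); the function names are hypothetical, and no actual participant scores are assumed.

```python
def chance_accuracy(n_options: int) -> float:
    """Probability of picking the one human answer by guessing uniformly."""
    return 1 / n_options


def expected_correct_by_chance(n_questions: int, n_options: int) -> float:
    """Expected number of correct picks when every question is a blind guess."""
    return n_questions * chance_accuracy(n_options)


# One human answer among five options, ten questions in total.
baseline = chance_accuracy(5)
expected = expected_correct_by_chance(10, 5)
```

With five options, a blind guesser is right 20% of the time, or about two questions out of ten, so any reviewer score has to be read against that floor.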

What insights can we gain from this research? Does it mean that GPT-like models will soon be able to replace humans in many fields? Does it have anything to do with thinking, natural language understanding, and artificial general intelligence? Will machine learning produce human-level results, and if so, when? These are important and interesting questions, and we are still far from the final answers.