Ever stared down a test question, the answer lurking just beneath the surface of your mind? You recognize the long, familiar path to the solution, but a knot of panic forms in your stomach: there just isn’t enough time.

Been there! I faced a similar challenge when working on a problem from the GATE DA 2024 paper.

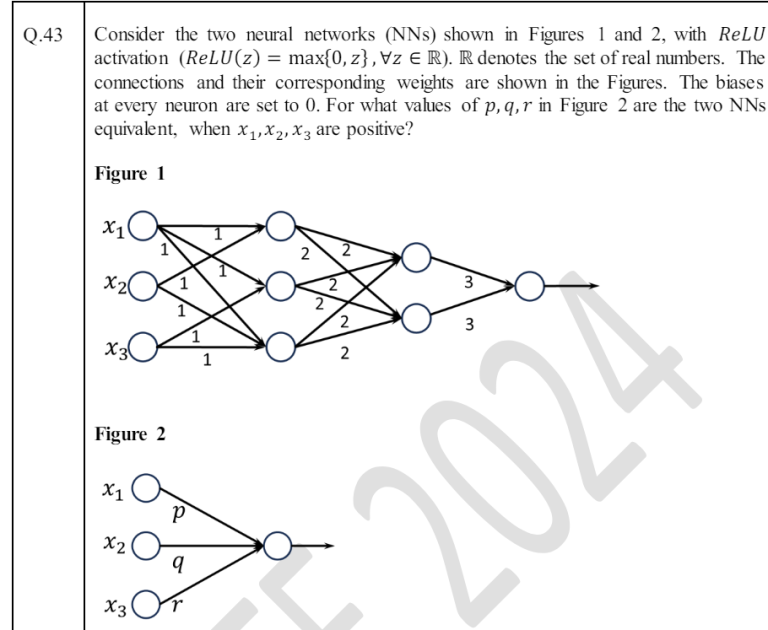

The problem

New to neural networks? This video by 3Blue1Brown explains them. Worth a watch!

https://www.youtube.com/watch?v=aircAruvnKk&list=PLZHQObOWTQDNU6R1_67000Dx_ZCJB-3pi&index=1&ab_channel=3Blue1Brown

For the neural network veterans out there, let’s tackle this problem head-on first. The key to simplifying the solution lies within the problem details themselves.

Here’s why:

- We’re using the ReLU activation function, which means any positive input simply passes through unchanged.

- Additionally, all the weights and inputs (x1, x2, x3) are given as positive values.

Since ReLU only zeroes out negative values and we only have positive numbers floating around, every weighted sum stays positive and we can ditch the ReLU function altogether! This lets us solve the equations directly with the actual values, streamlining the process.
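To make the "ditch the ReLU" step concrete, here is a minimal sketch of a single neuron. The input and weight values are placeholders I made up for illustration, not the numbers from the paper; the only point is that with positive weights and positive inputs, the output is the same with or without ReLU.

```python
def relu(x: float) -> float:
    """Rectified linear unit: returns x for positive x, 0 otherwise."""
    return max(0.0, x)


def neuron(inputs, weights, use_relu=True):
    """Weighted sum of the inputs, optionally passed through ReLU."""
    z = sum(w * x for w, x in zip(weights, inputs))
    return relu(z) if use_relu else z


# Hypothetical positive inputs and weights (not taken from the question).
inputs = [1.0, 2.0, 3.0]
weights = [1.0, 1.0, 1.0]

with_relu = neuron(inputs, weights, use_relu=True)
without_relu = neuron(inputs, weights, use_relu=False)

# Both values are identical because the weighted sum is positive.
print(with_relu, without_relu)
```

Because the same holds at every neuron, the whole network behaves like a plain chain of weighted sums for this question.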

Simple substitution gets us the answers. Let’s see how p, q, and r unfold!

Voilà! We have p, q, and r as 36, 24, and 24.

While this method works like a charm, it can be time-consuming and prone to errors.

Luckily, there’s a faster approach we can use. It may sound unconventional, but sometimes the answer lies not just in calculations, but in a quiet inner hunch. This is where intuition comes in.

We’ve observed that:

- Final Layer Dependence: The final layer’s activation depends equally on all activations from the third layer onwards.

- Balanced Propagation: Nodes in the third layer receive equal influence from all nodes in the second layer, because the weights are equal.

These observations are like breadcrumbs leading us towards a simpler solution: everything after the second layer treats its inputs identically, so we can focus solely on how the first layer affects the second layer.

The First Two Layers Reveal:

- Dominant Input: Unlike x2 and x3, x1 connects to all three nodes in the next layer. This suggests a stronger influence on the overall outcome.

- Equal Weights: With all weights being equal, the extra connection from x1 translates directly into a larger impact on the final output compared to x2 and x3.

- Balanced Impact: Since x2 and x3 connect to only two nodes each, and the weights are equal, they have an equal impact on the final output.

We have p > q = r. Now we can scan the options and find the one that matches this. Luckily, only one option satisfies it.
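The path-counting argument can be sketched in a few lines of Python. Everything about the network below is my assumption, since the figure is not reproduced in the text: the exact first-layer wiring for x2 and x3, the later layer sizes (4 and 3 nodes, then a single output), and unit weights throughout. I also assume p, q, and r are the coefficients of x1, x2, and x3 in the output. The sizes are picked so that the toy numbers line up with the 36, 24, and 24 found earlier; the real network may differ.

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [
        [sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def ones(rows, cols):
    """A fully connected layer where every weight is 1."""
    return [[1] * cols for _ in range(rows)]


# first_layer[j][i] == 1 if input i feeds second-layer node j (assumed wiring).
# x1 reaches all three nodes; x2 and x3 reach two nodes each.
first_layer = [
    [1, 1, 0],
    [1, 1, 1],
    [1, 0, 1],
]

# Assumed downstream layers: fully connected, equal weights.
downstream = [ones(4, 3), ones(3, 4), ones(1, 3)]

# With ReLU acting as the identity, the network is a product of weight matrices.
coeffs = first_layer
for layer in downstream:
    coeffs = matmul(layer, coeffs)

p, q, r = coeffs[0]
print(p, q, r)
```

The downstream layers multiply every second-layer node by the same factor (12 in this toy setup), so the ordering p > q = r is already fixed by the column sums 3, 2, 2 of the first layer.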

This analysis isn’t just a hunch; it’s a clear signpost! By focusing on the first layer and the dominant influence of x1, we’ve cracked the problem using a simpler sub-network. Gone are the days of complex calculations: the key lies in these fundamental relationships. We’ve found a streamlined solution, guided not by brute-force calculation, but by a keen understanding of the network’s inner workings, observations that let us simplify aggressively, and a touch of intuition.