“All fashions are flawed, however some are helpful” — George E.P. Field.

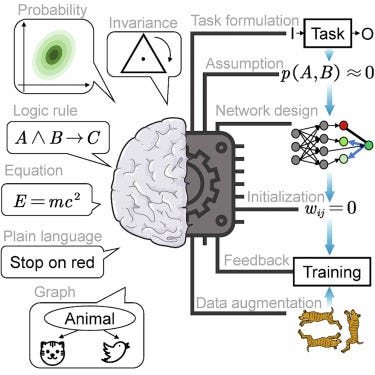

On this Weblog, I purpose to delineate a key class of problem-solving methodologies that we’ll time period as Principle Embedded Studying (TEL). TEL uniquely blends machine studying answer approaches with well-grounded theories or ideas. As such, TEL inherits the flexibleness and differentiability of recent machine studying and the robustness of well-

established theories.

This primary part supplies an outline of Principle Embedded Studying (TEL). Following chapters will talk about particular situations of TEL as utilized to various fields similar to By-product Pricing, Optimum Management, and Combinatorial Optimization. These examples will primarily draw from my revealed and ongoing analysis. The fixing means of scientific and Engineering issues can typically be seen as a computational path connecting from knowledge to options, and people paths are dictated by sure assumptions. Downside-solving first requires establishing two units of assumptions: those that replicate our prior information about the issue at hand (prior information assumptions), and those that represent an indispensable prerequisite for rendering the issue solvable

(solvability assumptions). These assumptions manifest themselves right into a mannequin or a computational framework. As an illustration, within the real-world optimization drawback of discovering optimum routes for a number of autos, our prior information concerning the linear relationship between journey distance and prices, and our assumptions in goal and constraint parameters, result in a computational framework within the type of a combined integer linear program (MILP) named Vechicle Routing Problem¹. Equally, in theoretical choice pricing, our prior information of no-arbitrage precept and our assumptions that the underlying asset follows a specific stochastic differential equation (SDE), result in a computational framework famously often known as the Black Scholes model².

As soon as the computational framework or mannequin is manifested by the assumptions, this framework facilitates in addition to dictates what kind of subsequent computations must be completed, and rigidly constrains how we’d characterize the answer of the issue. For instance, for Automobile Routing Issues, as soon as we arrange the combined integer linear programming (MILP) framework, sequence of computations are enabled and carried out contained in the optimization solvers (e.g. globally through Column Technology routine or regionally through simplex methodology), and the top results of this computational path is the optimum answer for the issue at hand represented within the type of resolution variables and the optimality circumstances they fulfill. Equally, for theoretical choice pricing issues, as soon as we’re below the Black-Scholes framework, sequence of derivations and computations are completed utilizing stochastic calculus to represents the no-arbitrage pricing precept, and we attain a parabolic partial differential equation (generalized Black Scholes PDE) that choice value ought to fulfill. This PDE is the mathematical relations that the answer ought to fulfill, and in some sense, that is how we characterize the answer. We have to resolve this PDE both analytically or numerically to get the specified pricing perform.

In essence, the computational framework emerges from the assumptions we make throughout drawback fixing (prior information and the necessities for solvability). This framework, in flip, prescribes the computational steps wanted to reach at an answer and, importantly, it additionally defines how this answer is represented. This coherence between assumptions, mannequin, computation, and answer illustration is important for subsequent discussions on Precept-driven strategy, studying based mostly strategy and Principle Embedded Studying.

The principle-driven strategy is one manifestation of the assumptions, mannequin, and computations coherence. For the computations to be tractable (ideally analytically), the precept pushed approaches make a number of sturdy assumptions to simplify the issue and restrict its diploma of freedom. Mixed with assumptions that represents prior information associated to the useful working of the issue at hand, they result in a computational framework that’s exact however biased. It’s exact as a result of the next computations below this framework are fairly structured: the framework typically includes a set of equations representing the relationships that must be held between sure portions, or some equilibrium or optimality circumstances that must be happy, and subsequent computations merely exploit these relations to succeed in the ultimate converged outcomes. It’s biased as a result of the assumptions made proper from the beginning could also be too sturdy, they usually could also be solely needed for solvability however deviate from the reality. Because the precept pushed strategy follows a strict linear stream (assumptions, mannequin, and computations), this bias injected originally can result in sub-optimality of the options on the finish of this computational course of.

The machine studying based mostly strategy is one other compelling manifestation of the idea, mannequin, computation, and answer illustration coherence. It’s value revisiting that principle-driven strategies make use of two distinct kinds of assumptions: assumptions for solvability and assumptions for prior information illustration. Distinctly, the machine learning-based strategy doesn’t invoke any of the solvability assumptions. It solely operates below a single, modest prior information assumption: the transformation from the preliminary uncooked knowledge to the specified answer could be adequately approximated by the machine studying mannequin that we go for (assumptions such because the coaching knowledge having an i.i.d. distribution and the info containing the important info for studying are implicitly encompassed inside this single assumption). This broad but weak assumption inherently produces a mannequin (because it’s the one we initially select) that bridges the hole between the enter and the specified output straight. In distinction to the mannequin utilized within the principle-driven strategy, the mannequin on this context is comparatively unbiased, primarily attributed to the minimal assumptions it makes, resulting in the flexibleness of studying based mostly approaches. Nevertheless, this lack of bias comes with a caveat; the mannequin is notably imprecise. It endeavors solely to align with the correlations within the knowledge, with full disregard for the useful mechanisms inherent to the issue being solved.

Following this setup, the next computations are common, strictly adhering to the replace protocols of the chosen machine studying mannequin. This may contain gradient descent in deep studying, quadratic programming in Assist Vector Machines, or a grasping search in resolution bushes, amongst others. The kind of computations stays the identical no matter the number of issues we feed into the the identical chosen machine studying mannequin. Quite the opposite, in principle-driven methods, as soon as the assumptions has been made and the mannequin has been established, the next computations concerned comprise each problem-specific computations (similar to deriving Black-Scholes Partial Differential Equations from Stochastic Differential Equations) and common computations (for example, the PDE solver which, whatever the PDE we enter, performs related operations). Whereas within the learning-based strategy, all computations are common and rely solely on the chosen ML mannequin and its mounted replace guidelines. Whereas this universality of ML computations eliminates the need for solvability assumptions, it presents a problem relating to integrating prior information into our answer course of. For instance, think about making an attempt to include the precept of no-arbitrage into your loss perform whenever you’re coaching a big neural community in a supervised trend from enter to actual choice value. This represents a major impediment because the universality of the machine studying computations could not lend itself to the easy integration of such domain-specific information.

In some sense, within the studying based mostly approaches, these common and brute-force computations change the meticulous and problem-specific ones. Correspondingly, the illustration of the issue answer is all the time encapsulated inside the skilled ML mannequin as a substitute of problem-specific mathematical equations as within the principle-driven strategy. The universality of those computational strategies and answer representations supplies a proof as to why learning-based approaches should not preoccupied with solvability assumptions: just by adhering to the computational processes dictated by the chosen machine studying mannequin and its replace guidelines, the issue is inherently solvable.

Given the rigidity of the principle-driven strategy and the flexibleness of the machine studying strategy, an attention-grabbing query arises: Can we reap the advantages of each? Can we make principle versatile or make the black field extra theory-grounded? Can we mix these two kinds of approaches extra organically?

Any strategy to problem-solving could be thought to be computational paths connecting knowledge to options. As mentioned in (Assumption, Mannequin, Computation, Illustration) part, these paths are ruled by the preliminary selection of assumptions. As these decisions (encompassing solvability and prior information) are merely human constructions reasonably than absolute truths, no single computational path inherently surpasses others. The principle-driven approaches and learning-based approaches every represents distinct paths whose variations emerge from our differing picks of assumptions. This attitude affords us the freedom to plan our personal computational path as we see applicable.

Reverting to the duty of integrating principle-based and learning-based approaches, it’s potential to conceive a computational path that attracts upon probably the most fascinating traits of each. This line of thought brings us again to our two kinds of assumptions: solvability and prior information, in addition to the 2 kinds of computations: common and problem-specific. We have now established three key insights from earlier discussions:

First perception: We’d like solvability assumptions for simplifications of the issue in order to make the issue solvable, however some of these assumptions can inject bias into the computational path thus sub-optimality of the options.

Second perception: The universality of the computations in studying based mostly methodology routinely renders the issue solvable, just by adhering to the common replace guidelines of the chosen ML mannequin.

Third perception: Prior information assumptions lend a theoretical grounding to each the computations and their ensuing options. Nevertheless, integrating or representing these assumptions could be difficult within the pure learning-based approaches.

These three insights recommend a compelling technique for Principle Embedded Studying:

1. Start with the unique principle-driven strategy whereas preserving its complete framework, mathematical relationships, problem-specific computations, solvability assumptions and prior information assumptions.

2. Eradicate all solvability assumptions from the beginning, which is able to create gaps within the unique computational path.

3. To fix these gaps, apply machine studying mannequin parametrizations and inject knowledge.

4. With this re-establishment of the unique computational path, the preserved prior information assumptions, mathematical relationships, or the general goal of the issue organically result in the development of loss features for coaching the machine studying fashions that bridge these computational gaps. These gaps are bridged exactly as a result of universality of computations related to the ML replace or coaching guidelines.

The TEL technique outlined above could be additional elucidated by the accompanying illustration in Determine under.

The determine depicts three distinct computational paths: principle-driven, learning-based, and TEL, all connecting knowledge to answer. The principle-driven path displays a extra structured nature because of its inclusion of problem-specific computations and simplification from sturdy assumptions. In distinction, the learning-based strategy depends on common computations, which could be computationally demanding, much less structured and fewer exact in comparison with the computations within the principle-driven strategy.

It is very important observe that within the principle-driven strategy, the computations comprise each problem-specific ones (e.g., derivation of PDE in Black Scholes) and common ones (e.g., PDE solvers). Within the plot, the background represents the dominance of common computations all through, whereas solely alongside the principle-driven computational path do the computations exhibit problem-specific traits. In the direction of the top of the principle-driven path, the computations transition again to being common (e.g., PDE solvers).

The TEL strategy eliminates solvability assumptions whereas retaining all prior information assumptions alongside its computational path. When solvability assumptions are eliminated, the TEL computational path diverges from the structured and problem-specific computations alongside the principle-driven path. As a substitute, it ventures into the common computations represented by the white background. This corresponds to the utilization of ML fashions and its updating guidelines for bridging the computational hole left behind by the removing of solvability assumptions alongside the computational paths.

The plot illustrates that the TEL technique adheres to a minimum-deviation precept. It goals to attenuate deviations from the unique principle-driven computational path and solely deviates when encountering solvability assumptions. Throughout such encounters, the TEL strategy leverages the universality of ML fashions to bypass the hole or take the detour into the white background.

The appliance of this TEL technique in follow varies relying on the precise drawback at hand. As an instance the sensible implementation of the TEL technique, I current three situations on this thesis the place TEL is utilized to choice pricing, optimum management, and combinatorial optimization. By analyzing these examples, it’s anticipated that the TEL technique will change into clearer and extra understandable.

This part presents a non-exhausting dialogue of assorted seminal contributions from the group that align with the ideas underlying TEL. Moreover, it introduces three modern purposes of TEL in distinct domains: choice pricing, optimum management, and combinatorial optimization. These unique purposes, both revealed or below evaluation, exemplify TEL’s versatility and efficacy. They’re manifested within the distinctive methodologies: Arbitrage Free Neural Community, Neural ODE Controller³, and Reinforcement Studying Column Generation⁴.

Physics Knowledgeable Neural Community

Physics Knowledgeable Neural Community or PINN⁵ is an occasion of Principle Embedded Studying (TEL) utilized for fixing Partial Differential Equations. Within the context of fixing PDEs, Physics Knowledgeable Neural Networks (PINN), doesn’t depend on supervised studying to study the mapping between inputs and corresponding answer perform values (that are sometimes obtained utilizing computationally intensive numerical solvers). As a substitute, PINN straight exploits the speculation in the issue — the useful type of the PDE equations, utilizing the identical neural community parameterization of the answer perform to characterize all phrases within the PDE through auto-differentiation instruments. By setting the left-hand facet of the PDE equal to the right-hand facet, we acquire a loss perform that can be utilized to coach the parameters of the identical neural community. That is the TEL technique in motion: the removing of biased solvability compromises similar to linear approximation of the answer through finite distinction (Finite Distinction Methodology) or that the answer could be expanded into totally different foundation features (Spectral Methodology). With the removing of those assumptions, a spot is left within the full PDE fixing computational path, as the unique computations in FDM or SM, specifically the fixing of programs of linear equations, are not relevant. To bridge this hole, PINN straight exploits the preserved mathematical relations in the issue (the PDE itself) to derive a loss perform that may practice the neural community. By adhering to the computational processes dictated by the chosen neural community and its replace guidelines, notably backpropagation, the gaps left by the removing of solvability assumptions are crammed and the computational path is made entire once more.

Empirical Mannequin Studying (EML) & Predictive Optimization

EML & Predictive Optimization⁶⁷⁸ are different situations of Principle Embedded Studying (TEL) utilized for fixing actual world optimization issues. Within the principle-driven approaches, optimization fashions are sometimes crafted with predetermined resolution variables, constraints, and stuck mannequin coefficients, subsequently adopted by the optimization solver’s computations. Such mounted fashions, by their very nature, embed quite a few assumptions that distill real-world intricacies for simplification. Take, for instance, the Automobile Routing Downside (VRP); its Blended-Integer Linear Programming (MILP) formulation makes use of mounted parameters to simplify away the complexities similar to unpredictable highway obstructions or variable visitors circumstances, that are inherently difficult to precise mathematically. These assumptions fall below what’s termed ‘solvability assumptions’ as they’re made only for with the ability to resolve the issue with optimization solvers. In distinction, strategies like Empirical Mannequin Studying and Predictive Optimization, sharing the identical ideas of Principle-Embedded Studying (TEL), purpose to take away these solvability assumptions by numerous diploma. They obtain this by parameterizing sure elements of the optimization mannequin, such because the aims and constraints parameters in Predictive Optimization or extra constraints in Empirical Mannequin Studying within the type of discovered relationships between noticed knowledge and resolution variables. These fashions are then skilled with loss features straight tied to the end-goal optimization aims, facilitating a holistic, end-to-end studying course of. By integrating nuances in uncooked knowledge that beforehand eluded mathematical illustration inside the optimization framework, the standard of the optimization solvers outcomes could be probably improved. This melding of studying based mostly strategy and its replace guidelines ensures that the gaps created by discarding solvability assumptions are adequately bridged, and the computational path is made entire once more.

Automated Implicit Differentiation

Automated Implicit Differentiation⁹ is one other set of strategies that may be considered TEL, which effectively differentiate by iterative solvers (e.g optimization solver, mounted level or equilibrium iteration) by combining implicit differentiation of features with auto-differentiation, and such mixture is enabled by implicit perform theorem¹⁰. The target of differentiation over iterative solvers is to find out the sensitivity of the ultimate answer with respect to the solver’s enter. That is significantly related in eventualities like bi-level optimizations, the place we compute derivatives of a nested optimization drawback to unravel an outer drawback, in addition to in optimizing hyperparameters that govern the iterative solver or computational course of, to enhance the standard of ultimate answer. Within the context of machine studying and significantly deep studying, this sensitivity evaluation is usually achieved by auto-differentiation through backpropagation. It is because the output sometimes has an specific useful relationship with the enter, established by the neural community’s structure, and backpropagation could be carried out effectively by the routinely constructed computational graph.

Nevertheless, the scenario adjustments when coping with iterative solvers, the place the outputs don’t normally have an specific useful relationship with the inputs. As a substitute, they’re computationally linked by the iterative course of. Auto-differentiation strategies can’t be straight utilized right here. In a learning-based strategy to sort out this situation, following the method of auto-diff in neural community, one must manually assemble the computational graph representing every step of the iterative solver. Auto-differentiation can then function on this graph. Nevertheless, creating such a graph shouldn’t be trivial and will lead to a particularly giant and computationally costly graph for backpropagation. In distinction, a principle-driven strategy to differentiation by iterative solvers would necessitate particular person mathematical derivations tailor-made to every solver or algorithm, so as to relate the iterative fixing course of answer to its inputs utilizing optimality or equilibrium circumstances. This methodology can usually be tedious and labor-intensive.

As an exemplification of the Principle-Embedded Studying (TEL) framework, Automated Implicit Differentiation harmonizes the principle-driven and learning-based approaches for efficient differentiation inside iterative solvers. This integration is facilitated by the applying of the Implicit Operate Theorem. Let’s think about the inputs of the iterative solvers as θ and conceive the outputs (or options) as being parameterized by these inputs, therefore z(θ). This attitude permits us to deal with the complete iterative computational operation as a perform that maps inputs to outputs. The situation that the output, denoted as z* should fulfill could be encapsulated by the equation F(θ, z*(θ)) = 0. This equation is rooted in principle-driven methodologies, reflecting the inherent useful components of the issue that the iterative solver goals to resolve — for instance, the KKT circumstances for convex optimization solvers. Notice that for the concept to carry (and likewise for the sake of implementation of it to get sensitivity), F, which represents drawback particular or person outlined optimality, equilibrium or stationary situation, have to be differentiable w.r.t to each z* and θ. In keeping with the Implicit Operate Theorem, the sensitivity of the answer with respect to the inputs could be deduced solely from the by-product features of the perform F on the particular answer level z*.

Thus, with Automated Implicit Differentiation, as soon as we repair the setup for the iterative solvers (a predetermined set of θ), we are able to nonetheless adhere to the inflexible computational course of decided by these solvers to succeed in an answer. Upon reaching that answer, its sensitivity with respect to the solver inputs (solver configuration or another parameterizations that would affect the iterative fixing course of) is decoupled from the intricate computations inside the solver. This separation permits for the environment friendly extraction of sensitivity info, which might then be used to refine the solver setup or its parameterization. On this method, Automated Implicit Differentiation reaps the advantages of the solver’s rigidity and its optimality or equilibrium circumstances, as per the principle-driven strategy. Concurrently, we achieve from the power to swiftly consider sensitivity and replace parameters, very like in learning-based approaches, to enhance answer qualities. Such methodology could be utilized to mounted level iterative solvers¹¹ ¹², optimizations¹³ ¹⁴ ¹⁵, and design machine studying layers with extra structured outputs and constrained incorporation¹⁶ ¹⁷ ¹⁸ and fewer reminiscence demand¹⁹ ²⁰.

Arbitrage Free Neural Community: AFNN

AFNN is an occasion of Principle Embedded Studying (TEL) utilized for choice pricing, which can be my unique analysis. AFNN features equally to PINN, using some recognized bodily legal guidelines (on this case, monetary ideas) for neural community coaching through hand-crafted losses. Within the principle-driven strategy to choice pricing, which incorporates dynamic hedging arguments for deriving the Black Scholes Partial Differential Equation (PDE), the method begins by specifying a steady stochastic differential equation by parameter becoming. Following this, a zero-cost, self-financing portfolio course of is established. Guaranteeing the absence of arbitrage within the portfolio (i.e., the zero-cost, self-financing portfolio worth shouldn’t enhance arbitrarily) leads to the formulation of particular equations that the choice pricing perform should fulfill, culminating within the Black Scholes PDE. AFNN leverages this course of: it begins by representing the choice pricing perform with a neural community. Then, by expressing all components of the principle-driven derivation both by the neural community’s output or its auto-differentiation elements, the no-arbitrage precept is remodeled right into a coaching loss for the community, changing the normal reliance on the Black Scholes PDE.

Put AFNN into the TEL viewpoint as mentioned in Part “Coherence,” there are two units of assumptions made within the above principle-driven strategy for choice pricing: solvability assumptions (underlying follows some Stochastic Differential Equation e.g., Geometric Brownian Movement course of), and prior information assumptions (there shouldn’t be any self-financing, riskless zero-cost, and worthwhile portfolio available in the market). Primarily based on the idea, mannequin, computation, and illustration coherence, totally different attitudes in the direction of these assumptions will lead to totally different computational paths that attain the answer from knowledge, and on this context:

- If contemplating each units of assumptions, we attain the Black Scholes PDE the place we formulate the prior information no-arbitrage assumption below the stochastic calculus framework.

- If lifting each units of assumptions, we attain the pure learning-based strategy, free from all the restrictions of the Black-Scholes mannequin and may straight study from out there real-world knowledge with out having to construct a mannequin first. Nevertheless, this strategy requires a considerable amount of computations and doesn’t think about the no-arbitrage precept.

- If retaining the idea of prior information and modifying the solvability assumption (changing the geometric Brownian movement mannequin with a extra intricate underlying mannequin), the learning-based strategy is attained. Consequently, analytical solvability is forfeited, and as a substitute, the Kolmogorov theorem is employed, whereby fixing partial differential equations (PDEs) is substituted with supervised studying strategies that make the most of options obtained from stochastic simulations at numerous factors.

- If lifting all solvability assumptions and protecting all prior information assumptions as recommended by TEL technique, we attain the AFNN described on this part.

AFNN methodology learns the no-arbitrage choice value derived from any underlying asset course of in a data-driven approach. By incorporating principle straight into the educational course of through the Principle Embedded Studying (TEL) technique, AFNN is able to studying the risk-neutral value in a model-free method. As such, it capitalizes on some great benefits of each data-driven studying and theory-informed principle-driven approaches for choice pricing.

Neural ODE Controller: NOC

NOC is an occasion of Principle Embedded Studying (TEL) utilized for Optimum Management, which can be my unique analysis. The principle-driven strategy (the Most Precept) begins implicitly with modeling the system dynamics with a set of differential equations (e.g., the by-product of x(t) equals f(x(t), u(x,t), t)), then the optimum management drawback could be formulated right into a constrained optimization drawback over features. With reformulation utilizing Lagrange multiplier and Hamiltonian, the mandatory optimality circumstances of that optimization drawback result in a system of differential equations that the optimum trajectories and management features ought to fulfill. NOC straight parameterizes the system dynamics with neural ODE (known as dynamics learner), whereas parameterizing the management perform with one other neural community (known as controller). On this approach, the complete optimization drawback within the Most Precept, its needed optimality circumstances, and the ensuing differential equations change into neural community coaching, because the management goal could be expressed in a data-driven approach with trajectories roll out utilizing the neural ODE system.

Put NOC into the TEL viewpoint as mentioned in Part “Coherence,” there are two units of assumptions made within the principle-driven strategy for optimum management: solvability assumptions (underlying system observe the mounted differential equations, first-order needed situation suffice for international optimality), and prior information assumptions (state is being managed and evolving inside a steady vector discipline). Primarily based on the idea, mannequin, computation, and illustration coherence, totally different attitudes in the direction of these assumptions will lead to totally different computational paths that attain the answer from knowledge, and on this context:

- If contemplating each units of assumptions, we attain the principle-driven strategy (the Most Precept) the place we resolve the trajectory (useful) optimization of state and management and derive first order optimality circumstances that management perform must fulfill.

- If lifting each units of assumptions, we attain the Reinforcement Studying strategy for fixing the optimum management drawback. With model-free RL, as a substitute of making an attempt to suit the dynamics with differentiable equations and optimizing controls, this dynamics modeling collapses into the management optimization through using reward features, and now it’s the reward perform that must be calibrated or fitted throughout totally different observations (state house). With model-based RL, the continual vector discipline is discretized and fitted through easy supervised studying course of to foretell the subsequent state given earlier state and motion, and the fitted subsequent state predictor is used to hurry up management optimization inside the discrete MDP framework.

- If protecting the prior information assumptions and solely lifting the second solvability assumptions concerning the optimality situation, we cannot derive analytically the optimality circumstances for management perform anymore, due to this fact we have to do parameterization and optimize the controller straight all below the mounted differentiable equations in our solvability assumptions, and we attain the strategy of AI Pontryagin²¹.

- If lifting all solvability assumptions and protecting all prior information assumptions as recommended by TEL technique, we attain the NOC described on this part.

NOC naturally arises from the optimization process within the Most Precept derivation. By skipping the step of representing system dynamics with differential equations, NOC strikes away from the first-order optimality circumstances and solves the issue with differentiable loss minimization in a data-driven approach, thus benefiting from each principle-driven strategy and learning-based strategy. NOC consists of a coupled neural ODE for controlling dynamical programs with unknown dynamics. That is achieved through an alternate coaching between two neural networks in our NOC mannequin: the dynamics learner and the controller. By means of an intriguing interaction and mutual supervision between these two neural networks, the mannequin learns the optimum management in addition to the system dynamics.

Reinforcement Studying for Column Technology: RLCG

RLCG is an occasion of Principle Embedded Studying (TEL) utilized for Combinatorial Optimization Issues with an exponential variety of resolution variables. Within the principle-driven strategy for fixing large-scale optimization with an exponential variety of resolution variables (e.g., Column Technology), which is an iterative fixing means of sub-optimization issues decomposed from the unique optimization drawback, a column or resolution variable with probably the most destructive diminished value is all the time added transferring ahead to the subsequent iteration within the iterative fixing course of. RLCG replaces this mounted column choice rule with a discovered rule from a reinforcement studying agent and formulates the complete fixing course of right into a sequential decision-making drawback.

Put RLCG into the TEL viewpoint as mentioned in Part “Coherence,” there are two units of assumptions made within the principle-driven strategy: solvability assumptions (all the time add probably the most destructive cut back value column at every iteration because the optimum column choice), and prior information assumptions (the issue into account could be solved utilizing CG algorithm; CG algorithm follows a sequential decision-making process). Primarily based on the idea, mannequin, computation, and illustration coherence, totally different attitudes in the direction of these assumptions will lead to totally different computational paths that attain the answer from knowledge, and on this context:

- If contemplating all these two units of assumptions besides from the “CG algorithm follows a sequential decision-making process” prior information assumption, we attain the principle-driven strategy (the Column Technology algorithm) the place we resolve RMP-SP iteratively and add columns with probably the most destructive diminished value at every iteration.

- If eliminating all of the solvability and prior information assumptions, we arrive at a pure one-shot imitation studying strategy utilizing machine studying. Nevertheless, such a technique doesn’t at present exist. The absence of this purely ML-based imitation strategy truly validates the TEL technique and its minimal deviations from principle-driven precept. It highlights the significance of leveraging prior information and constructions to their fullest extent.

- If eradicating the solvability assumption and the prior information assumption that the “CG algorithm follows a sequential decision-making process,” we arrive on the imitation studying strategy for Column Generation²².

- If lifting all solvability assumptions and protecting all prior information assumptions as recommended by TEL technique, we attain RLCG as described on this part.

RLCG integrates the principle-driven strategy of fixing combinatorial optimization issues by Column Technology with Reinforcement Studying. On this setup, the first focus of the educational algorithm is to speed up the convergence of the Column Technology, whereas concurrently leveraging the structured and rigorous means of Column Technology to sort out the combinatorial nature of the optimization drawback.

Assumptions underpin the formulation of fashions, which in flip each facilitate and limit computations. These computations, carried out inside the bounds of the mannequin or computational framework, converge to provide an answer with a illustration suitable with the governing framework. This course of yields a wide range of computational paths bridging knowledge to options.

“All fashions are flawed, some are helpful.”

This premise permits us to return to the origin of the computational path and choose assumptions we deem appropriate, thereby modifying the ensuing fashions, subsequent computations, and answer representations. By deciding on totally different assumptions, we achieve the freedom to assemble totally different computational paths or problem-solving strategies.

My thesis proposes a technique termed Principle Embedded Studying (TEL) for growing computational paths inside the assumptions, fashions, computations, and illustration coherence. The idea of TEL is easy: it strives for minimal deviation from the established principle-driven strategy historically used to unravel the issue at hand. Bearing this in thoughts, TEL relies upon upon everything of the prevailing principle-driven strategy’s framework, discarding solely the solvability assumptions and their related elements inside that framework. These solvability assumptions and elements are rendered pointless as a result of deployment of ML common computations. Subsequently, TEL is ready to forge a computational path that deviates minimally from the unique structured principle-driven computational trajectory. Thus, TEL strategies exhibit each flexibility and rigidity.

My thesis work explores three unique situations of TEL strategies for various drawback context: by-product princing, optimum management and combinatorial optimization. The three precept pushed approaches that act because the beginning factors for TEL minimal deviations are: Black Scholes dynamic hedging arguments, Most precept and Column Technology. And in consequence, we have now three unique strategies: Arbitrage Free Neural Community (AFNN), Neural Optimum Management (NOC) and Reinforcement Studying for Column Technology (RLCG).

AFNN: The principle-driven strategy of Black Scholes dynamic hedging arguments for choice pricing necessitates the idea of an underlying asset value course of for problem-solving inside the stochastic calculus framework or mannequin. AFNN alleviates this solvability assumption, parameterizes the pricing perform, and builds the loss perform based mostly on the dynamic hedging argument and no-arbitrage precept inherent within the principle-driven strategy. On this TEL methodology, deviations from the principle-driven computational path stem from the modelling of the processes concerned (GBM mannequin in Black Scholes, real-world knowledge in AFNN), leading to divergent pricing answer representations (PDE circumstances in Black Scholes, neural community in AFNN) and subsequent computations (structured derivation and PDE fixing in Black Scholes, differentiable loss optimization in AFNN).

NOC: The principle-driven strategy for optimum management (most precept) presupposes that the managed dynamical system abides by mounted differential equations to unravel the issue inside the variational calculus framework. NOC lifts this solvability assumption, extends past the first-order needed optimality situation with differentiable optimization, parameterizes the dynamical perform and the management perform, and constructs the loss perform utilizing the identical useful optimization current within the Most Precept. On this TEL methodology, the lifting of solvability assumptions results in deviations from the principle-driven computational paths within the modelling a part of system dynamics (mounted differential equations in Most Precept, Neural ODE in NOC). This deviation provides rise to totally different answer optimum management representations (first order needed circumstances within the type of differentiable equations in Most Precept, neural community in NOC) and subsequent computations (first order optimality situation derivation resulting in a set of differentiable equations and the fixing of these equations in Most Precept, differentiable optimization in NOC).

RLCG: The principle-driven strategy for resolving large-scale combinatorial optimization issues (Column Technology) is underpinned by the sub-optimal selection that probably the most destructive diminished value column at every iteration results in the quickest convergence, which permits subsequent fixing iterations. RLCG lifts the idea made in CG column choice step concerning probably the most destructive cut back value column being the optimum column so as to add, parameterizes the column choice rule, and constructs the loss perform by formulating the complete CG course of as a sequential decision-making drawback.

Ultimately, I need to make some normal ideas when it comes to the right way to discover solvability assumptions inside present precept pushed approaches so as to apply TEL technique:

Firstly, search out heuristics. They are often seen as probably the most conspicuous solvability assumptions, ceaselessly employed to speed up and simplify computational processes. Since they normally lack an optimality assure, they’re perceived as computational compromises — or in different phrases, solvability assumptions. We make the most of this strategy in RLCG, and it straight lifts the grasping heuristics for column choice and employs an ML mannequin (Reinforcement Studying) to fix this hole within the unique CG computational path.

Secondly, begin from the start. Delve into the inception of the principle-driven strategy, as solvability assumptions are sometimes made at this stage to border the problem-solving course of inside a selected mathematical framework. This enables us to start out formulating any optimality or equilibrium circumstances (the ultimate mathematical relationships that the answer should adhere to) inside that framework. Exchange these assumptions and their constituents with ML fashions, and navigate in the direction of the top: make the most of these ML fashions in accordance with how the unique elements of the eliminated solvability assumptions have been used within the unique principle-driven strategy, and subsequently assemble the loss accordingly on the conclusion of the unique derivation. We make the most of this strategy in NOC. The mounted differential equations used within the Most Precept on the outset allow the derivation of the first-order needed optimality situation for the management perform inside the variational calculus framework. NOC substitutes this mounted differential equation straight with a Neural ODE.

Thirdly, give attention to the conclusion. Instantly examine the ultimate mathematical relationships that the answer must adjust to. Establish which elements of those mathematical relationships could be changed with ML fashions, and work your approach backwards: we validate our collection of elements to get replaced by referring to the earlier derivations. If any of the elements seem as a consequence of preliminary solvability assumptions, we are able to certainly change them with ML fashions. This strategy is utilized in AFNN, the place the ultimate mathematical equations (portfolio worth doesn’t develop) evokes the development of coaching loss for pricing perform parameterization, and thus the general AFNN algorithm.

Following TEL methods, principle could be naturally mixed with studying, and the computations enabled in studying course of are all the time guided by rigorous ideas. Therfore, TEL advantages from the flexibleness of learning-based approaches and the rigidity of principle-driven approaches, with none pointless assumptions made for solvability.

[1] Black, Fischer and Scholes, Myron. “The Pricing of Choices and Company Liabilities.” Journal of Political Financial system, vol. 81, no. 3, pp. 637–654, 1973, JSTOR.

[2] Toth, P. and Vigo, D. “The Automobile Routing Downside.” Monographs on Discrete Arithmetic and Purposes, vol. 9, Society for Industrial and Utilized Arithmetic, Philadelphia, 2002, ISBN: 0–89871–579–2.

[3] Chi, Cheng. “NODEC: Neural ODE For Optimum Management of Unknown Dynamical Programs.” arXiv preprint arXiv:2401.01836, 2024.

[4] Chi, Cheng; Aboussalah, Amine Mohamed; Khalil, Elias Boutros; Wang, Juyoung; Sherkat-Masoumi, Zoha. “A Deep Reinforcement Studying Framework for Column Technology.” 2022.

[5] Raissi, Maziar; Perdikaris, Paris; Karniadakis, George E. “Physics-Knowledgeable Neural Networks: A Deep Studying Framework for Fixing Ahead and Inverse Issues Involving Nonlinear Partial Differential Equations.” Journal of Computational Physics, vol. 378, pp. 686–707, 2019.

[6] Kotary, James; Fioretto, Ferdinando; Van Hentenryck, Pascal; Wilder, Bryan. “Finish-to-Finish Constrained Optimization Studying: A Survey.” 2023. Accessible at arXiv:2103.16378.

[7] Tang, Bo; Khalil, Elias B. “PyEPO: A PyTorch-based Finish-to-Finish Predict-then-Optimize Library for Linear and Integer Programming.” 2022. DOI: 10.48550/arXiv.2206.14234.

[8] Lombardi, Michele; Milano, Michela. “Boosting Combinatorial Downside Modeling with Machine Studying.” In Proceedings of IJCAI, pp. 5472–5478, 2018.

[9] Blondel, Mathieu; Berthet, Quentin; Cuturi, Marco; Frostig, Roy; Hoyer, Stephan; Llinares-Lopez, Felipe; Pedregosa, Fabian; Vert, Jean-Philippe. “Environment friendly and Modular Implicit Differentiation.” In Advances in Neural Info Processing Programs, vol. 35, pp. 5230–5242, 2022.

[10] Krantz, Steven; Parks, Harold. “The Implicit Operate Theorem.” Trendy Birkhäuser Classics, Birkhäuser, 2003, ISBN: 0–8176–4285–4.

[11] Bai, Shaojie; Koltun, Vladlen; Kolter, J. Zico. “Neural Deep Equilibrium Solvers.” ICLR 2022 Poster.

[12] Jeon, Younghan; Lee, Minsik; Choi, Jin Younger. “Differentiable Ahead and Backward Fastened-Level Iteration Layers.” Division of Electrical and Pc Engineering, ASRI, Seoul Nationwide College; Division of Electrical Engineering, Hanyang College.

[13] Bertrand, Quentin; Klopfenstein, Quentin; Massias, Mathurin; Blondel, Mathieu; Vaiter, Samuel; Gramfort, Alexandre; Salmon, Joseph. “Implicit Differentiation for Quick Hyperparameter Choice in Non-Easy Convex Studying.” The Journal of Machine Studying Analysis, vol. 23, no. 1, pp. 6680–6722.

[14] Lai, Zeqiang; Wei, Kaixuan; Fu, Ying; Härte, Philipp; Heide, Felix. “nabla-Prox: Differentiable Proximal Algorithm Modeling for Giant-Scale Optimization.”

[15] Ramzi, Z.; Mannel, F.; Bai, S.; Starck, J.-L.; Ciuciu, P.; Moreau, T. “Shine: Sharing the Inverse Estimate from the Ahead Move for Bi-Stage Optimization and Implicit Fashions.” arXiv preprint arXiv:2106.00553, 2021.

[16] Amos, Brandon; Kolter, Zico. “OptNet: Differentiable Optimization as a Layer in Neural Networks.” Proceedings of the Worldwide Convention on Machine Studying (ICML), 2017.

[17] Djolonga, Josip; Krause, Andreas. “Differentiable Studying of Submodular Fashions.” Advances in Neural Info Processing Programs 30 (NIPS 2017), 2017.

[18] Kim, Y.; Denton, C.; Hoang, L.; Rush, A. M. “Structured Consideration Networks.” arXiv preprint arXiv:1702.00887, 2017.

[19] Kolter, Zico; Duvenaud, David; Johnson, Matt. “Deep Implicit Layers — Neural ODEs, Deep Equilibrium Fashions, and Past.” NeurIPS 2020 tutorial.

[20] El Ghaoui, L.; Gu, F.; Travacca, B.; Askari, A.; Tsai, A. Y. “Implicit Deep Studying.” arXiv preprint arXiv:1908.06315, vol. 2, 2019.

[21] Böttcher, Lucas; Antulov-Fantulin, Nino; Asikis, Thomas. “AI Pontryagin or How Synthetic Neural Networks Study to Management Dynamical Programs.” Nature Communications, vol. 13, no. 1, pp. 333, 2022, Nature Publishing Group. DOI: 10.1038/s41467–021–27590–0.

[22] Morabit, Mouad; Desaulniers, Man; Lodi, Andrea. “Machine-Studying-Primarily based Column Choice for Column Technology.” Transportation Science, vol. 55, no. 4, pp. 815–831, 2021, INFORMS.