Meta has shared its research on an AI model that can decode speech from noninvasive recordings of brain activity. The work could eventually help people who, after a traumatic brain injury, are unable to communicate through speech, typing, or gestures.

Decoding speech from brain activity has been a long-standing goal of neuroscientists and clinicians, but most of the progress so far has relied on invasive brain-recording techniques, such as stereotactic electroencephalography and electrocorticography.

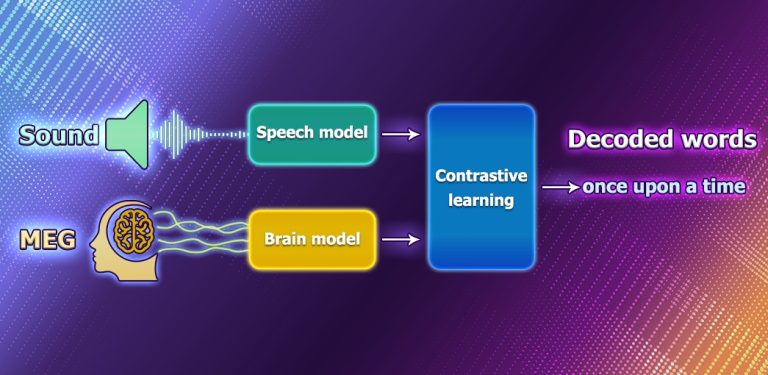

Meanwhile, researchers at Meta believe that decoding speech with noninvasive methods would provide a safer, more scalable solution that could eventually benefit more people. To that end, they built a deep learning model trained with contrastive learning and used it to align noninvasive brain recordings with speech sounds.
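To make "contrastive learning" more concrete, here is a minimal NumPy sketch of a generic InfoNCE-style contrastive loss, a common form of this objective. It assumes each brain-recording embedding in a batch has exactly one matching speech embedding (the same row index) and treats every other row as a negative. The function name and the `temperature` value are illustrative assumptions, not details taken from Meta's work.

```python
import numpy as np

def contrastive_loss(brain_emb, speech_emb, temperature=0.1):
    """InfoNCE-style loss (illustrative): row i of brain_emb should match row i of speech_emb."""
    # L2-normalise so that dot products become cosine similarities
    b = brain_emb / np.linalg.norm(brain_emb, axis=1, keepdims=True)
    s = speech_emb / np.linalg.norm(speech_emb, axis=1, keepdims=True)
    # (batch, batch) matrix: similarity of every brain snippet to every speech segment
    logits = b @ s.T / temperature
    # numerically stable log-softmax over the candidate speech segments in each row
    logits = logits - logits.max(axis=1, keepdims=True)
    log_probs = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    # the correct (matching) pair sits on the diagonal; minimise its negative log-probability
    return float(-np.mean(np.diag(log_probs)))

# Usage with synthetic data: well-aligned pairs should give a lower loss than mismatched ones.
rng = np.random.default_rng(0)
speech = rng.normal(size=(8, 16))
aligned_brain = speech + 0.1 * rng.normal(size=speech.shape)
print(contrastive_loss(aligned_brain, speech))
```

Minimising this loss pulls each brain embedding toward the speech segment that was actually heard and pushes it away from the other segments in the batch.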

To do this, the scientists used wav2vec 2.0, an open-source, self-supervised learning model, to identify the complex representations of speech in the brains of volunteers while they listened to audiobooks.

In this approach, electroencephalography (EEG) and magnetoencephalography (MEG) recordings are fed into a “brain” model, which consists of a standard deep convolutional network with residual connections. The architecture then learns to align the output of this brain model with the deep representations of the speech sounds that were presented to the participants.
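The "brain" model described above can be illustrated with a toy forward pass: a 1D convolution across time, a stack of residual convolutional blocks, and a final projection to the size of the speech representation. This is a hypothetical sketch in plain NumPy with random, untrained weights; the sensor count, channel widths, kernel size, ReLU activation, and the output size `d_speech` are all assumptions, not Meta's actual architecture.

```python
import numpy as np

def conv1d_same(x, w):
    """Zero-padded 'same' 1D convolution (cross-correlation).
    x: (c_in, T) signal, w: (c_out, c_in, k) kernel with odd k -> (c_out, T)."""
    c_out, c_in, k = w.shape
    pad = k // 2
    xp = np.pad(x, ((0, 0), (pad, pad)))
    T = x.shape[1]
    out = np.zeros((c_out, T))
    for t in range(T):
        # weight a window of k time steps, centred on t, across all input channels
        out[:, t] = np.tensordot(w, xp[:, t:t + k], axes=([1, 2], [0, 1]))
    return out

def residual_block(x, w):
    """Conv + ReLU, then add the input back (the residual/skip connection).
    w must map c channels to c channels so the shapes match."""
    return x + np.maximum(conv1d_same(x, w), 0.0)

def brain_model(recording, w_in, blocks, w_out):
    """Hypothetical brain model: (n_sensors, T) EEG/MEG snippet -> (d_speech, T) features."""
    h = conv1d_same(recording, w_in)        # map sensors to hidden channels
    for w in blocks:
        h = residual_block(h, w)            # stacked residual convolutional blocks
    return np.einsum("dh,ht->dt", w_out, h)  # 1x1 projection to the speech-feature size

# Usage: 20 hypothetical sensors, 50 time steps, 32 hidden channels, d_speech = 16.
rng = np.random.default_rng(0)
recording = rng.normal(size=(20, 50))
w_in = 0.1 * rng.normal(size=(32, 20, 3))
blocks = [0.1 * rng.normal(size=(32, 32, 3)) for _ in range(2)]
w_out = 0.1 * rng.normal(size=(16, 32))
print(brain_model(recording, w_in, blocks, w_out).shape)
```

In a trained system, the weights would be learned so that these outputs line up with the speech representations of the audio the participant heard.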

After training, the system performs what is known as zero-shot classification: given a snippet of brain activity, it can determine which clip, from a large pool of new audio files, the person actually heard.
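Zero-shot classification of this kind can be framed as retrieval: embed the brain snippet, embed every candidate audio clip, and rank the candidates by similarity. The sketch below assumes both embeddings already live in a shared space and uses cosine similarity; the function names, the top-k accuracy metric, and the synthetic data are illustrative assumptions rather than Meta's evaluation code.

```python
import numpy as np

def rank_candidates(brain_emb, candidate_embs):
    """Return candidate indices ordered from most to least cosine-similar to brain_emb."""
    b = brain_emb / np.linalg.norm(brain_emb)
    c = candidate_embs / np.linalg.norm(candidate_embs, axis=1, keepdims=True)
    scores = c @ b                # one similarity score per candidate clip
    return np.argsort(-scores)    # highest score first

def top_k_accuracy(brain_embs, candidate_embs, true_idx, k=10):
    """Fraction of brain snippets whose true clip appears among the k best-ranked candidates."""
    hits = [true_idx[i] in rank_candidates(b, candidate_embs)[:k]
            for i, b in enumerate(brain_embs)]
    return float(np.mean(hits))

# Usage with synthetic embeddings: 1,000 unseen candidate clips, 50 brain snippets.
rng = np.random.default_rng(0)
candidates = rng.normal(size=(1000, 32))
true_idx = rng.choice(1000, size=50, replace=False)
brain = candidates[true_idx] + 0.1 * rng.normal(size=(50, 32))
print(top_k_accuracy(brain, candidates, true_idx, k=10))
```

Because the candidates are simply compared in the shared embedding space, the pool can contain clips the model never saw during training, which is what makes the classification "zero-shot."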

According to Meta: “The results of our research are encouraging because they show that self-supervised trained AI can successfully decode perceived speech from noninvasive recordings of brain activity, despite the noise and variability inherent in those data. These results are only a first step, however. In this work, we focused on decoding speech perception, but the ultimate goal of enabling patient communication will require extending this work to speech production. This line of research could even reach beyond assisting patients to potentially include enabling new ways of interacting with computers.”

Learn more about the research here.