Understanding our journey from logistic regression through MLP, CNN, RNN, LSTM, Transformer, LLM to AGI (sometime, perhaps)

In the last part we saw how we can capture the pattern between input and output using mathematical equations, and we saw the limitations of that approach with unstructured data. Here we will see how neural networks can adjust their matrix weights during training to capture the patterns in unstructured data and predict the next output with high accuracy. We will also see how we can capture the spatial patterns of images by performing a mathematical operation called convolution on the matrices of a deep neural network, our first real taste of working with unstructured data. We will see how connecting the output back to the input and handling sequences in time steps helps create ‘memory’, which can be used to capture sequential patterns like text. This memory suffers from vanishing and exploding gradients, but we will see how LSTMs overcome them by making the memory selective, allowing the network to forget things that aren’t important and so solving the problems faced by RNNs.

So far we have looked at traditional machine learning: how it is possible to model the pattern between input and output data, and then use historical data for training and parameter optimisation to accurately predict the next output. We have looked at different kinds of output (continuous, binary and categorical), but our data was always structured.

How do you deal with data that is unstructured? Sure, you can capture the essence of images, videos and audio files by encoding them as 1s and 0s, but knowing that timestamp ‘t’ has frequency ‘f’ will not help us identify whether it’s a Taylor Swift song. Traditional machine learning algorithms rely on each input feature being informative on its own, not on how it relates to its neighbours in space or time. Dealing with this kind of data requires a completely new approach: Deep Learning!

A Basic Neural Network: Deep learning is the process of creating and training neural networks to perform a specific task. A Multi-Layer Perceptron (MLP) is the most basic form of a neural network. Let’s break it down into its components, starting with the multiple linear regression we saw earlier, whose predicted output is:

$$\hat{y} = w_1x_1 + w_2x_2 + \dots + w_nx_n$$

Representing it in matrix form, with the weights and the inputs stacked into column vectors W and X:

$$\hat{y} = W^{T}X$$

If we add a bias b so that our output can be non-zero when all inputs are 0, the above equation can be represented like this:

$$\hat{y} = W^{T}X + b$$

which is also the representation of a single layer of the Multi-Layer Perceptron. As you can imagine, it’s multiple linear regression at heart and can only predict linear patterns. For it to work with all kinds of patterns, we have to make some changes, the first of which is transforming the output to fit the pattern we want. To do this we pass the output through an activation function suited to that pattern: for binary classification we use a sigmoid, for multiclass classification a softmax, and in the hidden layers (for example when extracting patterns in images) we typically use a ReLU.
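To make this concrete, here is a minimal NumPy sketch of a single linear unit, ŷ = WᵀX + b, passed through the three activation functions mentioned above. The weights, inputs and bias are made-up illustrative values, not anything from a trained model.

```python
import numpy as np

def sigmoid(z):
    # Squashes any real number into (0, 1): suits binary classification
    return 1.0 / (1.0 + np.exp(-z))

def softmax(z):
    # Turns a vector of scores into probabilities that sum to 1: suits multiclass
    exp = np.exp(z - np.max(z))  # subtracting the max avoids overflow
    return exp / exp.sum()

def relu(z):
    # Keeps positive values and sets negative values to 0
    return np.maximum(0.0, z)

# A single linear unit, y_hat = W^T X + b, with made-up values
W = np.array([0.5, -1.0, 2.0])
X = np.array([1.0, 2.0, 0.5])
b = 0.1
z = W @ X + b  # 0.5 - 2.0 + 1.0 + 0.1 = -0.4

print(sigmoid(z))                          # about 0.401
print(relu(z))                             # 0.0
print(softmax(np.array([2.0, 1.0, 0.1])))  # the largest score gets the largest probability
```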

How about capturing different levels of complexity? Will the basic form of a neural network with different activation functions be enough?

Researchers have noticed that when we add what we call ‘hidden layers’ between the input and output and start training, the neural network is able to represent increasingly complex aspects of our input, purely by combining lower-level features from the previous layer. To give an example: if we had 3 hidden layers and trained the algorithm to identify a smiling face, one layer might capture the presence of the edges of the face, the next hidden layer the presence of cheeks, and the final layer would activate in the presence of a smile, the sophistication increasing with each subsequent hidden layer. So stacking hidden layers allowed the neural network to build complexity. In fact, one of the original ResNet models, ResNet-152, had 152 layers in total!
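The sketch below stacks two hidden layers, so each layer’s output becomes the next layer’s input. It assumes NumPy, and the layer sizes are arbitrary and chosen purely for illustration. The ReLU between layers matters, because without a non-linearity the stacked layers would collapse into a single linear map. The weights are random, so this network has not learned anything yet.

```python
import numpy as np

rng = np.random.default_rng(0)

def relu(z):
    return np.maximum(0.0, z)

def dense(n_in, n_out):
    # Random initial weights and zero biases for one fully connected layer
    return rng.normal(0.0, 0.1, size=(n_in, n_out)), np.zeros(n_out)

# Hypothetical sizes: 4 input features, two hidden layers of 8 units, 1 output
layers = [dense(4, 8), dense(8, 8), dense(8, 1)]

def forward(x):
    h = x
    for i, (W, b) in enumerate(layers):
        z = h @ W + b
        # ReLU in the hidden layers; sigmoid on the last layer for a binary output
        h = relu(z) if i < len(layers) - 1 else 1.0 / (1.0 + np.exp(-z))
    return h

batch = rng.normal(size=(5, 4))  # 5 examples with 4 features each
print(forward(batch).shape)      # (5, 1): one probability per example
```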

Training a Neural Network: So how do we train such a network? We need two things: a loss function and an optimiser. The loss function works exactly as it did with traditional machine learning. Starting with random weights for the parameters of the neural network, we predict an output and compare it against the actual output (i.e. compute the loss function). We then adjust the parameters, working backwards from the output, so that the prediction is corrected. To be exact, we update each weight based on the gradient of the loss function with respect to that weight, which is computed by backpropagation. As neural networks involve matrix multiplication, the maths can get a bit tedious, but the process largely stays the same.
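Here is a minimal sketch of that loop of predicting, comparing and adjusting. It trains a single sigmoid neuron with plain gradient descent on a toy, hypothetical dataset (logical OR). With one layer the backward pass is a single step. In deeper networks, backpropagation applies the chain rule layer by layer, but the weight update has the same form.

```python
import numpy as np

# Toy data (hypothetical): logical OR of two binary inputs
X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
y = np.array([0, 1, 1, 1], dtype=float)

rng = np.random.default_rng(42)
w = rng.normal(size=2)  # start from random weights
b = 0.0
lr = 0.5                # learning rate

for step in range(2000):
    p = 1.0 / (1.0 + np.exp(-(X @ w + b)))  # 1. predict
    error = p - y                           # 2. compare with the actual output
    # 3. gradient of the mean binary cross-entropy w.r.t. w and b
    grad_w = X.T @ error / len(y)
    grad_b = error.mean()
    w -= lr * grad_w                        # 4. step against the gradient
    b -= lr * grad_b

predictions = (1.0 / (1.0 + np.exp(-(X @ w + b))) > 0.5).astype(int)
print(predictions)  # [0 1 1 1]
```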

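The loss function we use to compare the predicted output with the actual output depends on the type of output. For regression problems, we use the mean squared error loss:

$$\text{MSE} = \frac{1}{n}\sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)^2$$

for classification problems with multiple classes, we use the categorical cross-entropy loss function:

$$\text{CCE} = -\frac{1}{n}\sum_{i=1}^{n}\sum_{c=1}^{C} y_{i,c}\log\left(\hat{y}_{i,c}\right)$$

and for binary classification we use the binary cross-entropy:

$$\text{BCE} = -\frac{1}{n}\sum_{i=1}^{n}\left[y_i\log\left(\hat{y}_i\right) + \left(1 - y_i\right)\log\left(1 - \hat{y}_i\right)\right]$$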
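The three losses translate directly into code. This is a NumPy sketch. The `eps` clipping is an assumption added here to avoid taking log(0). Deep learning libraries guard against log(0) internally in a similar way.

```python
import numpy as np

def mse(y_true, y_pred):
    return np.mean((y_true - y_pred) ** 2)

def categorical_cross_entropy(y_true, y_pred, eps=1e-12):
    # y_true: one-hot rows; y_pred: predicted probabilities (e.g. softmax outputs)
    y_pred = np.clip(y_pred, eps, 1.0)
    return -np.mean(np.sum(y_true * np.log(y_pred), axis=1))

def binary_cross_entropy(y_true, y_pred, eps=1e-12):
    y_pred = np.clip(y_pred, eps, 1.0 - eps)
    return -np.mean(y_true * np.log(y_pred) + (1 - y_true) * np.log(1 - y_pred))

print(mse(np.array([3.0, 5.0]), np.array([2.0, 5.0])))        # 0.5
print(categorical_cross_entropy(np.array([[0, 1, 0]]),
                                np.array([[0.2, 0.5, 0.3]])))  # -ln(0.5), about 0.693
print(binary_cross_entropy(np.array([1.0, 0.0]),
                           np.array([0.9, 0.1])))              # -ln(0.9), about 0.105
```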

When it comes to the optimiser algorithm, its primary role is to fine-tune the parameters during training, with the goal of minimising the loss function we saw earlier. We have already seen one such optimiser algorithm, Gradient Descent, which updates the weights based on the learning rate and the first-order derivative of the loss. There are many other optimiser algorithms, the most popular of which is Adam (Adaptive Moment Estimation). Gradient descent uses a single learning rate for all parameters throughout training. The Adam optimiser instead dynamically computes an individual learning rate for each parameter, based on running averages of past gradients and of their uncentered variance (the squared gradients). This adaptive learning rate lets it navigate the optimisation landscape efficiently during training, often leading to faster convergence.
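To see the difference, the sketch below minimises a toy quadratic loss with plain gradient descent and with a hand-written version of the Adam update rule. The quadratic is a hypothetical stand-in for a real network’s loss. The Adam settings are the commonly quoted defaults beta1 = 0.9, beta2 = 0.999 and eps = 1e-8. The code illustrates the update equations and is not a replacement for a library optimiser.

```python
import numpy as np

target = np.array([3.0, -1.0])  # hypothetical optimum of the toy loss

def grad(w):
    # Gradient of the toy loss f(w) = sum((w - target) ** 2)
    return 2.0 * (w - target)

def gradient_descent(w, lr=0.1, steps=200):
    for _ in range(steps):
        w = w - lr * grad(w)  # the same learning rate for every parameter
    return w

def adam(w, lr=0.1, beta1=0.9, beta2=0.999, eps=1e-8, steps=200):
    m = np.zeros_like(w)  # running average of gradients (first moment)
    v = np.zeros_like(w)  # running average of squared gradients (uncentered variance)
    for t in range(1, steps + 1):
        g = grad(w)
        m = beta1 * m + (1 - beta1) * g
        v = beta2 * v + (1 - beta2) * g ** 2
        m_hat = m / (1 - beta1 ** t)  # correct the bias from starting m at zero
        v_hat = v / (1 - beta2 ** t)  # same correction for v
        # Dividing by sqrt(v_hat) gives each parameter its own effective step size
        w = w - lr * m_hat / (np.sqrt(v_hat) + eps)
    return w

start = np.zeros(2)
print(gradient_descent(start))  # close to [3, -1]
print(adam(start))              # also close to [3, -1]
```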

To summarise things so far: throughout this entire training process, the network decides how it wants to arrange its weights, guided only by its drive to minimise the error in its predictions. The result is a set of parameters that is very good at capturing patterns in data of any kind and complexity. To truly understand neural networks, I’d highly recommend watching the very visual explainer by 3Blue1Brown: https://www.youtube.com/watch?v=aircAruvnKk, along with the three videos he made after it covering backpropagation. I don’t think I’m even remotely doing it justice by trying to explain it in text.

What we have built so far is a basic neural network that is well suited to many advanced tasks in a way that traditional machine learning algorithms weren’t, like telling apart the image of an animal from that of a ship.

Impressive as it might be, this basic configuration is not very accurate in its predictions. The primary reason is that its architecture still ‘flattens’ the input images into a single vector, because the hidden layers after the input require a flat array rather than a multi-dimensional one. This operation effectively throws away the spatial structure (which pixels are neighbours of which) that a spatially aware architecture could have learned from. To really understand this, imagine the pixels of an image laid out in a single array: would you be able to understand its content? Obviously not! And if we put them back in a multi-dimensional array? We see things clearly. So what we want is for our network to capture this spatial awareness and not flatten the image pixels!

Also, suppose you train a basic neural network on images of even modest resolution, say 32x32x3, with three hidden layers of 128 neurons each. The first hidden layer alone needs 3,072 × 128 = 393,216 weights, and the whole network needs roughly 430,000 parameters (assuming a 10-class output layer). That count grows with every pixel: for a 224x224x3 image, the first layer alone would need over 19 million weights. Not only would training this many parameters be computationally expensive, but the model would also be prone to overfitting. It could memorise the training data as is and produce near-perfect scores in training while doing poorly on new images!
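The arithmetic is easy to check. This sketch counts weights plus biases for fully connected layers. The 10-class output layer is an assumption for illustration.

```python
def dense_params(n_in, n_out):
    # Each of the n_out neurons has one weight per input plus one bias
    return n_in * n_out + n_out

def mlp_params(input_shape, hidden_sizes, n_outputs):
    n_in = 1
    for d in input_shape:
        n_in *= d  # flattening: every pixel and channel becomes one input
    total = 0
    for h in hidden_sizes:
        total += dense_params(n_in, h)
        n_in = h
    return total + dense_params(n_in, n_outputs)

print(dense_params(32 * 32 * 3, 128))                # 393344 in the first layer alone
print(mlp_params((32, 32, 3), [128, 128, 128], 10))  # 427658 in total
print(dense_params(224 * 224 * 3, 128))              # 19267712 for a 224x224x3 image
```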

Convolutional Neural Network: To overcome this issue and the lack of spatial awareness, we apply the concept of convolution to a neural network, calling it a Convolutional Neural Network (CNN). At the heart of the CNN is the convolution operation, which multiplies a convolution filter element-wise with a small portion of the image and sums the results. You can think of the convolution filter as a matrix of parameters that yields a large positive result when it finds what it is looking for in that portion of the image. In a way, filters behave like human eyes, but trained specifically for a task. For example, if a convolution filter is trained to find a nose in an image, it will output a large positive number when it finds one.

If we move the convolution filter across an entire image from left to right and top to bottom, recording the output as we go, we obtain a new array (a feature map). Depending on the values in the filter, this array picks out a particular feature of the input image. If we apply a new convolution filter to the output of the first one, it picks out a different, higher-level feature of the input. So we stack convolution layers one after the other, learning different features of our image. In practice, the convolution layer closest to the image has the most zoomed-in view and identifies the nitty-gritty details. As we move deeper, each filter effectively sees a larger region of the image and identifies broader patterns across it. Up to this point we are only learning the features of the image with the convolution operation. To perform the actual image classification task, we still need a fully connected layer with an activation function on top of the output of our convolution layers. So, in summary, a CNN stacks convolution and pooling layers that extract features, followed by fully connected layers that make the final prediction.
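Here is a minimal NumPy sketch of sliding a filter over an image. The tiny image and the hand-made vertical-edge filter are hypothetical. In a real CNN the filter values are learned during training, and libraries also support padding and strides, which this version leaves out.

```python
import numpy as np

def conv2d(image, kernel):
    # "Valid" convolution (no padding, stride 1). Like most deep learning
    # libraries, this does not flip the kernel (strictly, cross-correlation).
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):      # top to bottom
        for j in range(out_w):  # left to right
            patch = image[i:i + kh, j:j + kw]
            out[i, j] = np.sum(patch * kernel)  # element-wise multiply, then sum
    return out

# Hypothetical 5x5 grayscale image: dark on the left, bright on the right
image = np.array([[0, 0, 1, 1, 1]] * 5, dtype=float)
# Hand-made vertical-edge filter; in a CNN these values would be learned
kernel = np.array([[-1, 0, 1]] * 3, dtype=float)
print(conv2d(image, kernel))  # positive where the dark-to-bright edge is, 0 elsewhere
```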

The term pooling refers to a form of downsizing that uses a 2D sliding filter. The filter passes over the output of the convolution operation according to a configurable parameter called the stride, which is the number of pixels the filter moves across the input from one position to the next. For example, with a 2x2 filter and strides = 2, the output tensor will be half the height and half the width of the input tensor. This is useful for reducing the spatial size of the tensor as it passes through the network. Pooling leaves the number of channels unchanged; CNNs typically increase the channel count in the convolution layers instead. There are 2 common kinds of pooling: max pooling, which keeps the largest value in each window, and average pooling, which keeps the mean.
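Both kinds of pooling can be sketched in a few lines of NumPy. The sketch assumes a 2x2 window and a stride of 2 on a single-channel feature map with made-up values:

```python
import numpy as np

def pool2d(x, size=2, stride=2, mode="max"):
    out_h = (x.shape[0] - size) // stride + 1
    out_w = (x.shape[1] - size) // stride + 1
    out = np.zeros((out_h, out_w))
    reducer = np.max if mode == "max" else np.mean
    for i in range(out_h):
        for j in range(out_w):
            window = x[i * stride:i * stride + size, j * stride:j * stride + size]
            out[i, j] = reducer(window)  # keep one number per window
    return out

feature_map = np.array([[1, 3, 2, 0],
                        [4, 2, 1, 1],
                        [0, 1, 5, 6],
                        [2, 3, 7, 8]], dtype=float)
print(pool2d(feature_map, mode="max"))  # [[4. 2.] [3. 8.]]
print(pool2d(feature_map, mode="avg"))  # [[2.5 1. ] [1.5 6.5]]
```

The 4x4 input becomes 2x2, halving both height and width as described above.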

Another key thing to consider while building a CNN is batch normalisation. During training, the weights of a neural network can grow too large, a sign of what is known as the exploding gradient problem. When this happens, the loss function can suddenly start returning NaN after hours and hours of training. To counter this, we use a batch normalisation layer. It calculates the mean and standard deviation of each of its input channels across the batch, then normalises each channel by subtracting the mean and dividing by the standard deviation. There are then two learned parameters for each channel, the scale (gamma) and the shift (beta). The output is simply the normalised input, scaled by gamma and shifted by beta.
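The computation fits in a few lines. This NumPy sketch covers training-time behaviour for inputs of shape (batch, channels). For convolutional feature maps, the statistics would also be taken over height and width. At inference time, libraries use running averages of the mean and variance instead of batch statistics. The small `eps` is an assumed constant that avoids division by zero.

```python
import numpy as np

def batch_norm(x, gamma, beta, eps=1e-5):
    # x has shape (batch, channels); statistics are per channel, across the batch
    mean = x.mean(axis=0)
    std = np.sqrt(x.var(axis=0) + eps)
    x_hat = (x - mean) / std  # normalised input
    return gamma * x_hat + beta  # scaled by gamma, shifted by beta

rng = np.random.default_rng(0)
x = rng.normal(loc=50.0, scale=10.0, size=(64, 3))  # large, off-centre activations
gamma = np.ones(3)   # learned in practice; commonly initialised to 1
beta = np.zeros(3)   # learned in practice; commonly initialised to 0
out = batch_norm(x, gamma, beta)
print(out.mean(axis=0).round(6), out.std(axis=0).round(3))  # about 0 and 1 per channel
```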

We have seen in the case of an MLP how and why it can quickly start overfitting to the training data. Generally, if an algorithm performs well on the training data but not on the test data, we say that it is overfitting. To reduce this problem we use regularisation techniques, just as in traditional machine learning, which penalise the model if it starts to overfit. There are many ways of doing this, but one of the most common in deep learning is the dropout layer. During training, each dropout layer chooses a random set of units from the preceding layer and sets their output to 0. Incredibly, this simple addition drastically reduces overfitting. It ensures that the network doesn’t become overdependent on certain units or groups of units that, in effect, just remember observations from the training set. With dropout layers, the network cannot rely too much on any one unit, so knowledge is spread more evenly across the whole network, which is exactly what we want!
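Here is a minimal sketch of dropout. It assumes the common ‘inverted dropout’ formulation, which scales the surviving units up by 1 / (1 - rate) during training so that nothing needs to change at inference time:

```python
import numpy as np

def dropout(activations, rate, rng, training=True):
    if not training or rate == 0.0:
        return activations  # dropout is switched off at inference
    keep = rng.random(activations.shape) >= rate  # each unit kept with prob 1 - rate
    # Inverted dropout: rescale survivors so the expected activation is unchanged
    return activations * keep / (1.0 - rate)

rng = np.random.default_rng(0)
h = np.ones((1000, 100))
out = dropout(h, rate=0.5, rng=rng)
print((out == 0).mean())  # fraction of zeroed units, close to 0.5
print(out.mean())         # close to 1.0 thanks to the rescaling
```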

So, in summary, CNNs resolved 2 of the biggest drawbacks of the basic neural network:

- Capturing spatial patterns in unstructured knowledge

- Bringing down the number of parameters to be trained, since a small filter is shared across the entire image instead of every pixel needing its own weight for every neuron.
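The saving comes from weight sharing. The same small filter is reused at every position, so a convolution layer’s parameter count depends on the filter size and the number of filters, not on the image size. The sketch compares a hypothetical layer of 32 filters of size 3x3 on an RGB input with a fully connected layer of 32 units on the same flattened image.

```python
def conv_params(kernel_h, kernel_w, in_channels, filters):
    # One kernel_h x kernel_w x in_channels filter per output channel, plus one bias each
    return kernel_h * kernel_w * in_channels * filters + filters

def dense_params(n_in, n_out):
    # One weight per input per unit, plus one bias per unit
    return n_in * n_out + n_out

for side in (32, 224):
    # The conv count stays at 896; the dense count grows with the image
    print(side, conv_params(3, 3, 3, 32), dense_params(side * side * 3, 32))
```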

But not all unstructured datasets have a spatial pattern. Data like text, audio and time series has a sequential pattern, where the order of the data matters most. CNNs can be a terrible choice for such data, because they only see the input they are fed at that moment.

With this we dive into the realm of ‘autoregressive models’:

Recurrent Neural Networks (RNNs), which are in reality a very basic form of the now famous ‘generative models’, are built to capture the sequential nature of input data. Let’s dive into their architecture to understand how.

The most important problem an RNN has to solve is capturing the context of sequential data. It’s the context that defines what the next word in a sentence should be. If I say:

Wonderful restaurant! Nice food, nice ______!

What comes next is most likely the word ‘service’. We can say this because we know the context: it’s a restaurant, I’m happy with it, I’ve commented on the food and will probably talk about the service next. Let’s see how an RNN would preserve the context in this example, process the sentence and predict the word at the end. A basic RNN takes 2 inputs and generates 2 outputs. The 2 inputs are each word of the sentence, one time step at a time, and the hidden state from the previous time step (a(t-1)). The 2 outputs are the hidden state for the current time step (a(t)) and the predicted output (y(t)). Depending on the task, an RNN can also be configured as one-to-many (e.g. generating a caption for an image), many-to-one (e.g. classifying the sentiment of a review) or many-to-many (e.g. translating a sentence).

In its most basic form, an RNN is described by the following quantities:

- a(t) = the hidden state (the network’s activations) at time step t
- y(t) = the predicted output for time step t
- X(t) = the input for time step t
- Wa, Wx, Wy = the parameter matrices applied, respectively, to the previous hidden state a(t-1), to the input X(t), and to the current hidden state a(t) to produce y(t)

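The hidden state is what makes an RNN suited to sequences: you can think of the hidden state at time t as the network’s current understanding of the sequence up to that moment. With each time step and each new input, the hidden state is updated, so the hidden state at time step t depends on the input at time step t and on the previous hidden state a(t-1). In the equations below, tanh is the activation commonly used for the hidden state and g is the output activation (e.g. a softmax over the vocabulary); biases are omitted for simplicity. Putting it into equations:

$$a(t) = \tanh\big(W_a\,a(t-1) + W_x\,X(t)\big)$$

$$y(t) = g\big(W_y\,a(t)\big)$$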

Substituting the hidden state from the first equation into the second:

$$y(t) = g\Big(W_y \tanh\big(W_a\,a(t-1) + W_x\,X(t)\big)\Big)$$

Now that we know the architecture, let’s understand how it works. We start with an initial hidden state a(0) = 0, since there is no context yet, and feed in the first word of the sentence as the first input, X(1) = ‘Wonderful’. We assign random initial weights to Wa, Wx and Wy and calculate both outputs: the hidden state a(1) and the predicted word y(1). We then use a(1) as an input for the next time step, t = 2, along with the next word in the sequence, X(2) = ‘restaurant’. We can already see that when the network processes the second word, information about the first word is also passed in through the hidden state. So the network has the entire sequence up to that point as context. We move forward through the subsequent time steps in the same way until we reach time step t = 5, where the input is the second ‘nice’ and y(5) is our prediction for the final word.
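The walkthrough can be sketched in NumPy. The one-hot word encoding, the tiny vocabulary, the hidden size of 8 and the omitted biases are all assumptions for illustration. Because the weights are random and untrained, the predicted words are meaningless until the network is trained.

```python
import numpy as np

rng = np.random.default_rng(0)
sentence = ["wonderful", "restaurant", "nice", "food", "nice"]
vocab = sorted(set(sentence) | {"service"})  # tiny hypothetical vocabulary
word_to_id = {w: i for i, w in enumerate(vocab)}

hidden_size, vocab_size = 8, len(vocab)
# Random initial weights, as in the walkthrough (untrained)
W_a = rng.normal(0, 0.1, (hidden_size, hidden_size))
W_x = rng.normal(0, 0.1, (hidden_size, vocab_size))
W_y = rng.normal(0, 0.1, (vocab_size, hidden_size))

def one_hot(word):
    v = np.zeros(vocab_size)
    v[word_to_id[word]] = 1.0
    return v

def softmax(z):
    e = np.exp(z - z.max())
    return e / e.sum()

a = np.zeros(hidden_size)  # a(0) = 0: no context yet
for t, word in enumerate(sentence, start=1):
    a = np.tanh(W_a @ a + W_x @ one_hot(word))  # a(t) from a(t-1) and X(t)
    y = softmax(W_y @ a)                        # y(t): distribution over the next word
    print(t, word, "->", vocab[int(np.argmax(y))])
```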

A major question arises at this point. We used random weights to get the outputs at each time step, so our predictions are bound to be random too. So how can we train the network to get better weights and make accurate predictions? Can we use the same backpropagation method that we used for training the CNN? Yes, we can! But the gradients have to flow back through every time step, which is called backpropagation through time. Training over the first few time steps is easy, but it gets tricky as the number of time steps grows. This leads to the single biggest problem of using RNNs at scale: the vanishing/exploding gradient! We have 3 parameter matrices to optimise here, so we calculate the gradient w.r.t. all of them. The gradient with respect to the output weights (Wy) is computed quickly, since it is applied at each current time step without many dependencies. The gradients with respect to the hidden-state weights (Wa) and the input weights (Wx) are what can potentially cause issues.

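Every hidden state that participated in calculating the final output contributes to its error, so the weights that produced it should be updated to minimise that error. Because Wa is shared across all time steps, changing it affects even the first hidden state, which in turn affects the output at the final step. So we have to account for every step. Writing a_t for a(t), the output of a four-step sequence unrolls as:

$$y_4 = g\Big(W_y \tanh\big(W_a \tanh\big(W_a \tanh(W_a\,a_1 + W_x X_2) + W_x X_3\big) + W_x X_4\big)\Big)$$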

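Adding the cost function J, which compares the final prediction y_4 with the true next word y_4^*:

$$J = \mathcal{L}\big(y_4,\ y_4^{*}\big)$$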

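Applying the chain rule to get the derivative of the cost w.r.t. Wa:

$$\frac{\partial J}{\partial W_a} = \frac{\partial J}{\partial y_4}\,\frac{\partial y_4}{\partial a_4}\,\frac{\partial a_4}{\partial W_a}$$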

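Here, in the last factor ∂a4/∂Wa, a4 is a function of both a3 and Wa, and since a3 itself depends on Wa, neither can be treated as a constant. So we apply the multivariable chain rule: if the arguments x and y of f(x, y) are themselves functions of two variables u and v (i.e. x = x(u, v) and y = y(u, v)), then (and likewise for v):

$$\frac{\partial f}{\partial u} = \frac{\partial f}{\partial x}\,\frac{\partial x}{\partial u} + \frac{\partial f}{\partial y}\,\frac{\partial y}{\partial u}$$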

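Applying this to our equation, with a4 as f, its two arguments Wa and a3 as x and y, and Wa as u, it becomes:

$$\frac{\partial a_4}{\partial W_a} = \frac{\partial^{+} a_4}{\partial W_a} + \frac{\partial a_4}{\partial a_3}\,\frac{\partial a_3}{\partial W_a} = \sum_{k=1}^{4} \frac{\partial a_4}{\partial a_k}\,\frac{\partial^{+} a_k}{\partial W_a}$$

Here ∂⁺ denotes the direct derivative that holds the previous hidden state constant. Unrolling the second term repeatedly gives the sum, and every term contains a factor ∂a4/∂ak linking the last hidden state back to an earlier one.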

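Here we have to apply the chain rule again to get the partial derivative of a4 w.r.t. a1:

$$\frac{\partial a_4}{\partial a_1} = \frac{\partial a_4}{\partial a_3}\,\frac{\partial a_3}{\partial a_2}\,\frac{\partial a_2}{\partial a_1}$$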
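We can check this chain-rule product numerically on a scalar RNN. The weights, inputs and starting state below are hypothetical values. For a_t = tanh(w_a a_{t-1} + w_x x_t), each local factor is ∂a_t/∂a_{t-1} = w_a (1 - a_t²). Their product should match a finite-difference estimate of ∂a_4/∂a_1.

```python
import numpy as np

# Scalar RNN with hypothetical values: a_t = tanh(w_a * a_{t-1} + w_x * x_t)
w_a, w_x = 1.5, 0.7
xs = [0.5, -0.2, 0.9]  # inputs x_2, x_3, x_4

def run_from(a1):
    # Roll the recurrence forward from a_1; returns [a_1, a_2, a_3, a_4]
    states = [a1]
    for x in xs:
        states.append(np.tanh(w_a * states[-1] + w_x * x))
    return states

states = run_from(0.3)
# states[k] holds a_{k+1}; the factor for step k is d a_{k+1} / d a_k
factors = [w_a * (1 - states[k] ** 2) for k in range(1, 4)]
chain_rule = np.prod(factors)  # d a4/d a3 * d a3/d a2 * d a2/d a1

# Central finite-difference estimate of d a4 / d a1
h = 1e-6
numeric = (run_from(0.3 + h)[-1] - run_from(0.3 - h)[-1]) / (2 * h)
print(factors, chain_rule, numeric)  # the last two values should agree closely
```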

Now we are in one of 2 situations: each of these partial derivatives is either greater than 1, or less than 1, which is typical when a squashing activation function such as tanh or sigmoid shrinks them. Let’s first assume they are greater than 1, say 2. Over six time steps, the gradient quickly becomes:

Gradient = 2 × 2 × 2 × 2 × 2 × 2 = 64!

Now, when we try to use this gradient value to backpropagate through the network, we update the parameter weights using the following rule, where α is the learning rate:

$$W_a := W_a - \alpha\,\frac{\partial J}{\partial W_a}$$

It becomes harder and harder to control the training process as the sentence gets longer: this is the exploding gradient problem. In practice we can cap the size of the gradient and work around it (gradient clipping). There is a problem we can’t work around so easily, and that’s the vanishing gradient problem. Assume instead that each partial derivative is less than 1. For a value of, say, 0.8, the gradient will now be:

Gradient = 0.8 × 0.8 × 0.8 × 0.8 × 0.8 × 0.8 ≈ 0.26!
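This short sketch shows the arithmetic behind both problems, along with the clipping workaround. Treating every per-step derivative as the same constant is a simplification for illustration. `clip_by_norm` is a hypothetical helper showing one common form of gradient clipping, which rescales the gradient when its norm is too large.

```python
import numpy as np

def backprop_factor(local_derivative, steps):
    # Product of identical per-step derivatives, as in the chain rule above
    return local_derivative ** steps

for steps in (6, 20, 50):
    # Grows without bound for 2.0, shrinks towards 0 for 0.8
    print(steps, backprop_factor(2.0, steps), backprop_factor(0.8, steps))

def clip_by_norm(grad, max_norm):
    # Gradient clipping: rescale the gradient if its norm exceeds max_norm
    norm = np.linalg.norm(grad)
    return grad * (max_norm / norm) if norm > max_norm else grad

print(clip_by_norm(np.array([60.0, 80.0]), max_norm=5.0))  # [3. 4.]
```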

Now we wouldn’t be able to change the weights much in response to the early time steps, because their contribution to the gradient has almost vanished (hence the name: the vanishing gradient problem). In a typical sentence of 10–20 words, we can imagine these problems getting much worse. A better application of an RNN would be autocomplete, since the characters in a word form a short sequence. This is why RNNs were brought to production much earlier in that setting.

So in summary: RNNs are able to handle sequential data much better thanks to their ‘memory’. But they suffer from vanishing and exploding gradient problems, especially when sequences are long, which makes them very hard to train. RNNs are also only good at remembering recent inputs: the influence of an input fades the further back it is in the sequence. In language, it is very important to remember specific earlier inputs, since they can set the context for what comes next more than the most recent input does. So how can we overcome these issues with an RNN?

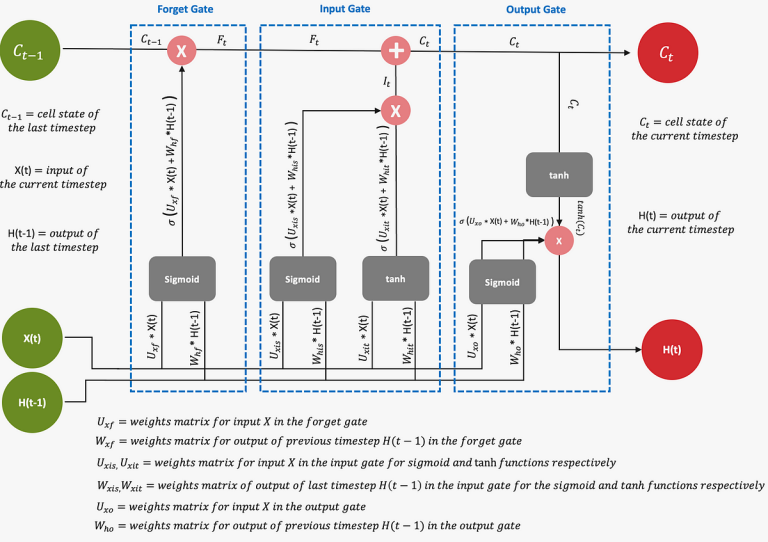

Long Short-Term Memory (LSTM) networks use many short-term memory cells to create a long-term memory. An LSTM is a type of RNN that uses a memory cell and gates to control the flow of information. This lets it selectively retain or discard information, largely avoiding the vanishing gradient problem of plain RNNs. This is why almost nobody uses a plain recurrent neural network anymore; people use some variant of the LSTM instead. Let’s look at the architecture of the base variant: