The widespread development of artificial intelligence, and in particular the launch of ChatGPT by OpenAI with its remarkably accurate and logical answers and dialogues, has stirred public consciousness and raised a new wave of interest in large language models (LLMs). It has become clear that their possibilities are greater than we had ever imagined. The headlines reflected both excitement and concern: Can robots write a cover letter? Can they help students take tests? Will bots influence voters through social media? Are they capable of creating new designs instead of artists? Will they put writers out of work?

After the spectacular launch of ChatGPT, there is now talk of similar models at Google, Meta, and other companies. Computer scientists are calling for greater scrutiny. They believe that society needs a new level of infrastructure and tools to safeguard these models, and have focused on developing such infrastructure.

One of these key safeguards could be a tool that gives teachers, journalists, and citizens the ability to distinguish between LLM-generated and human-written text.

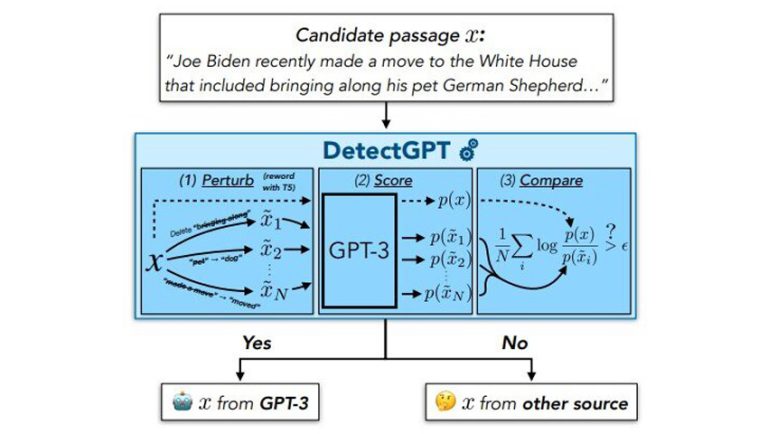

To this end, Eric Anthony Mitchell, a fourth-year computer science graduate student at Stanford University, developed DetectGPT together with his colleagues while working on his PhD. It has been released as a demo and a paper, and it distinguishes LLM-generated text from human-written text. In initial experiments, the tool correctly determined authorship 95% of the time across five popular open-source LLMs. The tool is in its early stages of development, but Mitchell and his colleagues are working to ensure that it will be of great benefit to society in the future.

Some general approaches to the problem of determining the authorship of texts have been researched previously. One approach, used by OpenAI itself, involves training a model on two kinds of text: some generated by LLMs and others written by humans. The model is then asked to identify the authorship of a given text. But, according to Mitchell, for this solution to succeed across subject areas and in different languages, it would require a huge amount of training data.

The second approach avoids training a new model and simply uses an LLM to detect its own output after the text is fed into the model.

Essentially, the technique is to ask the LLM how much it “likes” the text sample, says Mitchell. By “like” he does not mean that this is a sentient model with its own preferences. Rather, if the model “likes” a piece of text, this can be understood as the model giving that text a high score. Mitchell suggests that if a model likes a text, then it is likely that the text was generated by it or by similar models. If it does not like the text, then most likely it was not created by an LLM. According to Mitchell, this approach works much better than random guessing.

Mitchell suggested that even the most powerful LLMs have some bias toward using one phrasing of an idea over another. The model will be less inclined to “like” any slight paraphrase of its own output than the original. At the same time, if you perturb human-written text, the model is about equally likely to like the result more or less than the original.
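The idea can be sketched in a few lines of Python: score the original text, score many slightly rewritten versions of it (perturbations), and subtract the average of the latter from the former. This is only a minimal illustration under stated assumptions, not the researchers' implementation. The names `perturbation_discrepancy`, `toy_score`, and `toy_perturb` are hypothetical; in practice the score would come from a real LLM's probability for the text, and the rewrites from a paraphrasing model rather than from random word swaps.

```python
import random
from statistics import mean
from typing import Callable


def perturbation_discrepancy(
    text: str,
    score: Callable[[str], float],
    perturb: Callable[[str, random.Random], str],
    n_perturbations: int = 100,
    seed: int = 0,
) -> float:
    """Score of the original text minus the mean score of its perturbations."""
    rng = random.Random(seed)
    perturbed_scores = [score(perturb(text, rng)) for _ in range(n_perturbations)]
    return score(text) - mean(perturbed_scores)


# Toy stand-ins (assumptions for illustration only, not a real language model).
FAVOURED = {"the", "cat", "sat", "on", "mat"}
REPLACEMENTS = ["the", "cat", "sat", "a", "dog", "stood"]


def toy_score(text: str) -> float:
    """Pretend "liking": every word outside the favoured vocabulary costs one point."""
    return -float(sum(word not in FAVOURED for word in text.split()))


def toy_perturb(text: str, rng: random.Random) -> str:
    """Replace one randomly chosen word with a randomly chosen replacement word."""
    words = text.split()
    words[rng.randrange(len(words))] = rng.choice(REPLACEMENTS)
    return " ".join(words)


machine_like = "the cat sat on the mat"    # uses only words the toy model favours
human_like = "the dog sat near the rug"    # mixes favoured and unfavoured words

print(perturbation_discrepancy(machine_like, toy_score, toy_perturb))
print(perturbation_discrepancy(human_like, toy_score, toy_perturb))
```

In this toy setup, rewriting the machine-like sentence can only lower its score, so its discrepancy is positive, while rewrites of the human-like sentence move the score up about as often as down, so its discrepancy stays near zero.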

Mitchell also realized that this idea could be tested with popular open-source models, including those available through OpenAI’s API. After all, calculating how much a model likes a particular piece of text is central to how the model is trained, which makes this quantity readily available and very useful for detection.

To test their hypothesis, Mitchell and his colleagues conducted experiments in which they observed how much different publicly available LLMs liked human-written text as well as their own LLM-generated text. The selection of texts included fake news articles, creative writing, and academic essays. The researchers also measured how much each LLM liked, on average, 100 perturbations of each LLM-generated and human-written text. After all the measurements, the team plotted the difference between these two numbers (the score of the original and the average score of its perturbations), once for LLM-generated texts and once for human-written texts. They saw two bell curves that barely overlapped. The researchers concluded that it is possible to distinguish the source of a text very well using this single value. This gives a much more reliable result than methods that merely measure how much the model likes the original text.
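One common way to put a number on how little two such curves overlap is the probability that a randomly chosen machine-generated text receives a higher value than a randomly chosen human-written one (the area under the ROC curve). The sketch below assumes that measure and a simple threshold rule; the function names and all numeric values are hypothetical and invented purely for illustration, not taken from the study.

```python
from itertools import product
from typing import Sequence


def auroc(machine_values: Sequence[float], human_values: Sequence[float]) -> float:
    """Chance that a random machine value exceeds a random human value (ties count half)."""
    wins = 0.0
    for m, h in product(machine_values, human_values):
        if m > h:
            wins += 1.0
        elif m == h:
            wins += 0.5
    return wins / (len(machine_values) * len(human_values))


def classify(value: float, threshold: float) -> str:
    """Label a text from its single difference value."""
    return "machine" if value > threshold else "human"


# Invented example values: differences for machine-generated and human-written texts.
machine_values = [0.9, 0.8, 0.7]
human_values = [0.1, 0.2, 0.75]

print(auroc(machine_values, human_values))  # 1.0 would mean the curves do not overlap at all
print(classify(0.6, threshold=0.5))
```

A value of 0.5 corresponds to random guessing, so the closer the result is to 1.0, the better a single threshold separates the two groups.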

In the team’s initial experiments, DetectGPT correctly distinguished human-written text from LLM-generated text 95% of the time when using GPT-NeoX, a powerful open-source variant of OpenAI’s GPT models. DetectGPT was also able to tell human-written and LLM-generated text apart using LLMs other than the original source model, but with slightly lower accuracy. At the time of the initial experiments, ChatGPT was not yet available for direct testing.

Other companies and teams are also looking for ways to identify text written by AI. For example, OpenAI has already released its new text classifier. However, Mitchell does not want to directly compare OpenAI’s results with those of DetectGPT, as there is no standardized dataset for evaluation. But his team did run some experiments using the previous generation of OpenAI’s pre-trained AI detector and found that it performed well on news articles in English, performed poorly on medical articles, and failed completely on news articles in German. According to Mitchell, such mixed results are typical for models that depend on pre-training. In contrast, DetectGPT worked satisfactorily for all three of these text categories.

Feedback from users of DetectGPT has already helped identify some vulnerabilities.

For example, a person could explicitly prompt ChatGPT to evade detection, such as by asking the LLM to write text like a human. Mitchell’s team already has a few ideas on how to mitigate this drawback, but they have not been tested yet.

Another problem is that students using LLMs such as ChatGPT to cheat on assignments will simply edit the AI-generated text to avoid detection. Mitchell and his team investigated this possibility in their work and found that, while detection quality dropped for edited essays, the system still does a fairly good job of identifying machine-generated text when fewer than 10-15% of the words have been changed.
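To make the 10-15% figure concrete, here is one possible way to estimate the share of words changed between an original and an edited text. It is a rough sketch that assumes a word-level diff using Python's standard `difflib` module; the source does not say how the researchers measured edits, and the function name `fraction_of_words_changed` is hypothetical.

```python
from difflib import SequenceMatcher


def fraction_of_words_changed(original: str, edited: str) -> float:
    """Share of the original's words that no longer appear in place after editing."""
    original_words = original.split()
    edited_words = edited.split()
    if not original_words:
        return 0.0
    matcher = SequenceMatcher(a=original_words, b=edited_words, autojunk=False)
    # Matching blocks are runs of words that both versions share in the same order.
    unchanged = sum(block.size for block in matcher.get_matching_blocks())
    return (len(original_words) - unchanged) / len(original_words)


original = "the quick brown fox jumps over the lazy dog today"
edited = "the quick red fox jumps over the lazy dog today"
print(fraction_of_words_changed(original, edited))  # one of ten words was replaced
```

Under this definition, words that are purely inserted into the edited text do not count as changes; only words of the original that were replaced or removed do.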

In the long run, the goal of DetectGPT is to provide the public with a reliable and efficient tool for predicting whether a text, or even part of it, was machine-generated. Even if the model does not think that an entire essay or news article was machine-written, there is a need for a tool that can highlight a paragraph or sentence that looks particularly machine-generated.

It is worth emphasizing that, according to Mitchell, there are many legitimate uses for LLMs in education, journalism, and other areas. However, giving the public tools to verify the source of information has always been useful and remains so even in the age of AI.

DetectGPT is just one of several projects that Mitchell is developing for LLMs. Last year, he also published several approaches to editing LLMs, as well as a strategy called “self-destructing models” that disables an LLM when someone tries to use it for nefarious purposes.

Mitchell hopes to refine each of these techniques at least one more time before completing his PhD.

The study is published on the arXiv preprint server.