What Is Feature Scaling?

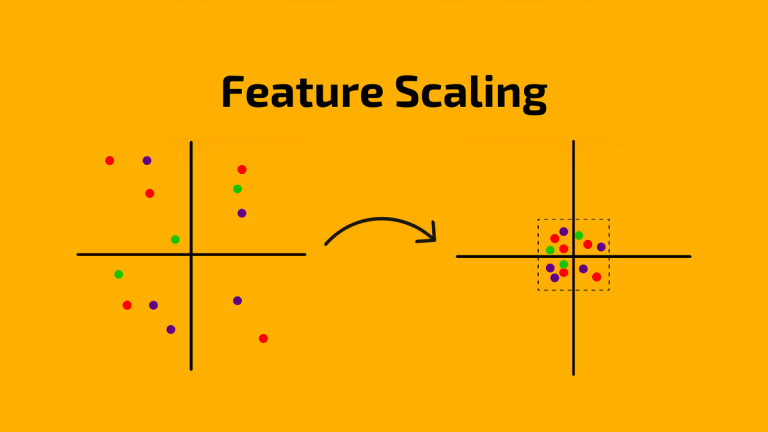

Feature scaling is a technique used in machine learning to put the features (variables) in a dataset on a comparable scale. It lets each feature contribute to the analysis on an equal footing, so that no single feature dominates just because its values are larger.

Imagine you have a dataset with two features, age and income. Age ranges from 0 to 100, while income ranges from $20,000 to $200,000. If you feed this data directly into a machine learning algorithm without scaling, the income feature might dominate the age feature simply because its values are much larger. Feature scaling helps prevent this.
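To make the domination effect concrete, here is a minimal Python sketch. It assumes plain Euclidean distance, the kind of measure used by distance-based methods such as k-nearest neighbors, and three hypothetical people whose ages and incomes are invented for illustration. The helper names `euclidean` and `scale_to_unit` are also illustrative. The scaled comparison reuses the age and income ranges from the example above.

```python
import math

def euclidean(a, b):
    """Euclidean distance between two equal-length feature vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def scale_to_unit(point, bounds):
    """Rescale each feature to [0, 1] using known (min, max) bounds."""
    return tuple((v - lo) / (hi - lo) for v, (lo, hi) in zip(point, bounds))

# Hypothetical people: (age in years, income in dollars)
person_a = (25, 50_000)
person_b = (75, 51_000)  # 50 years older than A, only $1,000 more income
person_c = (26, 60_000)  # almost A's age, $10,000 more income

# Raw features: the income gap swamps the 50-year age gap,
# so A appears much closer to B than to C.
raw_ab = euclidean(person_a, person_b)
raw_ac = euclidean(person_a, person_c)
print(f"raw:    A-B = {raw_ab:.2f}, A-C = {raw_ac:.2f}")

# Feature ranges from the example: age 0-100, income $20,000-$200,000
bounds = [(0, 100), (20_000, 200_000)]
a, b, c = (scale_to_unit(p, bounds) for p in (person_a, person_b, person_c))

# Scaled features: both columns now contribute on a comparable scale,
# and A ends up closer to C, who is nearly the same age.
scaled_ab = euclidean(a, b)
scaled_ac = euclidean(a, c)
print(f"scaled: A-B = {scaled_ab:.4f}, A-C = {scaled_ac:.4f}")
```

With raw values, person A looks far closer to B, who is 50 years older, than to C, who is almost the same age. After each feature is rescaled to the 0 to 1 range, the ordering flips.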

There are two main approaches to feature scaling:

1. Normalization (Min-Max Scaling):

This method rescales the data to a specified range, usually 0 to 1. It subtracts the minimum value from each value and then divides by the range (the maximum value minus the minimum value): x' = (x - min) / (max - min).

For example, if we apply normalization to our dataset, both age and income end up in the range 0 to 1. An age of 50 becomes (50 - 0) / (100 - 0) = 0.5. Because income starts at $20,000 rather than $0, an income of $100,000 becomes (100,000 - 20,000) / (200,000 - 20,000) ≈ 0.44; it is an income of $110,000, the midpoint of the range, that becomes 0.5.
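A minimal Python sketch of min-max normalization, assuming plain lists and computing the minimum and maximum from the values themselves. The function name `min_max_scale`, its `new_min`/`new_max` parameters, and the choice to map a constant column to `new_min` are illustrative assumptions, not any particular library's API. The sample ages and incomes are made-up values spanning the ranges from our example.

```python
def min_max_scale(values, new_min=0.0, new_max=1.0):
    """Min-max normalization: map values linearly onto [new_min, new_max]."""
    lo, hi = min(values), max(values)
    if hi == lo:
        # Assumption: a constant column has no range, so map every value to new_min.
        return [new_min for _ in values]
    span = new_max - new_min
    return [new_min + (v - lo) / (hi - lo) * span for v in values]

# Hypothetical samples covering the example ranges
ages = [0, 25, 50, 75, 100]
incomes = [20_000, 65_000, 110_000, 155_000, 200_000]

print(min_max_scale(ages))     # [0.0, 0.25, 0.5, 0.75, 1.0]
print(min_max_scale(incomes))  # [0.0, 0.25, 0.5, 0.75, 1.0]
```

Both features land on the same 0 to 1 scale, and `min_max_scale([20_000, 100_000, 200_000])` returns approximately `[0.0, 0.444, 1.0]`, since the minimum is $20,000 rather than $0.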

2. Standardization:

Standardization transforms the data to have a mean of 0 and a standard deviation of 1. It subtracts the mean from each value and then divides the result by the standard deviation: z = (x - mean) / standard deviation.

So, for our dataset, if the mean age is 50 and the standard deviation is 25, an age of 75 is transformed to (75 - 50) / 25 = 1. Similarly, if the mean income is $100,000 and the standard deviation is $50,000, an income of $150,000 is transformed to (150,000 - 100,000) / 50,000 = 1. Unlike min-max scaling, standardized values are not confined to a fixed range: a value of 1 simply means "one standard deviation above the mean".
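The same idea in Python, as a sketch using only the standard library's `statistics` module. `standardize` computes the mean and the population standard deviation (dividing by n) from the data; using the population rather than the sample standard deviation is an assumption here. The helper names `z_score` and `standardize`, the zero-spread handling, and the sample list are hypothetical, chosen so the numbers match the worked example.

```python
import statistics

def z_score(x, mean, std):
    """Standardize a single value given a known mean and standard deviation."""
    return (x - mean) / std

def standardize(values):
    """Z-score standardization: subtract the mean, then divide by the standard deviation."""
    mean = statistics.fmean(values)
    std = statistics.pstdev(values)  # population standard deviation (divides by n)
    if std == 0:
        # Assumption: a constant column has no spread, so map it to all zeros.
        return [0.0 for _ in values]
    return [(v - mean) / std for v in values]

# Worked example from the text
print(z_score(75, mean=50, std=25))                # 1.0
print(z_score(150_000, mean=100_000, std=50_000))  # 1.0

# Hypothetical data chosen so the mean age is 50 and the standard deviation is 25
ages = [25, 25, 75, 75]
print(standardize(ages))  # [-1.0, -1.0, 1.0, 1.0]
```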

Why Do We Need Feature Scaling?

We need feature scaling because many machine learning algorithms perform better or converge faster when features are on a similar scale. This is especially true of methods that rely on distances, such as k-nearest neighbors, k-means, and support vector machines, and of models trained with gradient descent. Without feature scaling, features with larger scales can disproportionately influence the model's output, leading to biased results. Scaling puts all features on a comparable footing during learning, which can improve both the performance and the accuracy of the model.

Was this post helpful? Share it with your connections and connect with me at Farhan Ali for more informative posts.