It’s continuously written that AI will be an augmenter of human decision making: the machine offering much-needed efficiency and speed, driven by our unchallenged ‘get things done faster’ culture.

It should also lead to a reduction in the noise of the masses, as described by the behavioural economics master himself, Daniel Kahneman, in Noise: A Flaw in Human Judgment. In other words, the machine can soften the edges of our human interpretations of the world around us, which vary from one person to the next, hence the existence of such noise.

However, this may only be the case at the margins.

The authority bias, availability bias, loss aversion and so on that give rise to such data variability aren’t reduced significantly as the machine works its way through to a conclusion. And for sure, not eliminated.

Such is the contamination of the input, especially across such a vast and unpredictable data set as the Internet, the harvest ground of our AI 1.0.

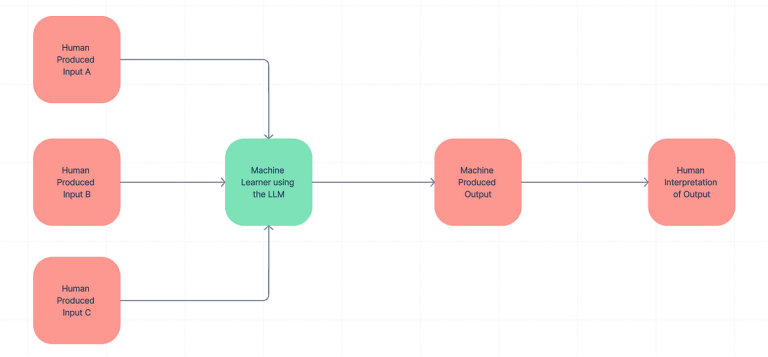

Furthermore, not only do we introduce a bias soup into our machine evaluation (the poor thing doesn’t stand a chance!), but then we humans are left to interpret the flawed results.

The effect?

A double infusion of behavioural biases.

One in the evaluation of our data set (the input), and the second in the human evaluation (the output).

Augmenting our flawed decision making with… imperfections, which we then imperfectly interpret.

For all that we look at machines as the threat, or the thing to fear, in reality it’s us that we (and the machine) should worry about.

Clowns to the left of me, jokers to the right, here I am, stuck in the middle with you…

Provided with a garbled sludge of human inputs, our machine really never stands a chance.

Instead it takes garbage in, and produces garbage out, unable to untangle the web of bias-powered data it has been fed.

Effectively, its role can be seen as that of a well-meaning reducer, confidently and quickly reducing the noise, if not entirely eliminating it.

Handing its findings on to our suspecting, but equally flawed, human at the end of the chain.

Our best chance lies firstly in being able to sanitise the original inputs for our data-hungry algorithm to then feed on.

Perhaps, in reducing the LLM (Large Language Model) to a mere LM (Language Model), we lay the groundwork for our machine to focus on the right thing, naturally cleansed of a multitude (but not all) of human biases.

However, opinions and interpretation matter. None more so than when considering the ultimate arbiter of the data: the human analyst at the end of the chain. The dotter of the i’s and the crosser of the t’s. The ‘highly skilled’ left over in our consulting factories once the first AI wave has run its course.

In my mind, this has to elevate the skills of that person to never-before-seen levels, and this is the second mitigation.

The gatekeeper of the output, the conclusion, must be a master of the material being evaluated (a domain expert in law, finance, or wherever we choose to use our machines), and also of the heuristics they need to be aware of in providing the ultimate judgement.

So not asking much then! As if our responsibility in this new world order wasn’t enough, we have to be careful what we train our machines with, and how we interpret their conclusions. Far from a machine age, this is indeed a new age of the humans…