Researchers from IBM Research Zurich and ETH Zurich have recently developed and presented a neuro-vector-symbolic architecture (NVSA). The architecture synergistically combines two powerful mechanisms, deep neural networks (DNNs) and vector-symbolic architectures (VSAs), to implement a visual perception frontend and a probabilistic reasoning backend. Presented in the journal Nature Machine Intelligence, it can overcome the limitations of both approaches and solve progressive matrices and other reasoning tasks more effectively.

Currently, neither deep neural networks nor symbolic artificial intelligence (AI) alone demonstrates the level of intelligence that we observe in humans. The main reason is that neural networks cannot decompose joint data representations to obtain separate objects, which is known as the binding problem. Symbolic AI, on the other hand, suffers from rule explosion. These two problems are central to neuro-symbolic AI, which aims to combine the best of both paradigms.

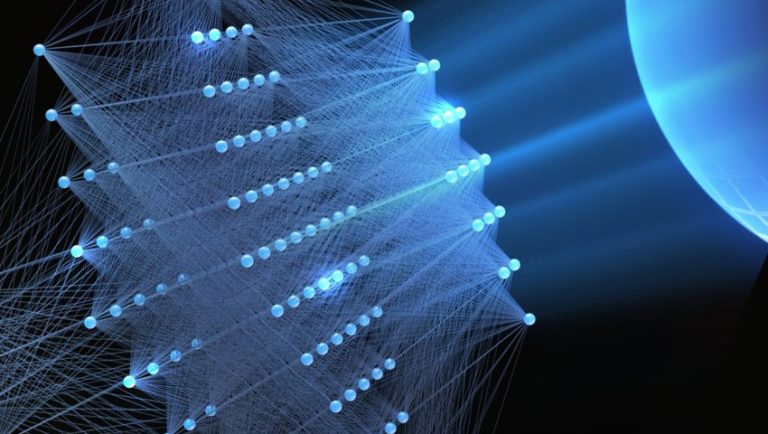

The neuro-vector-symbolic architecture (NVSA) is specifically designed to address these two problems by exploiting VSA's powerful operators on high-dimensional distributed representations, which serve as a common language between neural networks and symbolic AI. NVSA combines deep neural networks, known for their proficiency in perception tasks, with the VSA mechanism.

VSA is a computational model that uses high-dimensional distributed vectors and their algebraic properties to perform symbolic computations. In VSA, all representations, from atomic to compositional structures, are high-dimensional holographic vectors of the same fixed dimensionality.

VSA representations can be composed, decomposed, probed, and transformed in various ways using a set of well-defined operations, including binding, unbinding, bundling (merging), permutation, inverse permutation, and associative memory. These compositional and transparent characteristics make VSA suitable for analogical reasoning, but VSA has no perception module to process raw sensory inputs. It requires a perception system, such as a symbolic parser, that provides symbolic representations to support reasoning.
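The operations listed above can be illustrated with a minimal sketch. It assumes random bipolar (+1/-1) vectors with elementwise multiplication as binding, which is one common VSA flavor and not necessarily the vector type used by NVSA; all function and symbol names here are hypothetical.

```python
import random

D = 2048                      # fixed dimensionality shared by every vector
rng = random.Random(0)

def random_vector():
    """Atomic symbol: a random bipolar vector."""
    return [rng.choice((-1, 1)) for _ in range(D)]

def bind(a, b):
    """Binding: elementwise multiplication (result is dissimilar to both inputs)."""
    return [x * y for x, y in zip(a, b)]

unbind = bind                 # for bipolar vectors, binding is its own inverse

def bundle(*vectors):
    """Bundling (merging): elementwise majority vote, similar to every input."""
    return [1 if sum(col) >= 0 else -1 for col in zip(*vectors)]

def permute(a, shift=1):
    """Permutation: cyclic shift to the right."""
    shift %= D
    return a[-shift:] + a[:-shift]

def inverse_permute(a, shift=1):
    """Inverse permutation: cyclic shift back to the left."""
    return permute(a, -shift)

def similarity(a, b):
    """Normalized dot product: about 0 for unrelated vectors, 1 for identical ones."""
    return sum(x * y for x, y in zip(a, b)) / D

def cleanup(query, codebook):
    """Associative memory: return the name of the most similar stored vector."""
    return max(codebook, key=lambda name: similarity(query, codebook[name]))

# Hypothetical codebook of roles (shape, color, size) and fillers.
names = ("shape", "color", "size", "triangle", "square", "red", "blue", "large")
codebook = {name: random_vector() for name in names}

# Compose a "large red triangle" as a bundle of role-filler bindings.
obj = bundle(
    bind(codebook["shape"], codebook["triangle"]),
    bind(codebook["color"], codebook["red"]),
    bind(codebook["size"], codebook["large"]),
)

# Decompose: unbind a role, then clean up the noisy result.
recovered_shape = cleanup(unbind(obj, codebook["shape"]), codebook)
recovered_color = cleanup(unbind(obj, codebook["color"]), codebook)
```

The composed object has the same dimensionality as its parts, and querying it for a role recovers the filler through the associative memory, even though the unbound vector is only a noisy version of the stored one.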

When developing NVSA, the researchers focused on solving visual abstract reasoning problems, specifically the widely used IQ tests known as Raven’s Progressive Matrices.

Raven’s Progressive Matrices are tests designed to assess the level of intellectual development and abstract thinking skills. They evaluate the capacity for systematic, deliberate, and methodical intellectual activity, as well as overall logical reasoning. The tests consist of a series of items presented in sets, where one or more items are missing. To solve Raven’s Progressive Matrices, respondents must identify the missing elements within a given set from several available options. This requires advanced reasoning abilities, such as the ability to detect abstract relationships between objects, which can be related to their shape, size, color, or other characteristics.

In initial evaluations, NVSA proved highly effective at solving Raven’s Progressive Matrices. Compared with modern deep neural networks and neuro-symbolic approaches, NVSA set a new record average accuracy of 87.7% on the RAVEN dataset. NVSA also achieved the highest accuracy of 88.1% on the I-RAVEN dataset, while most deep learning approaches suffered significant drops in accuracy, averaging less than 50%. NVSA also enables real-time computation on processors, running 244 times faster than functionally equivalent symbolic logical reasoning.

To solve Raven’s Matrices using a symbolic approach, a probabilistic abduction method is used. It involves searching for a solution in a space defined by prior knowledge about the test. This prior knowledge is represented in symbolic form by describing all possible rule implementations that could govern the Raven’s tests. In this approach, searching for a solution requires traversing all valid combinations, computing the rule probabilities, and accumulating their sums. These calculations are computationally intensive and become a bottleneck in the search, because the number of combinations is too large to examine exhaustively.
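The exhaustive search described above can be sketched as follows. This is a simplified illustration under stated assumptions, not the authors' implementation: a single attribute with five possible values, one row of three panels, and two hypothetical rules (`constant` and `arithmetic_plus`). The probability mass functions (PMFs) stand in for the output of a perception module and are made up for the example.

```python
from itertools import product

def rule_probability(row_pmfs, rule):
    """Accumulate the joint probability of every value combination that satisfies `rule`."""
    n_values = len(row_pmfs[0])
    total = 0.0
    # Traverse all combinations of attribute values across the panels in the row.
    for combo in product(range(n_values), repeat=len(row_pmfs)):
        if rule(*combo):
            p = 1.0
            for pmf, value in zip(row_pmfs, combo):
                p *= pmf[value]
            total += p
    return total

# Hypothetical rules over the attribute values of the three panels in a row.
def constant(a, b, c):
    return a == b == c

def arithmetic_plus(a, b, c):
    return a + b == c

# Hypothetical perception output: one PMF over values 0..4 per panel.
row = [
    [0.05, 0.90, 0.05, 0.00, 0.00],  # panel 1: most likely value 1
    [0.00, 0.05, 0.90, 0.05, 0.00],  # panel 2: most likely value 2
    [0.00, 0.00, 0.05, 0.90, 0.05],  # panel 3: most likely value 3
]

scores = {
    "constant": rule_probability(row, constant),
    "arithmetic_plus": rule_probability(row, arithmetic_plus),
}
best_rule = max(scores, key=scores.get)
```

Even in this toy setting the loop visits 5³ = 125 combinations per rule. The count grows exponentially with the number of panels and multiplies across attributes and rules, which is the bottleneck the paragraph above refers to.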

NVSA does not encounter this problem, because it can perform such extensive probabilistic computations in a single vector operation. This allows it to solve tasks like Raven’s Progressive Matrices faster and more accurately than other AI approaches based solely on deep neural networks or VSA. It is the first example demonstrating how probabilistic reasoning can be executed efficiently using distributed representations and VSA operators.
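To give an intuition for how one vector operation can replace a sum over many value combinations, here is a minimal sketch. It assumes a fractional power encoding with random unit-magnitude complex vectors, chosen for illustration and not necessarily the encoding used in the paper; all names are hypothetical. A PMF is stored as a probability-weighted superposition of codewords, and binding two such vectors yields (approximately) the PMF of the sum of the two underlying values.

```python
import cmath
import math
import random

D = 4096
rng = random.Random(0)
# Random phasor base vector; the codeword for integer value k is base**k elementwise,
# so binding (elementwise product) of codewords adds their values.
base = [cmath.exp(1j * rng.uniform(-math.pi, math.pi)) for _ in range(D)]

def codeword(value):
    return [z ** value for z in base]

def encode_pmf(pmf):
    """Probability-weighted superposition of codewords."""
    vec = [0j] * D
    for value, p in enumerate(pmf):
        if p:
            vec = [v + p * c for v, c in zip(vec, codeword(value))]
    return vec

def bind(a, b):
    return [x * y for x, y in zip(a, b)]

def similarity(a, b):
    """Real part of the normalized inner product."""
    return sum((x * y.conjugate()).real for x, y in zip(a, b)) / D

def decode_pmf(vec, n_values):
    """Read out approximate probabilities by comparing against each codeword."""
    return [similarity(vec, codeword(value)) for value in range(n_values)]

def exact_sum_pmf(pa, pb):
    """Reference: explicit enumeration of all value pairs."""
    out = [0.0] * (len(pa) + len(pb) - 1)
    for j, x in enumerate(pa):
        for k, y in enumerate(pb):
            out[j + k] += x * y
    return out

pmf_a = [0.1, 0.7, 0.2]                   # hypothetical PMF of panel 1
pmf_b = [0.0, 0.3, 0.6, 0.1]              # hypothetical PMF of panel 2
pmf_c = [0.0, 0.0, 0.0, 0.9, 0.1, 0.0]    # hypothetical PMF of panel 3

# One binding replaces the double loop in exact_sum_pmf.
bound = bind(encode_pmf(pmf_a), encode_pmf(pmf_b))
approx = decode_pmf(bound, 6)
exact = exact_sum_pmf(pmf_a, pmf_b)
max_error = max(abs(x - y) for x, y in zip(approx, exact))

# Probability that "panel 1 + panel 2 = panel 3" holds, from a single similarity.
rule_prob_vsa = similarity(bound, encode_pmf(pmf_c))
rule_prob_exact = sum(x * y for x, y in zip(exact, pmf_c))
```

The result is approximate: random codewords are only quasi-orthogonal, so the readout carries noise that shrinks as the dimensionality `D` grows.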

NVSA is an important step towards integrating different AI paradigms into a unified framework for solving tasks that involve both perception and higher-level reasoning. The architecture has shown great promise in solving complex logical problems quickly and efficiently. In the future, it can be further tested and applied to various other problems, potentially inspiring researchers to develop similar approaches.

The library that implements the NVSA functions is available on GitHub.

You can find a complete example of solving Raven’s Matrices here.