Transformers, the architecture behind Google Translate and ChatGPT, explained in everyday language in 100 seconds.

Imagine you're having a conversation, and every word you hear helps you figure out what's coming next. That's a bit like how self-attention works in AI; check out my previous post to see how it works. It's a smart way to pay more attention to important words in order to better predict the next ones. But the real magic begins with Transformer models, which turn this concept into a full-fledged word generation engine.

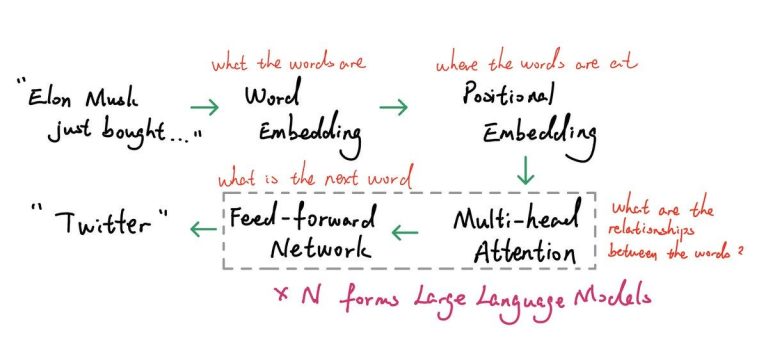

Here's the catch: computers don't understand words. They see everything as zeros and ones. So, when you feed words to AI models like ChatGPT, each word (more precisely, each token) gets transformed into a word embedding. Think of it as turning words into a secret numerical code, where similar words have similar codes. "Sad" and "unhappy" are close in meaning, so they're close in this numerical space too. Check out @akshay_pachaar for his awesome illustration of embeddings.
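To make "similar words have similar codes" concrete, here is a minimal Python sketch. The three-dimensional vectors are made-up values (real models learn embeddings with hundreds or thousands of dimensions), and cosine similarity is just one common way to measure how close two embeddings are.

```python
import math

# Hypothetical toy embeddings: real models learn much longer vectors;
# these 3-d values are invented purely for illustration.
EMBEDDINGS = {
    "sad":     [0.9, 0.1, -0.3],
    "unhappy": [0.8, 0.2, -0.4],
    "rocket":  [-0.2, 0.9, 0.5],
}

def embed(word):
    """Look up a word's vector (a real model would tokenize the text first)."""
    return EMBEDDINGS[word]

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

print(round(cosine_similarity(embed("sad"), embed("unhappy")), 3))
print(round(cosine_similarity(embed("sad"), embed("rocket")), 3))
```

With these toy numbers, "sad" and "unhappy" score close to 1, while "sad" and "rocket" come out negative.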

But here's the kicker: the order of words matters too. "Dog bites man" is a completely different story from "Man bites dog." So, a positional encoding is also added to each word's embedding, telling the model where the word sits in the sentence. Instead of using simple counts like 1, 2, and 3, which can get unwieldy in long texts, the original Transformer uses sine and cosine functions of different frequencies, which keep every position value in a neat range from -1 to 1.
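Here is a small sketch of the sine/cosine scheme described in "Attention Is All You Need": dimension 2i of position pos gets sin(pos / 10000^(2i/d_model)) and dimension 2i+1 gets the matching cosine. The tiny d_model of 4 and the helper names are illustrative choices, not taken from any library.

```python
import math

def positional_encoding(position, d_model):
    """Sinusoidal positional encoding as described in "Attention Is All You Need".

    Even dimensions use sine, odd dimensions use cosine; each pair of
    dimensions (2k, 2k+1) shares one frequency, and the wavelength grows
    geometrically with k.
    """
    pe = []
    for i in range(d_model):
        two_k = i - i % 2  # 2k for both members of the pair
        angle = position / (10000 ** (two_k / d_model))
        pe.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
    return pe

def add_position(embedding, position):
    """Add the encoding element-wise to a word embedding of the same length."""
    pe = positional_encoding(position, len(embedding))
    return [e + p for e, p in zip(embedding, pe)]

for pos in range(3):
    print(pos, [round(v, 3) for v in positional_encoding(pos, 4)])
```

Because each dimension pair uses a different frequency, every position gets a distinctive pattern, yet no value ever leaves the -1 to 1 range, however long the text.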

Next, the Transformer digs into the meaning and order of words using multi-head attention. Basically, it's several self-attention operations running in parallel, each capturing different word relationships across the context, be they referential or emotional. See my previous post for a primer on self-attention.
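A bare-bones, pure-Python sketch of multi-head attention follows. Each head projects the same token vectors with its own query, key, and value matrices and runs scaled dot-product attention; the head outputs are then concatenated and mixed by an output projection. The weights are random placeholders (a trained model learns them), and the sizes (3 tokens, 8 dimensions, 2 heads) are arbitrary assumptions.

```python
import math
import random

def matmul(a, b):
    """Multiply an (n x k) matrix by a (k x m) matrix, both as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def attention(q, k, v):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V."""
    d_k = len(k[0])
    scores = matmul(q, [list(col) for col in zip(*k)])  # Q K^T
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    return matmul(weights, v)

def random_matrix(rows, cols, rng):
    return [[rng.gauss(0, 0.5) for _ in range(cols)] for _ in range(rows)]

def multi_head_attention(x, num_heads, rng):
    """Run several attention heads in parallel and combine their outputs.

    Weights are random here; a trained model would learn them.
    """
    d_model = len(x[0])
    d_head = d_model // num_heads
    head_outputs = []
    for _ in range(num_heads):
        # Each head has its own projections, so it can focus on a different relationship.
        wq, wk, wv = (random_matrix(d_model, d_head, rng) for _ in range(3))
        head_outputs.append(attention(matmul(x, wq), matmul(x, wk), matmul(x, wv)))
    # Concatenate the heads for each token, then mix them with an output projection.
    concat = [sum((h[t] for h in head_outputs), []) for t in range(len(x))]
    wo = random_matrix(d_head * num_heads, d_model, rng)
    return matmul(concat, wo)

rng = random.Random(0)
tokens = random_matrix(3, 8, rng)  # 3 tokens, each an 8-d (embedding + position) vector
out = multi_head_attention(tokens, num_heads=2, rng=rng)
print(len(out), len(out[0]))  # one 8-d output vector per token
```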

With the meaning, position, and relationships of words in hand, the Transformer grasps the essence of the context. It then processes this information with a feed-forward network, a type of neural network. This part of the AI brain mulls over the gathered insights, and its output is what the model ultimately uses to pick the best next word, like choosing "Musk" to follow "Tesla's CEO Elon…".
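The feed-forward step can be sketched as the position-wise network from the original paper, FFN(x) = max(0, xW1 + b1)W2 + b2, applied to each token's vector separately. The toy sizes and random weights below are assumptions for illustration; the paper itself uses d_model = 512 and an inner size of 2048.

```python
import random

def linear(x, w, b):
    """Vector x (length n) times matrix w (n x m), plus bias b (length m)."""
    return [sum(xi * w[i][j] for i, xi in enumerate(x)) + b[j] for j in range(len(b))]

def relu(v):
    return [max(0.0, a) for a in v]

def feed_forward(x, w1, b1, w2, b2):
    """Position-wise FFN: expand to d_ff, apply ReLU, project back to d_model."""
    return linear(relu(linear(x, w1, b1)), w2, b2)

rng = random.Random(0)
d_model, d_ff = 4, 16  # hypothetical toy sizes
w1 = [[rng.gauss(0, 0.5) for _ in range(d_ff)] for _ in range(d_model)]
b1 = [0.0] * d_ff
w2 = [[rng.gauss(0, 0.5) for _ in range(d_model)] for _ in range(d_ff)]
b2 = [0.0] * d_model

# The same weights are applied to every token's vector independently.
sequence = [[0.1, -0.2, 0.3, 0.0], [0.5, 0.5, -0.5, 0.2]]
print([feed_forward(tok, w1, b1, w2, b2) for tok in sequence])
```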

Combining multi-head attention with this feed-forward network forms the core of a Transformer block. Stack up enough of these, and you've got yourself a language model like ChatGPT that can chat, translate, or write stories. The diagram below shows a simplified pipeline of a Transformer model.
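To show how stacking and next-word prediction fit together, here is a toy decoder-style sketch: three blocks, each self-attention followed by a feed-forward network with residual connections, then a projection onto a tiny hypothetical vocabulary and a greedy pick of the most likely word. To stay short it uses single-head attention, omits layer normalization and positional encodings, and applies a causal mask so each word only looks backward, as GPT-style models do. All weights are random, so the chosen word is arbitrary; training is what would make "Musk" the likely continuation.

```python
import math
import random

rng = random.Random(42)
D = 8  # hypothetical model width
VOCAB = ["Tesla's", "CEO", "Elon", "Musk", "rocket"]  # hypothetical toy vocabulary

def rand_matrix(rows, cols):
    return [[rng.gauss(0, 0.3) for _ in range(cols)] for _ in range(rows)]

def matmul(a, b):
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def add(a, b):
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]

def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def make_block():
    """One block: single-head self-attention, then a feed-forward network."""
    wq, wk, wv = rand_matrix(D, D), rand_matrix(D, D), rand_matrix(D, D)
    w1, w2 = rand_matrix(D, 4 * D), rand_matrix(4 * D, D)

    def block(x):
        q, k, v = matmul(x, wq), matmul(x, wk), matmul(x, wv)
        scores = matmul(q, [list(c) for c in zip(*k)])
        # Causal mask: each word may only attend to itself and earlier words.
        weights = [softmax([s / math.sqrt(D) if j <= i else float("-inf")
                            for j, s in enumerate(row)])
                   for i, row in enumerate(scores)]
        x = add(x, matmul(weights, v))  # residual connection around attention
        hidden = [[max(0.0, h) for h in row] for row in matmul(x, w1)]  # ReLU
        return add(x, matmul(hidden, w2))  # residual connection around the FFN
    return block

blocks = [make_block() for _ in range(3)]  # "stack up enough of these"
embeddings = {w: [rng.gauss(0, 1) for _ in range(D)] for w in VOCAB}
w_vocab = rand_matrix(D, len(VOCAB))  # maps the final vector to one score per word

def encode(words):
    x = [list(embeddings[w]) for w in words]  # positional encodings omitted for brevity
    for block in blocks:
        x = block(x)
    return x

def next_word(words):
    logits = matmul([encode(words)[-1]], w_vocab)[0]  # last position predicts what comes next
    probs = softmax(logits)
    return VOCAB[probs.index(max(probs))], probs  # greedy choice: most likely word

word, probs = next_word(["Tesla's", "CEO", "Elon"])
print(word, [round(p, 3) for p in probs])
```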

This is a glimpse into Transformer technology, a cornerstone of today's AI wonders, introduced in the groundbreaking paper "Attention Is All You Need." Kudos to the authors for this tech marvel.

If you're interested in simplified and intuitive AI explanations, follow me as I bring this tech marvel closer to everyone. #AI4ALL #TechForGood