Research in computer vision is constantly expanding the possibilities for editing and creating video content, and one of the new tools presented at the International Conference on Computer Vision in Paris is OmniMotion. It is described in the paper “Tracking Everything Everywhere All at Once.” Developed by Cornell researchers, it is a powerful optimization method designed to estimate motion in video footage, with the potential to transform video editing and AI-driven generative content creation.

Traditionally, motion estimation methods have followed one of two main approaches: sparse feature tracking and dense optical flow. However, neither models motion over long time intervals while keeping track of every pixel in the video. Approaches that try to address this problem usually operate within limited temporal and spatial context, which leads to error accumulation over long trajectories and inconsistencies in the motion estimates. Overall, developing methods for tracking both dense and long-range trajectories remains an open problem in the field, with three main aspects:

- motion tracking over long time intervals

- motion tracking even through occlusion events

- ensuring consistency in space and time

OmniMotion is a new optimization method designed to more accurately estimate both dense and long-range motion in video sequences. Unlike earlier algorithms that operated within limited time windows, OmniMotion provides a complete and globally consistent representation of motion. This means that every pixel in a video can be tracked throughout the entire footage, opening the door to new possibilities for video content analysis and creation. The method can handle complex tasks such as tracking through occlusions and modeling various combinations of camera and object motion. Tests carried out during the research showed that this approach outperforms pre-existing methods both quantitatively and qualitatively.

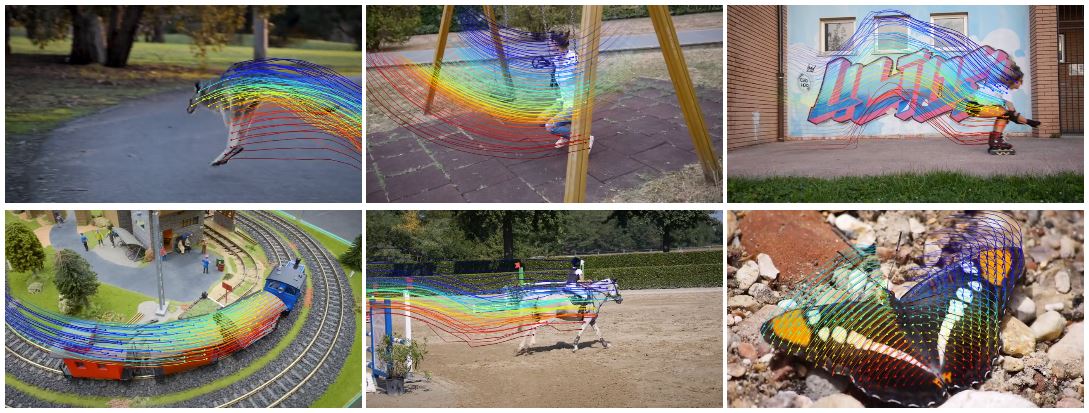

Figure 1. OmniMotion jointly tracks all points in a video across all frames, even through occlusions.

As shown in the motion illustration above, OmniMotion estimates full-length motion trajectories for every pixel in every frame of a video. Only sparse trajectories of foreground objects are shown for clarity, but OmniMotion computes motion trajectories for all pixels. The method provides accurate, consistent motion over long ranges, even for fast-moving objects, and reliably tracks objects through occlusions, as shown in the examples with the dog and the swing.

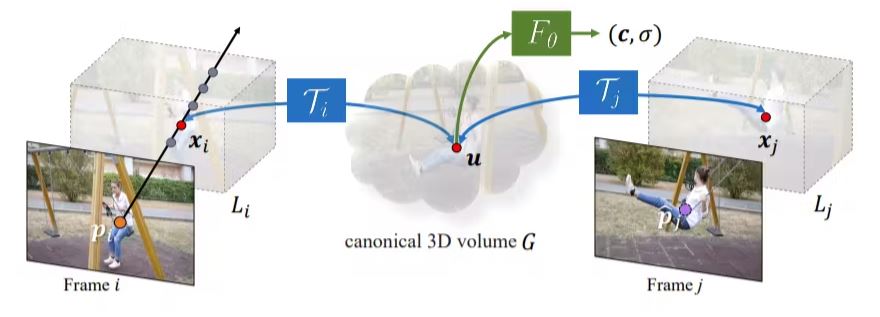

In OmniMotion, the canonical volume G is a 3D atlas containing information about the video. It includes a coordinate-based network Fθ, built on the NeRF method, that maps each canonical 3D coordinate to a density σ and a color c.

The density tells the method where surfaces lie in a frame and whether objects are occluded, and the color is used to compute a photometric loss during optimization. The canonical 3D volume therefore plays an important role in capturing and analyzing the motion dynamics of a scene.
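To make the role of density concrete, here is a minimal sketch of NeRF-style alpha compositing along a single ray. It is an illustration under assumptions, not the authors' code: the opacity formula `alpha = 1 - exp(-sigma)` folds the step size into the density, and the function names and toy values are hypothetical.

```python
import math

def compositing_weights(densities):
    """Return per-sample weights w_k = T_k * alpha_k for samples ordered near to far."""
    weights = []
    transmittance = 1.0  # fraction of the ray that has not been absorbed yet
    for sigma in densities:
        alpha = 1.0 - math.exp(-sigma)  # opacity of this sample (assumed form)
        weights.append(transmittance * alpha)
        transmittance *= 1.0 - alpha
    return weights

def composite(values, weights):
    """Weighted sum of per-sample vectors (colors or 3D points)."""
    dims = len(values[0])
    return tuple(sum(w * v[d] for w, v in zip(weights, values)) for d in range(dims))

# Toy ray: empty space, then a dense red surface, then a blue surface hidden behind it.
densities = [0.0, 0.0, 50.0, 50.0]
colors = [(0.0, 0.0, 0.0), (0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 0.0, 1.0)]
weights = compositing_weights(densities)
rendered = composite(colors, weights)
```

In this toy ray the first dense sample absorbs almost all of the weight, so the occluded blue sample contributes next to nothing to the rendered color. The same weights can be applied to 3D sample positions instead of colors.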

OmniMotion also uses 3D bijections, which provide a continuous one-to-one correspondence between 3D points in each frame's local coordinates and the canonical 3D coordinate system. These bijections enforce motion consistency by guaranteeing that corresponding 3D points in different frames originate from the same canonical point.

To represent complex real-world motion, the bijections are implemented with invertible neural networks (INNs), which provide expressive and adaptive mappings. This lets OmniMotion accurately capture and track motion across frames while keeping the overall representation consistent.
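The idea of mapping through a shared canonical space can be sketched with a toy invertible network. The example below assumes affine coupling layers with hand-written scale and shift functions in place of learned ones; `coupling`, `make_frame_bijection`, and the per-frame parameter `t` are hypothetical names used only for illustration.

```python
import math

def coupling(index, scale_fn, shift_fn):
    """Affine coupling layer that updates coordinate `index` from the other two."""
    def forward(p):
        rest = [v for i, v in enumerate(p) if i != index]
        out = list(p)
        out[index] = p[index] * math.exp(scale_fn(*rest)) + shift_fn(*rest)
        return tuple(out)

    def inverse(p):
        # The other two coordinates are unchanged, so scale and shift can be recomputed.
        rest = [v for i, v in enumerate(p) if i != index]
        out = list(p)
        out[index] = (p[index] - shift_fn(*rest)) * math.exp(-scale_fn(*rest))
        return tuple(out)

    return forward, inverse

def make_frame_bijection(t):
    """Toy per-frame bijection: local 3D point <-> canonical 3D point."""
    layers = [
        coupling(2, lambda x, y: 0.1 * t * math.tanh(x), lambda x, y: 0.2 * t * y),
        coupling(0, lambda y, z: 0.05 * t * math.sin(z), lambda y, z: 0.3 * t * y),
    ]

    def to_canonical(p):
        for fwd, _ in layers:
            p = fwd(p)
        return p

    def to_local(u):
        for _, inv in reversed(layers):
            u = inv(u)
        return u

    return to_canonical, to_local

T_i, T_i_inv = make_frame_bijection(1.0)  # frame i
T_j, T_j_inv = make_frame_bijection(2.0)  # frame j

x_i = (0.3, -0.5, 0.8)   # point in frame i's local space
u = T_i(x_i)             # shared canonical point
x_j = T_j_inv(u)         # the same point expressed in frame j's local space
```

Because every layer is exactly invertible, `x_i` and `x_j` are guaranteed to map to the same canonical point `u`, which is the consistency property described above.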

Figure 2. Method overview. OmniMotion is comprised of a canonical 3D volume G and a set of 3D bijections.

To implement OmniMotion, the authors built a network consisting of six affine transformation layers. The latent code for each frame is computed by a 2-layer network with 256 channels, and the code has dimension 128. The canonical representation is implemented with a GaborNet architecture with 3 layers and 512 channels. Pixel coordinates are normalized to the range [-1, 1], and a local 3D space is defined for each frame. The mapped canonical locations are initialized within the unit sphere. In addition, contraction operations adapted from mip-NeRF 360 are applied for numerical stability during training.
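The two coordinate conventions in this paragraph can be sketched as follows. The pixel normalization below assumes coordinates run from 0 to width-1 and 0 to height-1, which the text does not specify, and `contract` follows the scene contraction function from mip-NeRF 360 (identity inside the unit ball, points outside squashed into a ball of radius 2). Function names are hypothetical.

```python
import math

def normalize_pixel(px, py, width, height):
    """Map pixel coordinates in [0, width-1] x [0, height-1] to [-1, 1] (assumed convention)."""
    return (2.0 * px / (width - 1) - 1.0, 2.0 * py / (height - 1) - 1.0)

def contract(point):
    """mip-NeRF 360 style contraction of a 3D point."""
    norm = math.sqrt(sum(c * c for c in point))
    if norm <= 1.0:
        return tuple(point)  # points inside the unit ball are left unchanged
    scale = (2.0 - 1.0 / norm) / norm  # new radius is 2 - 1/norm, always below 2
    return tuple(scale * c for c in point)

corner = normalize_pixel(639, 479, 640, 480)
far_point = contract((10.0, 0.0, 0.0))
```

Bounding the canonical coordinates this way keeps the inputs to the network in a fixed range no matter how far a point drifts, which is one plausible reading of the "numerical stability" remark.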

This architecture is trained on each video sequence using the Adam optimizer for 200,000 iterations. Each training batch includes 256 pairs of correspondences sampled from 8 image pairs, for a total of 1024 correspondences. In addition, 32 points are sampled along each ray using stratified sampling. This carefully designed architecture is key to OmniMotion's strong performance on the difficult problem of motion estimation in video.
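Stratified sampling along a ray can be sketched in a few lines: the depth range is split into equal bins and one point is drawn uniformly from each bin. The near and far bounds used here are placeholder assumptions, since the article does not give them, and `stratified_samples` is a hypothetical helper name.

```python
import random

def stratified_samples(near, far, num_samples=32, rng=random):
    """Draw one uniformly random depth from each of `num_samples` equal bins in [near, far)."""
    bin_width = (far - near) / num_samples
    return [near + (k + rng.random()) * bin_width for k in range(num_samples)]

rng = random.Random(0)  # seeded generator so the sketch is reproducible
depths = stratified_samples(0.0, 2.0, 32, rng)
```

Compared with drawing all 32 depths independently, this guarantees that every part of the ray is covered while still varying the exact sample positions from one iteration to the next.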

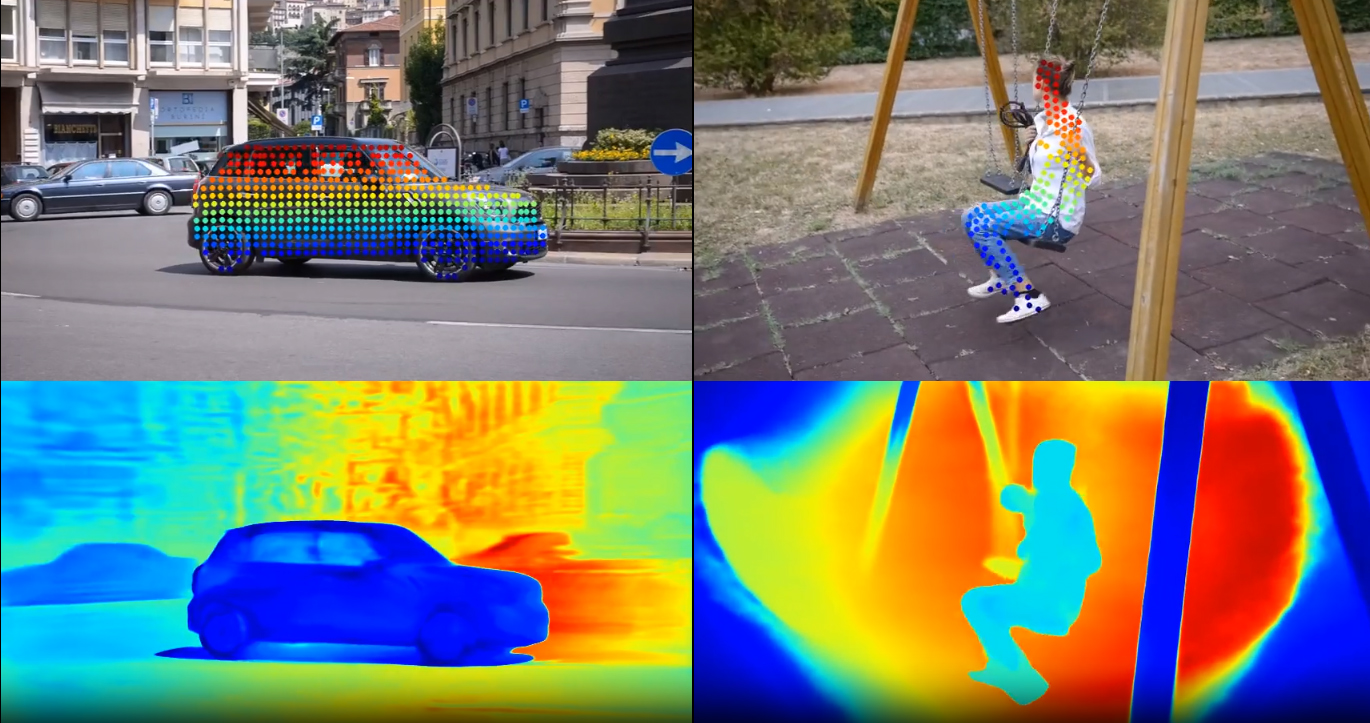

One very useful aspect of OmniMotion is its ability to extract pseudo-depth renderings from the optimized quasi-3D representation. These show the relative depth of the different objects in the scene and their ordering. Figure 3 illustrates the pseudo-depth visualization: nearby objects are shown in blue and distant objects in red, which makes the depth ordering of the different parts of the scene clear.

Figure 3. Pseudo-depth visualization
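One plausible way to obtain such a rendering is to composite sample depths along each ray and map the result to a color ramp. The sketch below is an assumption-laden illustration: the normalization by total weight and the linear blue-to-red ramp are choices made here, not details taken from the paper, and both function names are hypothetical.

```python
import math

def expected_depth(densities, depths):
    """Density-weighted average depth along one ray (samples ordered near to far)."""
    transmittance, depth, total = 1.0, 0.0, 0.0
    for sigma, d in zip(densities, depths):
        alpha = 1.0 - math.exp(-sigma)  # assumed opacity form
        w = transmittance * alpha
        depth += w * d
        total += w
        transmittance *= 1.0 - alpha
    # Fall back to the farthest sample if the ray hits nothing.
    return depth / total if total > 0 else depths[-1]

def depth_to_rgb(depth, near, far):
    """Linear ramp from blue (near) to red (far); the figure's real colormap is unknown."""
    t = min(max((depth - near) / (far - near), 0.0), 1.0)
    return (t, 0.0, 1.0 - t)

ray_depth = expected_depth([0.0, 50.0, 0.0], [0.5, 1.0, 1.5])
pixel_color = depth_to_rgb(ray_depth, near=0.5, far=1.5)
```

Since the representation is only quasi-3D, such values are best read as a relative ordering of surfaces rather than as metric depth.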

It is important to note that, like many motion estimation methods, OmniMotion has its limitations. It does not always cope with very fast and highly non-rigid motion, or with thin structures in the scene. In these scenarios, pairwise correspondence methods may not provide enough reliable matches, which can reduce the accuracy of the global motion estimate. OmniMotion continues to evolve to address these challenges and to advance video motion analysis.

Check out the demo here. Technical details are available on GitHub.