In the world of artificial intelligence, the role of deep learning is becoming central. Artificial intelligence paradigms have traditionally taken inspiration from the functioning of the human brain, but deep learning appears to have surpassed the brain's learning capabilities in some respects. Deep learning has undoubtedly made impressive strides, but it has its drawbacks, including high computational complexity and the need for large amounts of data.

In light of these concerns, scientists from Bar-Ilan University in Israel are raising an important question: should artificial intelligence incorporate deep learning? Their new paper, published in the journal Scientific Reports, continues their previous research on the advantage of tree-like architectures over convolutional networks. The main goal of the new study was to find out whether complex classification tasks can be learned effectively by shallower neural networks based on brain-inspired principles, while reducing the computational load. In this article, we outline key findings that could reshape the artificial intelligence industry.

As we already know, successfully solving complex classification tasks requires training deep neural networks consisting of dozens or even hundreds of convolutional and fully connected hidden layers. This is quite different from how the human brain functions. In deep learning, the first convolutional layer detects localized patterns in the input data, and subsequent layers identify larger-scale patterns until a reliable class characterization of the input data is achieved.

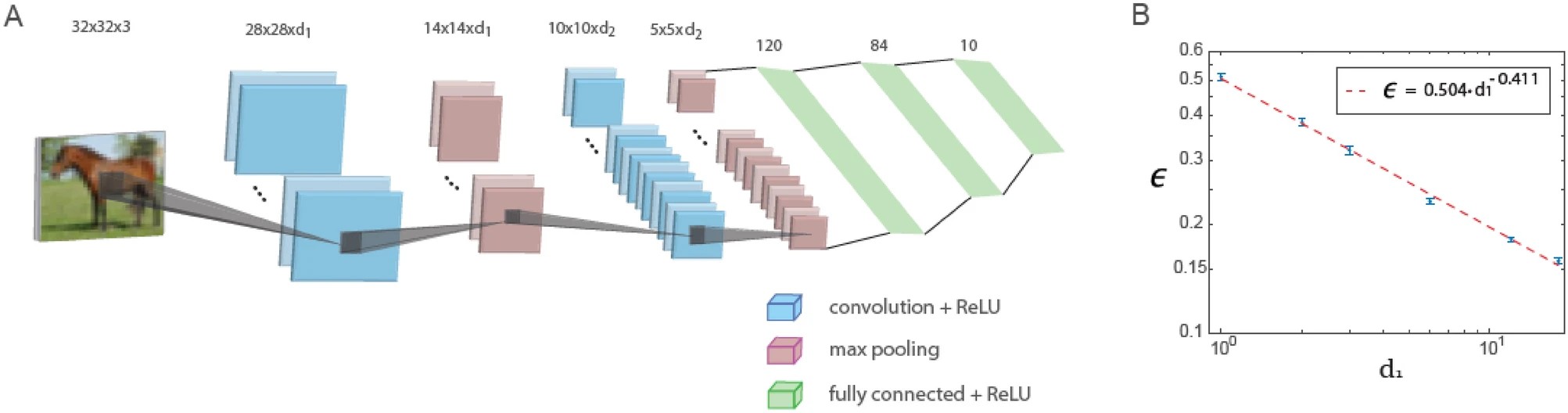

This study shows that, for a fixed depth ratio between the first and second convolutional layers, the error of a small LeNet architecture consisting of only five layers decreases with the number of filters in the first convolutional layer according to a power law. Extrapolating this power law suggests that the generalized LeNet architecture can achieve low error values on the CIFAR-10 data, similar to those obtained with deep neural networks.

The figure below shows training in a generalized LeNet architecture. The generalized LeNet architecture for the CIFAR-10 database (input size 32 × 32 × 3 pixels) consists of five layers: two convolutional layers with max pooling and three fully connected layers. The first and second convolutional layers contain d1 and d2 filters, respectively, where d1 / d2 ≃ 6 / 16. The plot shows the test error, denoted as ϵ, versus d1 on a logarithmic scale, indicating a power-law dependence with an exponent ρ∼0.41. The neuron activation function is ReLU.

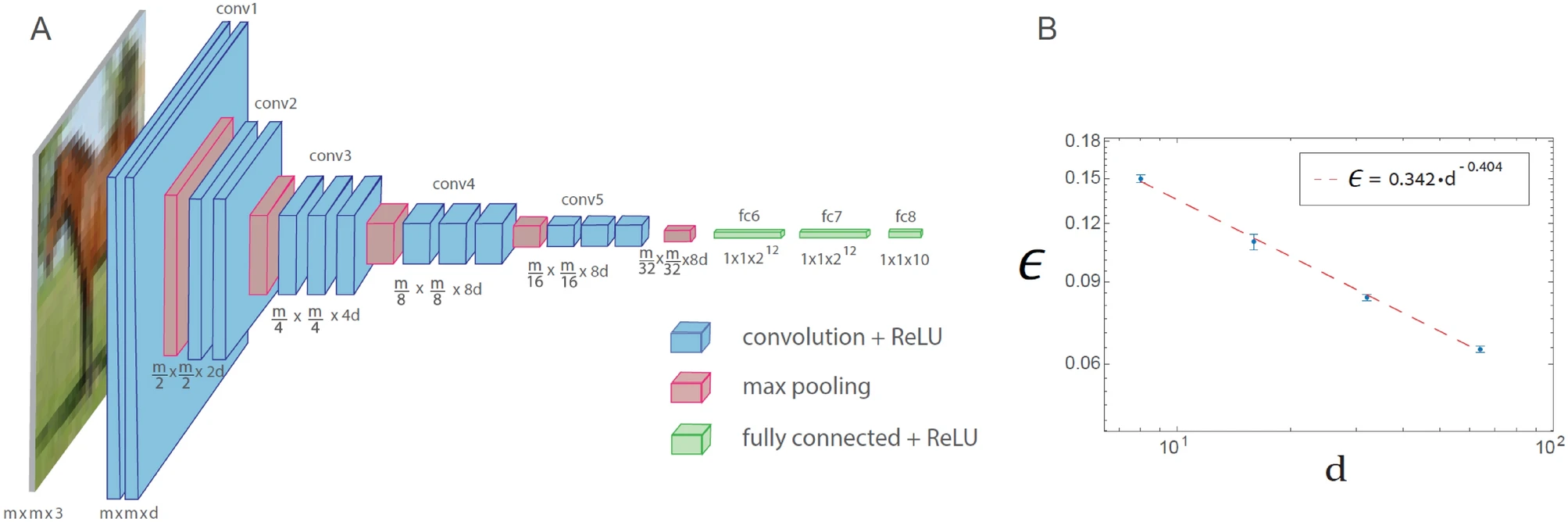
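To make the power-law reading concrete, here is a minimal sketch of how an exponent ρ can be recovered from a straight-line fit on a log-log scale, and how the fit can be extrapolated to a target error. The data points are synthetic, generated from an assumed law ϵ = A · d1^(−ρ) with the exponent quoted above; the prefactor `ASSUMED_PREFACTOR`, the list of widths, and both function names are hypothetical and are not taken from the paper.

```python
import math


def fit_power_law(widths, errors):
    """Least-squares fit of log(error) = log(A) - rho * log(width)."""
    xs = [math.log(w) for w in widths]
    ys = [math.log(e) for e in errors]
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return math.exp(intercept), -slope  # (prefactor A, exponent rho)


def width_for_target_error(prefactor, rho, target_error):
    """Invert error = A * width**(-rho) to extrapolate the required width."""
    return (prefactor / target_error) ** (1.0 / rho)


# Synthetic, noise-free points; these are NOT measurements from the paper.
ASSUMED_PREFACTOR = 0.5  # hypothetical value chosen for illustration
RHO_FROM_TEXT = 0.41     # exponent quoted in the article
widths = [6, 12, 24, 48, 96]
errors = [ASSUMED_PREFACTOR * w ** (-RHO_FROM_TEXT) for w in widths]

prefactor, rho = fit_power_law(widths, errors)
print(f"fitted rho = {rho:.2f}")
```

Because the points lie exactly on a power law, the fit returns the exponent they were generated with. With real measurements the points would scatter around the line, and the extrapolated width would carry the uncertainty of the fit.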

A similar power-law phenomenon is also observed for the generalized VGG-16 architecture. However, VGG-16 requires more operations than LeNet to achieve a given error rate.

Training in the generalized VGG-16 architecture is demonstrated in the figure below. The generalized VGG-16 architecture consists of 16 layers, where the number of filters in the nth set of convolutions is d × 2^(n − 1) (n ≤ 4), and the square root of the filter size is m × 2^(−(n − 1)) (n ≤ 5), where m × m × 3 is the size of each input (d = 64 in the original VGG-16 architecture). The plot shows the test error, denoted as ϵ, versus d on a logarithmic scale for the CIFAR-10 database (m = 32), indicating a power-law dependence with an exponent ρ∼0.4. The neuron activation function is ReLU.
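The scaling rules above can be tabulated with a short sketch. It reads the "square root of the filter size" as the side length of the feature map in each convolutional set, which is an interpretation rather than something the text states outright. The function name `generalized_vgg16_schedule` is hypothetical. The text gives the filter count only for n ≤ 4, so the fifth set is assumed to reuse the fourth set's count, as the original VGG-16 does (512 and 512).

```python
def generalized_vgg16_schedule(d, m):
    """Return (set index, filter count, feature-map side) for the five conv sets."""
    schedule = []
    for n in range(1, 6):
        # Filter count d * 2**(n - 1) holds for n <= 4 per the text.
        # Assumption: set 5 keeps the count of set 4, as in the original VGG-16.
        filters = d * 2 ** (min(n, 4) - 1)
        # Side length m * 2**(-(n - 1)); integer division assumes m is a multiple of 16.
        side = m // 2 ** (n - 1)
        schedule.append((n, filters, side))
    return schedule


# d = 64 is the original VGG-16 width; m = 32 matches CIFAR-10 inputs.
for n, filters, side in generalized_vgg16_schedule(d=64, m=32):
    print(f"set {n}: {filters} filters, {side} x {side} feature map")
```

Varying `d` while keeping `m = 32` gives the family of widths over which a test error versus d curve of this kind would be measured.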

The power-law phenomenon covers various generalized LeNet and VGG-16 architectures, indicating its universal behavior and suggesting a quantitative hierarchical complexity in machine learning. In addition, a conservation law for the convolutional layers, in which the square root of their size multiplied by their depth stays constant, is found to asymptotically minimize errors. The efficient approach to shallow learning demonstrated in this study requires further quantitative research using different databases and architectures, as well as accelerated implementation with future specialized hardware designs.
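As a worked check of the conservation law, consider the generalized VGG-16 scaling described earlier, with d × 2^(n − 1) filters and a side length of m × 2^(−(n − 1)) in the nth convolutional set. The symbols D_n (depth) and S_n (square root of the size) are introduced here only for illustration, and the check covers n ≤ 4, the range for which the text gives both formulas.

```latex
\begin{aligned}
D_n &= d \cdot 2^{\,n-1}, \qquad S_n = m \cdot 2^{-(n-1)}, \qquad n \le 4, \\
S_n \, D_n &= \bigl(m \cdot 2^{-(n-1)}\bigr)\bigl(d \cdot 2^{\,n-1}\bigr) = m\,d .
\end{aligned}
```

The product is the same for every set in that range. For CIFAR-10 inputs (m = 32) and the original width d = 64, it equals 32 × 64 = 2048.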