Overview

Grid computing is a computing infrastructure that combines computers and other resources, which may be spread across different geographical locations, to achieve a common goal. Unused resources on multiple computers are pooled together and made available to a single job.

Training neural networks for ML requires a lot of computational power. A single computer or node often cannot keep up, and training can take 10 to 12 hours. Even with small datasets, I have left a multi-CPU single node running for hours just to train a small NN model.
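Pooling nodes helps because the training work itself can be split. Below is a minimal sketch of data-parallel training, assuming a toy linear model y = w·x rather than a real neural network: the data is split into shards, each "node" computes a gradient on its own shard, and the gradients are averaged before one shared weight update. Threads from `concurrent.futures` stand in for grid nodes, and the names `shard`, `local_gradient` and `train` are illustrative. Python threads will not actually speed up this CPU-bound loop (because of the GIL), so treat it as a picture of the data flow, not a benchmark; on a real grid each shard would sit on a separate machine.

```python
"""Toy data-parallel training: each 'node' computes a gradient on its own
shard, and the gradients are averaged before one shared update.
Threads stand in for grid nodes; this is an illustration only."""
from concurrent.futures import ThreadPoolExecutor
from typing import List, Tuple

Sample = Tuple[float, float]  # (x, y) pair


def shard(data: List[Sample], n_nodes: int) -> List[List[Sample]]:
    """Split the dataset round-robin into one shard per node."""
    return [data[i::n_nodes] for i in range(n_nodes)]


def local_gradient(w: float, part: List[Sample]) -> float:
    """Mean-squared-error gradient of the model y = w * x on one shard."""
    return sum(2 * (w * x - y) * x for x, y in part) / len(part)


def train(data: List[Sample], n_nodes: int = 4, lr: float = 0.01, epochs: int = 200) -> float:
    parts = shard(data, n_nodes)
    w = 0.0
    with ThreadPoolExecutor(max_workers=n_nodes) as pool:
        for _ in range(epochs):
            # Every worker computes the gradient for its shard using the same current w.
            grads = list(pool.map(local_gradient, [w] * len(parts), parts))
            # Average the per-shard gradients, then apply one shared update.
            w -= lr * sum(grads) / len(grads)
    return w


if __name__ == "__main__":
    samples = [(float(x), 3.0 * x) for x in range(1, 9)]  # true weight is 3
    print(round(train(samples), 3))
```

Averaging gradients (rather than averaging independently trained models) keeps every node working on the same weights, which is the usual starting point for distributing training.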

Let's look at some types of grid computing in the context of applications and projects:

- Computational grid computing

- Data grid computing

- Collaborative grid computing

- Manuscript grid computing

- Modular grid computing

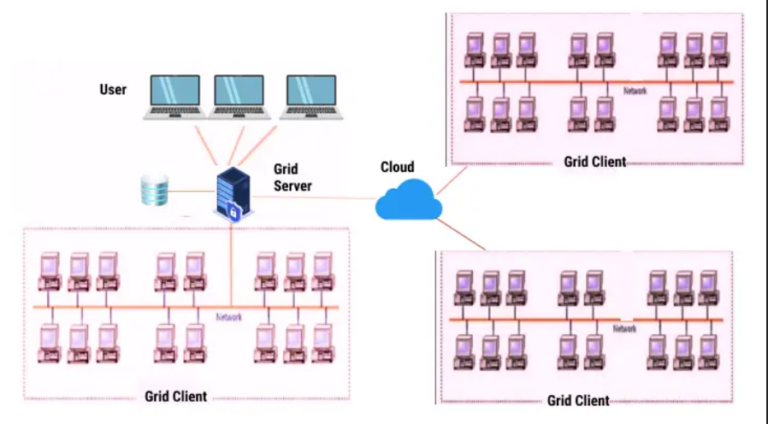

Grid Computing Architecture

- Top layer

- Second layer: middleware

- Third layer: computing layer with CPU, storage, and memory

- Bottom layer: allows nodes or computers to connect to a grid computing network

We have three kinds of nodes:

- User node (the one that requests resources)

- Provider node (performs the subtasks needed by the user node)

- Control node (administers the network and manages allocations)
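The division of labour between these nodes can be sketched as a small allocator. This is a hypothetical control node, not any particular grid middleware: it takes the subtasks a user node submits and places each one on the provider node with the most free CPUs that can still fit it. The class names, the CPU-only capacity model and the largest-first ordering are all assumptions chosen for illustration.

```python
"""Hypothetical control node that allocates a user node's subtasks to
provider nodes based on free CPU capacity."""
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class ProviderNode:
    name: str
    free_cpus: int
    assigned: List[str] = field(default_factory=list)


@dataclass
class Subtask:
    name: str
    cpus: int


class ControlNode:
    def __init__(self, providers: List[ProviderNode]) -> None:
        self.providers = providers

    def allocate(self, subtasks: List[Subtask]) -> Dict[str, str]:
        """Return a mapping of subtask name -> provider name.

        Raises RuntimeError if a subtask fits on no provider."""
        placement: Dict[str, str] = {}
        # Place the largest subtasks first so they are less likely to be left without room.
        for task in sorted(subtasks, key=lambda t: t.cpus, reverse=True):
            candidates = [p for p in self.providers if p.free_cpus >= task.cpus]
            if not candidates:
                raise RuntimeError(f"no provider can fit {task.name}")
            # Choose the provider with the most spare capacity.
            best = max(candidates, key=lambda p: p.free_cpus)
            best.free_cpus -= task.cpus
            best.assigned.append(task.name)
            placement[task.name] = best.name
        return placement


if __name__ == "__main__":
    control = ControlNode([ProviderNode("node-a", 8), ProviderNode("node-b", 4)])
    print(control.allocate([Subtask("t1", 6), Subtask("t2", 3), Subtask("t3", 2)]))
```

A real control node would also track memory, GPUs and node failures, but the shape is the same: the user node only sees the placement, and the provider nodes only see their own subtasks.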

We have been using the cloud. AWS HPC (high performance computing on AWS) is a suite of products and services; Amazon Elastic Compute Cloud (EC2) is one example. GCP provides Batch, which I used to schedule data pipelines, and AWS Batch is available as well. Amazon FSx for Lustre is there for massive datasets; I have not used it, but feel free to explore.

We can use grid computing with Elasticsearch clusters and their new generative AI features. I worked on generative AI with Elasticsearch clusters that were very large (many nodes, and scalable), combined with the licensed generative AI features, for:

- Semantic search using ELSER and open source text embeddings

- Importing an open source text embedding model into Elasticsearch

- Hosting our own open source LLM

- Using our own hosted model to summarize semantic search results

- Deploying Elasticsearch with the Elastic Learned Sparse EncodeR (ELSER). Again, all of this is AI/ML grid computing.
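The semantic search step can be sketched as a search request body. This is a minimal sketch that assumes the `text_expansion` query used with ELSER in Elasticsearch 8.x (newer releases recommend the `sparse_vector` query instead), an index whose ELSER tokens are stored in a field we call `content_embedding` (hypothetical), and the model id `.elser_model_2`. Check the field name, model id and query type against your own deployment.

```python
"""Build a request body for an ELSER semantic search using the text_expansion query."""
import json
from typing import Any, Dict


def elser_query(text: str,
                field: str = "content_embedding",  # hypothetical field holding ELSER tokens
                model_id: str = ".elser_model_2",  # assumed ELSER v2 model id; adjust to your cluster
                size: int = 10) -> Dict[str, Any]:
    """Return a search body that expands `text` with ELSER and matches it against `field`."""
    return {
        "size": size,
        "query": {
            "text_expansion": {
                field: {
                    "model_id": model_id,
                    "model_text": text,
                }
            }
        },
    }


if __name__ == "__main__":
    print(json.dumps(elser_query("how do grid nodes share GPUs?"), indent=2))
```

With the official Python client, the pieces of this dict could be passed as `es.search(index=..., query=body["query"], size=body["size"])`; the top hits are then what a self-hosted model would summarize.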

Needless to say, all of this relies on GPUs.

Grid environments require effective data management. Common practices are to relocate data or application modules to different grid nodes, or to make them accessible there.

Tasks are scheduled to run on the available resources. We have used JobScheduler for years. Schedulers must process jobs both sequentially and in parallel; these could be patching, Ansible jobs, or batch scheduling of pipelines such as ETL pipelines, ML pipelines, and ML data pipelines.
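The sequential-versus-parallel requirement comes down to dependency ordering. Here is a minimal sketch using Python's standard `graphlib.TopologicalSorter` (Python 3.9+), not JobScheduler itself: jobs are grouped into stages, jobs within a stage have no dependencies on each other and can run in parallel, and stages run one after another. The job names are hypothetical.

```python
"""Group pipeline jobs into stages: jobs in the same stage can run in parallel,
and the stages themselves run sequentially."""
from graphlib import TopologicalSorter
from typing import Dict, List, Set


def plan_stages(deps: Dict[str, Set[str]]) -> List[List[str]]:
    """deps maps each job to the set of jobs that must finish before it starts."""
    sorter = TopologicalSorter(deps)
    sorter.prepare()  # raises graphlib.CycleError on circular dependencies
    stages: List[List[str]] = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # every job whose prerequisites are done
        stages.append(ready)
        sorter.done(*ready)  # assume the whole stage finished successfully
    return stages


if __name__ == "__main__":
    pipeline = {
        "extract_sales": set(),
        "extract_users": set(),
        "transform": {"extract_sales", "extract_users"},
        "load_warehouse": {"transform"},
        "train_model": {"load_warehouse"},
        "patch_hosts": set(),  # independent job, e.g. an Ansible patch run
    }
    for i, stage in enumerate(plan_stages(pipeline), start=1):
        print(f"stage {i}: {stage}")
```

A real scheduler would call `done()` as each job actually completes instead of per stage, which lets faster branches move ahead without waiting for the slowest job in their stage.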

ML Pipelines

Compute Engine provides graphics processing units (GPUs) that we can add to VMs or cloud nodes. We can use these GPUs to accelerate specific workloads on your VMs, such as machine learning and data processing.

I have used LSTMs. An LSTM unit consists of a cell, an input gate, an output gate, and a forget gate. The forget gate filters the information coming from the previous state, deciding what the model's current state should remember and what it should forget.
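To make the gates concrete, here is one forward step of the standard LSTM cell written out in plain Python, with no framework. It is an illustrative sketch: the names `lstm_step` and `affine` and the `params` layout (a `(W, U, b)` triple per gate) are our own choices. The forget gate `f` scales the previous cell state, the input gate `i` scales the new candidate values `g`, and the output gate `o` controls how much of the updated cell is exposed as the hidden state.

```python
"""One forward step of a standard LSTM cell, written gate by gate."""
import math
from typing import Dict, List, Tuple

Vector = List[float]
Matrix = List[List[float]]


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def affine(W: Matrix, x: Vector, U: Matrix, h: Vector, b: Vector) -> Vector:
    """Compute W @ x + U @ h + b using plain Python lists."""
    return [
        sum(w * xi for w, xi in zip(W[r], x)) + sum(u * hi for u, hi in zip(U[r], h)) + b[r]
        for r in range(len(b))
    ]


def lstm_step(x: Vector, h_prev: Vector, c_prev: Vector,
              params: Dict[str, Tuple[Matrix, Matrix, Vector]]) -> Tuple[Vector, Vector]:
    """params maps gate name ('f', 'i', 'o', 'g') to its (W, U, b). Returns (h, c)."""
    def gate(name: str, act) -> Vector:
        W, U, b = params[name]
        return [act(z) for z in affine(W, x, U, h_prev, b)]

    f = gate("f", sigmoid)    # forget gate: how much of c_prev to keep
    i = gate("i", sigmoid)    # input gate: how much of the candidate to write
    o = gate("o", sigmoid)    # output gate: how much of the cell to expose
    g = gate("g", math.tanh)  # candidate cell values
    c = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c_prev, i, g)]
    h = [ok * math.tanh(ck) for ok, ck in zip(o, c)]
    return h, c


if __name__ == "__main__":
    zeros = {k: ([[0.0]], [[0.0]], [0.0]) for k in "fiog"}
    print(lstm_step([1.0], [0.0], [1.0], zeros))
```

With all weights at zero every sigmoid gate sits at 0.5, so the cell simply halves its previous state; pushing the forget-gate bias up drives `f` toward 1 and makes the cell remember its previous value almost exactly.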

GCLOUD

Using gcloud, you can create VMs with GPUs. I have used GPUs for ML pipelines on premises, but in the cloud we have better options for availability: GPU regions and GPU zones.
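As a sketch of that workflow, the helpers below only build `gcloud` command lines and do not run anything. They use the `gcloud compute instances create` flags `--zone`, `--machine-type`, `--accelerator=type=...,count=...` and `--maintenance-policy=TERMINATE` (GPU VMs cannot live-migrate), plus `gcloud compute accelerator-types list` with a zone filter to see which GPUs a zone offers. The default machine type and GPU type are assumptions; GPU availability varies by zone, so check before creating.

```python
"""Build (but do not run) gcloud commands for GPU VMs."""
import shlex
from typing import List


def gpu_vm_command(name: str, zone: str, machine_type: str = "n1-standard-8",
                   accelerator: str = "nvidia-tesla-t4", count: int = 1) -> List[str]:
    """Return argv for creating a VM with `count` GPUs of type `accelerator` attached."""
    return [
        "gcloud", "compute", "instances", "create", name,
        f"--zone={zone}",
        f"--machine-type={machine_type}",
        f"--accelerator=type={accelerator},count={count}",
        # GPU VMs cannot live-migrate, so host maintenance must stop them instead.
        "--maintenance-policy=TERMINATE",
    ]


def list_gpu_types_command(zone: str) -> List[str]:
    """Return argv that lists the GPU types available in one zone."""
    return ["gcloud", "compute", "accelerator-types", "list", f"--filter=zone:{zone}"]


if __name__ == "__main__":
    print(shlex.join(list_gpu_types_command("us-central1-a")))
    print(shlex.join(gpu_vm_command("ml-pipeline-gpu", "us-central1-a")))
```

Returning an argv list instead of a single string makes it easy to pass straight to `subprocess.run` later without shell quoting problems.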

AWS

On AWS, use G3 instances. G3 instances require AWS-provided DNS resolution for GRID licensing to work. We can use an AMI with public NVIDIA drivers; the driver types are:

- Tesla driver

- Gaming driver

- GRID driver

GRID drivers (G5, G4dn, and G3 cases)

We can download the software and develop custom AMIs for use with NVIDIA A10G, NVIDIA Tesla T4, or NVIDIA Tesla M60 hardware.
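When building those custom AMIs, it helps to know which GPU a given instance type carries. This small lookup maps the three GRID-driver families above to their GPUs (G5 to A10G, G4dn to T4, G3 to M60) and flags the G3 DNS requirement. The `LINUX_GRID_DOWNLOAD` string reflects the AWS-hosted S3 bucket that AWS documentation points to for Linux GRID drivers at the time of writing; treat it as an assumption and confirm it in the current AWS docs. Other families, such as G3s, are deliberately left out because the text does not list them.

```python
"""Map an EC2 instance type to the GPU covered by the GRID driver downloads."""
from typing import Optional

# Instance families listed for GRID drivers, and the GPU each one carries.
GRID_GPU_BY_FAMILY = {
    "g5": "NVIDIA A10G",
    "g4dn": "NVIDIA Tesla T4",
    "g3": "NVIDIA Tesla M60",
}

# Linux GRID driver download from the AWS-hosted bucket; verify against current AWS docs.
LINUX_GRID_DOWNLOAD = "aws s3 cp --recursive s3://ec2-linux-nvidia-drivers/latest/ ."


def instance_family(instance_type: str) -> str:
    """'g4dn.xlarge' -> 'g4dn'."""
    return instance_type.lower().split(".", 1)[0]


def grid_gpu_for(instance_type: str) -> Optional[str]:
    """Return the GPU for a GRID-driver instance type, or None if the family is not listed."""
    return GRID_GPU_BY_FAMILY.get(instance_family(instance_type))


def needs_aws_dns(instance_type: str) -> bool:
    """G3 instances need AWS-provided DNS resolution for GRID licensing to work."""
    return instance_family(instance_type) == "g3"


if __name__ == "__main__":
    for itype in ("g5.2xlarge", "g4dn.xlarge", "g3.4xlarge", "p3.2xlarge"):
        print(itype, grid_gpu_for(itype), "needs AWS DNS" if needs_aws_dns(itype) else "")
```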