As artificial intelligence (AI) systems become increasingly complex, understanding their inner workings is crucial for safety, fairness, and transparency. Researchers at MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) have introduced an innovative solution called “MAIA” (Multimodal Automated Interpretability Agent), a system that automates the interpretability of neural networks.

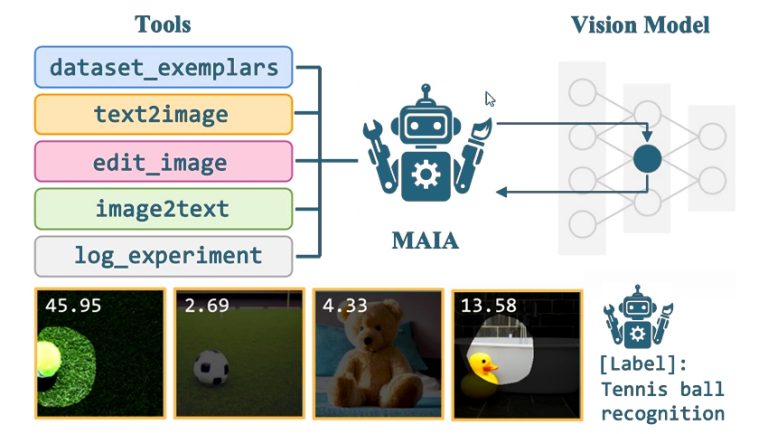

MAIA is designed to tackle the challenge of understanding large and complex AI models. It automates the interpretation of computer vision models that evaluate different properties of images. MAIA pairs a vision-language model backbone with a library of interpretability tools, which lets it conduct experiments on other AI systems.

According to Tamar Rott Shaham, a co-author of the research paper, the team’s goal was to create an AI researcher that can conduct interpretability experiments autonomously. Whereas existing methods merely label or visualize data in a one-shot process, MAIA can generate hypotheses, design experiments to test them, and refine its understanding through iterative analysis.

MAIA’s capabilities are demonstrated in three key tasks:

- Component Labeling: MAIA identifies individual components inside vision models and describes the visual concepts that activate them.

- Model Cleanup: By removing irrelevant features from image classifiers, MAIA makes them more robust in novel situations.

- Bias Detection: MAIA hunts for hidden biases, helping uncover potential fairness issues in AI outputs.

One of MAIA’s notable features is its ability to describe the concepts detected by individual neurons in a vision model. For example, a user might ask MAIA to identify what a specific neuron is detecting. MAIA retrieves “dataset exemplars” from ImageNet that maximally activate the neuron, hypothesizes what drives the neuron’s activity, and designs experiments to test those hypotheses. By generating and editing synthetic images, MAIA can isolate the specific causes of a neuron’s activity, much like a scientist running a controlled experiment.
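The exemplar-then-experiment loop described above can be illustrated with a toy sketch. This is not MAIA’s code: MAIA works on real pixels using a vision-language model and image-editing tools, whereas here an “image” is a hypothetical dictionary of attribute strengths and `stripe_neuron` is an invented neuron whose true trigger is known in advance. The helper names `top_k_exemplars` and `ablation_effect` are likewise illustrative assumptions.

```python
from typing import Callable, Dict, List

# Assumption: an "image" is a dict of attribute strengths standing in for pixels.
Image = Dict[str, float]
Neuron = Callable[[Image], float]


def top_k_exemplars(images: List[Image], neuron: Neuron, k: int) -> List[Image]:
    """Return the k images that activate the neuron most strongly."""
    return sorted(images, key=neuron, reverse=True)[:k]


def ablation_effect(image: Image, neuron: Neuron, attribute: str) -> float:
    """Remove one attribute from a copy of the image and return the activation change."""
    edited = dict(image)
    edited[attribute] = 0.0
    return neuron(edited) - neuron(image)


# Hypothetical neuron: strongly driven by stripes, only weakly by orange color.
def stripe_neuron(img: Image) -> float:
    return 2.0 * img.get("stripes", 0.0) + 0.1 * img.get("orange", 0.0)


dataset = [
    {"stripes": 1.0, "orange": 1.0},  # tiger-like
    {"stripes": 1.0, "orange": 0.0},  # zebra-like
    {"stripes": 0.0, "orange": 1.0},  # orange fruit
    {"stripes": 0.0, "orange": 0.0},  # plain background
]

# Step 1: retrieve exemplars; the top one suggests two hypotheses ("stripes" vs. "orange").
exemplars = top_k_exemplars(dataset, stripe_neuron, k=2)

# Step 2: test each hypothesis by editing the top exemplar and measuring the activation change.
top = exemplars[0]
effects = {attr: ablation_effect(top, stripe_neuron, attr) for attr in ("stripes", "orange")}

# Step 3: the attribute whose removal lowers activation the most is the best explanation.
explanation = min(effects, key=effects.get)
print(explanation)  # stripes
```

The top exemplar is both striped and orange, so inspecting it alone cannot separate the two hypotheses; the targeted edit can. That is the reason for pairing exemplar retrieval with experiments rather than stopping at a one-shot label.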

MAIA’s explanations are evaluated using synthetic systems with known behaviors, as well as new automated protocols for real neurons in trained AI systems. The CSAIL-led method outperformed baseline methods in describing neurons across a variety of vision models, in many cases matching the quality of descriptions written by humans.
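Synthetic neurons make evaluation tractable because the correct answer is planted in advance. The sketch below scores a candidate description by how well it predicts activation: mean activation on probe images that match the description minus mean activation on images that do not. This contrast score, the `synthetic_dog_neuron`, and the probe set are assumptions for illustration, not the paper’s exact evaluation protocol.

```python
from statistics import mean
from typing import Callable, Dict, List

Image = Dict[str, float]  # assumption: attribute strengths stand in for pixels


def description_score(neuron: Callable[[Image], float], attribute: str, probes: List[Image]) -> float:
    """Hypothetical contrast score: mean activation with the attribute minus without it."""
    with_attr = [neuron(img) for img in probes if img.get(attribute, 0.0) > 0.5]
    without_attr = [neuron(img) for img in probes if img.get(attribute, 0.0) <= 0.5]
    if not with_attr or not without_attr:
        raise ValueError("probe set must contain images with and without the attribute")
    return mean(with_attr) - mean(without_attr)


# Synthetic neuron with a known, planted concept: it fires only for dogs.
def synthetic_dog_neuron(img: Image) -> float:
    return 1.0 if img.get("dog", 0.0) > 0.5 else 0.0


probes = [
    {"dog": 1.0, "grass": 1.0},
    {"dog": 1.0, "grass": 0.0},
    {"dog": 0.0, "grass": 1.0},
    {"dog": 0.0, "grass": 0.0},
]

# Compare the correct description ("dog") with a plausible but wrong one ("grass").
scores = {attr: description_score(synthetic_dog_neuron, attr, probes) for attr in ("dog", "grass")}
print(scores)  # {'dog': 1.0, 'grass': 0.0}
```

Balancing the probe set so each attribute appears both with and without the other keeps the co-occurring “grass” from earning credit for the dog neuron’s activity.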

The field of interpretability is evolving alongside the rise of “black box” machine learning models. Current methods are often limited in either scale or precision. The researchers aimed to build a flexible, scalable system that could answer diverse interpretability questions. Bias detection in image classifiers was a critical area of focus. For instance, MAIA identified a bias in a classifier that misclassified images of black Labradors while accurately classifying yellow-furred retrievers.
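At its core, the Labrador example is a comparison of a classifier’s accuracy across subgroups of the same class. The sketch below performs that comparison on invented toy predictions (not data from the study); the record format, the `accuracy_by_group` helper, and the 0.2 gap threshold are all assumptions. Here the subgroups are supplied up front; how MAIA surfaces them is outside the scope of this sketch.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

# (subgroup, true_label, predicted_label); toy records invented for illustration.
Record = Tuple[str, str, str]


def accuracy_by_group(records: List[Record], threshold: float = 0.2) -> Tuple[Dict[str, float], List[str]]:
    """Per-subgroup accuracy, plus subgroups trailing the best one by more than `threshold`."""
    correct: Dict[str, int] = defaultdict(int)
    total: Dict[str, int] = defaultdict(int)
    for group, label, predicted in records:
        total[group] += 1
        correct[group] += int(label == predicted)
    accuracy = {g: correct[g] / total[g] for g in total}
    best = max(accuracy.values())
    flagged = sorted(g for g, acc in accuracy.items() if best - acc > threshold)
    return accuracy, flagged


toy_predictions = [
    ("yellow fur", "labrador retriever", "labrador retriever"),
    ("yellow fur", "labrador retriever", "labrador retriever"),
    ("yellow fur", "labrador retriever", "labrador retriever"),
    ("yellow fur", "labrador retriever", "golden retriever"),
    ("black fur", "labrador retriever", "labrador retriever"),
    ("black fur", "labrador retriever", "flat-coated retriever"),
    ("black fur", "labrador retriever", "flat-coated retriever"),
    ("black fur", "labrador retriever", "flat-coated retriever"),
]

accuracy, flagged = accuracy_by_group(toy_predictions)
print(accuracy)  # {'yellow fur': 0.75, 'black fur': 0.25}
print(flagged)   # ['black fur']
```

A fixed absolute gap is the simplest flagging rule; with real data, subgroup sizes matter, and a small subgroup can show a large gap by chance.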

Despite its strengths, MAIA’s performance is limited by the quality of its external tools. As image synthesis models and other tools improve, so will MAIA’s effectiveness. The researchers also implemented an image-to-text tool to mitigate confirmation bias and overfitting.

Looking ahead, the researchers plan to apply similar experiments to human perception. Traditionally, testing human visual perception has been labor-intensive. With MAIA, this process could be scaled up, potentially allowing comparisons between human and artificial visual perception.

Understanding neural networks is difficult because of their complexity. MAIA helps bridge this gap by automatically analyzing neurons and reporting its findings in a digestible way. Scaling these methods up could be crucial for understanding and overseeing AI systems.

MAIA’s contributions extend beyond academia. As AI becomes integral to more and more domains, interpreting its behavior is essential. By turning model complexity into explanations people can inspect, MAIA helps make AI systems more transparent and accountable. Equipping AI researchers with tools that keep pace with the scaling of AI systems puts us in a better position to understand and address the challenges posed by new AI models.

For more details, see the research paper, which is available on the arXiv preprint server.