Step 1: Understanding the Problem

We need to classify emails as “Spam” or “Not Spam” based on the presence of certain words.

Example Messages:

1. Email 1: “Buy cheap products now!”

2. Email 2: “Exclusive offer just for you.”

3. Email 3: “Meeting at 10 AM tomorrow.”

4. Email 4: “Special discount on cheap products.”
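The four messages can be written down as Python data for experimenting with the steps that follow. The text does not state the labels. This sketch assumes that Emails 1, 2 and 4 (promotional) are Spam and Email 3 is Not Spam. The names EMAILS and LABELS are illustrative.

```python
# Example messages from Step 1.
EMAILS = [
    "Buy cheap products now!",
    "Exclusive offer just for you.",
    "Meeting at 10 AM tomorrow.",
    "Special discount on cheap products.",
]

# Assumed labels: 1 = Spam, 0 = Not Spam (not stated explicitly in the text).
LABELS = [1, 1, 0, 1]

for text, label in zip(EMAILS, LABELS):
    print("Spam    " if label else "Not Spam", "|", text)
```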

Step 2: Preparing the Dataset

First, we need to convert these email messages into a dataset that a machine learning model can understand. This involves several preprocessing steps:

1. Tokenization

2. Stop Word Removal

3. Stemming/Lemmatization

4. Featurization

5. Vectorization

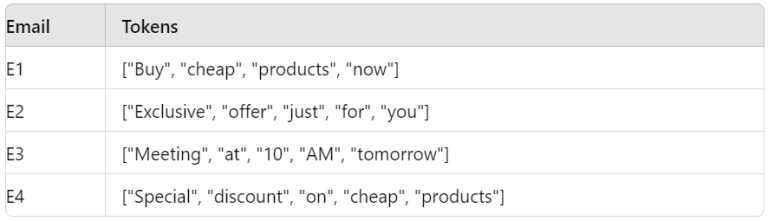

Step 2.1: Tokenization

Tokenization is the process of splitting raw text into individual words or tokens.
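Here is a minimal tokenizer sketch. It assumes that tokenization lowercases the text and keeps runs of letters and digits, found with a simple regular expression. Library tokenizers handle punctuation, contractions and Unicode more carefully. The function name tokenize is illustrative.

```python
import re

def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric tokens."""
    # \w+ matches runs of letters, digits and underscores; punctuation is dropped.
    return re.findall(r"\w+", text.lower())

print(tokenize("Meeting at 10 AM tomorrow."))
# → ['meeting', 'at', '10', 'am', 'tomorrow']
```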

Step 2.2: Stop Word Removal

Stop words are common words, such as “at” and “for”, that add little value to the analysis. We remove them to reduce noise.
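Stop word removal is a simple filter over the token list. The stop word set below is a small hypothetical list, hand-picked to cover the example emails. Libraries such as NLTK ship much longer lists.

```python
# Hypothetical stop word list, chosen to cover the example emails.
STOP_WORDS = {"a", "an", "the", "at", "on", "for", "you", "just", "now", "is", "to"}

def remove_stop_words(tokens: list[str]) -> list[str]:
    """Drop tokens that appear in STOP_WORDS, preserving the original order."""
    return [t for t in tokens if t not in STOP_WORDS]

print(remove_stop_words(["exclusive", "offer", "just", "for", "you"]))
# → ['exclusive', 'offer']
```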

Step 2.3: Stemming/Lemmatization

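Both techniques reduce words to a base or root form. Stemming strips suffixes using heuristic rules, while lemmatization maps each word to its dictionary form (lemma). For example, “products” becomes “product”.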
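To keep the example self-contained, this sketch replaces a real lemmatizer with a tiny hypothetical lookup table. The table covers only the inflected forms needed to match the vocabulary used in this guide: “products” to “product” and “meeting” to “meet”. A real pipeline would call a library stemmer or lemmatizer instead.

```python
# Hypothetical lookup table standing in for a real lemmatizer.
LEMMAS = {"products": "product", "meeting": "meet"}

def lemmatize(tokens: list[str]) -> list[str]:
    """Map each token to its base form; unknown tokens pass through unchanged."""
    return [LEMMAS.get(t, t) for t in tokens]

print(lemmatize(["special", "discount", "cheap", "products"]))
# → ['special', 'discount', 'cheap', 'product']
```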

Step 2.4: Featurization

Transform the words into features that the model can use. Here, we’ll create a binary presence/absence feature for each word in the vocabulary.

Vocabulary:

[“Buy”, “cheap”, “product”, “exclusive”, “offer”, “meet”, “10”, “AM”, “tomorrow”, “special”, “discount”]
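Here is a sketch of the binary presence/absence features over this vocabulary. Matching is case-insensitive, which is an assumption on my part: the vocabulary mixes capitalized entries such as “Buy” and “AM” with lowercase ones. The function name featurize is illustrative.

```python
VOCABULARY = ["Buy", "cheap", "product", "exclusive", "offer", "meet",
              "10", "AM", "tomorrow", "special", "discount"]

def featurize(tokens: list[str]) -> dict[str, int]:
    """Return {vocabulary word: 1 if it occurs in tokens, else 0} (case-insensitive)."""
    present = {t.lower() for t in tokens}
    return {word: int(word.lower() in present) for word in VOCABULARY}

features = featurize(["meet", "10", "am", "tomorrow"])
print([w for w, v in features.items() if v])
# → ['meet', '10', 'AM', 'tomorrow']
```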

Step 2.5: Vectorization

Use a binary vectorizer to transform the tokenized messages into binary vectors that indicate which vocabulary words each message contains.
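Vectorization reads the presence features in vocabulary order, producing one row per email. The token lists below are the assumed preprocessed forms of the four example emails, after lowercasing, stop word removal and lemmatization as described above. For real projects, scikit-learn’s CountVectorizer with binary=True follows the same idea. The function name vectorize is illustrative.

```python
VOCABULARY = ["Buy", "cheap", "product", "exclusive", "offer", "meet",
              "10", "AM", "tomorrow", "special", "discount"]

def vectorize(tokens: list[str]) -> list[int]:
    """Binary vector: position i is 1 if VOCABULARY[i] occurs in tokens (case-insensitive)."""
    present = {t.lower() for t in tokens}
    return [int(word.lower() in present) for word in VOCABULARY]

# Assumed preprocessed tokens for Emails 1-4.
TOKENIZED = [
    ["buy", "cheap", "product"],
    ["exclusive", "offer"],
    ["meet", "10", "am", "tomorrow"],
    ["special", "discount", "cheap", "product"],
]

for i, tokens in enumerate(TOKENIZED, start=1):
    print(f"Email {i}: {vectorize(tokens)}")
# Email 1: [1, 1, 1, 0, 0, 0, 0, 0, 0, 0, 0]
# Email 2: [0, 0, 0, 1, 1, 0, 0, 0, 0, 0, 0]
# Email 3: [0, 0, 0, 0, 0, 1, 1, 1, 1, 0, 0]
# Email 4: [0, 1, 1, 0, 0, 0, 0, 0, 0, 1, 1]
```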

Step 3: Bernoulli Naive Bayes Classifier

We use the Bernoulli distribution because it models each outcome as binary (yes/no): every vocabulary word is either present (1) or absent (0) in the email.
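In symbols, let n be the vocabulary size, x_i ∈ {0, 1} the feature for word i, and p_{i|c} = P(x_i = 1 | c) the probability that word i appears in an email of class c. The naive independence assumption lets the likelihood of an email factor over all features:

```latex
P(\mathbf{x} \mid c) = \prod_{i=1}^{n} p_{i \mid c}^{\,x_i} \, \bigl(1 - p_{i \mid c}\bigr)^{1 - x_i},
\qquad p_{i \mid c} = P(x_i = 1 \mid c)
```

Unlike a multinomial model, the Bernoulli model lets absent words contribute too, through the factor 1 - p_{i|c}.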

Step 3.1: Calculate Probabilities
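The priors are P(c) = N_c / N. The unsmoothed likelihoods are P(x_i = 1 | c) = N_{c,i} / N_c, where N_{c,i} counts the class-c emails that contain word i. The sketch below computes both from the binary vectors of the four example emails. It assumes Emails 1, 2 and 4 are Spam and Email 3 is Not Spam, since the text does not state the labels. The names priors and likelihoods are illustrative.

```python
from fractions import Fraction

VOCABULARY = ["Buy", "cheap", "product", "exclusive", "offer", "meet",
              "10", "AM", "tomorrow", "special", "discount"]

# Binary vectors for Emails 1-4 and their assumed labels (1 = Spam, 0 = Not Spam).
X = [
    [1, 1, 1, 0, 0, 0, 0, 0, 0, 0, 0],
    [0, 0, 0, 1, 1, 0, 0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0, 1, 1, 1, 1, 0, 0],
    [0, 1, 1, 0, 0, 0, 0, 0, 0, 1, 1],
]
y = [1, 1, 0, 1]

def priors(y):
    """P(c) = (number of emails in class c) / (total number of emails)."""
    return {c: Fraction(y.count(c), len(y)) for c in (0, 1)}

def likelihoods(X, y):
    """Unsmoothed P(x_i = 1 | c) = (class-c emails containing word i) / N_c."""
    result = {}
    for c in (0, 1):
        rows = [x for x, label in zip(X, y) if label == c]
        result[c] = [Fraction(sum(col), len(rows)) for col in zip(*rows)]
    return result

print(priors(y))  # {0: Fraction(1, 4), 1: Fraction(3, 4)}
print(likelihoods(X, y)[1][VOCABULARY.index("meet")])  # 0: "meet" never appears in Spam
```

The zero for “meet” under Spam would make any Spam likelihood containing that factor zero. Laplace smoothing is designed to prevent exactly this.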

Step 3.2: Apply Laplace Smoothing

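If a word never appears in one class’s training emails, its estimated probability for that class is zero, and that single factor wipes out the whole product. To avoid this, we use Laplace smoothing: add 1 to each count and adjust the total accordingly. For a present/absent feature, the class total grows by 2, one for each possible outcome.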
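Here is a sketch of the smoothed estimate P(x_i = 1 | c) = (N_{c,i} + 1) / (N_c + 2), which matches the alpha = 1 setting of scikit-learn’s BernoulliNB. It assumes Emails 1, 2 and 4 are labeled Spam and Email 3 Not Spam. The function name smoothed_likelihoods is illustrative.

```python
from fractions import Fraction

# Binary vectors for Emails 1-4 (vocabulary order: Buy, cheap, product, exclusive,
# offer, meet, 10, AM, tomorrow, special, discount) and assumed labels (1 = Spam).
X = [
    [1, 1, 1, 0, 0, 0, 0, 0, 0, 0, 0],
    [0, 0, 0, 1, 1, 0, 0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0, 1, 1, 1, 1, 0, 0],
    [0, 1, 1, 0, 0, 0, 0, 0, 0, 1, 1],
]
y = [1, 1, 0, 1]

def smoothed_likelihoods(X, y, alpha=1):
    """Laplace-smoothed P(x_i = 1 | c) = (N_ci + alpha) / (N_c + 2 * alpha)."""
    result = {}
    for c in (0, 1):
        rows = [x for x, label in zip(X, y) if label == c]
        # Each binary feature has two outcomes, so the class total grows by 2 * alpha.
        result[c] = [Fraction(sum(col) + alpha, len(rows) + 2 * alpha)
                     for col in zip(*rows)]
    return result

probs = smoothed_likelihoods(X, y)
print("Spam:    ", [str(p) for p in probs[1]])
print("Not Spam:", [str(p) for p in probs[0]])
# Spam:     ['2/5', '3/5', '3/5', '2/5', '2/5', '1/5', '1/5', '1/5', '1/5', '2/5', '2/5']
# Not Spam: ['1/3', '1/3', '1/3', '1/3', '1/3', '2/3', '2/3', '2/3', '2/3', '1/3', '1/3']
```

Every probability is now strictly between 0 and 1. For example, “meet” under Spam becomes 1/5 instead of 0.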

Step 4: Classify a New Email

Let’s classify a new email with the features “Buy”, “Cheap”, and “Exclusive”.

Feature Vector: Email = [1, 1, 0, 1, 0, 0, 0, 0, 0, 0, 0] (“Buy”, “cheap” and “exclusive” are present; every other vocabulary word is absent)

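Calculate Posterior Probabilities:

By Bayes’ theorem, P(c | Email) = P(c) × P(Email | c) / P(Email) for each class c ∈ {Spam, Not Spam}. The evidence P(Email) is the same for both classes, so the class with the larger product P(c) × P(Email | c) wins.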
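The sketch below computes the posterior for the new email. It multiplies the prior by the Bernoulli factor of every vocabulary feature (p for present words, 1 - p for absent ones), then normalizes over the two classes. It assumes Emails 1, 2 and 4 are labeled Spam and Email 3 Not Spam, and applies Laplace smoothing with alpha = 1. The names fit and posterior are illustrative. Because the labels and preprocessing here are assumptions, the exact probabilities it prints may differ from the figure given in Step 5.

```python
from fractions import Fraction

VOCABULARY = ["Buy", "cheap", "product", "exclusive", "offer", "meet",
              "10", "AM", "tomorrow", "special", "discount"]

# Binary vectors for Emails 1-4 and assumed labels (1 = Spam, 0 = Not Spam).
X = [
    [1, 1, 1, 0, 0, 0, 0, 0, 0, 0, 0],
    [0, 0, 0, 1, 1, 0, 0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0, 1, 1, 1, 1, 0, 0],
    [0, 1, 1, 0, 0, 0, 0, 0, 0, 1, 1],
]
y = [1, 1, 0, 1]

def fit(X, y, alpha=1):
    """Return priors P(c) and Laplace-smoothed P(x_i = 1 | c) for c in {0, 1}."""
    priors, probs = {}, {}
    for c in (0, 1):
        rows = [x for x, label in zip(X, y) if label == c]
        priors[c] = Fraction(len(rows), len(X))
        probs[c] = [Fraction(sum(col) + alpha, len(rows) + 2 * alpha)
                    for col in zip(*rows)]
    return priors, probs

def posterior(x, priors, probs):
    """Normalized P(c | x) using the Bernoulli likelihood of every feature."""
    joint = {}
    for c in (0, 1):
        score = priors[c]
        for xi, p in zip(x, probs[c]):
            score *= p if xi else 1 - p  # absent words contribute (1 - p)
        joint[c] = score
    evidence = sum(joint.values())  # P(Email), identical for both classes
    return {c: joint[c] / evidence for c in joint}

priors, probs = fit(X, y)
# New email containing "Buy", "cheap" and "exclusive".
new_email = [int(w in {"Buy", "cheap", "exclusive"}) for w in VOCABULARY]
post = posterior(new_email, priors, probs)
print(new_email)
print({("Spam" if c else "Not Spam"): round(float(p), 4) for c, p in post.items()})
print("Prediction:", "Spam" if post[1] > post[0] else "Not Spam")
```

Exact fractions keep the arithmetic transparent for a toy example. With a realistic vocabulary, summing log-probabilities avoids floating-point underflow.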

Step 5: Conclusion

We classify the email as Spam because Spam has the higher posterior score (40%).

Summary

Naive Bayes is a simple yet powerful classification algorithm. It works well for spam detection and other text classification tasks. By understanding the underlying concepts, such as tokenization, stop word removal, stemming/lemmatization, featurization, vectorization, the Bernoulli distribution, prior, likelihood, evidence, posterior, and Laplace smoothing, we can apply Naive Bayes effectively to a variety of classification problems.

This step-by-step guide shows how to preprocess text data and apply the Naive Bayes algorithm to spam detection.