Context: As deep learning models grow in complexity and size, efficient training methods have become essential, especially in applications such as wildfire risk prediction, where large datasets and intricate models are standard.

Problem: Traditional data parallelism replicates the full model on every GPU, so it needs to be rethought for training large-scale models: high memory consumption and limited scalability hinder the development of more accurate predictive models for wildfire risk.

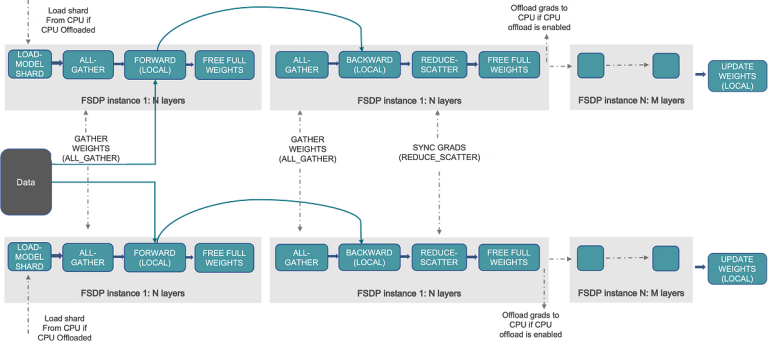

Approach: This essay explores the implementation of Fully Sharded Data Parallel (FSDP) in PyTorch. This technique shards model parameters and optimizer states across multiple GPUs, reducing memory overhead and improving scalability. A synthetic wildfire dataset is created and used to demonstrate the application of FSDP in training a neural network model.
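To make the memory argument concrete, here is a rough back-of-the-envelope sketch in plain Python. It is not taken from the essay's code: the function name `per_gpu_state_bytes`, the one-billion-parameter model, and the byte counts (fp32 parameters and gradients plus two fp32 Adam moments, 16 bytes per parameter) are illustrative assumptions. It also assumes gradients are sharded along with parameters and optimizer states, and it ignores activations and the temporary buffers used when shards are gathered.

```python
# Rough estimate of per-GPU memory for model state only.
# Assumptions (illustrative, not from the essay): fp32 parameters (4 bytes),
# fp32 gradients (4 bytes), and Adam optimizer state (2 x 4 bytes) per parameter.
# Activations and temporary communication buffers are ignored.

GIB = 1024 ** 3


def per_gpu_state_bytes(num_params, num_gpus, sharded,
                        bytes_param=4, bytes_grad=4, bytes_optim=8):
    """Return the approximate bytes of model state held by each GPU."""
    total = num_params * (bytes_param + bytes_grad + bytes_optim)
    # Replicated data parallelism keeps a full copy on every GPU;
    # full sharding splits the same state evenly across GPUs.
    return total / num_gpus if sharded else total


if __name__ == "__main__":
    num_params = 1_000_000_000  # hypothetical model size
    for num_gpus in (1, 4, 8):
        replicated = per_gpu_state_bytes(num_params, num_gpus, sharded=False)
        sharded = per_gpu_state_bytes(num_params, num_gpus, sharded=True)
        print(f"{num_gpus} GPU(s): replicated {replicated / GIB:.1f} GiB, "
              f"sharded {sharded / GIB:.1f} GiB per GPU")
```

Under these assumptions the replicated footprint stays constant as GPUs are added, while the sharded footprint shrinks in proportion to the GPU count, which is the scalability benefit described above.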

Results: The FSDP-enabled model training shows significant improvements in memory efficiency and scalability, allowing for the training of larger models. Evaluation metrics such as Mean Squared Error (MSE) and R-squared (R²) indicate strong model performance, and visualization techniques confirm the model's predictive accuracy.
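For readers unfamiliar with the two metrics, the following minimal sketch computes them from their standard definitions in plain Python. The helper names and the sample values are hypothetical and are not results from the essay; in practice a library routine would normally be used instead.

```python
# Minimal reference implementations of MSE and R-squared.
# The sample values below are made up for illustration only.


def mean_squared_error(y_true, y_pred):
    """Average of squared differences between targets and predictions."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def r_squared(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    mean_true = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    if ss_tot == 0:
        raise ValueError("R-squared is undefined when all targets are equal")
    return 1.0 - ss_res / ss_tot


if __name__ == "__main__":
    risk_true = [0.1, 0.4, 0.7, 0.9]   # hypothetical wildfire risk scores
    risk_pred = [0.2, 0.4, 0.6, 0.8]   # hypothetical model predictions
    print("MSE:", mean_squared_error(risk_true, risk_pred))
    print("R^2:", r_squared(risk_true, risk_pred))
```

A lower MSE means predictions sit closer to the targets, while an R² near 1 means the model explains most of the variance in the targets.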

Conclusions: Fully Sharded Data Parallel (FSDP) in PyTorch is an effective solution for training large-scale deep learning models. By optimizing memory usage and enhancing scalability, FSDP facilitates the development of more complex and…