Overview of project:

In this project, we aim to build an ML model that takes specific information about patients and predicts the cost of their medical insurance. The model will be trained on a dataset of patient records. The dataset contains 1338 patient entries and the following columns:

- age

- sex (gender)

- bmi

- children

- smoker

- region

- charges (cost), i.e. our target variable

Here is the link to the dataset: https://www.kaggle.com/datasets/mirichoi0218/insurance . After successfully training the model, we’ll evaluate it using the R squared score.

Importing the Dependencies

The first step is to import all the needed libraries. We can check the official documentation of the libraries with a quick Google search.

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

import seaborn as sns

from sklearn.model_selection import train_test_split

from sklearn.linear_model import LinearRegression

from sklearn import metrics

Data Pre-processing

#Loading the data from csv file to a pandas dataframe

insurance_dataset = pd.read_csv('data/insurance.csv')

#print the first 5 rows

insurance_dataset.head()

#checking for information about the dataset

insurance_dataset.info()

We have 0 null values in the dataframe.
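A more direct way to confirm that nothing is missing is to count the null cells per column. The sketch below uses a small made-up frame (`toy`, a hypothetical stand-in, not the insurance data) so that it runs on its own; on the real data the equivalent call would be `insurance_dataset.isnull().sum()`.

```python
import numpy as np
import pandas as pd

# Hypothetical toy frame standing in for the insurance data;
# one bmi value is missing on purpose
toy = pd.DataFrame({
    "age": [19, 18, 28],
    "bmi": [27.9, np.nan, 33.0],
    "smoker": ["yes", "no", "no"],
})

# isnull() marks missing cells as True; sum() counts them per column
null_counts = toy.isnull().sum()
print(null_counts)
```

A column with a non-zero count would need to be cleaned or imputed before training.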

Data Visualization

In the previous project, we didn’t plot any graphs since we weren’t dealing with a numerical dataset. We’ll use the seaborn and matplotlib libraries to plot the graphs.

#distribution of age values

plt.figure(figsize=(3,3))

#histplot with kde=True stands in for distplot, which is deprecated in recent seaborn versions

sns.histplot(insurance_dataset['age'], kde=True)

plt.title('Age Distribution')

#distribution of gender

plt.figure(figsize=(5,5))

sns.countplot(data=insurance_dataset, x='sex', hue='sex')

plt.title('Gender Distribution')

The graph doesn’t really tell us the exact numbers of males and females, so we’ll run some code to count them.

#counting the values

insurance_dataset['sex'].value_counts()

The output is:

#distribution of smokers

plt.figure(figsize=(5,5))

sns.countplot(data=insurance_dataset, x='smoker', hue='smoker')

We also want to know the exact number of smokers and non-smokers.

#counting the values

insurance_dataset['smoker'].value_counts()

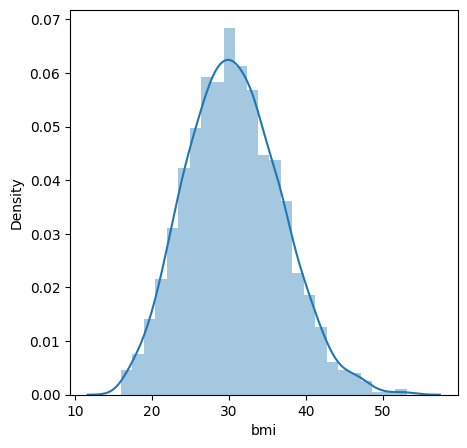

#checking the BMI distribution

plt.figure(figsize=(5,5))

sns.histplot(insurance_dataset['bmi'], kde=True)

Normal BMI range -> 18.5–24.9
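To put a number on how many patients fall inside that normal range, the values can be tested against the two bounds. This is a minimal sketch on made-up BMI values (the `bmi` series here is hypothetical); with the real data the series would be `insurance_dataset['bmi']`.

```python
import pandas as pd

# Hypothetical sample of BMI values, not taken from the dataset
bmi = pd.Series([17.0, 22.5, 24.9, 27.9, 33.8])

# between() is inclusive on both ends by default
in_normal_range = bmi.between(18.5, 24.9)

# The mean of a boolean series is the share of True values
share_normal = in_normal_range.mean()
print(in_normal_range.sum(), "of", len(bmi), "values are in the normal range")
```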

#distribution of children

plt.figure(figsize=(5,5))

sns.countplot(data=insurance_dataset, x='children', hue='children')

#counting the children column distribution

insurance_dataset['children'].value_counts()

#counting the region column distribution

insurance_dataset['region'].value_counts()

The regions have really close numbers, so we won’t be plotting them on a graph because the graph wouldn’t show us much.

And for the final column, the charges column:

#charges column distribution

plt.figure(figsize=(7,7))

sns.histplot(insurance_dataset['charges'], kde=True)

Label Encoding

Here, we want to convert the categorical features (sex, smoker and region) into numerical values.

#encoding the sex column

insurance_dataset.replace({'sex':{'male':0, 'female':1}}, inplace=True)

#encoding the smoker column

insurance_dataset.replace({'smoker':{'yes':0, 'no':1}}, inplace=True)

#encoding the region column

insurance_dataset.replace({'region':{'southeast':0, 'southwest':1, 'northeast':2, 'northwest':3}}, inplace=True)

The above lines of code change male and female in the sex column to 0 and 1 respectively, yes and no in the smoker column to 0 and 1, and southeast, southwest, northeast and northwest in the region column to 0, 1, 2 and 3 respectively.
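The same encoding can also be written with `Series.map`, which returns a new column instead of changing the dataframe in place. The sketch below is one possible variant, not the method used in this post; the `toy` frame and the `codes` dictionary are hypothetical names, and the rows are made up.

```python
import pandas as pd

# Hypothetical toy rows with the same categorical columns as the dataset
toy = pd.DataFrame({
    "sex": ["female", "male", "male"],
    "smoker": ["yes", "no", "no"],
    "region": ["southwest", "southeast", "northwest"],
})

# Same label-to-number assignments as described above
codes = {
    "sex": {"male": 0, "female": 1},
    "smoker": {"yes": 0, "no": 1},
    "region": {"southeast": 0, "southwest": 1, "northeast": 2, "northwest": 3},
}

encoded = toy.copy()
for column, mapping in codes.items():
    # map() yields NaN for any label missing from the mapping,
    # which makes a misspelled category easy to spot
    encoded[column] = toy[column].map(mapping)

print(encoded)
```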

Now, we split the dataframe into features and target variables.

#splitting into features and target variables

x = insurance_dataset.drop(columns='charges')

y = insurance_dataset['charges']

Model Training and Evaluation

#splitting training and test data

x_train, x_test, y_train, y_test = train_test_split(x,y, test_size=0.2, random_state=3)

We have split the variables into test and train sets. I didn’t explain the following before, so I will now.

test_size = 0.2 → this splits the data so that the test set is 20% of the dataset and the train set is the remaining 80%.

random_state = 3 → This makes the split reproducible: if another person runs it with the same dataset, the same test size and the same random_state, they will get the same result.
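Both parameters can be checked on a tiny example. The sketch below uses made-up arrays (`x_demo` and `y_demo` are hypothetical, not the insurance data) and splits them twice with the same settings.

```python
import numpy as np
from sklearn.model_selection import train_test_split

# Hypothetical data: 10 rows, 2 feature columns
x_demo = np.arange(20).reshape(10, 2)
y_demo = np.arange(10)

# Two separate calls with the same test_size and random_state
a_train, a_test, _, _ = train_test_split(x_demo, y_demo, test_size=0.2, random_state=3)
b_train, b_test, _, _ = train_test_split(x_demo, y_demo, test_size=0.2, random_state=3)

# test_size=0.2 on 10 rows leaves 8 rows for training and 2 for testing
print(len(a_train), len(a_test))

# The same random_state selects the same rows both times
print(np.array_equal(a_test, b_test))
```

Changing `random_state` to another number would, in general, put different rows in the test set.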

#loading linear regression and fitting

mannequin = LinearRegression()

mannequin.fit(x_train, y_train)

After fitting the model to our training data, we’ll evaluate it using the R-squared score. R squared gives the proportion of the variability in the target variable that is explained by the model, i.e. by the independent variables. If this value is 0.7, it means that the independent variables explain 70% of the variation in the target variable.
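To make the definition concrete, R squared can be computed by hand as 1 minus the ratio of the residual sum of squares to the total sum of squares of the true values. The sketch below is a plain-Python illustration on made-up numbers; `r_squared` is a hypothetical helper written for this example, while scikit-learn's `metrics.r2_score` takes its arguments in the same order (true values first, predictions second).

```python
def r_squared(y_true, y_pred):
    """R^2 = 1 - SS_res / SS_tot, with the true values as the reference."""
    mean_true = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    return 1 - ss_res / ss_tot

# Hypothetical values, not taken from the insurance data
actual = [1.0, 2.0, 3.0, 4.0]
predicted = [1.5, 2.0, 3.0, 3.5]

score = r_squared(actual, predicted)    # about 0.9
swapped = r_squared(predicted, actual)  # about 0.8

print(score)
print(swapped)
```

The two results differ because the total sum of squares is taken around the mean of whichever list is passed first, so the order of the arguments matters.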

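#model evaluation on training data

train_data_predction = mannequin.predict(x_train)

#R-squared score (r2_score expects the true values first, then the predictions)

error_score = metrics.r2_score(y_train, train_data_predction)

print("R squared score is : ", error_score)

R squared score is : 0.6717962504722256

The value shown was recorded with the two arguments to r2_score in the opposite order (predictions first). Since R squared is not symmetric in its arguments, the call above may print a different number. Taken at face value, it means that the independent variables explain about 67% of the variation in the target variable.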

#Actual prices vs predicted prices graph

plt.figure(figsize=(5,5))

plt.scatter(y_train, train_data_predction)

plt.xlabel('Actual prices')

plt.ylabel('Predicted prices')

plt.title('Actual prices vs predicted prices')

From the graph above, our predicted values for the training data aren’t that far from the actual values, so our prediction on the training data has done well.

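#model evaluation on test data

test_data_predction = mannequin.predict(x_test)

#R-squared score (r2_score expects the true values first, then the predictions)

error_score = metrics.r2_score(y_test, test_data_predction)

print("R squared score is : ", error_score)

R squared score is : 0.659796237277297

As with the training data, this value was recorded with the arguments to r2_score in the opposite order, so the call above may print a different number. Taken at face value, it means that the independent variables explain about 66% of the variation in the target variable.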

#Actual prices vs predicted prices graph

plt.figure(figsize=(5,5))

plt.scatter(y_test, test_data_predction)

plt.xlabel('Actual prices')

plt.ylabel('Predicted prices')

plt.title('Actual prices vs predicted prices')

From the graph above, as with our training data, our predicted values for the test data aren’t that far from the actual values, so our prediction on the test data has also done well.

Building a predictive system

#values follow the feature order: age, sex, bmi, children, smoker, region

input_data = (19,1,27.900,0,0,1)

#changing input data to numpy array

input_data_np = np.asarray(input_data)

#reshape array

input_reshaped = input_data_np.reshape(1,-1)

prediction = mannequin.predict(input_reshaped)

print('The insurance cost is USD', prediction)

The insurance cost is USD [25236.68461814]

First, we gave the system a random patient’s information, then we changed it to a numpy array and reshaped the array to allow prediction by the model. The system predicted that a patient with that information will have an insurance cost of $25,237.
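Because the model was fitted on a dataframe, scikit-learn may warn about missing feature names when it is later given a bare numpy array. One way around this is to pass the patient as a one-row dataframe with the same column names. The sketch below illustrates the idea on a stand-in model trained on made-up numbers (`toy_x`, `toy_y` and `toy_model` are hypothetical and unrelated to the results above); only the column layout mirrors the dataset.

```python
import numpy as np
import pandas as pd
from sklearn.linear_model import LinearRegression

feature_names = ["age", "sex", "bmi", "children", "smoker", "region"]

# Hypothetical, made-up training rows; the target is an arbitrary linear formula
rng = np.random.default_rng(0)
toy_x = pd.DataFrame(rng.random((20, 6)), columns=feature_names)
toy_y = toy_x["age"] * 100 + toy_x["bmi"] * 50

toy_model = LinearRegression().fit(toy_x, toy_y)

# One patient as a one-row DataFrame, so the column names travel with the values
patient = pd.DataFrame([(19, 1, 27.9, 0, 0, 1)], columns=feature_names)
predicted_cost = toy_model.predict(patient)

# predict() returns one value per input row; index 0 is our single patient
print(f"Predicted cost: {predicted_cost[0]:.2f}")
```

Indexing the result with `[0]` also prints a plain number rather than the bracketed array seen in the output above.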

This brings us to the end of the second Supervised Learning project, and we can move on to the final project, on Unsupervised Learning.