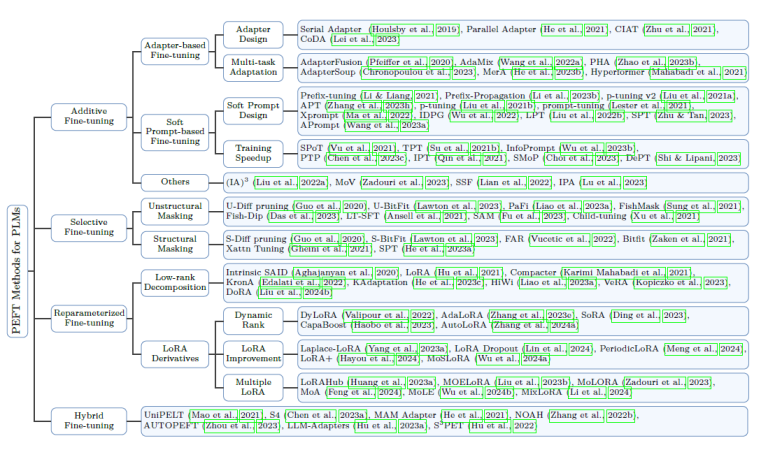

So, you’re probably wondering how PEFT pulls off its magic. Let’s dive into the four main strategies under the PEFT umbrella:

Additive PEFT

Additive PEFT methods are like adding new ingredients to a recipe without altering the core dish. They introduce new parameters or modules into the model while keeping the pre-trained backbone mostly unchanged. This approach cuts down on the need for full model fine-tuning, saving a ton of computational and memory resources.

Methodology: Adapters

Adapters are designed to fine-tune large models with minimal fuss by inserting small, trainable modules inside the pre-trained model. These layers typically have down-projection and up-projection matrices with a non-linear activation function sandwiched in between. The goal here is to keep the original model’s weights intact while learning new, task-specific parameters through these added modules.
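To make the down-projection, activation, up-projection structure concrete, here is a minimal PyTorch sketch of a bottleneck adapter. The class name BottleneckAdapter, the residual connection, the ReLU activation, the zero initialization of the up-projection, and the toy dimensions are all assumptions chosen for illustration; they are not taken from any particular library.

```python
import torch
import torch.nn as nn


class BottleneckAdapter(nn.Module):
    """Down-projection -> non-linearity -> up-projection, plus a residual connection."""

    def __init__(self, hidden_dim: int, bottleneck_dim: int):
        super().__init__()
        self.down = nn.Linear(hidden_dim, bottleneck_dim)
        self.act = nn.ReLU()
        self.up = nn.Linear(bottleneck_dim, hidden_dim)
        # Assumption: zero-init the up-projection so the adapter starts as an identity map.
        nn.init.zeros_(self.up.weight)
        nn.init.zeros_(self.up.bias)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        return x + self.up(self.act(self.down(x)))


torch.manual_seed(0)
frozen_layer = nn.Linear(64, 64)      # toy stand-in for a pre-trained sub-layer
for p in frozen_layer.parameters():
    p.requires_grad = False           # the backbone stays frozen

adapter = BottleneckAdapter(hidden_dim=64, bottleneck_dim=8)

x = torch.randn(2, 10, 64)            # (batch, sequence, hidden)
h = frozen_layer(x)
out = adapter(h)                      # same shape as h; equal to h at initialization

adapter_params = sum(p.numel() for p in adapter.parameters())
frozen_params = sum(p.numel() for p in frozen_layer.parameters())
print(f"adapter parameters: {adapter_params}, frozen parameters: {frozen_params}")
```

Only the adapter’s parameters would be handed to the optimizer, which is where the savings come from: the bottleneck keeps the trainable parameter count small relative to the layer it sits behind.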

There are different kinds of adapters, including the Serial Adapter, which places adapter layers after the self-attention and feed-forward layers in each transformer block. Additionally, there are sparse and computationally efficient adapters, like AdapterFusion and CoDA, which improve inference efficiency by optimizing the adapter layer operations.

Figure: […] while yellow represents trainable. (credit: arxiv5)

You can implement adapters using the Adapters library, which builds on Hugging Face Transformers. Here’s a quick example to get you started. The Hugging Face documentation provides a detailed guide for using Adapters, which includes the following steps:

1. Load a base transformers model with the AutoAdapterModel class provided by Adapters.
2. Use the load_adapter() method to load and add an adapter.
3. Activate the adapter via active_adapters (for inference), or activate it and set it as trainable via train_adapter() (for training).

Make sure to also check out composition of adapters.

    from adapters import AutoAdapterModel

    # 1. Load a base model with the AutoAdapterModel class
    model = AutoAdapterModel.from_pretrained("FacebookAI/roberta-base")

    # 2. Load and add a pre-trained adapter
    adapter_name = model.load_adapter("AdapterHub/roberta-base-pf-imdb")

    # 3. Activate the adapter for inference
    model.active_adapters = adapter_name
    # or, for training: model.train_adapter(adapter_name)

More details can be found in the Hugging Face documentation.

Methodology: Soft Prompting

Soft prompt tuning is a fascinating approach that involves prepending learnable vectors, known as soft prompts, to the input sequence. These vectors are fine-tuned while keeping the rest of the model unchanged. This technique leverages the pre-trained model’s knowledge and adjusts the input embeddings to better suit specific tasks. The primary goal of soft prompt tuning is to improve task-specific performance by optimizing the input representations.

One key technique in this approach is prefix-tuning, which adds learnable vectors (prefixes) to the keys and values across all transformer layers. This method ensures stability by using a multi-layer perceptron (MLP) reparameterization strategy. There are also various prompt-tuning variants, such as p-tuning v2 and adaptive prefix-tuning (APT), which adaptively generate or optimize these soft prompts. An implementation of soft prompt tuning can be seen in the following example, where soft prompts are concatenated with the input embeddings to modify the input sequence:

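    def soft_prompted_model(input_ids):
        x = Embed(input_ids)                    # embed the input tokens
        x = concat([soft_prompt, x], dim=seq)   # put the trainable soft prompt in front
        return model(x)                         # run the frozen model on the longer sequence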

Here, soft_prompt has the same feature dimension as the embedded inputs produced by the embedding layer. Consequently, the modified input matrix extends the original input sequence with additional tokens, making it longer. For more details, you can refer to the Google Research Prompt Tuning implementation GitHub repository. This repository contains the original implementation of prompt tuning, as described in “The Power of Scale for Parameter-Efficient Prompt Tuning”. The method uses a prompt module to generate prompt parameters, which are added to the embedded input instead of using special virtual tokens with updatable embeddings. You can find the core implementation in the prompts.py file, designed for flexibility and ease of use.
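The shape bookkeeping described above can be checked with a small runnable sketch. Everything here is a toy assumption made for illustration: the class name SoftPromptedModel, the helper extend_inputs, the mean-pooling linear "backbone", and the sizes. It is not the Google Research implementation.

```python
import torch
import torch.nn as nn


class SoftPromptedModel(nn.Module):
    def __init__(self, vocab_size, hidden_dim, num_prompt_tokens, num_classes):
        super().__init__()
        # Toy stand-ins for the frozen pre-trained parts.
        self.embed = nn.Embedding(vocab_size, hidden_dim)
        self.backbone = nn.Linear(hidden_dim, num_classes)
        for p in self.parameters():
            p.requires_grad = False
        # The only trainable tensor: one vector per virtual prompt token.
        self.soft_prompt = nn.Parameter(torch.randn(num_prompt_tokens, hidden_dim) * 0.02)

    def extend_inputs(self, input_ids):
        x = self.embed(input_ids)                                  # (batch, seq, hidden)
        prompt = self.soft_prompt.unsqueeze(0).expand(x.size(0), -1, -1)
        return torch.cat([prompt, x], dim=1)                       # (batch, prompt + seq, hidden)

    def forward(self, input_ids):
        x = self.extend_inputs(input_ids)
        return self.backbone(x.mean(dim=1))                        # toy pooling + classifier


torch.manual_seed(0)
model = SoftPromptedModel(vocab_size=100, hidden_dim=16, num_prompt_tokens=5, num_classes=2)
input_ids = torch.randint(0, 100, (3, 7))

extended = model.extend_inputs(input_ids)
logits = model(input_ids)
trainable = [name for name, p in model.named_parameters() if p.requires_grad]
print(extended.shape, logits.shape, trainable)
```

A sequence of 7 tokens becomes 12 positions once the 5 prompt vectors are placed in front, and the soft prompt is the only parameter left trainable.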

Methodology: (IA)³

In addition to soft prompt tuning, there are other additive methods worth noting. One such method is (IA)³, which introduces learnable rescaling vectors to rescale activations in the key, value, and feed-forward network modules. The Hugging Face documentation provides a comprehensive guide on how to implement and use the (IA)³ method, including parameter settings and usage examples. Here’s an example of how to configure and use IA3:

    from peft import IA3Config, get_peft_model, TaskType

    # Define the IA3 configuration
    peft_config = IA3Config(
        task_type=TaskType.SEQ_CLS,
        target_modules=["k_proj", "v_proj", "down_proj"],
        feedforward_modules=["down_proj"],
    )

    # Wrap the base model with IA3
    # (base_model is assumed to be an already loaded transformers model
    # whose layers use the module names listed above)
    model = get_peft_model(base_model, peft_config)

    # Switch to training mode before running your fine-tuning loop
    model.train()
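To show what a learnable rescaling vector does under the hood, here is a minimal plain-PyTorch sketch. The wrapper name IA3Linear is hypothetical, and the sketch only covers the key/value-style case in which a layer’s output is rescaled; it is not how the PEFT library implements IA3.

```python
import torch
import torch.nn as nn


class IA3Linear(nn.Module):
    """Wraps a frozen linear layer and rescales its output with a learned vector."""

    def __init__(self, base: nn.Linear):
        super().__init__()
        self.base = base
        for p in self.base.parameters():
            p.requires_grad = False
        # Initialized to ones, so the wrapped layer starts out identical to the original.
        self.scale = nn.Parameter(torch.ones(base.out_features))

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        return self.base(x) * self.scale


torch.manual_seed(0)
k_proj = nn.Linear(32, 32)            # toy stand-in for a key projection
wrapped = IA3Linear(k_proj)

x = torch.randn(4, 6, 32)
same_at_init = torch.allclose(wrapped(x), k_proj(x))
trainable = sum(p.numel() for p in wrapped.parameters() if p.requires_grad)
print(same_at_init, trainable)
```

Each wrapped layer adds only one vector of trainable values (32 in this toy case), which is why this family of methods is so light on parameters.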

Methodology: SSF (Scale-Shift Fine-tuning)

Another notable method is Scale-Shift Fine-tuning (SSF), which applies scaling and shifting transformations to model activations after major operations like multi-head self-attention and feed-forward networks. This method defines scale and shift parameters that are fine-tuned to adjust the model’s features. Here is an example implementation:

    import torch
    import torch.nn as nn

    # Define the scale and shift parameters
    # (hidden_dim, model, train_loader, criterion, and optimizer are assumed to be
    # defined elsewhere; the optimizer should cover only scale_params and shift_params)
    scale_params = nn.Parameter(torch.ones(hidden_dim))
    shift_params = nn.Parameter(torch.zeros(hidden_dim))

    # Apply the scale and shift to the model's features
    def apply_ssf(features):
        return scale_params * features + shift_params

    # Fine-tune the model by only updating the scale and shift parameters
    for data, target in train_loader:
        optimizer.zero_grad()
        features = model.extract_features(data)
        modulated_features = apply_ssf(features)
        output = model.classifier(modulated_features)
        loss = criterion(output, target)
        loss.backward()
        optimizer.step()

The paper “Scaling & Shifting Your Features: A New Baseline for Efficient Model Tuning” provides an in-depth explanation and performance analysis of SSF across various datasets and model architectures. The official code implementation can be found on GitHub, offering valuable resources for implementing this efficient tuning method.
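For readers who want something that runs end to end, here is a self-contained toy version of the same idea. The linear "backbone" and "classifier", the random data, the SSF class name, and the hyperparameters are assumptions made so the example is runnable; this is not the official SSF code.

```python
import torch
import torch.nn as nn


class SSF(nn.Module):
    """Per-feature scale and shift, initialized to the identity transform."""

    def __init__(self, hidden_dim: int):
        super().__init__()
        self.scale = nn.Parameter(torch.ones(hidden_dim))
        self.shift = nn.Parameter(torch.zeros(hidden_dim))

    def forward(self, features: torch.Tensor) -> torch.Tensor:
        return features * self.scale + self.shift


torch.manual_seed(0)
backbone = nn.Linear(20, 16)       # toy frozen feature extractor
classifier = nn.Linear(16, 3)      # toy frozen head
for p in list(backbone.parameters()) + list(classifier.parameters()):
    p.requires_grad = False
ssf = SSF(16)

backbone_before = backbone.weight.clone()
optimizer = torch.optim.SGD(ssf.parameters(), lr=0.1)   # only scale and shift are optimized
criterion = nn.CrossEntropyLoss()
data = torch.randn(32, 20)
target = torch.randint(0, 3, (32,))

losses = []
for _ in range(20):
    optimizer.zero_grad()
    output = classifier(ssf(backbone(data)))
    loss = criterion(output, target)
    loss.backward()
    optimizer.step()
    losses.append(loss.item())

print(f"first loss: {losses[0]:.4f}, last loss: {losses[-1]:.4f}")
```

The backbone weights are untouched after training; all of the adaptation lives in the 32 scale and shift values.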

Selective PEFT

Selective PEFT focuses on fine-tuning only a subset of the existing parameters within a model, identified as crucial for the target task, while keeping the rest of the parameters frozen. This approach improves efficiency by avoiding the need for full model retraining and concentrating resources on the most impactful parameters.

Methodology: Unstructured Parameter Masking

One key technique in selective PEFT is unstructured parameter masking. This method involves fine-tuning a small subset of model parameters deemed important for the specific task. The selection of these parameters is typically based on their importance, measured by metrics such as Fisher information. By updating only the most critical parameters, the model can adapt to new tasks without requiring a complete retraining. Various methods, such as Diff pruning, PaFi, FishMask, and Child-tuning, dynamically select parameters based on different importance metrics like Fisher information or magnitude.
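As a rough illustration of importance-based masking, the sketch below scores each parameter by its average squared gradient (an empirical, diagonal approximation of Fisher information), keeps the highest-scoring entries, and zeroes the gradients of everything else during training. The toy model, the random data, and the keep_fraction budget are assumptions; this is a simplified sketch in the spirit of these methods, not a reproduction of any one of them.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
model = nn.Sequential(nn.Linear(10, 16), nn.ReLU(), nn.Linear(16, 2))
criterion = nn.CrossEntropyLoss()
data = torch.randn(64, 10)
target = torch.randint(0, 2, (64,))

# 1. Estimate importance as the average squared per-example gradient.
fisher = {name: torch.zeros_like(p) for name, p in model.named_parameters()}
for x, y in zip(data, target):
    model.zero_grad()
    criterion(model(x.unsqueeze(0)), y.unsqueeze(0)).backward()
    for name, p in model.named_parameters():
        fisher[name] += p.grad.detach() ** 2 / len(data)

# 2. Keep the top-k individual parameters across the whole model.
keep_fraction = 0.05  # hypothetical budget: 5% of all parameters
scores = torch.cat([f.flatten() for f in fisher.values()])
k = max(1, int(keep_fraction * scores.numel()))
threshold = torch.topk(scores, k).values.min()
masks = {name: (f >= threshold).float() for name, f in fisher.items()}

# 3. Train, zeroing gradients outside the mask so only selected entries change.
before = {name: p.detach().clone() for name, p in model.named_parameters()}
optimizer = torch.optim.SGD(model.parameters(), lr=0.1)
for _ in range(5):
    optimizer.zero_grad()
    criterion(model(data), target).backward()
    for name, p in model.named_parameters():
        p.grad *= masks[name]
    optimizer.step()

changed = sum(int((p.detach() != before[name]).sum()) for name, p in model.named_parameters())
selected = int(sum(m.sum() for m in masks.values()))
print(f"selected {selected} parameters, changed {changed}")
```

Plain SGD without momentum or weight decay is used on purpose: with a zero gradient, the masked-out parameters stay exactly where they were.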

Methodology: Structured Parameter Masking

Another technique is structured parameter masking. Unlike unstructured masking, which selects individual parameters wherever they happen to fall in the weight tensors, structured parameter masking fine-tunes groups of parameters in a regular pattern. This approach aims to improve computational efficiency by organizing parameter updates in a structured manner, making the process more hardware-friendly. Methods such as FAR, BitFit, and SPT (Sensitivity-Aware Visual Parameter-Efficient Fine-Tuning) use structured approaches to group parameters and selectively fine-tune them. By applying regular patterns to parameter updates, these methods improve both computational and hardware efficiency, making the fine-tuning process more streamlined.
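BitFit is probably the easiest structured selection to picture: train every bias vector and freeze every weight matrix. Here is a minimal sketch on a toy model; the model and its sizes are assumptions for illustration.

```python
import torch.nn as nn

model = nn.Sequential(nn.Linear(128, 256), nn.ReLU(), nn.Linear(256, 10))

# Structured selection: bias vectors are trainable, weight matrices are frozen.
for name, param in model.named_parameters():
    param.requires_grad = name.endswith("bias")

trainable = sum(p.numel() for p in model.parameters() if p.requires_grad)
total = sum(p.numel() for p in model.parameters())
print(f"trainable: {trainable} / {total} ({100 * trainable / total:.2f}%)")
```

Because the selection follows parameter groups rather than scattered individual entries, no per-element mask has to be stored or applied during training.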

Reparameterized PEFT

Reparameterized PEFT methods are all about creating a low-dimensional representation of model parameters to make fine-tuning more efficient. Essentially, they use a low-rank parameterization during training and then transform it back for inference, allowing for resource-efficient model adaptation.
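The "train in low rank, transform back for inference" idea can be sketched in a few lines of PyTorch. The class name LowRankLinear, the merge() helper, the initialization, and the alpha / rank scaling are assumptions chosen for illustration, written in the style of the low-rank adaptation covered next rather than copied from any library.

```python
import torch
import torch.nn as nn


class LowRankLinear(nn.Module):
    """Frozen linear layer plus a trainable low-rank update B @ A."""

    def __init__(self, base: nn.Linear, rank: int, alpha: float = 1.0):
        super().__init__()
        self.base = base
        for p in self.base.parameters():
            p.requires_grad = False
        self.A = nn.Parameter(torch.randn(rank, base.in_features) * 0.01)
        self.B = nn.Parameter(torch.zeros(base.out_features, rank))
        self.scaling = alpha / rank

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        return self.base(x) + (x @ self.A.T @ self.B.T) * self.scaling

    @torch.no_grad()
    def merge(self) -> nn.Linear:
        """Fold the low-rank update into a single dense layer for inference."""
        merged = nn.Linear(self.base.in_features, self.base.out_features,
                           bias=self.base.bias is not None)
        merged.weight.copy_(self.base.weight + self.scaling * (self.B @ self.A))
        if self.base.bias is not None:
            merged.bias.copy_(self.base.bias)
        return merged


torch.manual_seed(0)
layer = LowRankLinear(nn.Linear(32, 16), rank=4)
with torch.no_grad():
    layer.B.normal_()              # pretend training has moved B away from zero

x = torch.randn(5, 32)
merged = layer.merge()
max_diff = (layer(x) - merged(x)).abs().max().item()
print(f"max difference after merging: {max_diff:.2e}")
```

After merging, inference uses one ordinary dense layer, so the low-rank branch adds no extra computation at serving time.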

Methodology: LoRA (Low-Rank Adaptation)

LoRA is a technique that introduces low-rank matrices to represent task-specific updates to the model weights. These matrices are designed to capture the essential changes needed for a task without altering the entire weight matrix. By focusing on fine-tuning these low-rank matrices, the model can efficiently adapt to new tasks. Here’s a simplified example of using LoRA in a typical PyTorch workflow:

    import torch
    import loralib as lora

    # Define a model with LoRA-adapted layers
    class MyModel(torch.nn.Module):
        def __init__(self):
            super(MyModel, self).__init__()
            self.fc1 = lora.Linear(768, 768, r=16)
            self.fc2 = lora.Linear(768, 10, r=16)

        def forward(self, x):
            x = torch.relu(self.fc1(x))
            x = self.fc2(x)
            return x

    # Initialize and mark LoRA parameters as trainable
    model = MyModel()
    lora.mark_only_lora_as_trainable(model)

    # Define optimizer and loss function
    optimizer = torch.optim.Adam(model.parameters(), lr=0.001)
    criterion = torch.nn.CrossEntropyLoss()

    # Training loop (dataloader is assumed to yield (inputs, labels) batches)
    for epoch in range(10):
        for batch in dataloader:
            inputs, labels = batch
            outputs = model(inputs)
            loss = criterion(outputs, labels)
            optimizer.zero_grad()
            loss.backward()
            optimizer.step()

    # Save only the LoRA parameters
    torch.save(lora.lora_state_dict(model), 'lora_params.pth')

Resources for further exploration include the LoRA GitHub repository, which contains the source code for loralib and examples for using LoRA with various models. Sebastian Raschka’s implementation guide offers an in-depth explanation and pseudocode for LoRA. Additionally, the LoRA research paper provides detailed descriptions and results of the method.

Variants of LoRA, such as DyLoRA, AdaLoRA, and SoRA, dynamically adjust the rank and structure of these low-rank matrices during training to optimize performance and efficiency.

Other Reparameterization Methods

Another notable method is Compacter, which uses Kronecker products and low-rank matrices to create lightweight adapter modules. DoRA (Weight-Decomposed Low-Rank Adaptation), on the other hand, decomposes model weights into magnitude and direction and fine-tunes both, updating the directional component with a LoRA-like approach. These methods, like LoRA, aim to make the fine-tuning process more efficient by reducing the number of parameters that need to be adjusted, thereby saving computational resources and time.

Hybrid PEFT

Hybrid PEFT is an innovative approach that combines multiple Parameter-Efficient Fine-Tuning (PEFT) methods to harness their individual strengths. This approach integrates additive, selective, and reparameterized techniques into a cohesive framework, aiming to maximize efficiency and performance across diverse tasks.

Methodology: UniPELT

One notable technique in this area is UniPELT, which merges Low-Rank Adaptation (LoRA), prefix-tuning, and adapters within each transformer block (Exciting!). It employs a gating mechanism to control the activation of these components, enabling a dynamic and flexible fine-tuning process. By leveraging the unique benefits of each method, UniPELT can effectively adapt to diverse tasks while maintaining computational efficiency.

The aforementioned survey provides a streamlined implementation of UniPELT as follows, focusing on the core components and omitting the complexity of multiple attention heads for clarity.

First, we have a function that modifies a standard transformer block by incorporating UniPELT techniques:

    def transformer_block_with_unipelt(x):
        # Attention sub-layer
        residual = x
        x = unipelt_self_attention(x)
        x = LN(x + residual)

        # Feed-forward sub-layer with a gated adapter
        residual = x
        x = FFN(x)
        adapter_gate = gate(x)
        x = adapter_gate * FFN(x)
        x = LN(x + residual)
        return x

The function starts by preserving the input as a residual connection. It then applies UniPELT self-attention, which incorporates techniques like LoRA and prefix tuning. After the attention mechanism, a layer normalization (LN) step ensures stability and consistency. The function proceeds with a standard feed-forward network (FFN), followed by applying an adapter gate that dynamically controls the influence of the adapter on the FFN output. Finally, another normalization step is performed before returning the output.

Next, the unipelt_self_attention function integrates LoRA for query and value updates and applies prefix tuning:

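    def unipelt_self_attention(x):
        k, q, v = x @ W_k, x @ W_q, x @ W_v

        # LoRA for queries and values (low-rank update applied to the input x)
        lora_gate = gate(x)
        q += lora_gate * (x @ W_qA @ W_qB)
        v += lora_gate * (x @ W_vA @ W_vB)

        # Prefix tuning (this simplified pseudocode computes the gated prefixes
        # but does not show how they are attached to the sequence)
        pt_gate = gate(x)
        q_prefix = pt_gate * P_q
        k_prefix = pt_gate * P_k

        return softmax(q @ k.T) @ v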

In this function, the queries, keys, and values are first computed using the standard attention projections. LoRA is then applied to modify the query (q) and value (v) matrices using low-rank updates. Prefix tuning follows, where learnable prefixes are applied to the queries and keys. The function concludes by computing the attention output using the modified queries and values.

Finally, UniPELT incorporates gate mechanisms, where the gate function dynamically adjusts the influence of the PEFT methods:

    def gate(x):
        x = Linear(x)
        x = sigmoid(x)
        return mean(x, dim=seq)

This function applies a linear transformation followed by a sigmoid activation to ensure values are between 0 and 1. The final step averages over the sequence dimension, so the gate’s output is a single value per input that depends on the input features.
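A runnable version of the gate might look like the sketch below. The Gate class name, the choice of a single scalar output per token, the tensor shapes, and the random stand-in for a PEFT branch are assumptions for illustration, not details taken from the UniPELT codebase.

```python
import torch
import torch.nn as nn


class Gate(nn.Module):
    def __init__(self, hidden_dim: int):
        super().__init__()
        self.linear = nn.Linear(hidden_dim, 1)   # assumption: one scalar score per token

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        # x: (batch, seq, hidden) -> per-token score squashed into (0, 1)
        g = torch.sigmoid(self.linear(x))
        # Average over the sequence dimension: one gate value per example.
        return g.mean(dim=1, keepdim=True)       # (batch, 1, 1)


torch.manual_seed(0)
gate = Gate(hidden_dim=16)
x = torch.randn(4, 10, 16)
g = gate(x)

branch_output = torch.randn(4, 10, 16)   # stand-in for an adapter or LoRA branch
gated = g * branch_output                # broadcasts over sequence and hidden dimensions
print(g.shape, gated.shape)
```

Keeping the sequence dimension with keepdim=True lets the gate value broadcast cleanly when it multiplies a branch output of shape (batch, seq, hidden).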

If you want to get your hands dirty with UniPELT, check out the UniPELT GitHub repository. This repository includes the full codebase, specific scripts for various PEFT methods, their configurations, and usage instructions.

Methodology: S4

Another key technique is S4, which explores the design spaces of various PEFT methods to identify optimal combinations and configurations. This method systematically evaluates different PEFT approaches to determine the most effective strategies for specific applications. By doing so, S4 provides valuable insights into the best practices for implementing hybrid PEFT solutions.

Methodology: LLM-Adapters

Furthermore, LLM-Adapters builds a comprehensive framework that incorporates different PEFT techniques. This framework offers a detailed understanding of the contexts and configurations in which each technique excels. By integrating multiple PEFT approaches, LLM-Adapters facilitates the development of versatile and efficient models tailored to a wide range of tasks.

For a comprehensive guide on how to set up and use these adapters, you can refer to the LLM-Adapters GitHub repository. This repository includes installation instructions, examples of training and inference, and details on various adapter configurations. It also provides scripts for fine-tuning and evaluating models on different tasks, such as arithmetic and commonsense reasoning.