A deep dive into more classification metrics: Precision/Recall Tradeoff and ROC Curve!

Previously, we discussed Precision, Recall, and F1 Score. This blog continues from my previous one. If you haven’t checked it out yet, you can do so here. Now, let’s get into our main topic!

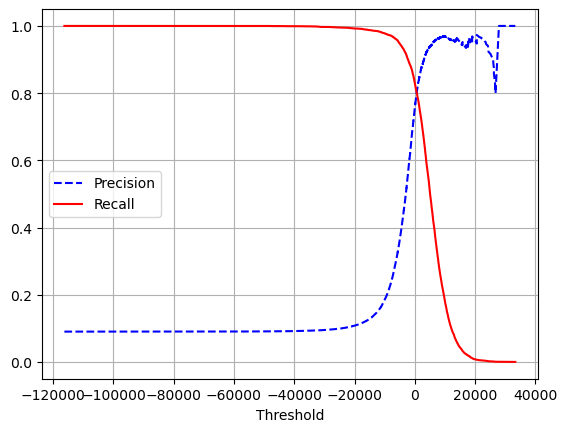

In classification, we usually cannot push both Precision and Recall as high as we like at the same time. Improving one tends to decrease the other; this is known as the Precision/Recall Tradeoff. To understand this, let’s return to our SGDClassifier. For each instance, our model computes a score based on its decision function. For now, let’s say that if the score is greater than the threshold, the result is positive; if it’s less, negative.

For this example, suppose the threshold is in the center. Now, based on this information, let’s calculate our Precision and Recall. Out of 5 positive predictions, 4 correctly identified the digit as 5, while 1 prediction was wrong. This gives:

Now, let’s move our threshold slightly to the left. This gives,

As we can see, the Precision went down while we now have a perfect Recall.
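To make the arithmetic concrete, here is a minimal sketch of the same idea on toy data. The scores and labels below are hypothetical: they are chosen only so that the center threshold yields 5 positive predictions, 4 of them correct, as in the text. The total of six actual 5s is an assumption of this sketch, not a number stated above.

```python
# Toy data: hypothetical decision scores with labels (True = the digit is a 5).
scores = [-5, -4, -3, -2, -1, 0, 1, 2, 3, 4, 5, 6]
is_five = [False, False, False, False, True, False,
           True, True, False, True, True, True]


def precision_recall_at(threshold):
    """Return (precision, recall) when predicting positive for score > threshold."""
    predicted = [s > threshold for s in scores]
    tp = sum(p and y for p, y in zip(predicted, is_five))        # true positives
    fp = sum(p and not y for p, y in zip(predicted, is_five))    # false positives
    fn = sum((not p) and y for p, y in zip(predicted, is_five))  # false negatives
    return tp / (tp + fp), tp / (tp + fn)


center = precision_recall_at(1.5)   # threshold in the center
left = precision_recall_at(-1.5)    # threshold moved to the left

print(f"center: precision={center[0]:.2f}, recall={center[1]:.2f}")
print(f"left:   precision={left[0]:.2f}, recall={left[1]:.2f}")
```

With these toy numbers, lowering the threshold catches every 5 (Recall rises to 1.0) but lets in an extra false positive, so Precision drops from 0.80 to 0.75.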

Scikit-learn doesn’t let us set the threshold directly, but it does allow us to access the decision scores using decision_function.

y_scores=sgd_clf.decision_function(X[0].reshape(1,-1))

threshold=0

y_score_predict=(y_scores>threshold)

y_score_predict # Output: array([ True])

threshold=6700

y_score_predict=(y_scores>threshold)

y_score_predict # Output: array([False])
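The same trick works on a whole dataset at once. The sketch below is self-contained and uses synthetic data from `make_classification` as a stand-in for the digits; `predict_with_threshold`, `X_demo`, `y_demo`, and `demo_clf` are hypothetical names introduced here for illustration, and the threshold of 5.0 is arbitrary.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import SGDClassifier

# Synthetic binary problem (assumption: stands in for the "5 vs. not 5" task).
X_demo, y_demo = make_classification(n_samples=500, n_features=20, random_state=42)
demo_clf = SGDClassifier(random_state=42).fit(X_demo, y_demo)


def predict_with_threshold(clf, X, threshold=0.0):
    """Hypothetical helper: flag an instance as positive if its score exceeds threshold."""
    return clf.decision_function(X) > threshold


default_pred = predict_with_threshold(demo_clf, X_demo, threshold=0.0)
strict_pred = predict_with_threshold(demo_clf, X_demo, threshold=5.0)

# A threshold of 0 should reproduce what predict() returns for this classifier.
print(np.array_equal(default_pred.astype(int), demo_clf.predict(X_demo)))
# Raising the threshold can only keep or shrink the set of positive predictions.
print(default_pred.sum(), strict_pred.sum())
```

Because a higher threshold never adds positive predictions, Recall can only stay the same or fall as the threshold goes up.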

This shows that raising the threshold can decrease Recall: an instance that was flagged as positive at a threshold of 0 is no longer flagged at 6700. But how do we know which threshold to use? For this, we can get the decision scores of all the instances and then compute Precision & Recall for all possible thresholds.

from sklearn.model_selection import cross_val_predict

y_scores=cross_val_predict(sgd_clf,X_train,y_train_5,cv=5,method='decision_function')

from sklearn.metrics import precision_recall_curve

import matplotlib.pyplot as plt

precision,recall,threshold=precision_recall_curve(y_train_5,y_scores)

def plot_prec_recall_thrshld(precision,recall,threshold):

    # precision and recall have one more element than threshold, so drop the last value

    plt.plot(threshold,precision[:-1],"b--",label="Precision")

    plt.plot(threshold,recall[:-1],"r",label="Recall")

    plt.legend()

    plt.xlabel("Threshold")

    plt.grid(visible=True)

plot_prec_recall_thrshld(precision,recall,threshold)

plt.show()
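Once the curve is computed, one way to pick a threshold is to choose the lowest one that reaches a target Precision. The sketch below is a rough illustration on synthetic scores, not the data from this post; the 90% target and the names `y_true_demo`, `y_scores_demo`, and `chosen_threshold` are assumptions made for the example.

```python
import numpy as np
from sklearn.metrics import precision_recall_curve

# Synthetic stand-in data: ~10% positives, which tend to get higher scores.
rng = np.random.default_rng(0)
y_true_demo = (rng.random(1000) < 0.1).astype(int)
y_scores_demo = rng.normal(size=1000) + 3.0 * y_true_demo

precisions, recalls, thresholds = precision_recall_curve(y_true_demo, y_scores_demo)

target_precision = 0.90  # assumed target, pick whatever the project needs
reached = precisions[:-1] >= target_precision  # align with thresholds (one shorter)

chosen_threshold = None
if reached.any():
    idx = int(np.argmax(reached))  # first (lowest) threshold that reaches the target
    chosen_threshold = thresholds[idx]
    print(f"threshold={chosen_threshold:.3f}, "
          f"precision={precisions[idx]:.3f}, recall={recalls[idx]:.3f}")
else:
    print("No threshold reaches the target precision on this data.")
```

The guard matters: `np.argmax` on an all-False array returns 0, which would silently pick the lowest threshold even though the target was never reached.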

Another way to visualize this is by plotting Precision vs. Recall.

plt.plot(recall,precision,"b-",label="Precision")

plt.xlabel("Recall")

plt.ylabel("Precision")

plt.grid(visible=True)

plt.show()

The Receiver Operating Characteristic (ROC) Curve is another tool used to evaluate binary classifiers. Instead of plotting Precision vs. Recall, the ROC curve plots the True Positive Rate (another name for Recall) vs. the False Positive Rate (1 - True Negative Rate). To understand this better, let’s take the above example.

Here,

AUC

The Area Under the ROC Curve (AUC) is a single scalar value that summarizes the classifier’s overall performance. It ranges from 0 to 1: a purely random classifier scores about 0.5, and the higher the value, the better the model performs. Now that we know what a ROC curve is, let’s use it for our model.

from sklearn.metrics import roc_auc_score

roc_auc_score(y_train_5,y_scores)

# Output: 0.9648211175804801
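For intuition on where a number like this comes from, here is a small pure-Python sketch that builds ROC points by sweeping the threshold from high to low and then integrates them with the trapezoidal rule. The toy scores and labels are hypothetical, and the helpers `roc_points` and `trapezoid_auc` are names made up for this illustration; the sketch also assumes all scores are distinct (ties would need to be grouped).

```python
# Toy data: hypothetical scores with labels (True = positive class).
toy_scores = [-5, -4, -3, -2, -1, 0, 1, 2, 3, 4, 5, 6]
toy_labels = [False, False, False, False, True, False,
              True, True, False, True, True, True]


def roc_points(scores, labels):
    """Return (FPR, TPR) points as the threshold drops past each score.

    Assumes distinct scores.
    """
    n_pos = sum(labels)
    n_neg = len(labels) - n_pos
    points = [(0.0, 0.0)]  # threshold above every score: nothing is predicted positive
    tp = fp = 0
    for _, label in sorted(zip(scores, labels), reverse=True):
        if label:
            tp += 1  # one more positive correctly caught
        else:
            fp += 1  # one more negative wrongly flagged
        points.append((fp / n_neg, tp / n_pos))
    return points


def trapezoid_auc(points):
    """Area under a piecewise-linear curve given as (x, y) points sorted by x."""
    return sum((x1 - x0) * (y0 + y1) / 2
               for (x0, y0), (x1, y1) in zip(points, points[1:]))


toy_points = roc_points(toy_scores, toy_labels)
toy_auc = trapezoid_auc(toy_points)
print(f"AUC = {toy_auc:.4f}")
```

The curve always starts at (0, 0) and ends at (1, 1); how quickly it climbs toward the top-left corner is what the area measures.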

As a rule of thumb, we use the PR curve whenever the positive class is rare or when we care more about False Positives than False Negatives. In our case, since the positive class (5) occurs much more rarely than the negative class (not 5), the PR curve is better suited.
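One way to see why a rare positive class matters is to score a classifier that has no skill at all. The sketch below is an illustration under assumptions: the data is synthetic with roughly 1% positives, the scores are pure noise, and `average_precision_score` is used as a one-number summary of the PR curve, which is my choice here rather than something used elsewhere in this post.

```python
import numpy as np
from sklearn.metrics import average_precision_score, roc_auc_score

rng = np.random.default_rng(42)
n_samples = 20_000

# Rare positive class (~1%) and scores that carry no information about the label.
y_rare = (rng.random(n_samples) < 0.01).astype(int)
random_scores = rng.random(n_samples)

roc_auc = roc_auc_score(y_rare, random_scores)
avg_precision = average_precision_score(y_rare, random_scores)
prevalence = y_rare.mean()

print(f"ROC AUC:           {roc_auc:.3f}")
print(f"Average precision: {avg_precision:.3f}")
print(f"Positive rate:     {prevalence:.3f}")
```

For a no-skill model the ROC AUC hovers around 0.5 regardless of class balance, while the PR summary sits near the positive rate. On imbalanced data, a ROC AUC that looks high can therefore coexist with poor Precision, which the PR curve makes visible.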

With this, let’s try it on a different model: a RandomForest!

from sklearn.ensemble import RandomForestClassifier

forest_clf=RandomForestClassifier(random_state=42)

y_probas_predict=cross_val_predict(forest_clf,X_train,y_train_5,cv=5,method='predict_proba')

from sklearn.metrics import roc_curve

fpr,tpr,threshold=roc_curve(y_train_5,y_scores) # ROC for the SGD scores computed earlier

y_scores_forest=y_probas_predict[:,1] # Probability of the positive class

fpr_forest,tpr_forest,threshold=roc_curve(y_train_5,y_scores_forest)

plt.plot(fpr,tpr,"b:",label="SGD")

plt.plot(fpr_forest,tpr_forest,"r-",label="Random Forest")

plt.plot([0,1], [0, 1], 'k--') # Diagonal: a purely random classifier

plt.grid(visible=True)

plt.xlabel("False Positive Rate")

plt.ylabel("True Positive Rate")

plt.legend()

plt.show()

From this, we can conclude that the Random Forest Classifier looks much better than the SGD Classifier: the area under the curve is higher for Random Forest than it is for SGD.

roc_auc_score(y_train_5,y_scores_forest) # Output: 0.998402186461512

This further supports our conclusion, since the ROC-AUC for SGD is 0.965 compared to 0.998 for Random Forest.
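A note on the `[:,1]` slice used to get the forest’s scores: `predict_proba` returns one column per class, ordered as in the classifier’s `classes_` attribute, so the positive class’s column has to be picked out before calling `roc_curve`. The sketch below checks this on synthetic data; the names `X_toy`, `y_toy`, and `toy_forest` are hypothetical, and the 0/1 labels are an assumption of the example.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier

# Synthetic binary problem with labels 0 (negative) and 1 (positive).
X_toy, y_toy = make_classification(n_samples=200, n_features=10, random_state=42)
toy_forest = RandomForestClassifier(n_estimators=20, random_state=42).fit(X_toy, y_toy)

probas = toy_forest.predict_proba(X_toy[:5])

# Look up the positive class's column instead of hard-coding it.
positive_col = list(toy_forest.classes_).index(1)
positive_scores = probas[:, positive_col]

print(probas.shape)        # one row per instance, one column per class
print(probas.sum(axis=1))  # each row's probabilities add up to 1
print(positive_scores)
```

Looking the column up through `classes_` is a little more defensive than hard-coding index 1, for example when the labels are strings.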

With Binary Classification wrapped up, I’ll now be moving on to Multiclass Classification.