Recently, I embarked on a journey into the world of AI and Machine Learning, where I stumbled upon the realm of AI Agents and decided to create my own agent-oriented project. But before I get to it, let me answer some questions you might be having. AI agents are used in many systems that process user input, such as customer support.

Imagine if the functions you create could think for themselves and make their own decisions. They interpret data just like you can, understand the context of their tasks, and even search for real-time data to complete their assignments. They don't just throw errors. Instead, they tell you when something doesn't align with their given task. That is the essence of an AI Agent.

This is made possible by LangGraph. LangGraph is a framework for building multi-agent workflows. It provides a simpler way to add cycles, controllability, and persistence, while the LLMs at its nodes supply the NLP capabilities for generating human-like responses. LangGraph lets you define workflows that maintain state and are modeled as a graph, giving you the ability to revert to and resume from any node, and it offers human-in-the-loop features for verifying outputs.

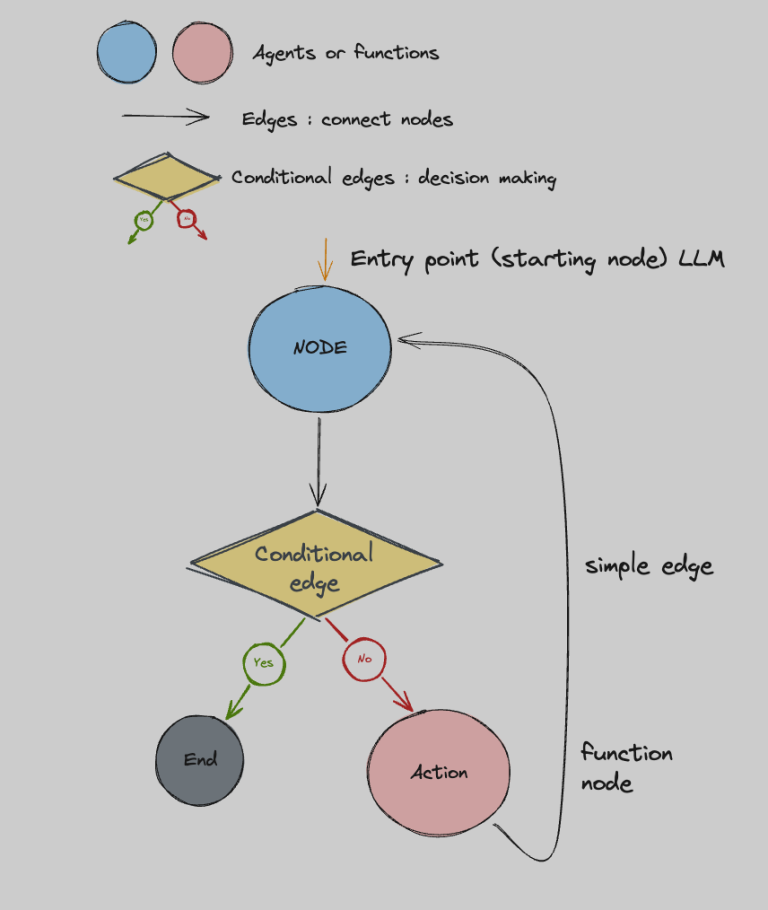

Take a look at this diagram:

- The blue and red circles represent either agents or functions. The entry point, in this case, is the agent, which is an LLM (Large Language Model).

- LLM (Large Language Model): A type of artificial intelligence model trained on vast amounts of text data. It can understand and generate human-like text, which makes it capable of tasks such as writing, summarizing, and answering questions. ChatGPT is an example.

- The black arrows are simple edges. These edges connect nodes, meaning that when a node completes its task, the workflow moves on to the next node.

- The yellow triangle is a conditional edge. It works like a simple edge, but the next step is chosen based on a condition. In this case, the condition either ends the graph or loops back to the agent.
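To make the loop concrete, here is a minimal, dependency-free sketch in plain Python rather than LangGraph itself. The `agent` and `action` functions are hypothetical stubs standing in for the LLM and a tool, and `should_continue` plays the role of the yellow conditional edge.

```python
from typing import Dict


def agent(state: Dict) -> Dict:
    # Stub for the LLM node: request one tool call, then answer once a result exists.
    if not state["tool_results"]:
        return {**state, "next_action": "search"}
    return {**state, "next_action": None,
            "answer": f"Answer using {state['tool_results'][-1]}"}


def action(state: Dict) -> Dict:
    # Stub for a tool node: run the requested action and record its output.
    result = f"result of {state['next_action']}"
    return {**state, "tool_results": state["tool_results"] + [result]}


def should_continue(state: Dict) -> str:
    # Conditional edge: go to the action node if the agent asked for one, else end.
    return "action" if state["next_action"] else "END"


def run(state: Dict) -> Dict:
    node = "agent"  # entry point
    while node != "END":
        if node == "agent":
            state = agent(state)
            node = should_continue(state)  # conditional edge
        else:
            state = action(state)
            node = "agent"  # simple edge back to the agent
    return state


final = run({"tool_results": [], "next_action": None, "answer": None})
print(final["answer"])  # Answer using result of search
```

In LangGraph the same shape is declared rather than hand-coded: nodes are registered with `StateGraph.add_node`, simple edges with `add_edge`, and the router function with `add_conditional_edges`.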

Throughout this entire process, the state is being tracked. This is called the agent state, and it is available at every part of the graph: every node and every edge. Every LLM node has a system prompt containing a set of instructions it follows and uses to understand its purpose when receiving inputs, similar to instructions from a manager but much more specific. We'll go over why it generates queries later.

Here's an example of a researcher prompt:
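The original prompt isn't reproduced here, so as a purely hypothetical illustration, a researcher system prompt might look like the one below. The prompt wording, the `build_messages` helper, and the role/content message format are assumptions. Notice that the prompt asks the model for search queries, which is why the researcher node generates queries.

```python
from typing import Dict, List

# Illustrative system prompt for a researcher node (hypothetical wording,
# not the prompt from the original project).
RESEARCHER_PROMPT = (
    "You are a researcher helping to write an essay. "
    "Given the task and the essay outline, write up to {max_queries} "
    "search queries that would gather the most relevant information. "
    "Return one query per line and nothing else."
)


def build_messages(task: str, outline: str, max_queries: int = 3) -> List[Dict[str, str]]:
    # The system message carries the instructions; the user message carries the context.
    return [
        {"role": "system", "content": RESEARCHER_PROMPT.format(max_queries=max_queries)},
        {"role": "user", "content": f"Task: {task}\n\nOutline: {outline}"},
    ]
```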

To get a grasp of how an agent state works, let's look at a simple example from an essay writer project. The agent state is usually defined as a class, since it acts as an entity that keeps track of the pieces of information the agents might need to reference or update.

Example

- TypedDict and List Import: We import TypedDict and List from the typing module. This helps us define the structure of our agent state.

- Defining AgentState: We define a TypedDict named AgentState. This dictionary contains keys such as task, plan, draft, critique, content, revision_number, and max_revisions to store the pieces of information that the agents will use and update throughout the workflow.

- Initializing the State: We create an instance of AgentState named state with some initial values. The task contains the user's input request. The plan, draft, critique, and content are initially empty strings or lists. The revision_number is set to 0, and max_revisions is set to 3.
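The code for this step isn't shown, so here is a minimal sketch that follows the description above. The type chosen for each key (for example, content as a list of strings) and the sample task text are assumptions.

```python
from typing import List, TypedDict


class AgentState(TypedDict):
    task: str             # the user's request
    plan: str             # outline produced by the planner
    draft: str            # latest draft from the writer/generator
    critique: str         # feedback from the critique step
    content: List[str]    # research snippets gathered so far
    revision_number: int  # how many drafts have been written
    max_revisions: int    # limit checked by the conditional edge


state: AgentState = {
    "task": "Write an essay on the history of the bicycle",  # hypothetical user input
    "plan": "",
    "draft": "",
    "critique": "",
    "content": [],
    "revision_number": 0,
    "max_revisions": 3,
}
```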

This agent state is passed around the nodes and edges of the graph, allowing each agent or function to access and update it as needed. For instance, the plan key is updated by the planner agent, the draft key is updated by the writer agent, and so on.

Here's a diagram of an essay writer workflow:

1. Entry Point: The entry point is the user input, which defines the task in our AgentState.

2. Planner: The Planner agent generates an outline for the essay, including notes and key points.

3. Researcher: The Researcher agent takes the outline from the Planner agent. This is where we implement RAG (Retrieval-Augmented Generation).

   - RAG is when an agent retrieves the information it works with from an external source or database and uses that information for generation.

   - In the Researcher agent, we implement RAG by using the LLM to generate the best queries based on the context of the task (user input) and the essay outline. These queries are then passed into Tavily.

4. Tavily: Tavily is a search engine optimized for LLMs and RAG. It streamlines the search process for AI agents and developers by handling the searching, scraping, filtering, and extraction of the most relevant information from online sources in a single API call.

5. Generator: The Generator agent writes the draft based on the content provided by Tavily and the context (the user's task plus the plan from the Planner). It also knows that if it receives critiques, it should rewrite its previous attempt.

6. The Conditional Edge: Here, the conditional edge checks whether the number of revisions exceeds the maximum number of revisions; the revision count is updated by the generator. It decides whether to send the draft on to be critiqued or return it to the user, which is what allows the workflow to end.

7. Critique & Search: This agent implements RAG again, using the LLM to critique the draft and then using Tavily to research how to address those critiques. The result is passed back to the generator.

8. End Point: The workflow ends whenever the revision number exceeds the maximum revisions, so if max revisions is 2, we generate 2 drafts and the second one is our essay!
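Here is a small, dependency-free sketch of that generate, check, critique loop. The `generate` and `critique_and_search` functions are hypothetical stubs, and `END` is a plain string standing in for LangGraph's `END`. One assumption to flag: `revision_number` here counts drafts already written, starting at 0 as in the state above, so the loop stops once the count reaches `max_revisions`. If you start the counter at 1 instead, the equivalent check is `revision_number > max_revisions`. Either way, a limit of 2 yields two drafts.

```python
from typing import Dict

END = "END"  # stand-in for langgraph.graph.END in this dependency-free sketch


def generate(state: Dict) -> Dict:
    # Hypothetical generator: writes a new draft and bumps the revision counter.
    n = state["revision_number"] + 1
    return {**state, "draft": f"draft {n}", "revision_number": n}


def critique_and_search(state: Dict) -> Dict:
    # Hypothetical critique step: attaches feedback for the next draft.
    return {**state, "critique": f"feedback on {state['draft']}"}


def should_continue(state: Dict) -> str:
    # revision_number counts drafts written so far (starting at 0),
    # so stop once it reaches max_revisions.
    if state["revision_number"] >= state["max_revisions"]:
        return END
    return "critique_and_search"


def run(state: Dict) -> Dict:
    drafts = []
    while True:
        state = generate(state)
        drafts.append(state["draft"])
        if should_continue(state) == END:
            return {**state, "drafts": drafts}
        state = critique_and_search(state)


result = run({"task": "essay", "draft": "", "critique": "",
              "revision_number": 0, "max_revisions": 2})
print(result["drafts"])  # ['draft 1', 'draft 2']
```

In LangGraph, a function like `should_continue` is what you would pass to `add_conditional_edges` on the generator node.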

Needed Imports

Planning Agent
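The planner code isn't reproduced here. As a minimal sketch, assuming a hypothetical `llm(system_prompt, user_message)` callable (stubbed with `fake_llm` so it runs without an API key) and an illustrative prompt, a planner node reads the task and returns only the key it updates:

```python
from typing import Callable, Dict

# Hypothetical planner prompt; the project's actual wording may differ.
PLAN_PROMPT = (
    "You are an essay planner. Write a high-level outline for the essay "
    "the user describes, with notes and key points for each section."
)

LLM = Callable[[str, str], str]  # hypothetical interface: llm(system_prompt, user_message) -> text


def make_planner(llm: LLM) -> Callable[[Dict], Dict]:
    def plan_node(state: Dict) -> Dict:
        outline = llm(PLAN_PROMPT, state["task"])
        # A node returns only the keys it changes; the graph merges them into the state.
        return {"plan": outline}
    return plan_node


def fake_llm(system: str, user: str) -> str:
    # Stub that ignores the prompt and returns a canned outline.
    return f"1. Introduction to {user}\n2. Key points\n3. Conclusion"


planner = make_planner(fake_llm)
state = {"task": "the history of the bicycle", "plan": ""}
state.update(planner(state))  # merge the partial update, as the graph would
print(state["plan"].splitlines()[0])  # 1. Introduction to the history of the bicycle
```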

Researcher Agent
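A minimal sketch of the researcher's RAG step, assuming the same hypothetical `llm(system_prompt, user_message)` interface: the LLM turns the task and outline into queries, and each query goes to a search function. Here `fake_search` stands in for Tavily; with the Tavily Python client you would typically call its `search` method and collect the `content` field of each entry in the response's `results` list. The prompt and helper names are assumptions.

```python
from typing import Callable, Dict, List

# Hypothetical prompt asking for one search query per line.
RESEARCH_PROMPT = (
    "Given the task and outline, write up to 3 search queries, one per line, "
    "that would gather the most relevant information."
)


def make_researcher(llm: Callable[[str, str], str],
                    search: Callable[[str], List[str]]) -> Callable[[Dict], Dict]:
    def research_node(state: Dict) -> Dict:
        context = f"Task: {state['task']}\nOutline: {state['plan']}"
        # Step 1: the LLM turns task + outline into search queries (one per line).
        queries = [q.strip() for q in llm(RESEARCH_PROMPT, context).splitlines() if q.strip()]
        # Step 2: each query goes to the search tool; results are appended to content.
        content = list(state.get("content", []))
        for query in queries:
            content.extend(search(query))
        return {"content": content}
    return research_node


def fake_llm(system: str, user: str) -> str:
    # Stub returning two canned queries.
    return "history of bicycles\nbicycle safety"


def fake_search(query: str) -> List[str]:
    # Stub standing in for a real search API.
    return [f"snippet about {query}"]


researcher = make_researcher(fake_llm, fake_search)
state = {"task": "bicycles", "plan": "1. Intro", "content": []}
state.update(researcher(state))
print(state["content"])
# ['snippet about history of bicycles', 'snippet about bicycle safety']
```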

Generator Agent
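A minimal sketch of a generator node, again with a hypothetical `llm` stub and illustrative prompt. The point it shows is the behavior described earlier: on the first pass the model writes from the plan and research, and once a critique exists the previous draft and critique are included so it can revise. It also bumps `revision_number`, which the conditional edge reads.

```python
from typing import Callable, Dict

# Hypothetical generator prompt.
WRITER_PROMPT = (
    "You are an essay writer. Write the best essay you can for the user's task, "
    "using only the outline and research provided. If a critique is included, "
    "revise your previous draft to address it."
)


def build_writer_input(state: Dict) -> str:
    parts = [
        f"Task: {state['task']}",
        f"Outline: {state['plan']}",
        "Research:\n" + "\n".join(state["content"]),
    ]
    if state.get("critique"):
        # On later passes, include the previous draft and the critique to revise against.
        parts.append(f"Previous draft: {state['draft']}")
        parts.append(f"Critique: {state['critique']}")
    return "\n\n".join(parts)


def make_generator(llm: Callable[[str, str], str]) -> Callable[[Dict], Dict]:
    def generate_node(state: Dict) -> Dict:
        draft = llm(WRITER_PROMPT, build_writer_input(state))
        # The generator also bumps the revision counter used by the conditional edge.
        return {"draft": draft, "revision_number": state.get("revision_number", 0) + 1}
    return generate_node


def fake_llm(system: str, user: str) -> str:
    # Stub: reports whether it was asked to revise or to write from scratch.
    return "revised draft" if "Critique:" in user else "first draft"


generate = make_generator(fake_llm)
state = {"task": "bicycles", "plan": "1. Intro", "content": ["snippet"],
         "draft": "", "critique": "", "revision_number": 0}
state.update(generate(state))
print(state["draft"], state["revision_number"])  # first draft 1
state["critique"] = "Add more on safety."
state.update(generate(state))
print(state["draft"], state["revision_number"])  # revised draft 2
```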

Critique & Search Agent
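A minimal sketch of the critique-and-search node under the same assumptions (hypothetical prompts, a stubbed `llm`, and `fake_search` in place of Tavily). It makes two LLM calls, one to critique the draft and one to turn that critique into queries, then adds the search results to the content the generator will see next.

```python
from typing import Callable, Dict, List

# Hypothetical prompts for the two LLM calls in this node.
CRITIQUE_PROMPT = "You are a teacher grading an essay. Give detailed critique and recommendations."
QUERY_PROMPT = "Write up to 3 search queries, one per line, that would help address this critique."


def make_critic(llm: Callable[[str, str], str],
                search: Callable[[str], List[str]]) -> Callable[[Dict], Dict]:
    def critique_node(state: Dict) -> Dict:
        # Step 1: the LLM critiques the current draft.
        critique = llm(CRITIQUE_PROMPT, state["draft"])
        # Step 2: the LLM turns the critique into search queries (the RAG part).
        queries = [q.strip() for q in llm(QUERY_PROMPT, critique).splitlines() if q.strip()]
        # Step 3: search results are added to the content the generator will use next.
        content = list(state.get("content", []))
        for query in queries:
            content.extend(search(query))
        return {"critique": critique, "content": content}
    return critique_node


def fake_llm(system: str, user: str) -> str:
    # Stub that answers differently depending on which prompt it receives.
    if system == CRITIQUE_PROMPT:
        return "The safety section lacks evidence."
    return "bicycle helmet statistics"


def fake_search(query: str) -> List[str]:
    return [f"snippet about {query}"]


critic = make_critic(fake_llm, fake_search)
state = {"draft": "first draft", "content": ["old snippet"]}
state.update(critic(state))
print(state["critique"])  # The safety section lacks evidence.
print(state["content"])   # ['old snippet', 'snippet about bicycle helmet statistics']
```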

Hopefully that gives a better understanding of how the whole workflow works. Usually, a research agent and some form of RAG are included. Now on to the project I made.

A personalized meal plan recommendation system that generates tailored meal plans based on the user's dietary preferences, available ingredients, goals, and so on.

Agent State
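The project's actual state class is in the repository linked at the end. As a hypothetical sketch only, a state covering the inputs, scores, and loop limits described below could look like this; every field name here is an assumption.

```python
from typing import List, TypedDict


class MealPlanState(TypedDict):
    task: str                        # the user's request
    dietary_preferences: List[str]   # e.g. "vegetarian"
    available_ingredients: List[str]
    goals: str                       # e.g. "high protein"
    content: List[str]               # research kept by the Research Grader
    grading_score: float             # share of research judged relevant
    search_count: int
    max_searches: int                # cap for the corrective RAG loop
    meal_plan: str
    hallucination_score: float       # share of statements the Review agent marked False
    revision_number: int
    max_revisions: int               # cap for the regeneration loop


state: MealPlanState = {
    "task": "Plan three dinners for this week",
    "dietary_preferences": ["vegetarian"],
    "available_ingredients": ["chickpeas", "spinach", "rice"],
    "goals": "high protein",
    "content": [],
    "grading_score": 0.0,
    "search_count": 0,
    "max_searches": 3,
    "meal_plan": "",
    "hallucination_score": 0.0,
    "revision_number": 0,
    "max_revisions": 3,
}
```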

System Diagram

I'll skip over the parts that behave similarly to the essay writer to save time.

After the Researcher agent, the content goes into the Research Grader, which filters out content that is irrelevant to the original task. This gives our Generator only relevant information to create the meal plan from. If the share of content that passes the filter doesn't meet the requirement (let's say 60%), the workflow goes back and researches again. This creates a corrective RAG process. The state also holds a limit on the maximum number of searches to prevent an infinite loop.
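A minimal sketch of the grader and its routing, assuming the 60% requirement means the fraction of retrieved documents judged relevant. The keyword-based `keyword_grader` is a hypothetical stand-in for an LLM relevance check, and proceeding to the generator once the search cap is reached is an assumption about what happens at the limit.

```python
from typing import Callable, Dict, List

RELEVANCE_THRESHOLD = 0.6  # the "let's say 60%" requirement


def grade_research(state: Dict, is_relevant: Callable[[str, str], bool]) -> Dict:
    # Keep only the documents judged relevant to the task.
    docs: List[str] = state["content"]
    kept = [doc for doc in docs if is_relevant(state["task"], doc)]
    score = len(kept) / len(docs) if docs else 0.0
    return {"content": kept, "grading_score": score}


def route_after_grading(state: Dict) -> str:
    # Corrective RAG: search again if too little survived, unless the search cap is hit.
    if state["grading_score"] >= RELEVANCE_THRESHOLD:
        return "generate"
    if state["search_count"] >= state["max_searches"]:
        return "generate"  # assumption: proceed with what we have once the cap is reached
    return "research"


def keyword_grader(task: str, doc: str) -> bool:
    # Hypothetical stand-in for an LLM relevance check.
    return "chickpea" in doc


state = {"task": "high-protein vegetarian dinners",
         "content": ["chickpea curry", "beef stew", "chickpea salad", "pork ribs", "chickpea soup"],
         "search_count": 1, "max_searches": 3}
state.update(grade_research(state, keyword_grader))
print(state["grading_score"], route_after_grading(state))  # 0.6 generate
```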

Next, we move on to our Generator, which is instructed to create the meal plan using only the content provided to it.

After that, we go into our Review agent.

The Review agent assesses whether the plan generated by the Generator was based only on the filtered content from the grading process. It outputs True or False for each statement within the meal plan.

Before moving on, let's break down what hallucinations are.

In machine learning, hallucinations are instances where the LLM generates incorrect or nonsensical information that isn't supported by the data it was trained on or retrieved. They can happen for several reasons, such as errors or gaps in the data, and this part of the process is designed to catch them.

The Hallucination Conditional takes the percentage of False statements from the Review agent and checks whether it exceeds a certain limit. If it does, the workflow loops back to regenerate the meal plan. If not, it passes the result to the user, including the meal plan, the final grading score, and the final hallucination score. This conditional also has a revision limit to prevent an infinite loop.
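A minimal sketch of this conditional, assuming the Review agent's output arrives as a list of booleans (True meaning the statement is supported). The 20% limit is a hypothetical value, since the exact threshold isn't stated.

```python
from typing import Dict, List

HALLUCINATION_LIMIT = 0.2  # hypothetical value; the text only says "a certain limit"


def hallucination_score(verdicts: List[bool]) -> float:
    # verdicts: one True/False per meal plan statement from the Review agent
    # (True = supported by the filtered content). No statements counts as 0.0.
    if not verdicts:
        return 0.0
    return verdicts.count(False) / len(verdicts)


def route_after_review(state: Dict) -> str:
    too_many_false = state["hallucination_score"] > HALLUCINATION_LIMIT
    can_retry = state["revision_number"] < state["max_revisions"]
    # Regenerate only while under the revision limit; otherwise return the plan to the user.
    return "generate" if too_many_false and can_retry else "END"


score = hallucination_score([True, True, False, True])
print(score)  # 0.25
print(route_after_review({"hallucination_score": score,
                          "revision_number": 1, "max_revisions": 3}))  # generate
```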

This implements a form of Self-RAG, where the system grades its own generations for hallucinations, which lets it provide an accurate meal plan based on the relevant content.

When the maximum number of revisions is reached, the user receives the final meal plan along with the hallucination score and the grading score.

One of the advantages of building this system is its flexibility. By simply changing the prompts for my agents, I can change the entire topic or purpose of the project. For instance, it could become a general search tool, or even a pet-focused search tool, allowing for great versatility without changing much code.

Another advantage is that AI agents can remember the context of an interaction through persistence. This lets them not only build on the current conversation but also hold other conversations at the same time through a thread-based system, where each conversation is kept under its own thread ID.

Similarly, systems where an LLM decides which tools to use require minimal additional code when adding new functionality. The only addition needed is the tool itself, which makes it easy to extend the system's capabilities.
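To illustrate why adding a tool is cheap, here is a plain-Python sketch of a tool registry. LangChain ships its own `tool` decorator for this purpose; the registry, decorator, and tool names below are hypothetical stand-ins, not that API. Adding a capability means writing one more decorated function, and the dispatch code never changes.

```python
from typing import Callable, Dict

# Hypothetical tool registry: each tool takes a string argument and returns a string.
TOOLS: Dict[str, Callable[[str], str]] = {}


def tool(fn: Callable[[str], str]) -> Callable[[str], str]:
    # Registering a function is the only step needed to expose a new capability.
    TOOLS[fn.__name__] = fn
    return fn


@tool
def search_recipes(query: str) -> str:
    return f"recipes matching {query!r}"


@tool
def convert_units(amount: str) -> str:
    return f"{amount} (converted)"


def dispatch(tool_name: str, argument: str) -> str:
    # In a real agent, the LLM picks tool_name from the registered tools'
    # names and descriptions; this lookup stays the same as tools are added.
    if tool_name not in TOOLS:
        return f"Unknown tool: {tool_name}"
    return TOOLS[tool_name](argument)


print(dispatch("search_recipes", "tofu"))  # recipes matching 'tofu'
```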

If you want to experiment with AI agents yourself, you can check out these resources.

For the specific implementation details and code of RecipeAgents, you can check out my repository.

Thanks for studying!