To build a question-answering system, Retrieval Augmented Language Models (RALMs) have been used as the de facto standard for generating responses based on externally retrieved knowledge relevant to the question. However, when incorrect external knowledge is retrieved, the RALM’s responses can be erroneous.

On the other hand, as model scale and the amount of pre-training data have increased, the capabilities of language models themselves have improved significantly, since they memorize a vast amount of knowledge in their parameters.

This raises an important question for building a reliable RALM-based QA system: When is retrieval helpful, and when does it hinder the language model’s performance?

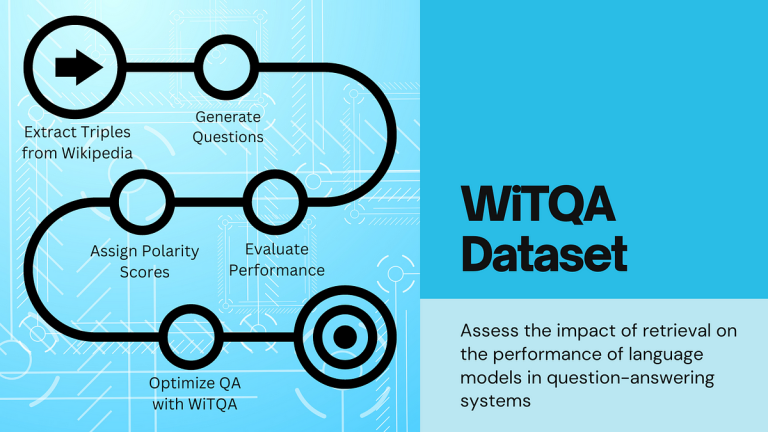

To address this question, we built a new question-answering dataset called WiTQA and comprehensively evaluated language models of varying sizes in conjunction with retrieval models. Through this extensive evaluation, we gained valuable insights for building RALM-based QA systems in real-world use cases, where we need to decide whether to use language models with or without retrieval augmentation for better QA accuracy.

Let’s first explore the dataset creation process in detail!

To analyze the interplay between LMs and retrieval systems effectively, we introduced the WiTQA (Wikipedia Triple Question Answers) dataset. We give an example below:

- Triple: (Subject: “Nausicaä of the Valley of the Wind”, Relation: published in, Object: Animage)

- Question: “What Japanese anime and entertainment magazine was ‘Nausicaä of the Valley of the Wind’ published in?”

- Answer: “Animage”

- Supporting passage in Wikipedia: “… Hayao Miyazaki’s internationally renowned manga, ‘Nausicaä of the Valley of the Wind’, was serialized in ‘Animage’ from 1982 through 1994…”

The WiTQA dataset is unique in the following aspects:

- For each question, WiTQA provides two popularity scores:

  - The frequency count of the subject entity (question entity) in Wikipedia

  - The frequency count of the specific subject-relation pair (entity-relation pair) in Wikipedia

- Each QA pair is associated with a supporting passage from Wikipedia

The subject-relation popularity score allows us to assess the factual knowledge of language models through a fine-grained, fact-centric lens. In contrast, subject-entity popularity treats all facts associated with the same entity as equally popular. The gold supporting passages make it possible to isolate reasoning abilities from retrieval errors when evaluating models. Together, these features let us analyze LLMs’ capabilities in depth from several angles.
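To make the two popularity scores concrete, here is a minimal sketch of how one WiTQA entry could be represented in Python. The class name, field names, and the two counts are assumptions made for illustration; they are not the schema or the values used in the released dataset.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class WiTQARecord:
    """One QA pair with its triple, supporting passage, and popularity scores.

    Field names are hypothetical and chosen for readability only.
    """
    subject: str
    relation: str
    obj: str
    question: str
    answer: str
    supporting_passage: str
    subject_count: int            # occurrences of the subject entity in Wikipedia
    subject_relation_count: int   # occurrences of the subject-relation pair


def sort_by_fact_popularity(records):
    """Order records from the rarest fact to the most popular one (by S-R count)."""
    return sorted(records, key=lambda r: r.subject_relation_count)


example = WiTQARecord(
    subject="Nausicaä of the Valley of the Wind",
    relation="published in",
    obj="Animage",
    question=("What Japanese anime and entertainment magazine was "
              "'Nausicaä of the Valley of the Wind' published in?"),
    answer="Animage",
    supporting_passage=("... Hayao Miyazaki's internationally renowned manga, "
                        "'Nausicaä of the Valley of the Wind', was serialized "
                        "in 'Animage' from 1982 through 1994 ..."),
    subject_count=1_000,          # made-up placeholder, not the real count
    subject_relation_count=10,    # made-up placeholder, not the real count
)
```

Two facts about the same subject would share `subject_count` but could differ widely in `subject_relation_count`, which is the distinction the fact-centric score is meant to capture.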

Creating WiTQA involved several steps, starting with the extraction of triples from Wikipedia. We then applied a meticulous sampling process to ensure a diverse representation of entities and relations based on their occurrence frequencies. Our goal was to capture the real-world challenge LMs face: recalling facts across a wide spectrum of popularity. With 14,837 QA pairs (13,251 unique subject entities, 32 relations, and 7,642 unique object entities), WiTQA provides a comprehensive playground for evaluating the performance of RALMs in diverse scenarios. We demonstrate that the distributions of subject-relation popularity (S-R counts) in WiTQA are more diverse than those of the existing QA datasets EntityQuestions and PopQA.
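This post does not spell out the sampling procedure, so the following is only a sketch of one common way to get a spread of frequencies: bucket triples by the order of magnitude of their count and draw the same number from each bucket. The function name, the log10 bucketing, and the `per_bucket` parameter are all assumptions, not the method used to build WiTQA.

```python
import math
import random
from collections import defaultdict


def sample_across_frequency_buckets(items, per_bucket, seed=0):
    """Draw up to `per_bucket` triples from each order-of-magnitude bucket.

    `items` is a list of (triple, count) pairs, where `count` is an
    occurrence frequency such as an S-R count.
    """
    rng = random.Random(seed)
    buckets = defaultdict(list)
    for triple, count in items:
        # Counts 1-9 fall in bucket 0, 10-99 in bucket 1, and so on.
        bucket = int(math.log10(max(count, 1)))
        buckets[bucket].append(triple)

    sampled = []
    for bucket in sorted(buckets):
        members = buckets[bucket]
        k = min(per_bucket, len(members))
        sampled.extend(rng.sample(members, k))
    return sampled
```

Sampling evenly per bucket keeps rare facts from being swamped by the much larger number of low-count triples, or the reverse, depending on the source distribution.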

Our extensive experiments with WiTQA shed light on several important aspects of RALMs. We observed that:

- Recall vs. Retrieval: LMs demonstrate a high ability to recall popular facts without needing retrieval augmentation. The larger the LM, the better its recall capabilities. Notably, for popular facts, larger LMs exhibit better QA accuracy than RALMs because of retrieval errors. To substantiate this claim, we demonstrated a strong correlation between RALM performance and retrieval errors.

- When Retrieval Helps: For questions involving less frequent entities and relations, retrievers consistently outperform the recall abilities of LMs. This suggests that retrieval augmentation is particularly useful for answering questions about obscure or rarely mentioned facts. For rare entity-relation pairs about popular entities, however, retrieval accuracy drops, because accurately identifying the relevant passage in a large pool of passages containing the entity becomes challenging. Even the most advanced models, like GPT-4, struggle with less frequent entity-relation pairs, highlighting a crucial area where retrieval augmentation could play a significant role.

- Adaptive Retrieval Systems: Leveraging insights from our analysis, we proposed selective memory integration, which adaptively decides whether to engage retrieval based on the frequencies of entities and relations in the question. This approach improves QA performance by up to 10.1%, demonstrating the potential of more nuanced, context-aware RALMs.
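The idea behind adaptive retrieval can be sketched as a simple frequency gate. This is a simplified illustration, not the selective memory integration method from the paper: the function names, the either-count-is-low rule, and the default thresholds are assumptions, and in practice the thresholds would need to be tuned per model on held-out data.

```python
from typing import Callable


def should_retrieve(subject_count: int, subject_relation_count: int, *,
                    subject_threshold: int = 1_000,
                    pair_threshold: int = 100) -> bool:
    """Return True when the fact looks too rare to rely on LM recall alone.

    Both thresholds are hypothetical defaults chosen for illustration.
    """
    return (subject_count < subject_threshold
            or subject_relation_count < pair_threshold)


def answer(question: str,
           subject_count: int,
           subject_relation_count: int,
           lm_only: Callable[[str], str],
           lm_with_retrieval: Callable[[str], str],
           **thresholds: int) -> str:
    """Route the question to the plain LM or to the retrieval-augmented LM."""
    if should_retrieve(subject_count, subject_relation_count, **thresholds):
        return lm_with_retrieval(question)
    return lm_only(question)
```

Here `lm_only` and `lm_with_retrieval` stand for whatever callables wrap the two pipelines. Popular facts go straight to the LM, which avoids retrieval errors, while rare facts go through retrieval.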

Our exploration into the efficacy of retrieval augmentation using the WiTQA dataset provides valuable insights into the strengths and limitations of current QA systems. By highlighting when retrieval helps and when it might hurt, we offer guidance for developing more sophisticated and nuanced RALMs. As we continue to push the boundaries of NLP, datasets like WiTQA will play a crucial role in guiding our journey towards more intelligent and versatile language models.

Check out the GitHub repository for WiTQA and experiment with the future of question answering today!

Are you intrigued by the possibilities of adaptive retrieval and want to dive deeper into our findings? Don’t miss out on our detailed research paper, and join us in advancing the state of the art in question answering and language model augmentation.

Written by Seiji Maekawa, Hayate Iso, and Megagon Labs.

Follow us on LinkedIn and X to stay up to date with new research and projects.