Traditional ML workflows often suffer from a number of pain points:

- Lack of reproducibility in model training

- Difficulty in versioning models and data

- Manual, error-prone deployment processes

- Inconsistency between development and production environments

- Challenges in scaling model serving infrastructure

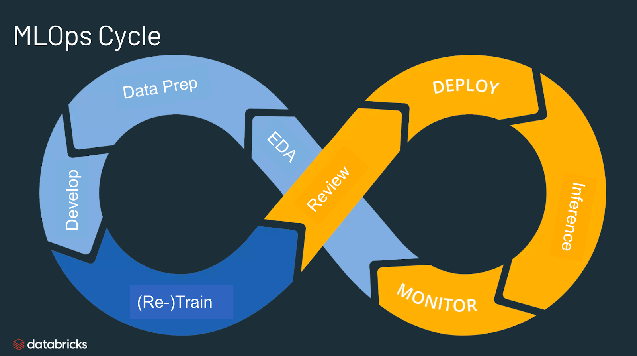

Our MLOps architecture addresses these challenges head-on, providing a streamlined, automated, and scalable solution.


Let's break down the key components of this architecture:

The journey begins in SageMaker Studio, where data scientists and ML engineers create and manage projects. This provides a centralized environment for developing ML models.

The model build component uses AWS CodePipeline for continuous integration:

- Data scientists commit code to a CodeCommit repository.

- CodePipeline automatically triggers the build process.

- SageMaker Processing jobs handle data preprocessing, model training, and evaluation.

- The trained model is stored as an artifact in Amazon S3.
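As a rough illustration of the first step, the repository that data scientists commit to could itself be declared in Terraform. This is only a sketch: the resource label `model_build_repo`, the repository name, and the output name are assumptions made for the example, not names taken from the project.

```hcl
# Hypothetical CodeCommit repository for the model build code.
# The names below are illustrative assumptions.
resource "aws_codecommit_repository" "model_build_repo" {
  repository_name = "mlops-model-build"
  description     = "Source code for data preprocessing, training, and evaluation"
}

# Expose the HTTPS clone URL so team members can find it after `terraform apply`.
output "model_build_clone_url_http" {
  value = aws_codecommit_repository.model_build_repo.clone_url_http
}
```

A commit to this repository is what the Source stage of the build pipeline would watch for.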

Successfully trained models are registered in the SageMaker Model Registry. This crucial step enables version control and lineage tracking for our models.
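In the Model Registry, model versions are organized into model package groups. A minimal sketch of declaring such a group in Terraform might look like the following; the resource label and group name are assumptions for illustration only.

```hcl
# Hypothetical model package group; each registered model version
# becomes a model package inside this group.
resource "aws_sagemaker_model_package_group" "mlops_models" {
  model_package_group_name        = "mlops-pipeline-models"
  model_package_group_description = "Versioned models produced by the model build pipeline"
}
```

Keeping every version in one group makes it straightforward to compare versions and roll back to an earlier one.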

A separate CodePipeline handles continuous deployment:

- The pipeline deploys the model to a staging environment.

- A manual approval step ensures quality control.

- Upon approval, the model is deployed to the production environment.
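The manual approval gate can be expressed as a CodePipeline stage containing a single approval action. The fragment below is a sketch, not the project's actual configuration: it is meant to sit inside an `aws_codepipeline` resource between the staging and production deploy stages, and the stage and action names are assumptions.

```hcl
# Fragment: place inside an aws_codepipeline resource, after the staging
# deploy stage and before the production deploy stage.
# Stage and action names are illustrative assumptions.
stage {
  name = "ApproveForProduction"

  action {
    name     = "ManualApproval"
    category = "Approval" # approval actions pause the pipeline until a user responds
    owner    = "AWS"
    provider = "Manual"
    version  = "1"
  }
}
```

The pipeline stops at this stage until someone approves or rejects the release in the CodePipeline console or through the API.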

The pipeline creates SageMaker Endpoints in both staging and production environments, providing scalable and secure model serving capabilities.

This architecture offers several key benefits:

- Reproducibility: The entire ML workflow is automated and version-controlled.

- Continuous Integration/Deployment: Changes trigger automated build and deployment processes.

- Staged Deployments: The staging environment allows for thorough testing before production release.

- Version Control: Both code and models are versioned for easy tracking and rollback.

- Scalability: AWS managed services ensure the solution can handle increasing loads.

- Infrastructure as Code: Terraform allows for version-controlled, reproducible infrastructure.

The entire architecture is defined and deployed using Terraform, embracing the Infrastructure as Code paradigm.
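Any Terraform configuration like this needs a provider block before resources can be created. A minimal sketch follows; the version constraints, the `aws_region` variable, and its default value are assumptions for the example and may differ from what the repository pins.

```hcl
# Minimal provider setup; version constraints and region are assumptions.
terraform {
  required_version = ">= 1.3"

  required_providers {
    aws = {
      source  = "hashicorp/aws"
      version = "~> 5.0"
    }
  }
}

variable "aws_region" {
  description = "AWS region to deploy the MLOps stack into"
  type        = string
  default     = "us-east-1"
}

provider "aws" {
  region = var.aws_region
}
```

With this in place, the usual workflow is `terraform init`, then `terraform plan` to review changes, then `terraform apply`.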

GitHub link: https://github.com/gursimran2407/mlops-aws-sagemaker

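Here's a glimpse of how we set up the SageMaker project:

```hcl
resource "aws_sagemaker_project" "mlops_project" {
  project_name        = "mlops-pipeline-project"
  project_description = "End-to-end MLOps pipeline for model training and deployment"

  # Required by the AWS provider; var.service_catalog_product_id is a placeholder you must define.
  service_catalog_provisioning_details {
    product_id = var.service_catalog_product_id
  }
}
```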

We create CodePipeline resources for both model building and deployment:

```hcl
resource "aws_codepipeline" "model_build_pipeline" {
  name     = "sagemaker-model-build-pipeline"
  role_arn = aws_iam_role.codepipeline_role.arn

  # S3 bucket where CodePipeline stores the artifacts passed between stages
  artifact_store {
    location = aws_s3_bucket.artifact_store.bucket
    type     = "S3"
  }

  stage {
    name = "Source"
    # Source stage configuration...
  }

  stage {
    name = "Build"
    # Build stage configuration...
  }
}
```
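The pipeline above references `aws_iam_role.codepipeline_role` and `aws_s3_bucket.artifact_store`, which are not shown in this post. A minimal sketch of what those two resources might look like is given below. The role name and bucket prefix are assumptions, and the permissions policies the role would need (S3, CodeCommit, CodeBuild, SageMaker) are deliberately left out.

```hcl
# Hypothetical service role that CodePipeline assumes.
# Permissions policies must still be attached separately.
resource "aws_iam_role" "codepipeline_role" {
  name = "mlops-codepipeline-role"

  # Trust policy: allow the CodePipeline service to assume this role
  assume_role_policy = jsonencode({
    Version = "2012-10-17"
    Statement = [{
      Effect    = "Allow"
      Principal = { Service = "codepipeline.amazonaws.com" }
      Action    = "sts:AssumeRole"
    }]
  })
}

# Hypothetical artifact bucket; bucket_prefix lets AWS generate a unique name.
resource "aws_s3_bucket" "artifact_store" {
  bucket_prefix = "mlops-pipeline-artifacts-"
}
```

Using `bucket_prefix` rather than a fixed `bucket` name avoids collisions, since S3 bucket names are globally unique.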

SageMaker endpoints for staging and production are also defined in Terraform:

```hcl
resource "aws_sagemaker_endpoint" "staging_endpoint" {
  name                 = "staging-endpoint"
  endpoint_config_name = aws_sagemaker_endpoint_configuration.staging_config.name
}

resource "aws_sagemaker_endpoint" "prod_endpoint" {
  name                 = "prod-endpoint"
  endpoint_config_name = aws_sagemaker_endpoint_configuration.prod_config.name
}
```
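Each endpoint points at an endpoint configuration (`staging_config` and `prod_config`), which in turn names the model to serve and the instances to serve it on. Those configurations are not shown in this post, so the following is only a sketch of the staging one. The `staging_model_name` variable, the variant name, the instance type, and the instance count are all assumptions.

```hcl
# Hypothetical input: name of an existing SageMaker model to serve in staging.
variable "staging_model_name" {
  description = "Name of the SageMaker model deployed to the staging endpoint"
  type        = string
}

# Sketch of the endpoint configuration referenced by staging_endpoint.
resource "aws_sagemaker_endpoint_configuration" "staging_config" {
  name = "staging-endpoint-config"

  production_variants {
    variant_name           = "AllTraffic"
    model_name             = var.staging_model_name
    initial_instance_count = 1             # assumed: a single instance is enough for staging
    instance_type          = "ml.m5.large" # assumed instance type
  }
}
```

A production configuration would follow the same shape, typically with a larger instance count or instance type.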

This MLOps architecture provides a robust, scalable, and automated solution for managing the entire lifecycle of machine learning models. By leveraging AWS services and Terraform, we create a system that enhances collaboration between data scientists and operations teams, speeds up model development and deployment, and ensures consistency and reliability in production.

Implementing such an architecture demonstrates a deep understanding of cloud services, MLOps principles, and infrastructure as code practices, all skills that are highly valued in today's data-driven world.

The complete Terraform code and a detailed README for this architecture are available on GitHub. Feel free to explore, use, and contribute to the project!