Machine Learning (ML) has become such a buzzword in the technology fraternity today that many technologists want to go deep into it and get their hands on building ML models that can do predictive forecasting, personalized recommendations, and much more.

Like many technologists, a few months back the passionate learner in me got so intrigued by this fascinating world that I enrolled in an advanced course (for AI and ML) at IIT Delhi (one of the premier technology education institutes in India). This is my first Medium article, but I plan to write regularly about my learnings on this platform.

ML is deeply rooted in high school and engineering mathematics coursework that many of us used to find boring when we were studying it! Matrices, operations on matrices, linear algebra, vectors, dot products of vectors, statistical operations such as finding the mean, standard deviation, variance, covariance, and correlation coefficient, and linear regression are the basic building blocks of what we today fancy as machine learning models.

In this article, I’ll deep-dive into the concept of “Principal Component Analysis” (PCA). I’ve also implemented PCA in Python on a popular, large, freely available dataset, and I’ll demonstrate a step-by-step, code-based implementation of this key ML technique.

PCA is a great data analysis technique for extracting valuable insights from your data and improving the performance of your ML models. It is commonly used for the following types of use cases:

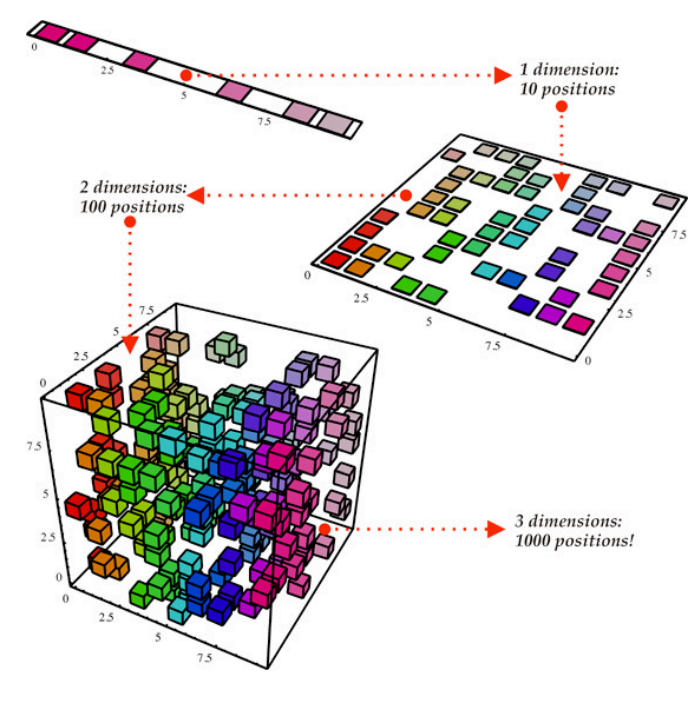

1. Dimensionality Reduction: this is the core use case of PCA. In datasets with many features (aka dimensions), PCA identifies a smaller set of uncorrelated features (also called principal components) that capture most of the data’s variance. This is helpful for:

- Reducing computational and storage cost: Training machine learning models with fewer features is faster and requires less memory / storage.

- Improving model performance: In some cases, high dimensionality can lead to overfitting, i.e. the ML model generalizes worse with more features. PCA helps mitigate this by focusing on the most informative directions (those with the highest variance) in the training dataset.

- Visualization: When dealing with high-dimensional data (more than 3D), it’s not easy to visualize it directly. PCA allows you to project the data onto a lower-dimensional space (usually 2D or 3D) for easier visualization and understanding of patterns.

2. Feature Extraction: Principal components represent the most significant directions of variance in the original dataset. By using these components as new features, you can capture the essence of the original high-dimensional space more compactly. This is useful for:

- Feature engineering: The extracted components can be used directly as features in ML models, potentially leading to improved performance.

- Data exploration: Analyzing the principal components can help reveal underlying structures and relationships within the data.

3. Anomaly Detection: PCA can help establish a normal range (centered around the mean) for the data and identify the principal components. Significant deviations from this range can then be flagged as potential anomalies. This is helpful for:

- Fraud detection: Identifying unusual transactions or financial activities that deviate from typical patterns.

- Sensor data analysis: Detecting anomalies in sensor readings that might indicate equipment malfunctions or unexpected events.

4. Data Compression: PCA can help compress image datasets by discarding components with the lowest variance. This can be especially useful for:

- Storing and transmitting large images/files: Reduced dimensionality leads to smaller file sizes, facilitating efficient storage and transmission.

- Improving processing speed: Algorithms often run faster on smaller datasets, so using PCA before other processing steps can lead to faster computation.

5. Preprocessing Data: PCA is often used as a preprocessing step before applying other ML algorithms. By reducing dimensionality and potentially extracting meaningful features, PCA can improve the overall performance of:

- Classification models: Classifying data points based on their features.

- Clustering algorithms: Grouping similar data points together.

- Regression models: Predicting continuous target variables.

Some specific industrial applications of PCA include noise reduction, data compression, image processing, gene expression analysis, face recognition, and text mining.

In this part of my article, you’ll realize how high school maths concepts have been meaningfully leveraged in modern-day machine learning implementations. I still remember chatting with my college friends shortly after I joined the IT workforce (more than 2 decades ago): “whatever boring concepts we studied in high school and engineering mathematics classes are nowhere leveraged in the software industry, God knows why we were taught them!” But after enrolling in the ML course at IIT Delhi, I thanked the Almighty: “Thank God I studied these mathematical concepts in my younger years, else following this course would have been quite a complex effort!”

PCA aims to transform a high-dimensional dataset into a lower-dimensional representation while retaining as much information as possible. It accomplishes this by identifying the principal components of the dataset, which are linear combinations of the original features that capture the most variance in the data.

Prerequisite maths concepts to get the most out of this PCA deep dive

You may have studied linear algebra long ago and perhaps have forgotten its core concepts. Or maybe you never studied linear algebra and are very new to machine learning. In either case, I strongly recommend you spend some time on the resources listed in the “References” section as a prerequisite. These resources will help you grasp or refresh the mathematical formulations for vectors, matrices, matrix operations, eigenvectors, eigenvalues, orthogonal vectors, dot product, cross product, etc.

Mathematical Formulation of PCA

Let X be an n × p data matrix, where n is the number of observations and p is the number of features.

► The goal of PCA is to find a p × k matrix V that represents the principal components of X, where k ≤ p is the number of dimensions in the reduced space.

► The principal components are given by the eigenvectors of the covariance matrix of X, and the corresponding eigenvalues represent the variance explained by each component.

► The reduced-dimension representation of X is then given by XV, where X is mean-centered and V is the matrix of the k eigenvectors with the largest eigenvalues.
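Written out in symbols, the formulation above reads roughly as follows. This is a sketch under the usual sample-covariance convention: the 1/(n-1) normalization and the symbols for the feature mean, the covariance matrix S, the eigenpairs (λ_j, v_j), and the reduced data Z are assumed notation that the text above does not define. Each x_i is one observation, i.e. one row of X written as a column vector.

```latex
\bar{x} = \frac{1}{n}\sum_{i=1}^{n} x_i, \qquad
S = \frac{1}{n-1}\,\bigl(X - \mathbf{1}\bar{x}^{T}\bigr)^{T}\bigl(X - \mathbf{1}\bar{x}^{T}\bigr) \in \mathbb{R}^{p \times p}

S\,v_j = \lambda_j\,v_j, \qquad \lambda_1 \ge \lambda_2 \ge \dots \ge \lambda_p \ge 0

V = [\,v_1 \;\; v_2 \;\; \cdots \;\; v_k\,] \in \mathbb{R}^{p \times k}, \qquad
Z = \bigl(X - \mathbf{1}\bar{x}^{T}\bigr)\,V \in \mathbb{R}^{n \times k}

\text{fraction of variance kept by } k \text{ components} = \frac{\sum_{j=1}^{k}\lambda_j}{\sum_{j=1}^{p}\lambda_j}
```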

Mathematical Steps to perform PCA

- Mean-center the dataset

- Calculate the covariance matrix

- Calculate the eigenvalues and eigenvectors of the covariance matrix

- Select the top k eigenvectors with the largest eigenvalues; these are the principal components (i.e. the most significant dimensions of the given matrix)

- Project the original data points along the top k eigenvectors to transform the original dataset to reduced dimensionality
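The five steps above can be sketched in a few lines of NumPy. This is a minimal illustration on a small synthetic matrix, not the project code: the function name `pca_eig` and the demo variables are hypothetical, and `np.linalg.eigh` is one reasonable choice for a symmetric covariance matrix.

```python
import numpy as np

def pca_eig(X, k):
    """PCA via eigendecomposition of the sample covariance matrix (illustrative sketch)."""
    # Step 1: mean-center each feature (column).
    mean = X.mean(axis=0)
    X_centered = X - mean
    # Step 2: sample covariance matrix (p x p); rowvar=False treats columns as features.
    cov = np.cov(X_centered, rowvar=False)
    # Step 3: eigh is meant for symmetric matrices; it returns eigenvalues in ascending order.
    eigenvalues, eigenvectors = np.linalg.eigh(cov)
    # Step 4: sort in descending order and keep the top k eigenvectors as the columns of V.
    order = np.argsort(eigenvalues)[::-1]
    eigenvalues = eigenvalues[order]
    V = eigenvectors[:, order[:k]]
    # Step 5: project the centered data onto the top k eigenvectors.
    Z = X_centered @ V
    return Z, V, eigenvalues, mean

rng = np.random.default_rng(0)
X_demo = rng.normal(size=(100, 5))  # synthetic data, for illustration only
Z_demo, V_demo, eigvals_demo, mean_demo = pca_eig(X_demo, k=2)
print(Z_demo.shape)  # (100, 2)
```

A handy sanity check is that the variance of each projected column equals the corresponding eigenvalue, which is exactly the "variance explained by each component" statement from the formulation.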

I’m keeping this mathematical formulation section concise as I’ve given many reference links below to help you understand the maths in depth (and, if needed, perform PCA by hand on a small matrix). In the rest of this article, I want to demonstrate PCA implementation on large datasets using Python. I was asked to do a hands-on project by IIT Delhi professors, and that implementation is what I cover in the rest of this article to help you understand the practical implementation of PCA and its usefulness in your ML learning journey!

Hands-on Project implementation (via Python)

The MNIST dataset is a comprehensive collection of handwritten digits ranging from 0 to 9. It includes 60,000 grayscale images for training purposes and 10,000 images for testing. Each image is 28 by 28 pixels in size, with pixel values ranging from 0 to 255.

To keep things simple, we’ll focus only on the test dataset and use the first 2000 samples, which is helpful if memory is limited. Scale the images to the range [0, 1] by dividing each by 255. Convert each image x_i (which belongs to R^d) into a vector and create a matrix X = [x_1, ..., x_n]^T ∈ R^(n×d). Note that d = 784 and n = 2000.

Here’s the Python code snippet to import the needed libraries and packages.

Code to fetch the MNIST dataset, split it into training and test data sets, and check the dimensions of the resulting matrices is given below.

Note: The MNIST dataset can be downloaded from https://www.kaggle.com/datasets/hojjatk/mnist-dataset and then fetched by reading the downloaded files. There are multiple ways to fetch and extract this dataset (using other Python libraries); I’ve just used one such approach.
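For the "read the downloaded files" route mentioned in the note, here is a minimal sketch that parses the raw IDX image format MNIST is distributed in. It is not necessarily the approach used in this project; the helper names `parse_idx_images` and `read_idx_images` are hypothetical, and the file name in the usage note is an assumption about how the download is laid out on disk.

```python
import struct
import numpy as np

def parse_idx_images(raw):
    """Parse the bytes of an IDX3 image file into a (count, rows, cols) uint8 array."""
    # Header: four big-endian 32-bit integers (magic number, image count, rows, columns).
    magic, count, rows, cols = struct.unpack(">IIII", raw[:16])
    if magic != 2051:  # 2051 (0x00000803) marks an IDX file of unsigned-byte images
        raise ValueError(f"unexpected magic number {magic}; not an IDX3 image file")
    # Pixel data follows the 16-byte header, one unsigned byte per pixel.
    pixels = np.frombuffer(raw, dtype=np.uint8, count=count * rows * cols, offset=16)
    return pixels.reshape(count, rows, cols)

def read_idx_images(path):
    """Read an IDX3 image file from disk (path is supplied by the caller)."""
    with open(path, "rb") as f:
        return parse_idx_images(f.read())
```

With the test-image file saved locally (assumed here to be named `t10k-images.idx3-ubyte`), `x_test = read_idx_images("t10k-images.idx3-ubyte")` would give an array of 10,000 images of 28 x 28 pixels.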

We get the following output indicating that x_train has data for 60,000 images, each with 28 x 28 pixels, and x_test has data for 10,000 images.

Next, I extracted the test dataset of 2000 samples from x_test and scaled the images to the range [0, 1]:

To ensure that each image is a vector of 784 dimensions (28 x 28), I reshaped the test dataset matrix and printed a random image from this test dataset (see code snippet below):

I got the following output from the above logic, confirming that I had correctly reshaped the test dataset to a 2000 x 784 matrix.
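The slicing, scaling, and reshaping steps described above can be sketched as follows. To keep the example self-contained, `x_test` here is a random stand-in with MNIST’s shape and value range, which is an assumption made purely for illustration; with the real dataset the same three lines apply unchanged.

```python
import numpy as np

rng = np.random.default_rng(0)
# Stand-in for the MNIST test images: random uint8 pixels with MNIST's shape (not real data).
x_test = rng.integers(0, 256, size=(10000, 28, 28), dtype=np.uint8)

n = 2000
# Take the first n images and scale pixel values from [0, 255] to [0, 1].
x_test_subset = x_test[:n].astype(np.float64) / 255.0
# Flatten each 28 x 28 image into a 784-dimensional row vector.
x_test_flattened = x_test_subset.reshape(n, -1)
print(x_test_flattened.shape)  # (2000, 784)
```

Reshaping a row back with `x_test_flattened[i].reshape(28, 28)` recovers the original image layout, which is what makes it possible to display a flattened sample.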

Next, let’s deep dive into 4 different practical tasks (related to PCA implementation and its practical usefulness on the MNIST dataset).

Task-1

Compute the eigendecomposition of the sample covariance matrix and use the eigenvalues to calculate the percentage of variance explained (given by the eigenvalues). Plot the cumulative sum of these percentages versus the number of components.

For Task 1 of this project, I took 2 different samples of 2000 images (from the main MNIST dataset) to compare whether results differ based on sample test set selection. The original task expected this for just 1 test set, but to satisfy my curiosity and understand it better, I decided to do this extra bit!

I’ve given below the code snippet for determining the covariance matrix, eigenvalues, and eigenvectors for each of the test data matrices x_test_flattened and y_test_flattened. It’s interesting to note that while the mathematical formulations for each of these concepts looked quite complex to derive by hand, the numpy library in Python has made it a breeze to compute them without the need to memorize or programmatically implement complicated statistical formulas!
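As a rough sketch of what such a computation can look like, the snippet below derives the covariance matrix, its eigenpairs, and the cumulative percentage of variance explained. The function name `explained_variance_curve` is hypothetical, the input is synthetic, and `np.cov` and `np.linalg.eigh` are assumed choices; the exact NumPy calls used in the project are not shown here.

```python
import numpy as np

def explained_variance_curve(X):
    """Return eigenvalues (descending), eigenvectors, and cumulative % of variance explained."""
    # np.cov subtracts the column means itself, so X does not need to be centered first.
    cov = np.cov(X, rowvar=False)
    eigenvalues, eigenvectors = np.linalg.eigh(cov)
    # eigh returns ascending eigenvalues; reorder both outputs to descending.
    order = np.argsort(eigenvalues)[::-1]
    eigenvalues, eigenvectors = eigenvalues[order], eigenvectors[:, order]
    # Round-off can produce tiny negative eigenvalues; clip them before taking ratios.
    eigenvalues = np.clip(eigenvalues, 0.0, None)
    percent = 100.0 * eigenvalues / eigenvalues.sum()
    return eigenvalues, eigenvectors, np.cumsum(percent)

rng = np.random.default_rng(0)
X_demo = rng.random((200, 20))  # synthetic stand-in for a flattened image matrix
eigenvalues, eigenvectors, cumulative = explained_variance_curve(X_demo)
```

Plotting `cumulative` against `np.arange(1, len(cumulative) + 1)` (for example with matplotlib) gives the cumulative variance curve the task asks for; the curve always ends at 100%.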

The output that I got for plotting the cumulative sum of variance vs. number of dimensions (aka components) for image vectors in dataset x_test_flattened is shown below.

The output that I got for plotting the cumulative sum of variance vs. number of dimensions (aka components) for image vectors in dataset y_test_flattened is shown below.

Phew! The choice of test dataset didn’t matter: the pattern observed for each of the test sets is similar!

Task-2

Apply PCA via eigendecomposition to reduce the dimensionality of the images for each p ∈ {50, 250, 500}.

From this task onwards I’ll use only the x_test_flattened matrix (i.e. the first 2000 samples of the MNIST test dataset), as in Task 1 I’ve already seen that results are similar regardless of which 2000 samples we use from this test set.

PCA is applied by finding the principal_components matrix that contains the top p eigenvectors (see code snippet below):

As can be seen in the output below, we get the reduced_data_X matrix with reduced dimension 2000 x p for each iteration of p ∈ {50, 250, 500}.
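A minimal sketch of this projection loop is shown below. The names `principal_components` and `reduced_data_X` follow the text; everything else (the random stand-in matrix `X`, the `reduced_by_p` dictionary, and the use of `np.linalg.eigh`) is an assumption for illustration rather than the project’s actual code.

```python
import numpy as np

rng = np.random.default_rng(0)
X = rng.random((2000, 784))  # stand-in for the flattened 2000 x 784 image matrix

# Mean-center, then eigendecompose the sample covariance matrix.
mean = X.mean(axis=0)
X_centered = X - mean
eigenvalues, eigenvectors = np.linalg.eigh(np.cov(X_centered, rowvar=False))
# Reorder eigenvector columns so the largest-eigenvalue directions come first.
eigenvectors = eigenvectors[:, np.argsort(eigenvalues)[::-1]]

reduced_by_p = {}
for p in (50, 250, 500):
    principal_components = eigenvectors[:, :p]          # 784 x p
    reduced_data_X = X_centered @ principal_components  # 2000 x p
    reduced_by_p[p] = reduced_data_X
    print(p, reduced_data_X.shape)
```

The eigendecomposition is done once and only the column slice changes with p, so trying several values of p costs little extra.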

Task-3

After performing PCA, can you think of getting back the data using the reduced data? (Hint: Use the property of orthonormal matrices.)

Using the given hint, I implemented the following code to reconstruct the images using reduced_data_X obtained above (after applying PCA):
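The hint works because the matrix of selected eigenvectors V has orthonormal columns (VᵀV = I), so multiplying the reduced data by Vᵀ maps it back to the original space. The sketch below illustrates this on synthetic data; the helper names `project` and `reconstruct` are hypothetical, and adding the mean back assumes the data was mean-centered before projection.

```python
import numpy as np

def project(X, V, mean):
    """Reduce X to the coordinates spanned by the columns of V."""
    return (X - mean) @ V

def reconstruct(Z, V, mean):
    """Map reduced data back to the original space using the transpose of V."""
    # V.T @ V = I, so V.T undoes the projection within the kept subspace.
    return Z @ V.T + mean

rng = np.random.default_rng(0)
X = rng.random((300, 64))  # synthetic stand-in: 300 samples, 64 features
mean = X.mean(axis=0)
eigenvalues, eigenvectors = np.linalg.eigh(np.cov(X, rowvar=False))
eigenvectors = eigenvectors[:, np.argsort(eigenvalues)[::-1]]

errors = {}
for p in (8, 32, 64):
    V = eigenvectors[:, :p]
    X_hat = reconstruct(project(X, V, mean), V, mean)
    errors[p] = np.mean((X - X_hat) ** 2)  # mean squared reconstruction error
```

The reconstruction error shrinks as p grows and vanishes (up to round-off) when all components are kept, which is the sense in which PCA compression is lossy for p smaller than the number of features.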

I further did a side-by-side plot of the original image (from x_data_flattened) and the reconstructed image using the reconstructed_image_X matrix obtained above. Shown below is the output I got for a randomly selected image for each p value.

As you can see in the above visualization, images reconstructed from the principal components do not noticeably distort the original image. Reconstruction from fewer than 784 components is lossy, but the reduced-dimensionality images can easily be recognized and have the clear benefit of requiring less storage and computing power.

Task-4

Compare the error (PSNR) between the original and reconstructed image of 5 random images.

Peak signal-to-noise ratio (PSNR) is the ratio between the maximum possible power of an image and the power of corrupting noise that affects the quality of its representation. To estimate the PSNR of an image, it is necessary to compare that image to an ideal clean image with the maximum possible power. The mathematical formulation of PSNR can be understood from one of the reference links that I’ve listed below.

Python code to determine PSNR is shared below:
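A minimal PSNR sketch, using the standard definition PSNR = 10 · log10(MAX² / MSE), could look like this. The function name `psnr` and the default `max_value=1.0` are assumptions; the default matches images scaled to [0, 1] as described earlier, and would be 255 for unscaled 8-bit images.

```python
import numpy as np

def psnr(original, reconstructed, max_value=1.0):
    """Peak signal-to-noise ratio in decibels between two images of the same shape."""
    original = np.asarray(original, dtype=np.float64)
    reconstructed = np.asarray(reconstructed, dtype=np.float64)
    # Mean squared error between the two images.
    mse = np.mean((original - reconstructed) ** 2)
    if mse == 0:
        return float("inf")  # identical images: no noise at all
    return 10.0 * np.log10(max_value ** 2 / mse)

# Tiny check: a constant error of 0.1 per pixel gives MSE = 0.01, i.e. about 20 dB.
original = np.zeros((28, 28))
noisy = original + 0.1
value = psnr(original, noisy)
```

Because the MSE sits in the denominator, a smaller reconstruction error produces a larger PSNR, which is why more principal components should yield higher values.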

Output that I got for PSNR for randomly selected images, reconstructed using different p values:

The above output demonstrated that the higher the number of principal components used for the reconstructed image, the higher the PSNR. A higher PSNR value indicates better quality of the reconstructed image (i.e. less impact of noise). This inference was also visible when I plotted the reconstructed image next to the original image for each of the p values in Task-3.

With that, we come to the end of my 1st article on Medium. I plan to continue writing on AI/ML topics as I keep learning and go deeper into this intriguing field. In the future, I will also write on other topics of interest as time permits.

If you’d like to get the complete Python notebook (with the entire code for the above project / individual tasks), please connect with me / follow me and send me a DM at my LinkedIn handle: https://www.linkedin.com/in/parora7/

References

- Essence of Linear Algebra, free video course by 3Blue1Brown: https://www.youtube.com/playlist?list=PLZHQObOWTQDPD3MizzM2xVFitgF8hE_ab

- Gilbert Strang Lectures on Linear Algebra (MIT): https://www.youtube.com/playlist?list=PL49CF3715CB9EF31D

- https://www.geeksforgeeks.org/mathematical-approach-to-pca/

- https://towardsdatascience.com/the-mathematics-behind-principal-component-analysis-fff2d7f4b643

- https://online.stat.psu.edu/stat508/book/export/html/639/

- PSNR: https://www.geeksforgeeks.org/python-peak-signal-to-noise-ratio-psnr/