Machine Learning (ML) continues to evolve at a rapid pace, and staying up to date with the latest trends and technologies is crucial for both aspiring and seasoned ML professionals. As we step into 2024, here is a comprehensive roadmap to guide you through your ML journey, covering essential skills, tools, and concepts.

Day 1: Get to know a bit about Machine Learning (a literature survey)

One should have a broad understanding of the subject at hand. Machine learning is a vast field with various domains. It would be really helpful to go through a few YouTube videos and/or blogs to get a brief hang of it and its significance. This video by TED-Ed is a must-watch. It also covers the different types of machine learning algorithms you will encounter.

Day 2 & Day 3: Learn a programming language, duh!

There are many programming languages out there, of which two are especially well suited to ML, namely Python and R. We recommend that any beginner start with Python. Why?

For one, it provides a huge variety of libraries, such as NumPy, pandas, scikit-learn, TensorFlow, and PyTorch, which are very helpful and take much of the effort out of machine learning and data science. Before starting, it is important to set up Python on your machine, using this as a reference.

Learning Python isn't hard. The following will teach you the language quickly:

Python Tutorial by CodeWithHarry.

Day 4: Start getting the hang of some commonly used libraries, like NumPy

Mathematics is the heart of machine learning. You will get a taste of this statement from Week 2. Implementing various ML models, loss functions, and confusion matrices needs math. Most of these mathematical tasks can be performed using NumPy.

NumPy, short for Numerical Python, is a powerful Python library that provides support for large multi-dimensional arrays and matrices, along with a collection of mathematical functions to operate on these arrays. It is a foundational library for scientific computing and data analysis in Python, and many other libraries, such as SciPy and pandas, are built on top of it; TensorFlow also interoperates with NumPy arrays.

See this NumPy tutorial by freeCodeCamp.org.
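To make the description above concrete, here is a minimal sketch of the kind of array operations NumPy offers. The array values are made up for illustration.

```python
import numpy as np

# A 2-D array (matrix) with 2 rows and 3 columns; values are illustrative.
a = np.array([[1.0, 2.0, 3.0],
              [4.0, 5.0, 6.0]])

doubled = a * 2              # element-wise arithmetic, no explicit loop
col_means = a.mean(axis=0)   # mean of each column
gram = a @ a.T               # matrix product of a with its transpose

print(a.shape)
print(doubled)
print(col_means)
print(gram)
```

Operations like these replace hand-written loops, which is a large part of why NumPy code tends to be both shorter and faster than plain Python lists.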

Day 5: Continue by exploring another library, pandas

pandas is an open-source data manipulation and analysis library for Python. Built on top of NumPy, pandas offers powerful, flexible, and easy-to-use data structures, such as DataFrames and Series, for working with structured data. It is widely used in data analysis, data cleaning, data visualization, and machine learning.

See this pandas tutorial by freeCodeCamp.org.
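As a rough illustration of DataFrames and Series, the sketch below cleans and summarizes a tiny table. The table, its column names, and its values are invented for this example.

```python
import pandas as pd

# A small, made-up table; the column names are illustrative only.
df = pd.DataFrame({
    "city": ["Delhi", "Mumbai", "Delhi", "Pune"],
    "price": [120.0, 200.0, None, 90.0],
})

# Data cleaning: fill the missing price with the mean of the known prices.
df["price"] = df["price"].fillna(df["price"].mean())

# Analysis: average price per city, returned as a Series indexed by city.
avg_price = df.groupby("city")["price"].mean()
print(avg_price)
```

Filling a missing value with the column mean is only one of several possible choices; dropping the row or using the median are equally common.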

Day 6: Matplotlib, a powerful tool for visualization

Matplotlib is a comprehensive library for creating static, animated, and interactive visualizations in Python. It is widely used for data visualization in scientific computing, engineering, and data analysis. Matplotlib makes it easy to produce publication-quality plots and graphs with just a few lines of code.

See this Matplotlib tutorial by freeCodeCamp.org.

Day 7: What to do on the seventh day?

Just relax! Watch some movies on Netflix. I am serious about it. I know, machine learning is important, you want a job and so on, but if you have completed the first week honestly, you need to relax.

Day 1 — Descriptive Statistics

By now, you should be comfortable with processing data using Python libraries. Before going further, let us recall some basic concepts of maths and statistics. Follow these resources:

● Mean, Variance, Standard Deviation: read the theory from the book Statistics, 11th Edition by Robert S. Witte, sections 4.1–4.6.

● Correlation in Variables: read the theory from the book Statistics, 11th Edition by Robert S. Witte, Chapter 6.
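The quantities listed above can be computed in a few lines with NumPy. This is a minimal sketch on made-up data; the variable names and numbers are assumptions for illustration, not taken from the book.

```python
import numpy as np

# Made-up data: hours studied and exam scores for five students.
hours = np.array([1.0, 2.0, 3.0, 4.0, 5.0])
scores = np.array([52.0, 55.0, 61.0, 64.0, 70.0])

mean = hours.mean()
variance = hours.var(ddof=1)   # sample variance (divides by n - 1)
std_dev = hours.std(ddof=1)    # sample standard deviation

# Pearson correlation coefficient between the two variables.
r = np.corrcoef(hours, scores)[0, 1]

print(mean, variance, std_dev, r)
```

Note the `ddof=1` argument: without it NumPy divides by n and returns the population variance instead of the sample variance.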

Day 2: Regression Problems

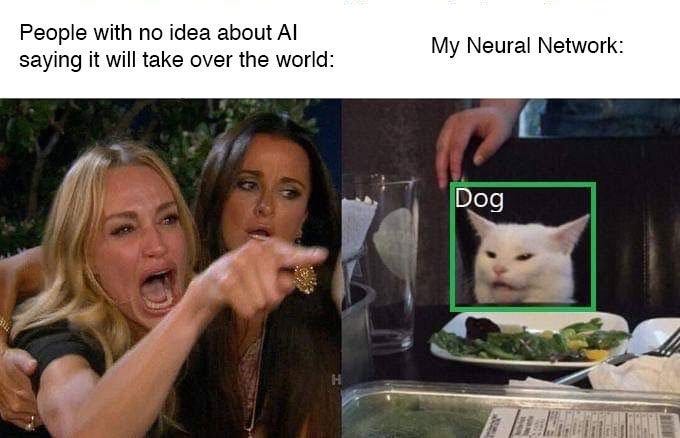

The common problems you will try to solve using supervised machine learning can be categorized as either regression or classification. For example, predicting the price of a house is a regression problem, whereas classifying an image as a dog or a cat is a classification problem. When you are outputting a value (a real number), it is a regression problem, whereas when you are predicting a category (a class), it is a classification problem.

Day 3: Classification using KNNs

K-Nearest Neighbors (KNN) is a simple, non-parametric, lazy learning algorithm used for classification and regression tasks in machine learning. It works by identifying the k closest data points (neighbors) to a given input based on a distance metric, typically Euclidean distance. For classification, the majority class among the neighbors is assigned to the input, while for regression, the average value of the neighbors is used as the prediction.
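The classification procedure described above can be sketched in a few lines. This is a minimal illustration rather than a production implementation; the function name `knn_predict` and the toy data are assumptions made for this example.

```python
from collections import Counter

import numpy as np

def knn_predict(X_train, y_train, x, k=3):
    """Classify x by majority vote among its k nearest training points."""
    distances = np.linalg.norm(X_train - x, axis=1)  # Euclidean distance to each point
    nearest = np.argsort(distances)[:k]              # indices of the k closest points
    votes = Counter(y_train[i] for i in nearest)
    return votes.most_common(1)[0][0]

# Toy data: two well-separated clusters with made-up labels.
X_train = np.array([[1.0, 1.0], [1.0, 2.0], [2.0, 1.0],
                    [6.0, 6.0], [7.0, 6.0], [6.0, 7.0]])
y_train = np.array(["cat", "cat", "cat", "dog", "dog", "dog"])

print(knn_predict(X_train, y_train, np.array([1.5, 1.5])))
print(knn_predict(X_train, y_train, np.array([6.5, 6.5])))
```

There is no training step here, which is what "lazy" means: all the work happens at prediction time.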

Day 4: Probability and Inferential Statistics

Knowledge of probability is always useful in ML algorithms. It might sound like a bit of an overkill, but for the next two days we will revise some concepts in probability. You can use your JEE notes or cover the theory from the book Statistics, 11th Edition by Robert S. Witte, sections 8.7–8.10. Go through important topics like Bayes' Theorem and conditional probability.
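As a quick refresher, Bayes' Theorem says P(A|B) = P(B|A) P(A) / P(B). The sketch below works through one example; the spam scenario and all probabilities in it are made-up numbers, and the function name `posterior` is hypothetical.

```python
def posterior(prior, likelihood, likelihood_given_not):
    """Bayes' Theorem for a binary hypothesis A and an observation B.

    prior                = P(A)
    likelihood           = P(B | A)
    likelihood_given_not = P(B | not A)
    """
    # Total probability of the observation B.
    evidence = likelihood * prior + likelihood_given_not * (1 - prior)
    return likelihood * prior / evidence

# Assumed numbers: 20% of emails are spam, the word "offer" appears in
# 60% of spam emails and in 5% of non-spam emails.
p_spam_given_word = posterior(prior=0.2, likelihood=0.6, likelihood_given_not=0.05)
print(p_spam_given_word)
```

With these numbers the evidence is 0.12 + 0.04 = 0.16, so the posterior is 0.12 / 0.16 = 0.75: seeing the word raises the probability of spam from 20% to 75%.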

Day 5 — Inferential Statistics Continued

Complete the remaining portion of Week 1 and Week 2 of the Inferential Statistics course. You can also use the book Statistics, 11th Edition by Robert S. Witte, as a reference.

Day 6: Naïve Bayes Classifier (both Multinomial and Gaussian)

These are another kind of classifier, which work directly on Bayes' Theorem. For the Multinomial Naïve Bayes Classifier, watch this. Then watch the corresponding Gaussian classifier here.
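To show how Bayes' Theorem turns into a classifier, here is a minimal sketch of the Gaussian variant, assuming the features are conditionally independent and normally distributed within each class. The function names and the toy data are hypothetical, and this is not the implementation used in the linked videos.

```python
import numpy as np

def fit_gaussian_nb(X, y):
    """Estimate the prior, per-feature mean and per-feature variance of each class."""
    params = {}
    for c in np.unique(y):
        Xc = X[y == c]
        # A tiny constant keeps the variance strictly positive.
        params[c] = (len(Xc) / len(X), Xc.mean(axis=0), Xc.var(axis=0) + 1e-9)
    return params

def predict_gaussian_nb(params, x):
    """Return the class with the highest log posterior (up to a shared constant)."""
    best_class, best_score = None, -np.inf
    for c, (prior, mean, var) in params.items():
        log_likelihood = -0.5 * np.sum(np.log(2 * np.pi * var) + (x - mean) ** 2 / var)
        score = np.log(prior) + log_likelihood
        if score > best_score:
            best_class, best_score = c, score
    return best_class

# Toy data: two made-up classes with two features each.
X = np.array([[1.0, 2.1], [1.2, 1.9], [0.8, 2.0],
              [3.0, 4.1], [3.2, 3.9], [2.8, 4.0]])
y = np.array([0, 0, 0, 1, 1, 1])

params = fit_gaussian_nb(X, y)
print(predict_gaussian_nb(params, np.array([1.1, 2.0])))
print(predict_gaussian_nb(params, np.array([3.1, 4.0])))
```

Working with log probabilities avoids numerical underflow when many small likelihoods are multiplied together.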

Day 7: Preparation for Next Week

Towards the end of the week, let us revise some tools in linear algebra. Revise vectors, dot product, outer product of matrices, and eigenvectors from the MTH102 course here.

By now you should have a grasp of what regression means. We have seen that we can use linear regression to predict a continuous variable like house pricing. But what if I want to predict a variable that takes on a yes or no value? For example, if I give the model an email, can it tell me whether it is spam or not? Such problems are called classification problems, and they have very widespread applications, from cancer detection to forensics. This week we will be going over two introductory classification models: logistic regression and decision trees.

Day 1: Overview of cost functions, hypothesis functions and gradient descent

As you may recall, the hypothesis function is the function that our model is supposed to learn by looking at the training data. Once the model is trained, we are going to feed unseen data into the hypothesis function and it is magically going to predict a correct (or nearly correct) answer! The hypothesis function is itself a function of the weights of the model. These are parameters associated with the input features that we can tweak to get the hypothesis closer to the ground truth. But how do we ascertain whether our hypothesis function is good enough? That is the job of the cost function. It gives us a measure of how poorly the hypothesis function is performing in comparison to the ground truth. Here are some commonly used cost functions: https://www.javatpoint.com/cost-function-in-machine-learning .

Gradient descent is simply an algorithm that optimizes the weights of the hypothesis function to minimize the cost function (i.e., to get closer to the actual output).
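The three pieces above (hypothesis, cost, gradient descent) fit together as in the following minimal sketch for linear regression. The data, the learning rate and the number of iterations are assumptions chosen for illustration.

```python
import numpy as np

# Made-up data generated from y = 2x + 1, so the ideal weights are known.
x = np.array([0.0, 1.0, 2.0, 3.0, 4.0])
y = 2.0 * x + 1.0

w, b = 0.0, 0.0          # weights of the hypothesis h(x) = w * x + b
learning_rate = 0.05

def cost(w, b):
    """Mean squared error between the hypothesis and the ground truth."""
    return np.mean((w * x + b - y) ** 2)

initial_cost = cost(w, b)
for _ in range(2000):
    error = w * x + b - y
    grad_w = 2 * np.mean(error * x)   # d(cost)/dw
    grad_b = 2 * np.mean(error)       # d(cost)/db
    w -= learning_rate * grad_w       # step against the gradient
    b -= learning_rate * grad_b
final_cost = cost(w, b)

print(w, b, initial_cost, final_cost)
```

If the learning rate is too large the updates overshoot and the cost grows instead of shrinking, so it is worth printing the cost while experimenting.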

Day 2: Logistic Regression

The logistic regression model is built similarly to the linear regression model, except that now, instead of predicting values in a continuous range, we need to output a binary value, i.e., 0 or 1.

Let's take a step back and try to figure out what our hypothesis should be like. A reasonable claim to make is that our hypothesis is basically the probability that y=1 (here y is the label, i.e., the variable we are trying to predict). If our hypothesis function outputs a value closer to 1, say 0.85, it means it is fairly confident that y should be 1. On the other hand, if the hypothesis outputs 0.13, it means there is a very low probability that y is 1, which means it is likely that y is 0. Now, building upon the concepts from linear regression, how do we restrict (or in the immortal words of 3B1B, “squishify”) the hypothesis function between 0 and 1? We feed the output from the hypothesis function of the linear regression problem into another function that has a domain of all real numbers but a range of (0,1). An ideal function with this property is the logistic function, which looks like this: https://www.desmos.com/calculator/se75xbindy. Hence the name logistic regression.
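The "squishing" step can be sketched as follows. The logistic (sigmoid) function is σ(z) = 1 / (1 + e^(-z)); the weights, bias and input below are made-up values, and the 0.5 decision threshold is a common convention rather than something fixed by the model.

```python
import numpy as np

def sigmoid(z):
    """Logistic function: maps any real number into the open interval (0, 1)."""
    return 1.0 / (1.0 + np.exp(-z))

def hypothesis(x, w, b):
    """Linear regression output passed through the logistic function: P(y = 1 | x)."""
    return sigmoid(np.dot(x, w) + b)

# Illustrative weights, bias and input.
w = np.array([1.5, -0.5])
b = 0.1
x = np.array([2.0, 1.0])

p = hypothesis(x, w, b)     # probability that y = 1
label = int(p >= 0.5)       # assumed threshold of 0.5

print(p, label)
```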

Day 3 & 4: Getting your hands dirty with code

One thing you must always remember is that while learning about new concepts, there is no substitute for actually implementing what you have learnt. Spend these days trying to come up with your own implementation of logistic regression. You may refer to other people's implementations. This will surely not be an easy task, but even if you fail, you will have learnt a lot of new things along the way and gotten a glimpse into the inner workings of machine learning.

Logistic Regression in Python.

Day 5 & 6: Decision Trees

Decision trees are a popular and intuitive type of machine learning algorithm used for both classification and regression tasks. They work by recursively splitting the data into subsets based on the value of input features, creating a tree-like model of decisions. Each internal node represents a test on an attribute, each branch represents the outcome of the test, and each leaf node represents a class label or continuous value (for regression). The goal is to create a model that predicts the target variable by learning simple decision rules inferred from the data features.
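A full tree is built by repeating one step: pick the test that best separates the labels. The sketch below shows that single step for one numeric feature, using Gini impurity as the split criterion. Gini is one common choice (entropy is another), and the function names and data are made up for this example.

```python
def gini(labels):
    """Gini impurity: 0 for a pure node, larger for a more mixed one."""
    n = len(labels)
    return 1.0 - sum((labels.count(c) / n) ** 2 for c in set(labels))

def best_split(values, labels):
    """Try each threshold on one numeric feature and keep the purest split."""
    best_threshold, best_impurity = None, float("inf")
    # Skip the largest value so the right-hand side is never empty.
    for threshold in sorted(set(values))[:-1]:
        left = [l for v, l in zip(values, labels) if v <= threshold]
        right = [l for v, l in zip(values, labels) if v > threshold]
        # Impurity of the split, weighted by the size of each side.
        impurity = (len(left) * gini(left) + len(right) * gini(right)) / len(labels)
        if impurity < best_impurity:
            best_threshold, best_impurity = threshold, impurity
    return best_threshold, best_impurity

# Made-up data: customer ages and whether they bought a product.
ages = [22, 25, 30, 35, 40, 50]
bought = ["no", "no", "no", "yes", "yes", "yes"]

threshold, impurity = best_split(ages, bought)
print(threshold, impurity)
```

A complete decision tree applies `best_split` across all features, splits the data, and recurses on each side until the nodes are pure or a depth limit is reached.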

Advantages of Decision Trees

- Interpretability: Easy to understand and visualize.

- Versatility: Can handle both numerical and categorical data.

- Minimal Data Preparation: Requires little data preprocessing, such as scaling or normalization.

Disadvantages of Decision Trees

- Overfitting: Can create complex trees that do not generalize well to unseen data.

- Instability: Sensitive to small changes in the data, which can result in different splits.

- Complexity: Large trees can become unwieldy and difficult to interpret.

Implementing Decision trees using Python.

Day 7: Revision, Revision and Revision!

Up till now, you have covered some of the basic and most used algorithms in machine learning; next is deep learning (finally, neural networks!). So, revise everything covered so far and get your hands dirty by implementing ML concepts on various datasets.

Finally, solve the Titanic challenge on Kaggle: https://www.kaggle.com/competitions/titanic

The last 3 weeks of this roadmap will be devoted to Neural Networks and their applications.

Day 1: The perceptron and Neural Networks

The ultimate goal of Artificial Intelligence is to mimic the way the human brain works. The brain uses neurons and firing patterns between them to recognize complex relationships between objects. We aim to simulate just that. The algorithm for implementing such an “artificial brain” is called a neural network or an artificial neural network (ANN). At its core, an ANN is a system of classifier units which work together in multiple layers to learn complex decision boundaries.

The basic building block of a neural net is the perceptron. These are the “neurons” of our neural network.
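To give a feel for what a single perceptron does, here is a minimal sketch of the classic perceptron learning rule, trained on the logical AND function. The function names, learning rate and epoch count are assumptions for this example.

```python
import numpy as np

def perceptron_train(X, y, epochs=20, lr=1.0):
    """Classic perceptron rule: nudge the weights whenever a prediction is wrong."""
    w = np.zeros(X.shape[1])
    b = 0.0
    for _ in range(epochs):
        for xi, target in zip(X, y):
            prediction = 1 if np.dot(w, xi) + b > 0 else 0   # step activation
            update = lr * (target - prediction)              # zero when correct
            w += update * xi
            b += update
    return w, b

def perceptron_predict(X, w, b):
    return [1 if np.dot(w, xi) + b > 0 else 0 for xi in X]

# Truth table of logical AND, which is linearly separable.
X = np.array([[0.0, 0.0], [0.0, 1.0], [1.0, 0.0], [1.0, 1.0]])
y = np.array([0, 0, 0, 1])

w, b = perceptron_train(X, y)
print(perceptron_predict(X, w, b))
```

A single perceptron can only learn linearly separable problems such as AND; a function like XOR needs several units arranged in layers, which is what a neural network provides.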

First video of the NN series by 3Blue1Brown.

Day 2: Backpropagation

Now you might be wondering how to train a neural network. We are still going to be using gradient descent (and its optimized forms), but to calculate the gradients with respect to the weights we will be using a method called backpropagation.

To get a visual understanding, you are encouraged to watch the remaining 3 videos of 3B1B's series on Neural Networks.
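Backpropagation is the chain rule applied layer by layer, from the output back to the input. The sketch below computes the gradients for a tiny network with one tanh hidden layer and checks one of them against a numerical estimate. The network shape, the squared-error loss and the random data are all assumptions for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
x = rng.normal(size=(4, 3))     # 4 made-up samples with 3 features
y = rng.normal(size=(4, 1))     # made-up regression targets
W1 = rng.normal(size=(3, 5))    # input -> hidden weights
W2 = rng.normal(size=(5, 1))    # hidden -> output weights

def forward(W1, W2):
    h = np.tanh(x @ W1)                      # hidden activations
    y_hat = h @ W2                           # network output
    loss = 0.5 * np.mean((y_hat - y) ** 2)   # half mean squared error
    return h, y_hat, loss

def backward(W1, W2):
    h, y_hat, _ = forward(W1, W2)
    d_y_hat = (y_hat - y) / len(x)   # d(loss)/d(y_hat)
    grad_W2 = h.T @ d_y_hat          # chain rule through the output layer
    d_h = d_y_hat @ W2.T             # propagate the error back to the hidden layer
    d_pre = d_h * (1 - h ** 2)       # tanh'(z) = 1 - tanh(z)^2
    grad_W1 = x.T @ d_pre            # chain rule through the hidden layer
    return grad_W1, grad_W2

grad_W1, grad_W2 = backward(W1, W2)

# Numerical check of one entry using a central difference.
eps = 1e-6
W1_plus, W1_minus = W1.copy(), W1.copy()
W1_plus[0, 0] += eps
W1_minus[0, 0] -= eps
numeric = (forward(W1_plus, W2)[2] - forward(W1_minus, W2)[2]) / (2 * eps)

print(abs(numeric - grad_W1[0, 0]))
```

Comparing analytic gradients with a finite-difference estimate like this is a handy way to debug your own from-scratch implementation.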

Day 3 & 4 — Implementation

Dedicate these two days to implementing a neural network in Python from scratch. Try to have one input, one hidden and one output layer.

I found this playlist where you will learn how to implement an NN using NumPy.

Day 5, 6 & 7 — PyTorch

We will now start building models based on neural networks, like ANN, CNN, RNN, LSTM and so on. For building these models, we have to choose one deep learning framework, such as PyTorch, TensorFlow or JAX.

I personally recommend PyTorch. You can check out my deep learning series using PyTorch on Medium itself.

PyTorch is an open-source machine learning library developed by Facebook's AI Research lab (FAIR). It is widely used for deep learning applications such as computer vision and natural language processing. PyTorch provides a flexible and efficient framework for building and training neural networks, offering dynamic computation graphs that allow for more intuitive and Pythonic development.

Link to the official PyTorch website.

Check out this playlist to learn the basics of the framework. You should understand the training pipeline, loss functions, different types of optimizers, data loaders and data transforms, activation functions and so on.

Day 1: Introduction to Computer Vision

Now that you have understood the basics of logistic regression, PyTorch and image processing, it is time to further our understanding and move into the domain of Computer Vision. Computer Vision is all about giving 1s and 0s the ability to see. A Convolutional Neural Network (CNN) is a deep learning algorithm that can take in input images and differentiate one from the other, or identify some objects in the image.

Convolution is a mathematical operation to merge two sets of information.
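In a CNN, the two sets of information are an image and a small kernel (filter) that slides over it. Here is a minimal sketch of that sliding-window operation without padding or stride; strictly speaking it is cross-correlation (the kernel is not flipped), which is what deep learning libraries usually implement under the name convolution. The function name, image and kernel are made up for this example.

```python
import numpy as np

def conv2d_valid(image, kernel):
    """Slide the kernel over the image and sum the element-wise products."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

# A 4x4 image that is dark on the left and bright on the right.
image = np.array([[0.0, 0.0, 1.0, 1.0],
                  [0.0, 0.0, 1.0, 1.0],
                  [0.0, 0.0, 1.0, 1.0],
                  [0.0, 0.0, 1.0, 1.0]])

# A simple vertical-edge detector.
kernel = np.array([[-1.0, 1.0],
                   [-1.0, 1.0]])

feature_map = conv2d_valid(image, kernel)
print(feature_map)
```

The feature map is non-zero only in the middle column, exactly where the image changes from dark to bright. A CNN learns the kernel values instead of having them hand-picked like this.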

This blog is great for understanding the basics of CNNs.

Day 2

Now it is time to revise what we did yesterday and implement part of it in one of the applications.

The assignments of the course are available in this GitHub repository. You can clone the whole repository and open the C4 convolutional neural networks folder, followed by week 1. You will find all the programming assignments there. GitHub Repository. Please check out the issues section of this repository as well.

Day 3

This completes our basic understanding of CNNs. Now, let's understand some basic network architectures that are used to implement CNNs. In this roadmap, for this week, we will ask you to cover Residual Networks only; however, to get a detailed knowledge of the topic, you can read about the other networks (MobileNet, etc.) later on.

For day 3, watch the week 2 lectures on ResNet (first 5 videos of week 2) of that course on Coursera and solve the first assignment on residual networks (using the same GitHub repository).

Day 4 & 5

Watch the first 9 lectures of week 3 (until the YOLO detection algorithm) and solve the assignment on car detection with YOLO. A nice blog on the working of YOLO is available here. You can refer to it alongside the course. Read Here

YOLOv1 from Scratch — YouTube tutorial

Day 6 & 7

Cover the remaining portion of week 3 and try to do the assignment on image segmentation with U-Net from the GitHub repository.

Additional sources

- https://www.youtube.com/playlist?list=PLoROMvodv4rMiGQp3WXShtMGgzqpfVfbU

- https://www.coursera.org/specializations/deep-learning

The field of Machine Learning is vast and continuously evolving. By following this roadmap, you can build a strong foundation, focus on cutting-edge technologies, and stay abreast of the latest trends in 2024. Remember, consistency and curiosity are key to mastering ML. Happy learning!