Introduction to Chatbots:

Chatbots are computer programs designed to simulate human conversation. They can respond to queries automatically and interact with people through text or voice. Chatbots are used in a variety of industries, including customer service, e-commerce, healthcare, education, and entertainment, to simplify interactions, improve user experience, and reduce the workload on humans. They can handle a wide range of tasks, from answering basic questions to managing complex transactions and offering tailored recommendations.

In this blog, we will guide you through the process of creating a knowledge base chatbot using Python.

A Step-by-Step Guide :

When starting your chatbot project, it is important to choose the right development environment. Here are a few popular choices:

- VS Code: A simple and versatile code editor with many helpful tools for Python.

- Jupyter: An interactive tool great for trying out code, making charts, and writing notes.

- PyCharm: A powerful IDE made for Python with good features like code checking and debugging.

For chatbot development, we recommend using VS Code. It strikes a balance between ease of use and powerful features, making it suitable for both beginners and experienced developers. With its extensive extension library, you can customize it to fit your specific needs.

Start by carefully choosing the data that your chatbot will use. Consider the queries that will be posed and make sure the knowledge base is reliable and correct. This step is essential to prevent inaccurate responses.

Sources for Your Chatbot’s Data :

You can gather data for your chatbot from these sources:

- Text Files: Documents, articles, or written content. Text can be extracted using libraries like pdfplumber and then cleaned and structured.

- Databases: Stores of structured information. Query languages like SQL are used to retrieve specific data sets.

- APIs: Interfaces for fetching data from online services. APIs typically require authentication and the use of specific endpoints to access and retrieve data in a structured format.
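To make the first two sources concrete, here is a minimal sketch using only the Python standard library. It assumes the text has already been extracted (for example from a PDF), and the `faq` table, its columns, and the sample rows are hypothetical placeholders rather than part of any real knowledge base.

```python
import re
import sqlite3


def clean_text(raw: str) -> str:
    """Collapse runs of whitespace (including newlines) and trim the ends."""
    return re.sub(r"\s+", " ", raw).strip()


def split_into_chunks(text: str, max_words: int = 50) -> list[str]:
    """Split cleaned text into chunks of at most max_words words."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def fetch_faq_rows(conn: sqlite3.Connection, topic: str) -> list[tuple[str, str]]:
    """Retrieve question/answer pairs for one topic with a parameterized SQL query."""
    cursor = conn.execute(
        "SELECT question, answer FROM faq WHERE topic = ?", (topic,)
    )
    return cursor.fetchall()


# Hypothetical in-memory database standing in for a real data source.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE faq (topic TEXT, question TEXT, answer TEXT)")
conn.execute(
    "INSERT INTO faq VALUES (?, ?, ?)",
    ("billing", "How do I get a refund?", "Contact support within 30 days."),
)

raw = "Refunds   are processed\n\nwithin 30 days.  "
print(split_into_chunks(clean_text(raw), max_words=3))
print(fetch_faq_rows(conn, "billing"))
```

Splitting text into small chunks is a common preparation step before generating embeddings, although the right chunk size depends on your data and model.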

What is an Embedding?

An embedding in natural language processing (NLP) is a way to represent words or sentences as numerical vectors. These vectors capture semantic relationships and context, allowing algorithms to understand and process language more effectively.
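As a small illustration of how vectors can capture semantic relationships, the sketch below compares toy vectors with cosine similarity. The three-dimensional vectors are hand-made for the example and are not produced by any real embedding model, which would typically use hundreds of dimensions.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Return the cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hand-made toy vectors (illustrative values only).
embeddings = {
    "cat": [0.9, 0.8, 0.1],
    "dog": [0.8, 0.9, 0.2],
    "car": [0.1, 0.2, 0.9],
}

# Related words should score closer to 1.0 than unrelated ones.
print(cosine_similarity(embeddings["cat"], embeddings["dog"]))
print(cosine_similarity(embeddings["cat"], embeddings["car"]))
```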

Types of Embeddings :

- Word Embeddings: Word2Vec and GloVe create vectors based on word co-occurrences in text, capturing semantic meanings.

- Contextual Word Embeddings: ELMo and BERT generate embeddings that change based on word context within sentences, improving understanding of nuanced meanings.

- Document Embeddings: Doc2Vec (an implementation of Paragraph Vectors) creates fixed-length vectors for documents, reflecting the semantic content of the words within them.

- Image Embeddings: CNNs such as ResNet generate embeddings for images, capturing visual features.

- Graph and Knowledge Graph Embeddings: Node2Vec embeds the nodes of a graph, and models like TransE embed entities and relations in knowledge graphs, useful for tasks involving structured data.

Hugging Face also offers models that are suitable for generating embeddings in natural language processing tasks. You can explore them on the Hugging Face Hub.

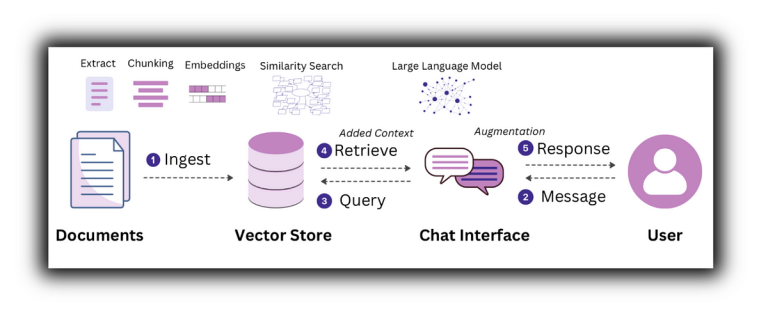

Once embeddings are generated, keeping them in a vector store such as Qdrant ensures that they can be used and retrieved easily without being regenerated. This saves computational resources and allows faster retrieval and use of embeddings for applications such as similarity search.
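To show the store-once, search-many idea, here is a toy in-memory vector store. It is a stand-in written for this post and does not use Qdrant's actual client API; the class name, method names, and sample vectors are all assumptions made for illustration.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


class InMemoryVectorStore:
    """Toy stand-in for a vector store (not Qdrant's API)."""

    def __init__(self) -> None:
        self._items: dict[str, tuple[list[float], str]] = {}

    def upsert(self, item_id: str, vector: list[float], text: str) -> None:
        """Insert a vector, or overwrite the one stored under the same id."""
        self._items[item_id] = (vector, text)

    def search(self, query: list[float], top_k: int = 1) -> list[tuple[str, float]]:
        """Return the top_k stored texts ranked by similarity to the query."""
        scored = [(text, cosine(query, vector)) for vector, text in self._items.values()]
        scored.sort(key=lambda pair: pair[1], reverse=True)
        return scored[:top_k]


store = InMemoryVectorStore()
store.upsert("doc-1", [0.9, 0.1], "Refund policy")
store.upsert("doc-2", [0.1, 0.9], "Shipping times")

best_text, best_score = store.search([0.8, 0.2], top_k=1)[0]
print(best_text)  # Refund policy
```

A real vector store adds persistence and approximate nearest-neighbor indexing, so it scales far beyond this linear scan.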

Overview of LLMs

Large Language Models (LLMs) are advanced AI models trained on vast amounts of text data to understand and generate human-like language. They excel in tasks such as text generation, translation, and understanding context in natural language.

Types of LLMs :

- Autoregressive Models: These models generate text one token at a time, using the previously generated tokens as a basis. OpenAI’s GPT series is an example. Google’s BERT, by contrast, is a masked language model that reads context in both directions and is mainly used for understanding text rather than generating it.

- Conditional Generative Models: These models produce text based on an input, like a prompt or context. Applications like text completion and text generation with certain properties or styles frequently make use of them.
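The generation loop behind both descriptions can be sketched with a toy lookup table in place of a trained model. The `NEXT_TOKEN` table is hypothetical, and unlike a real LLM, which conditions on the whole sequence and samples from a probability distribution, this sketch looks only at the last token.

```python
# Hypothetical next-token table standing in for a trained model.
NEXT_TOKEN = {
    "<start>": "the",
    "the": "bot",
    "bot": "answers",
    "answers": "<end>",
}


def generate(prompt: list[str], max_new_tokens: int = 10) -> list[str]:
    """Extend the prompt one token at a time until <end> or the token limit."""
    tokens = list(prompt)
    for _ in range(max_new_tokens):
        previous = tokens[-1] if tokens else "<start>"
        next_token = NEXT_TOKEN.get(previous, "<end>")
        if next_token == "<end>":
            break
        # The new token becomes part of the input for the next step.
        tokens.append(next_token)
    return tokens


print(generate([]))               # ['the', 'bot', 'answers']
print(generate(["bot"], 1))       # ['bot', 'answers']
```

Passing a non-empty `prompt` is the conditional part: the output depends on the input the caller supplies.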

How Do LLMs Work?

Large language models (LLMs) such as Llama, Claude, and Gemma work as follows:

- Learning from Text: These models begin by learning from a huge amount of text from the internet. It’s like studying from a huge library of knowledge.

- Unique Architecture: They use a special structure called a transformer, which helps them understand and retain large amounts of information effectively.

- Breaking Down Language: LLMs break sentences into smaller units called tokens, which can be whole words or pieces of words. This makes it easier for them to work with language.

- Understanding Context: Unlike simple programs, LLMs model not only individual words but also how words fit together in sentences, which lets them capture the meaning of a text as a whole.

- Specialized Training: After learning broadly, they can receive additional training (fine-tuning) for specific tasks, like answering questions or writing about specific topics.

- Performing Tasks: When given a prompt (a question or instruction), they use what they have learned to generate responses. It’s akin to having an intelligent assistant that comprehends and creates text.
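The "breaking words into pieces" step can be illustrated with a greedy longest-match splitter. This is a simplified sketch: the tiny `VOCAB` set is made up for the example, and real tokenizers learn vocabularies of many thousands of pieces with algorithms such as byte-pair encoding.

```python
# Hypothetical, hand-picked vocabulary of word pieces.
VOCAB = {"chat", "bot", "s", "un", "help", "ful"}


def tokenize_word(word: str, vocab: set[str]) -> list[str]:
    """Split a word into the longest vocabulary pieces, scanning left to right."""
    pieces = []
    start = 0
    while start < len(word):
        end = len(word)
        # Shrink the candidate piece until it is found in the vocabulary.
        while end > start and word[start:end] not in vocab:
            end -= 1
        if end == start:
            # No vocabulary entry matches: fall back to a single character.
            end = start + 1
        pieces.append(word[start:end])
        start = end
    return pieces


print(tokenize_word("chatbots", VOCAB))   # ['chat', 'bot', 's']
print(tokenize_word("unhelpful", VOCAB))  # ['un', 'help', 'ful']
```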

When choosing the right LLM for your chatbot, keep these factors in mind:

- Task Specificity: Pick a model that fits the specific tasks your chatbot needs to perform, like generating responses, understanding complex queries, or translating languages.

- Computational Resources: Check the model’s size and how much computing power it requires. Make sure it runs smoothly on your infrastructure or cloud environment without slowing down. Consider both CPU and GPU capabilities.

- Performance Metrics: Look at how well the model performs on tasks relevant to your needs. Check metrics such as accuracy in understanding language and fluency in generating text.

- Open-Source Community: Choose a model with an active community of developers. This makes continued updates and improvements more likely, keeping your chatbot compatible with the latest developments in natural language processing.
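For the computational resources check, a rough first estimate is the memory needed just to hold the model weights: the number of parameters multiplied by the bytes used per parameter. The sketch below covers weights only and ignores activations, caches, and framework overhead, so treat the result as a lower bound rather than a precise requirement.

```python
# Bytes needed to store one parameter at common numeric precisions.
BYTES_PER_PARAMETER = {"float32": 4, "float16": 2, "int8": 1, "int4": 0.5}


def estimate_weight_memory_gb(num_parameters: float, dtype: str = "float16") -> float:
    """Estimate the memory for model weights alone, in gigabytes (10^9 bytes)."""
    return num_parameters * BYTES_PER_PARAMETER[dtype] / 1e9


# Example: a model with 8 billion parameters.
for dtype in ("float32", "float16", "int4"):
    print(dtype, estimate_weight_memory_gb(8e9, dtype), "GB")
```

For an 8-billion-parameter model this gives about 32 GB at float32, 16 GB at float16, and 4 GB at 4-bit quantization, which is why quantized models are popular on consumer hardware.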

Examples of Open-Source LLMs :

Setup Guide:

- Downloading the Model: Obtain the desired LLM from repositories like Hugging Face or GitHub. For LLaMA3, visit the official site or dedicated repositories.

- Installing Dependencies: Install the necessary libraries (e.g., transformers, TensorFlow or PyTorch) using tools like pip, ensuring compatibility with your Python environment.

- Configuration: Adjust model parameters (e.g., input size, memory allocation) through configuration files or APIs described in the model’s documentation.

- Integration: Integrate your LLM either by calling its API endpoint or by running it locally and invoking it within your script.
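The API route in the integration step could look roughly like the sketch below, which uses only the standard library. The URL, the `prompt` and `max_tokens` request fields, and the `text` response field are assumptions for illustration; every LLM server defines its own schema, so check your server's documentation.

```python
import json
import urllib.request

# Hypothetical endpoint of a locally running LLM server.
API_URL = "http://localhost:8000/generate"


def build_request(prompt: str, max_tokens: int = 128) -> urllib.request.Request:
    """Build a JSON POST request; the field names are assumed, not standard."""
    payload = json.dumps({"prompt": prompt, "max_tokens": max_tokens}).encode("utf-8")
    return urllib.request.Request(
        API_URL,
        data=payload,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask_llm(prompt: str) -> str:
    """Send the prompt and return the generated text (needs a running server)."""
    with urllib.request.urlopen(build_request(prompt), timeout=30) as response:
        body = json.loads(response.read().decode("utf-8"))
    return body["text"]  # assumed response field


request = build_request("What is your refund policy?")
print(request.get_method(), request.full_url)
```

Keeping request construction separate from sending makes the payload easy to inspect and test without a live server.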

Why Docker?

Docker simplifies the deployment of LLMs by providing a consistent environment across different systems, which helps with reproducibility and scalability. It also isolates dependencies, making deployment more reliable and efficient.

Setting Up Docker:

- Install Docker: If not already installed, download Docker from Docker’s official site and follow the installation instructions for your operating system.

- Pulling LLM Containers: Many LLMs are available as pre-built Docker images. You can search for the desired LLM image on Docker Hub or another container registry. For example, models like Hugging Face Transformers or LLaMA3 may have pre-configured Docker images.

- Running the Container: Use Docker commands (`docker pull` and `docker run`) to pull and run the LLM container. Configure the container as needed for your specific application, such as setting environment variables or exposing ports.

- API Integration: If the LLM container provides an API endpoint, integrate it into your chatbot framework by making HTTP requests to the endpoint. Make sure the API configuration aligns with your application’s requirements.
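If you launch the container from a Python script, one way to keep the configuration readable is to assemble the `docker run` arguments in a small helper. The image name, container name, port, and environment variable below are hypothetical placeholders; substitute the values documented for the image you actually use.

```python
import shlex


def docker_run_command(
    image: str,
    name: str,
    ports: dict[int, int],
    env: dict[str, str],
) -> list[str]:
    """Assemble a detached `docker run` command with port and env settings."""
    command = ["docker", "run", "-d", "--name", name]
    for host_port, container_port in ports.items():
        # -p maps a host port to a port inside the container.
        command += ["-p", f"{host_port}:{container_port}"]
    for key, value in env.items():
        # -e sets an environment variable inside the container.
        command += ["-e", f"{key}={value}"]
    command.append(image)
    return command


command = docker_run_command(
    image="example/llm-server:latest",  # hypothetical image name
    name="llm",
    ports={8000: 8000},
    env={"MODEL_NAME": "my-model"},     # hypothetical variable
)
print(shlex.join(command))
```

The resulting list can be passed to `subprocess.run(command, check=True)` on a machine where Docker is installed.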

Using Docker streamlines the deployment process, reducing setup time and complexity while maintaining flexibility and scalability for your LLM-powered chatbot.

- Define Prompt Templates: Create structured prompts that guide the LLM on what information to generate based on user queries.

- Modify Integration: Update your chatbot scripts to include these prompt templates, ensuring they dynamically generate prompts based on user inputs.

- Process Responses: Implement logic to handle and refine LLM-generated responses for accuracy and relevance.

- Testing and Refinement: Test the prompts thoroughly, iterate based on user feedback, and refine to improve chatbot performance over time.
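The first three steps above can be sketched with Python's built-in `string.Template`. The template wording, the function names, and the fallback message are illustrative assumptions; the right phrasing for your chatbot is something to find through the testing step.

```python
from string import Template

# Illustrative template; tune the wording for your own model and data.
PROMPT_TEMPLATE = Template(
    "Answer the question using only the context below. "
    "If the context does not contain the answer, say you do not know.\n\n"
    "Context:\n$context\n\n"
    "Question: $question\n"
    "Answer:"
)


def build_prompt(question: str, context_chunks: list[str]) -> str:
    """Fill the template with the user question and retrieved context."""
    context = "\n".join(f"- {chunk}" for chunk in context_chunks)
    return PROMPT_TEMPLATE.substitute(context=context, question=question.strip())


def clean_response(raw: str, fallback: str = "I do not know.") -> str:
    """Trim whitespace and substitute a fallback for empty model output."""
    text = raw.strip()
    return text if text else fallback


prompt = build_prompt("  How long do refunds take? ", ["Refunds take 30 days."])
print(prompt)
print(clean_response("  Refunds take 30 days.\n"))
```

Telling the model to rely only on the supplied context, and to admit when the answer is missing, is a common way to try to limit invented answers.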

By integrating prompt engineering, your chatbot can deliver more relevant and useful responses to user queries, and it may also reduce hallucinations.

Conclusion :

In this blog, we’ve outlined the steps to create a Python-based knowledge chatbot using LLMs like GPT and LLaMA3: setting up Python, gathering data, and deploying via Docker. Integration via API endpoints or local scripts was discussed, alongside methods to address LLM inaccuracies (hallucinations) through fine-tuning, context filtering, and human review. Combining a knowledge base, embeddings, and an LLM in this way follows the retrieval-augmented generation (RAG) pattern, which helps make chatbot interactions more reliable.