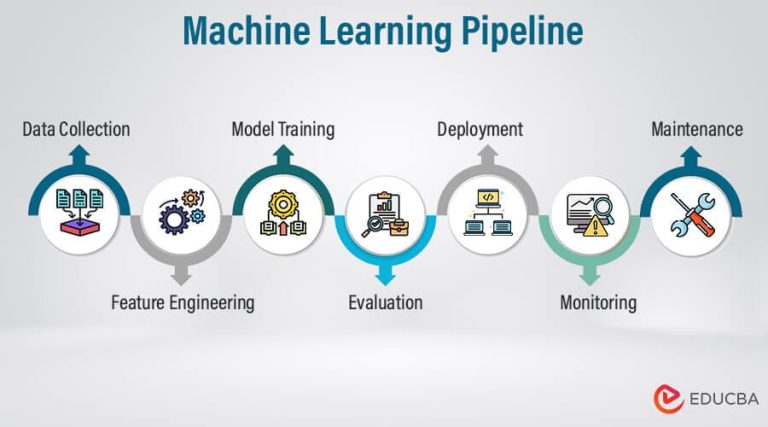

Machine studying (ML) has revolutionized numerous industries by enabling programs to study from knowledge and make clever choices. Constructing an efficient ML mannequin entails a collection of steps, collectively generally known as the ML pipeline. This weblog will information you thru every stage of the ML pipeline, offering each technical explanations and layman’s phrases with real-world examples.

Technical Rationalization: Knowledge assortment is the method of gathering uncooked knowledge from numerous sources. This knowledge may be structured (like databases) or unstructured (like textual content, photographs, or movies). Knowledge can come from APIs, internet scraping, sensors, or guide entry.

Layman’s Phrases: Consider knowledge assortment as gathering components for a recipe. Simply as you want completely different components to cook dinner a meal, you want numerous forms of knowledge to construct a machine-learning mannequin. For example, in the event you’re constructing a mannequin to foretell home costs, you would possibly accumulate knowledge on home sizes, places, variety of bedrooms, and so forth.

Sources of knowledge embrace:

- APIs

- Net Scraping

- Sensors and IoT Gadgets

- Handbook Entry

- Public Datasets

- Batch Assortment: That is the method of Gathering knowledge in giant batches at particular intervals. It’s Appropriate for static datasets the place knowledge doesn’t change often, corresponding to historic knowledge evaluation.

- Actual-Time Assortment: That is the method of Repeatedly amassing knowledge as it’s generated. It’s Excellent for purposes that require up-to-the-minute data, like monetary buying and selling programs or real-time monitoring programs.

- Knowledge High quality

- Knowledge Quantity

- Knowledge Selection

- Knowledge Privateness

Technical Rationalization: Knowledge preprocessing entails cleansing and remodeling the uncooked knowledge right into a format appropriate for evaluation. This step contains dealing with lacking values, normalizing knowledge, encoding categorical variables, and splitting the information into coaching and testing units.

Layman’s Phrases: Knowledge preprocessing is like washing and chopping the components earlier than cooking. It’s good to clear the information, fill in any lacking items, and ensure it’s in a constant format. For instance, changing all textual content to lowercase or turning classes into numbers.

Key Steps in Knowledge Preprocessing:

- Knowledge Cleansing:

- Dealing with Lacking Values: Strategies embrace eradicating information with lacking values, imputing lacking values utilizing statistical strategies (imply, median), or utilizing superior imputation methods like Okay-Nearest Neighbors (KNN).

- Eradicating Duplicates: Figuring out and eradicating duplicate information to make sure knowledge integrity.

- Noise Removing: Filtering out outliers or irrelevant knowledge factors that may skew the evaluation.

2. Knowledge Transformation:

- Normalization/Standardization: Scaling numerical options to an ordinary vary, typically [0, 1] or [-1, 1], to make sure that all options contribute equally to the mannequin.

- Encoding Categorical Variables: Changing categorical knowledge into numerical format utilizing methods like one-hot encoding, label encoding, or binary encoding.

3. Function Scaling:

- Normalization: Scaling knowledge to a spread of [0, 1].

- Standardization: Scaling knowledge to have a imply of 0 and an ordinary deviation of 1.

4. Knowledge Splitting:

- Coaching and Testing Units: Dividing the dataset into coaching and testing subsets, sometimes in an 80–20 or 70–30 break up, to judge mannequin efficiency on unseen knowledge.

- Validation Set: In some instances, an extra validation set is used to fine-tune mannequin parameters.

5. Dimensionality Discount:

- Principal Part Evaluation (PCA): Lowering the variety of options whereas retaining many of the variance within the knowledge.

- Function Choice: Choosing essentially the most related options based mostly on statistical strategies or model-based approaches.

Technical Rationalization: Function engineering is the method of choosing, modifying, or creating new options from the uncooked knowledge that may enhance the efficiency of the ML mannequin. This step typically entails area information and creativity.

Layman’s Phrases: Function engineering is like deciding which components and cooking methods will convey out one of the best flavors in your dish. You would possibly want to mix, take away, or create new components to enhance the recipe.

Key Steps in Function Engineering:

- Function Choice:

- Relevance: Determine and choose options which have a big impression on the goal variable.

- Correlation Evaluation: Use statistical strategies to seek out correlations between options and the goal variable.

- Dimensionality Discount: Apply methods like Principal Part Evaluation (PCA) to cut back the variety of options whereas retaining vital data.

2. Function Transformation:

- Normalization/Standardization: Scale options to a standard vary or distribution.

- Encoding Categorical Variables: Convert categorical knowledge into numerical format utilizing one-hot encoding, label encoding, and so on.

- Polynomial Options: Create new options by combining present ones via polynomial expressions.

3. Function Creation:

- Area-Particular Options: Use area information to create new options that seize important elements of the information.

- Aggregation: Create options by aggregating knowledge factors, corresponding to calculating averages, sums, or counts over particular intervals.

- Interplay Options: Mix two or extra options to seize interactions that could be predictive.

4. Function Extraction:

- Textual content Knowledge: Use methods like TF-IDF, phrase embeddings, or subject modeling to extract significant options from textual content.

- Picture Knowledge: Use convolutional neural networks (CNNs) or different methods to extract options from photographs.

- Time Collection Knowledge: Extract options corresponding to developments, seasonality, or autocorrelation from time collection knowledge.

Technical Rationalization: Mannequin choice entails selecting the suitable machine studying algorithm to coach in your knowledge. Widespread algorithms embrace linear regression, choice timber, help vector machines, and neural networks. The selection will depend on the issue sort (classification or regression), knowledge measurement, and complexity.

Layman’s Phrases: Mannequin choice is like selecting the best cooking methodology. Whether or not you’re baking, frying, or grilling, every methodology fits several types of meals. Equally, completely different ML algorithms are higher suited to several types of issues.

Key Issues in Mannequin Choice:

- Nature of the Drawback:

- Classification: Fashions like Logistic Regression, Resolution Timber, Random Forests, Help Vector Machines (SVM), and Neural Networks are widespread.

- Regression: Fashions like Linear Regression, Ridge Regression, Lasso Regression, Resolution Timber, Random Forests, and Neural Networks are used.

- Clustering: Fashions like Okay-Means, Hierarchical Clustering, and DBSCAN are typical.

- Dimensionality Discount: Strategies like Principal Part Evaluation (PCA) and t-Distributed Stochastic Neighbor Embedding (t-SNE) are employed.

2. Knowledge Dimension and Complexity:

- Small Datasets: Easier fashions corresponding to Linear Regression or Resolution Timber might suffice.

- Massive Datasets: Extra advanced fashions like Deep Neural Networks or Ensemble Strategies could also be required.

- Excessive-Dimensional Knowledge: Fashions that may deal with many options, corresponding to Lasso Regression, Ridge Regression, or dimensionality discount methods, are most well-liked.

3. Mannequin Complexity:

- Bias-Variance Tradeoff: Easy fashions (excessive bias, low variance) vs. advanced fashions (low bias, excessive variance).

- Overfitting and Underfitting: Guaranteeing the mannequin generalizes effectively with out becoming too intently to the coaching knowledge.

4. Efficiency Metrics:

- Classification: Accuracy, Precision, Recall, F1-Rating, ROC-AUC.

- Regression: Imply Absolute Error (MAE), Imply Squared Error (MSE), Root Imply Squared Error (RMSE), R-squared.

- Clustering: Silhouette Rating, Davies-Bouldin Index.

5. Computational Effectivity:

- Coaching Time: Time required to coach the mannequin.

- Inference Time: Time required to make predictions utilizing the skilled mannequin.

- Useful resource Utilization: Reminiscence and computational energy required.

- Linear Regression: Predicting steady values with a linear relationship between options and the goal variable. e. g. Predicting home costs based mostly on measurement, location, and variety of bedrooms.

- Logistic Regression: Binary classification issues the place the output is a binary variable. e. g. Predicting whether or not an e mail is spam or not.

- Resolution Timber: Each classification and regression duties, appropriate for datasets with non-linear relationships. e. g. Predicting mortgage default danger based mostly on buyer knowledge.

- Random Forest: Ensemble methodology for each classification and regression, improves accuracy and reduces overfitting. e. g. Predicting buyer churn in a telecom firm.

- Help Vector Machines (SVM): Classification duties with clear margin separation between lessons. e. g. Handwritten digit recognition.

- Neural Networks: Advanced duties with giant datasets, particularly for picture, textual content, and speech knowledge. e. g. Picture recognition in self-driving vehicles.

- Okay-Means Clustering: Unsupervised studying for grouping related knowledge factors. e. g. Buyer segmentation based mostly on buying habits.

- Perceive the Drawback: Clearly outline the issue and decide the kind of process (classification, regression, clustering, and so on.).

- Begin Easy: Start with easier fashions to determine a baseline earlier than shifting to extra advanced algorithms.

- Cross-Validation: Use methods like k-fold cross-validation to judge mannequin efficiency and keep away from overfitting.

- Examine A number of Fashions: Prepare and evaluate a number of fashions to seek out one of the best performer based mostly on analysis metrics.

- Hyperparameter Tuning: Optimize mannequin parameters to boost efficiency.

- Area Data: Leverage area information to tell mannequin choice and have engineering.

Technical Rationalization: Mannequin coaching is the method of feeding the ready knowledge into the chosen algorithm to study patterns and relationships. The mannequin adjusts its parameters to attenuate error and enhance prediction accuracy.

Layman’s Phrases: Mannequin coaching is like working towards a sport. The extra you apply, the higher you get. Right here, the ML mannequin practices by studying from the coaching knowledge, adjusting itself to enhance its efficiency.

Sorts of Mannequin Coaching:

- Supervised Studying: e. g. Linear Regression, Logistic Regression, Resolution Timber, Help Vector Machines (SVM), Neural Networks.

- Unsupervised Studying: e. g. Okay-Means Clustering, Hierarchical Clustering, Principal Part Evaluation (PCA), t-Distributed Stochastic Neighbor Embedding (t-SNE).

- Semi-Supervised Studying :e. g. self-training, Co-training, Generative Adversarial Networks (GANs).

- Reinforcement Studying: e. g. Q-Studying, Deep Q-Networks (DQN), Coverage Gradient strategies.

- Knowledge Preparation:

- Splitting the Knowledge: Divide the dataset into coaching, validation, and testing units.

- Knowledge Augmentation: For sure duties like picture recognition, increase the information to extend variety with out amassing new knowledge.

2. Mannequin Initialization:

- Select a Mannequin: Choose the algorithm that most accurately fits the issue (e.g., Linear Regression, Resolution Tree).

- Set Parameters: Initialize the mannequin’s parameters, corresponding to weights and biases for neural networks.

3. Coaching the Mannequin:

- Ahead Propagation: Enter knowledge is fed into the mannequin to make predictions.

- Loss Calculation: Calculate the error or loss by evaluating predictions to precise outcomes utilizing a loss perform.

- Backward Propagation: Regulate the mannequin’s parameters to attenuate the loss. Strategies like gradient descent are used to replace the parameters.

4. Validation:

- Analysis on Validation Set: Assess the mannequin’s efficiency on a separate validation set to fine-tune hyperparameters and keep away from overfitting.

- Cross-Validation: Use methods like k-fold cross-validation to make sure strong mannequin analysis.

5. Testing:

- Ultimate Analysis: Take a look at the mannequin on the unseen testing set to judge its generalization efficiency.

- Efficiency Metrics: Use applicable metrics like accuracy, precision, recall, F1-score for classification, or imply squared error (MSE) for regression.

- Guarantee Knowledge High quality: Clear and preprocess knowledge to take away noise and inconsistencies.

- Use Sufficient Coaching Knowledge: Guarantee you will have sufficient knowledge to coach the mannequin successfully.

- Keep away from Overfitting: Use methods like cross-validation, regularization, and dropout (for neural networks) to stop the mannequin from becoming too intently to the coaching knowledge.

- Monitor Coaching Course of: Observe metrics like loss and accuracy throughout coaching to detect points early.

- Hyperparameter Tuning: Experiment with completely different hyperparameters to seek out the optimum settings in your mannequin.

Technical Rationalization: Mannequin analysis entails assessing the skilled mannequin’s efficiency utilizing the testing dataset. Widespread analysis metrics embrace accuracy, precision, recall, F1 rating, and ROC-AUC.

Layman’s Phrases: Mannequin analysis is like tasting your dish to see if it turned out effectively. You take a look at your mannequin on new, unseen knowledge to test how effectively it performs and if it meets your expectations.

- For Classification:

- Accuracy: The ratio of appropriately predicted cases to the whole cases.

- Precision: The ratio of true constructive predictions to the whole constructive predictions.

- Recall (Sensitivity): The ratio of true constructive predictions to the whole precise positives.

- F1-Rating: The harmonic imply of precision and recall, offering a steadiness between the 2.

- ROC-AUC (Receiver Working Attribute — Space Underneath Curve): Measures the mannequin’s skill to differentiate between lessons.

- Confusion Matrix: A desk that outlines true positives, true negatives, false positives, and false negatives.

2. For Regression:

- Imply Absolute Error (MAE): The common of absolutely the variations between the anticipated and precise values.

- Imply Squared Error (MSE): The common of the squared variations between the anticipated and precise values.

- Root Imply Squared Error (RMSE): The sq. root of the MSE, offering error in the identical items because the goal variable.

- R-Squared (Coefficient of Dedication): Measures the proportion of variance within the dependent variable that’s predictable from the impartial variables.

3. For Clustering:

- Silhouette Rating: Measures how related an object is to its personal cluster in comparison with different clusters.

- Davies-Bouldin Index: Evaluates the typical similarity ratio of every cluster with its most related cluster.

- Adjusted Rand Index (ARI): Measures the similarity between the anticipated and precise clustering.

Technical Rationalization: Mannequin deployment is the method of integrating the skilled ML mannequin right into a manufacturing atmosphere the place it could actually make predictions on new knowledge. This typically entails organising APIs, integrating with internet or cellular purposes, and guaranteeing scalability and reliability.

Layman’s Phrases: Mannequin deployment is like serving your completed dish to friends. After perfecting the recipe, you current it so others can get pleasure from it. Equally, you make your mannequin accessible so it could actually make predictions in real-time.

- Batch Deployment: The mannequin processes knowledge in batches at scheduled intervals. This strategy is appropriate for duties that don’t require real-time predictions.

- On-line (Actual-Time) Deployment: The mannequin processes knowledge and makes predictions in real-time or close to real-time. This strategy is good for purposes that require instant responses.

- Embedded Deployment: The mannequin is embedded into {hardware} units or software program purposes, enabling native predictions without having steady web connectivity.

- Cloud Deployment: The mannequin is deployed on cloud platforms, permitting for scalable and versatile entry to computing sources.

- Edge Deployment: The mannequin is deployed on edge units nearer to the place knowledge is generated, lowering latency and bandwidth utilization.

- Mannequin Packaging:

- Containerization: Use instruments like Docker to bundle the mannequin and its dependencies, guaranteeing consistency throughout completely different environments.

- Exporting Fashions: Save the skilled mannequin in a format appropriate for deployment, corresponding to ONNX, PMML, or TensorFlow SavedModel.

2. Infrastructure Setup:

- Server Configuration: Arrange servers or cloud cases to host the mannequin.

- API Growth: Develop RESTful or gRPC APIs to show the mannequin’s performance for integration with different purposes.

3. Monitoring and Logging:

- Efficiency Monitoring: Observe metrics like response time, throughput, and useful resource utilization to make sure the mannequin operates effectively.

- Error Logging: Log errors and exceptions to establish and resolve points shortly.

4. Scaling:

- Horizontal Scaling: Add extra cases of the mannequin to deal with elevated site visitors.

- Vertical Scaling: Enhance the computational energy of present cases.

5. Upkeep and Updates:

- Mannequin Retraining: Periodically retrain the mannequin with new knowledge to make sure it stays correct and related.

- Model Management: Handle completely different variations of the mannequin to trace modifications and roll again if wanted.

- Batch Deployment

- On-line (Actual-Time) Deployment

- Embedded Deployment

- Cloud Deployment

- Edge Deployment

Mastering the ML pipeline entails understanding every step from knowledge assortment to mannequin deployment. By following these steps meticulously, you’ll be able to construct strong and correct machine-learning fashions. Whether or not you’re predicting home costs or figuring out cats in photographs, a well-constructed ML pipeline is important for reworking uncooked knowledge into precious insights.