Chain-Of-Thought prompting is, at a fundamental level, so successful that it gave rise to what some refer to as the Chain-Of-X phenomenon. Google Research explored how to use LLMs to annotate existing datasets with CoT reasoning and then fine-tune smaller language models on those CoTs.

As most everyone knows, Chain-Of-Thought prompting improves the reasoning capabilities of large language models.

Google asserts that reasoning capabilities only emerge in models with at least tens of billions of parameters. This research from Google explores transferring these capabilities to smaller models through knowledge distillation.

They fine-tuned a student model on the Chain-Of-Thought outputs of a larger teacher model.

Researchers from Google found that this method improves task performance on arithmetic, commonsense, and symbolic reasoning datasets.

Chain-of-thought (CoT) prompting teaches language models (LMs) to decompose a reasoning task into a series of intermediate steps.

This prompting has been shown to significantly increase the task accuracy of large language models (LLMs) across commonsense, symbolic, and mathematical reasoning datasets.

However, the reasoning capabilities of smaller LMs do not improve with CoT prompting; they mostly produce illogical CoTs. Notably, CoT prompting even reduces the accuracy of models with fewer than 10 billion parameters.

Research attributes this to abilities, such as semantic understanding and symbolic mapping, only emerging in larger-scale models.

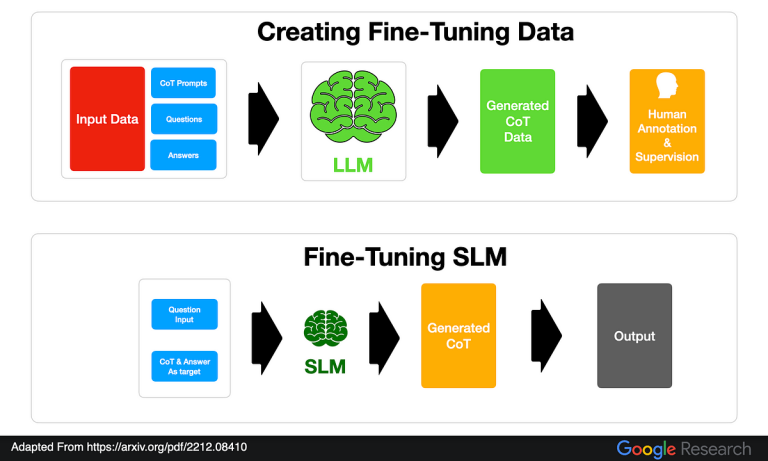

Google Research proposes a two-step pipeline for CoT (Chain-Of-Thought) knowledge distillation.

Annotation with CoT Reasoning

- Use a teacher model, such as PaLM 540B or GPT-3 175B, to annotate an existing supervised dataset with CoT reasoning.

- Perform few-shot prompting with 8 examples to generate CoTs, adapting the prompts to provide the target answer after the question and before the example CoT. This helps the teacher correct small mistakes.

- Remove incorrect CoTs, judged against the target answer, to ensure quality.
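The annotation step above can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not Google's implementation: `format_exemplar`, `build_annotation_prompt`, `extract_final_answer` and `annotate_dataset` are hypothetical names, the teacher model is abstracted as any callable that maps a prompt string to generated text, the `Answer hint:` label is an invented prompt format, and each CoT is assumed to end with "The answer is X." so its final answer can be parsed and compared with the target.

```python
import re
from typing import Callable, Dict, List, Optional


def format_exemplar(question: str, answer: str, cot: Optional[str] = None) -> str:
    """Hypothetical format: question, then the target answer, then the CoT (if known)."""
    text = f"Q: {question}\nAnswer hint: {answer}\nA:"
    if cot is not None:
        text += f" {cot}\n\n"
    return text


def build_annotation_prompt(exemplars: List[Dict[str, str]], question: str, answer: str) -> str:
    """Concatenate the few-shot exemplars with the new example.

    The new example carries its target answer but no CoT; the teacher writes the CoT.
    """
    shots = "".join(format_exemplar(e["question"], e["answer"], e["cot"]) for e in exemplars)
    return shots + format_exemplar(question, answer)


# Assumed convention: the last line of a CoT reads "The answer is X."
ANSWER_RE = re.compile(r"The answer is\s+(.+?)\.?\s*$")


def extract_final_answer(cot: str) -> Optional[str]:
    match = ANSWER_RE.search(cot.strip())
    return match.group(1).strip() if match else None


def annotate_dataset(
    dataset: List[Dict[str, str]],
    exemplars: List[Dict[str, str]],
    teacher: Callable[[str], str],
) -> List[Dict[str, str]]:
    """Generate a CoT per example; keep it only if its answer matches the label."""
    annotated = []
    for example in dataset:
        prompt = build_annotation_prompt(exemplars, example["question"], example["answer"])
        cot = teacher(prompt).strip()
        # Quality filter: drop CoTs whose final answer disagrees with the target.
        if extract_final_answer(cot) == example["answer"]:
            annotated.append({**example, "cot": cot})
    return annotated


# Usage with a stand-in teacher (a real setup would call a large model instead).
exemplars = [{"question": "What is 2 + 3?", "answer": "5",
              "cot": "2 plus 3 equals 5. The answer is 5."}]
dataset = [{"question": "What is 1 + 3?", "answer": "4"},
           {"question": "What is 6 - 2?", "answer": "4"}]


def fake_teacher(prompt: str) -> str:
    # Answers the first question correctly and the second one wrongly.
    if "1 + 3" in prompt:
        return "1 plus 3 equals 4. The answer is 4."
    return "6 minus 2 equals 3. The answer is 3."


annotated = annotate_dataset(dataset, exemplars, fake_teacher)
print(annotated)
```

Only one exemplar is used here for brevity, where the article describes 8. The second example is discarded because the teacher's final answer (3) disagrees with the label (4), which is the filtering step in miniature.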

Fine-Tuning the Student Model

- Fine-tune a student model using teacher forcing.

- Provide the question as input and the CoT and answer as the target.

- This training setup eliminates the need for prompting during fine-tuning.
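The fine-tuning step above can be illustrated with a small sketch of how one training example might be laid out for teacher forcing. It assumes an autoregressive decoder that is fed the gold target shifted right by one position; the whitespace tokenizer, the `<s>`/`</s>` tokens, and the function names `make_training_pair` and `teacher_forcing_sequences` are hypothetical stand-ins for a real tokenizer and training loop.

```python
from typing import Dict, List

BOS, EOS = "<s>", "</s>"  # hypothetical special tokens


def make_training_pair(example: Dict[str, str]) -> Dict[str, str]:
    """Question alone as input (no few-shot exemplars); CoT followed by the answer as target."""
    target = f"{example['cot']} The answer is {example['answer']}."
    return {"input": example["question"], "target": target}


def teacher_forcing_sequences(target: str) -> Dict[str, List[str]]:
    """Build decoder inputs and labels for teacher forcing.

    At every step t the decoder is fed gold token t-1 rather than its own
    previous prediction, and is trained to predict gold token t.
    """
    tokens = target.split() + [EOS]      # toy whitespace tokenizer (assumption)
    decoder_input = [BOS] + tokens[:-1]  # gold target shifted right by one position
    return {"decoder_input": decoder_input, "labels": tokens}


example = {"question": "What is 1 + 3?", "cot": "1 plus 3 equals 4.", "answer": "4"}
pair = make_training_pair(example)
seqs = teacher_forcing_sequences(pair["target"])
for fed, predicted in zip(seqs["decoder_input"], seqs["labels"]):
    print(f"decoder sees {fed!r:>10} -> trained to predict {predicted!r}")
```

Because the student only ever sees the bare question as input, no few-shot prompt is needed at training or inference time; the loss would then be computed between the student's predictions and `labels` at each position.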

An overview of the proposed method is shown in the figure below: