Imagine you’re trying to assemble a winning team for a trivia night, but each of your friends is an expert in only one topic. One knows sports, another knows movies, and a third is a history buff. Individually, they might struggle, but together they form a powerful trivia machine. That’s roughly what Gradient Boosting Machines (GBMs) do in the world of machine learning.

GBMs are like a squad of mini-experts, each focused on correcting the mistakes of the last. They take a group of weak models (think: not-so-great trivia players) and combine them into a strong model (think: powerful trivia team). This method of “boosting” weak models into a strong one is what makes GBMs a powerful tool in the machine learning arsenal. They’ve become a go-to choice for tackling complex problems, from predicting stock prices to diagnosing diseases, proving that sometimes two (or 100) heads are better than one.

What are GBMs?

Gradient Boosting Machines (GBMs) are a powerful machine learning technique for making accurate predictions. Think of GBMs as a team of models working together to solve a problem.

Iterative Process

The process is iterative, meaning it happens in steps. Here’s how it works:

- First Model: The first model makes its predictions.

- Error Correction: The second model looks at the errors from the first model and tries to fix them.

- Combination: The predictions from both models are combined.

- Repeat: This process repeats, with each new model focusing on the errors of the combined models so far.

By the end of this process, you have a strong model made up of many smaller models, each improving on the last. This team effort makes GBMs highly effective at making accurate predictions.
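The steps above can be sketched in a few lines of Python. This is only an illustrative toy for regression with squared error, not how XGBoost or LightGBM are implemented internally. The function names (`fit_boosted_trees`, `predict_boosted_trees`), the synthetic sine-wave data, and all settings are assumptions made up for this example.

```python
import numpy as np
from sklearn.tree import DecisionTreeRegressor


def fit_boosted_trees(X, y, n_rounds=50, learning_rate=0.1, max_depth=2):
    """Toy boosting loop: each small tree is fit to the current errors."""
    baseline = float(np.mean(y))            # "first model": a constant guess
    prediction = np.full(len(y), baseline)
    trees, history = [], []
    for _ in range(n_rounds):
        residuals = y - prediction          # errors of the combined model so far
        tree = DecisionTreeRegressor(max_depth=max_depth, random_state=0)
        tree.fit(X, residuals)              # the next model learns those errors
        prediction = prediction + learning_rate * tree.predict(X)  # combine
        trees.append(tree)
        history.append(float(np.mean((y - prediction) ** 2)))
    return baseline, trees, history


def predict_boosted_trees(X, baseline, trees, learning_rate=0.1):
    """Sum the constant guess and every tree's scaled correction."""
    prediction = np.full(X.shape[0], baseline)
    for tree in trees:
        prediction = prediction + learning_rate * tree.predict(X)
    return prediction


# Hypothetical demo data: a noisy sine wave
rng = np.random.default_rng(0)
X_demo = rng.uniform(-3, 3, size=(200, 1))
y_demo = np.sin(X_demo[:, 0]) + rng.normal(0, 0.1, size=200)

baseline, trees, history = fit_boosted_trees(X_demo, y_demo)
print(f"training MSE after 1 round: {history[0]:.4f}")
print(f"training MSE after {len(history)} rounds: {history[-1]:.4f}")
```

The training error shrinks as rounds are added because every tree only has to explain what the team so far still gets wrong.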

Boosting

Boosting is like training a group of rookie detectives to solve a mystery. Each detective (or model) isn’t very good on their own. However, by learning from earlier mistakes and working together, they become an excellent team. The first detective makes some guesses and inevitably gets some wrong. The next detective then steps in to fix those errors, and this process continues until they crack the case. In machine learning terms, the first model makes predictions and inevitably makes some errors. The next model then tries to correct those errors, and the process continues. This way, each model focuses on what the previous models got wrong.

Learning Rate

The learning rate is like the spice level in cooking. If you add too much spice, the dish becomes too hot to handle; if you add too little, it lacks flavor. Similarly, in GBMs, the learning rate controls how much each new model can correct the previous ones. A high learning rate makes fast changes and risks overcorrecting (too spicy), while a low learning rate makes slow, steady improvements (just right), though it typically needs more models to get there.
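In symbols, one common way to write this is the update rule below. The notation is an assumption introduced here for illustration: $F_{m-1}$ is the combined model so far, $h_m$ is the new model fitted to its errors, and $\nu$ is the learning rate.

```latex
F_m(x) = F_{m-1}(x) + \nu \, h_m(x), \qquad 0 < \nu \le 1
```

With $\nu = 0.1$, for example, only a tenth of each new model’s suggested correction is applied, so many small corrections accumulate instead of a few large ones.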

Loss Functions

Loss functions are like report cards for models. They tell you how well your model is doing by measuring its errors. In GBMs, common loss functions include:

- Mean Squared Error (MSE): Used for regression problems, it measures how far off the predictions are from the actual values. Think of it as the “Oops, I was way off” score.

- Log-Loss: Used for classification problems, it measures the quality of probabilistic predictions, penalizing confident wrong answers most heavily. It’s like a “How confident was I?” score for each guess.
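To make the two report cards concrete, here is a small NumPy sketch. The helper names (`mse`, `binary_log_loss`) and the toy numbers are made up for illustration, and the log-loss shown is the two-class version only.

```python
import numpy as np


def mse(y_true, y_pred):
    """Mean squared error: average of the squared gaps."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    return float(np.mean((y_true - y_pred) ** 2))


def binary_log_loss(y_true, p_pred, eps=1e-15):
    """Log-loss for two classes; p_pred is the predicted probability of class 1."""
    y_true = np.asarray(y_true, dtype=float)
    p = np.clip(np.asarray(p_pred, dtype=float), eps, 1 - eps)  # avoid log(0)
    return float(-np.mean(y_true * np.log(p) + (1 - y_true) * np.log(1 - p)))


# Regression: the gaps are 1 and 2, so the MSE is (1 + 4) / 2 = 2.5
print(mse([3.0, 5.0], [2.0, 7.0]))

# Classification: same two labels, confident-and-right vs. confident-and-wrong
confident_right = binary_log_loss([1, 0], [0.9, 0.1])
confident_wrong = binary_log_loss([1, 0], [0.1, 0.9])
print(f"confident and right: {confident_right:.3f}")
print(f"confident and wrong: {confident_wrong:.3f}")
```

The second pair of numbers shows why log-loss works as a confidence score: being confidently wrong costs far more than being confidently right.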

Random Forests (Bagging)

- Approach: Random forests build many decision trees independently and then average their predictions. Each tree is built on a random subset of the data.

- Pros: They’re easy to train, less prone to overfitting, and handle large datasets well.

- Cons: They might not capture complex patterns as effectively as GBMs.

GBMs (Boosting)

- Approach: GBMs build trees sequentially, with each new tree correcting the errors of the previous ones.

- Pros: They often provide higher accuracy and can capture complex patterns.

- Cons: They can be more prone to overfitting and take longer to train.

When to Use Each

- Random Forests: Great for quick, robust models that are easy to tune and interpret. Use them when you need a fast solution that handles large datasets well.

- GBMs: Ideal for achieving top-notch accuracy and dealing with complex patterns. Choose them when you have time to fine-tune and want the best performance.

In short, use random forests for simplicity and speed, and GBMs for maximum accuracy on complex problems.
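If you want to see the two approaches side by side, a rough comparison might look like the sketch below. It uses scikit-learn’s own `RandomForestClassifier` and `GradientBoostingClassifier` (a stand-in here, not XGBoost or LightGBM), and the dataset and settings are arbitrary choices for illustration. Which one comes out ahead depends on the data and the tuning, so treat the numbers as a starting point rather than a verdict.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.ensemble import GradientBoostingClassifier, RandomForestClassifier
from sklearn.model_selection import cross_val_score

# Small built-in binary classification dataset (an arbitrary choice for the demo)
X_demo, y_demo = load_breast_cancer(return_X_y=True)

candidates = {
    "random forest (bagging)": RandomForestClassifier(
        n_estimators=100, random_state=0
    ),
    "gradient boosting": GradientBoostingClassifier(
        n_estimators=100, learning_rate=0.1, random_state=0
    ),
}

scores = {}
for name, estimator in candidates.items():
    # 5-fold cross-validated accuracy for each ensemble
    fold_scores = cross_val_score(estimator, X_demo, y_demo, cv=5, scoring="accuracy")
    scores[name] = float(fold_scores.mean())
    print(f"{name}: mean accuracy {scores[name]:.3f}")
```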

In this section, we will explore how to train and evaluate models using XGBoost and LightGBM, reporting their accuracy on the test set. This example walks you through using these powerful tools for a classification task.

Setting Up

First, install the required libraries (matplotlib and seaborn are needed for the plots later on):

pip install xgboost lightgbm scikit-learn matplotlib seaborn

Data Preparation

We will use the popular Iris dataset for this example.

from sklearn.datasets import load_iris

from sklearn.model_selection import train_test_split

from sklearn.metrics import accuracy_score

# Load dataset

data = load_iris()

X = data.data

y = data.target

# Split data into training (80%) and test (20%) sets

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

Model Training with XGBoost

import xgboost as xgb

# Train model

model = xgb.XGBClassifier()

model.fit(X_train, y_train)

# Predict and evaluate

y_pred = model.predict(X_test)

print(f'Accuracy: {accuracy_score(y_test, y_pred)}')

Model Training with LightGBM

import lightgbm as lgb

# Train model

model = lgb.LGBMClassifier()

model.fit(X_train, y_train)

# Predict and evaluate

y_pred = model.predict(X_test)

print(f'Accuracy: {accuracy_score(y_test, y_pred)}')

Drawing ROC Curve for Multi-Class Classification

For multi-class classification, we compute ROC curves and AUC values for each class separately, treating that class as positive and all others as negative. Each curve shows the trade-off between the true positive rate and the false positive rate for that class.

import numpy as np

import matplotlib.pyplot as plt

from sklearn.metrics import roc_curve, auc

from sklearn.preprocessing import label_binarize

from itertools import cycle

# Predicted class probabilities from the most recently trained model

y_prob = model.predict_proba(X_test)

# Binarize the output

y_test_bin = label_binarize(y_test, classes=np.unique(y_test))

# Compute ROC curve and ROC area for each class

fpr = dict()

tpr = dict()

roc_auc = dict()

for i in range(len(np.unique(y_test))):

    fpr[i], tpr[i], _ = roc_curve(y_test_bin[:, i], y_prob[:, i])

    roc_auc[i] = auc(fpr[i], tpr[i])

# Compute micro-average ROC curve and ROC area

fpr["micro"], tpr["micro"], _ = roc_curve(y_test_bin.ravel(), y_prob.ravel())

roc_auc["micro"] = auc(fpr["micro"], tpr["micro"])

# Plot ROC curves for each class

plt.figure()

colors = cycle(['aqua', 'darkorange', 'cornflowerblue'])

for i, color in zip(range(len(np.unique(y_test))), colors):

    plt.plot(fpr[i], tpr[i], color=color, lw=2,

             label='ROC curve of class {0} (area = {1:0.2f})'.format(i, roc_auc[i]))

# Diagonal reference line: the performance of random guessing

plt.plot([0, 1], [0, 1], 'k--', lw=2)

plt.xlim([0.0, 1.0])

plt.ylim([0.0, 1.05])

plt.xlabel('False Positive Rate')

plt.ylabel('True Positive Rate')

plt.title('ROC Curve for Multi-Class Classification')

plt.legend(loc="lower right")

plt.show()

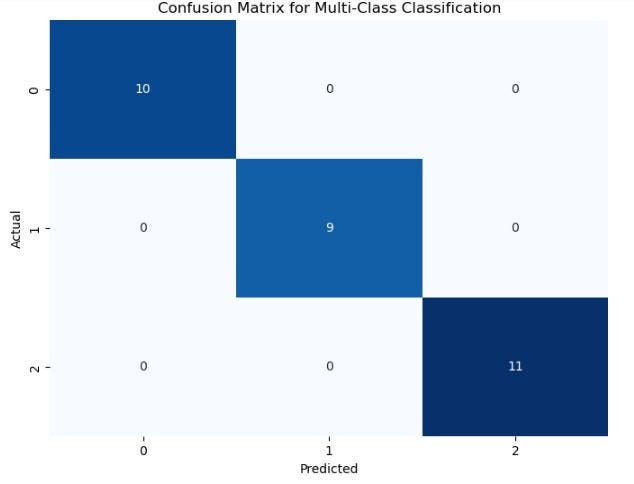

Drawing Confusion Matrix for Multi-Class Classification

This matrix visualizes the model’s predictions across all classes. Each row represents the instances of an actual class, while each column represents the instances of a predicted class. The diagonal elements represent the number of correctly classified instances for each class.

from sklearn.metrics import confusion_matrix

import seaborn as sns

# Predict classes

y_pred = model.predict(X_test)

# Generate confusion matrix

cm = confusion_matrix(y_test, y_pred)

# Plot confusion matrix

plt.figure(figsize=(8, 6))

sns.heatmap(cm, annot=True, cmap='Blues', fmt='g', cbar=False,

            xticklabels=np.unique(y_test), yticklabels=np.unique(y_test))

plt.title('Confusion Matrix for Multi-Class Classification')

plt.xlabel('Predicted')

plt.ylabel('Actual')

plt.show()
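Once you have a confusion matrix, the rows, columns, and diagonal described above give you per-class scores with a little arithmetic. The matrix below is made-up data for illustration only (it is not the output of the models in this article), and the variable names are hypothetical.

```python
import numpy as np

# Hypothetical 3-class confusion matrix: rows = actual class, columns = predicted class
cm_demo = np.array([
    [10, 0, 0],
    [0, 8, 2],
    [0, 1, 9],
])

correct = np.diag(cm_demo)                  # diagonal: correctly classified counts
recall = correct / cm_demo.sum(axis=1)      # divide by row totals (actual counts)
precision = correct / cm_demo.sum(axis=0)   # divide by column totals (predicted counts)
accuracy = correct.sum() / cm_demo.sum()    # all correct predictions over all instances

print("recall per class:   ", np.round(recall, 3))
print("precision per class:", np.round(precision, 3))
print("overall accuracy:   ", round(float(accuracy), 3))
```

Reading across a row tells you where instances of an actual class ended up; reading down a column tells you what a given prediction was really made of.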

In this article, we’ve delved into Gradient Boosting Machines (GBMs), exploring how they transform weak learners into strong models through iterative error correction. We’ve demystified key concepts such as boosting, the learning rate, and loss functions, all essential for maximizing model accuracy. GBMs are widely applicable, excelling in fields that demand precise predictions, such as finance, healthcare diagnostics, and personalized recommendations. Looking ahead, further advances in computational efficiency and in tailoring loss functions to specific domains should extend GBMs’ capabilities and open the door to even more sophisticated applications.