Introduction to Deep Learning (DL):

Deep Learning is a subset of Machine Learning that uses artificial neural networks to analyze and interpret data. Inspired by the structure and function of the human brain, DL models are composed of multiple layers of interconnected nodes (neurons) that process and transform inputs into meaningful representations.

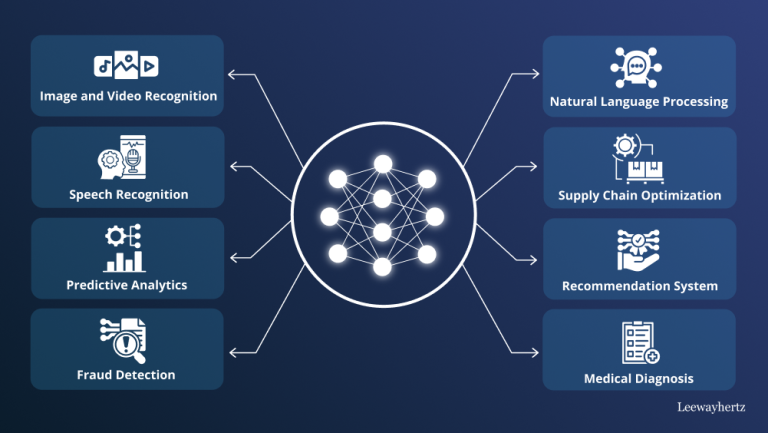

Deep Learning has achieved state-of-the-art performance in various applications, including:

- Image and speech recognition

- Natural Language Processing (NLP)

- Game playing and decision making

A Brief History of Deep Learning:

Deep Learning, a subset of Machine Learning, has a rich and fascinating history that spans several decades. From its humble beginnings to its current status as a driving force behind AI, Deep Learning has undergone significant transformations, shaped by the contributions of numerous researchers, scientists, and engineers.

The Early Years (1940s-1960s)

The concept of artificial neural networks, the foundation of Deep Learning, dates back to the 1940s. Warren McCulloch and Walter Pitts introduced the first artificial neural network model, the Threshold Logic Unit (TLU), in 1943. This pioneering work laid the groundwork for the development of neural networks.

In the 1950s and 1960s, researchers such as Alan Turing, Marvin Minsky, and Seymour Papert explored the possibilities of artificial intelligence, including neural networks. The perceptron, a type of feedforward neural network, was introduced by Frank Rosenblatt in 1957.

The Dark Ages (1970s-1980s)

Despite the initial excitement, the development of neural networks slowed down in the 1970s and 1980s. This period is often referred to as the “Dark Ages” of neural networks. Limitations in computing power, data, and algorithms hindered progress, and many researchers shifted their focus to other areas of AI.

The Resurgence (1980s-1990s)

The 1980s saw a resurgence of interest in neural networks. John Hopfield introduced Hopfield networks in 1982, and in 1986 David Rumelhart, Geoffrey Hinton, and Ronald Williams popularized the backpropagation algorithm, which enabled efficient training of multi-layer neural networks.

In the 1990s, researchers such as Yann LeCun and Yoshua Bengio made significant contributions to the development of convolutional neural networks (CNNs) and recurrent neural networks (RNNs).

The Deep Learning Revolution (2000s-2010s)

The 21st century marked the beginning of the Deep Learning revolution. The availability of large datasets, advances in computing power, and the development of new algorithms and techniques enabled the creation of deeper and more complex neural networks.

- 2006: Geoffrey Hinton, Simon Osindero, and Yee-Whye Teh introduced deep belief networks, which laid the foundation for modern Deep Learning.

- 2011: Andrew Ng, Jeff Dean, and Greg Corrado started the Google Brain project to train large-scale neural networks on distributed computing clusters. Around the same time, the ImageNet dataset, created by Fei-Fei Li's team and released in 2009, provided the large labeled benchmark on which deep image-recognition models would soon be evaluated.

- 2012: Alex Krizhevsky, Ilya Sutskever, and Geoffrey Hinton developed the AlexNet model, which won the ImageNet Large Scale Visual Recognition Challenge (ILSVRC) and marked a turning point in the history of Deep Learning.

The AI Renaissance (2010s-present)

The 2010s saw an explosion of interest in Deep Learning, driven by its applications in computer vision, natural language processing, and speech recognition. The development of new architectures, such as ResNet, Inception, and Transformers, further accelerated progress.

- 2014: Google acquired DeepMind, a leading Deep Learning research lab.

- 2016: DeepMind's AlphaGo, a Deep Learning-based AI system, defeated Go world champion Lee Sedol, marking a significant milestone in AI research.

- 2017: The Transformer architecture, introduced by Vaswani et al. in “Attention Is All You Need”, revolutionized the field of natural language processing.

1. Neuron (Perceptron)

A single neuron, or perceptron, is the basic unit of a neural network. It receives inputs, multiplies them by weights, adds a bias, and passes the result through an activation function.

Mathematical formula:

$$y = f\left(\sum_{i} w_i x_i + b\right)$$

Where:

- $x_i$ are the input features.

- $w_i$ are the weights.

- $b$ is the bias term.

- $f$ is the activation function.

- $y$ is the output.
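The formula translates almost line for line into code. The sketch below is plain Python with no libraries; the `step` activation and the AND-gate weights are hypothetical choices for illustration, not values from the text.

```python
def perceptron(x, w, b, f):
    """Compute y = f(sum_i w_i * x_i + b) for a single neuron."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b  # weighted sum plus bias
    return f(z)

def step(z):
    # Heaviside step activation, as in the classic perceptron
    return 1.0 if z >= 0 else 0.0

# Hypothetical weights and bias that make the neuron behave like a logical AND
w = [1.0, 1.0]
b = -1.5
for x in ([0, 0], [0, 1], [1, 0], [1, 1]):
    print(x, perceptron(x, w, b, step))
```

Only the input `[1, 1]` pushes the weighted sum above zero, so it is the only one that outputs `1.0`.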

2. Activation Functions

Activation functions introduce non-linearity into the model, allowing the network to learn more complex patterns. Common activation functions include:

- ReLU (Rectified Linear Unit): $\mathrm{ReLU}(x) = \max(0, x)$

- Tanh: $\tanh(x) = \dfrac{e^{x} - e^{-x}}{e^{x} + e^{-x}}$
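Both functions can be written directly from their definitions. This is a minimal sketch using only the standard `math` module; the hand-written `tanh` is for illustration and is compared against the built-in `math.tanh`.

```python
import math

def relu(x):
    # ReLU(x) = max(0, x): positive inputs pass through, negatives become 0
    return max(0.0, x)

def tanh(x):
    # tanh(x) = (e^x - e^-x) / (e^x + e^-x), output in (-1, 1).
    # Written out from the formula; math.exp overflows for large |x|,
    # so math.tanh is the safer choice in real code.
    return (math.exp(x) - math.exp(-x)) / (math.exp(x) + math.exp(-x))

for x in (-2.0, 0.0, 2.0):
    print(x, relu(x), round(tanh(x), 4), round(math.tanh(x), 4))
```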

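3. Loss Function

The loss function measures the difference between the predicted output and the actual output. Common loss functions include:

- Mean Squared Error (MSE): $\mathrm{MSE} = \dfrac{1}{n} \sum_{i=1}^{n} \left(y_i - \hat{y}_i\right)^2$, where $y_i$ is the actual value, $\hat{y}_i$ is the predicted value, and $n$ is the number of samples.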
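MSE is just the average of squared differences. The sketch below assumes the targets and predictions are equal-length lists of floats; the sample values are made up for illustration.

```python
def mse(y_true, y_pred):
    """Mean squared error: (1/n) * sum_i (y_i - y_hat_i)^2."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    n = len(y_true)
    return sum((y - y_hat) ** 2 for y, y_hat in zip(y_true, y_pred)) / n

print(mse([3.0, -0.5, 2.0, 7.0], [2.5, 0.0, 2.0, 8.0]))  # → 0.375
```

Squaring makes every error positive and penalizes large errors more heavily than small ones: the single error of 1.0 above contributes twice as much as the two errors of 0.5 combined.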

4. Gradient Descent

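Gradient descent is an optimization algorithm used to minimize the loss function by repeatedly updating the model's parameters (weights and biases) in the direction opposite to the gradient of the loss.

Weight update rule (the bias $b$ is updated the same way):

$$w_i \leftarrow w_i - \eta \, \frac{\partial L}{\partial w_i}$$

Where:

- $\eta$ is the learning rate.

- $L$ is the loss function.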
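To see the update rule in action, the sketch below fits a one-feature linear model $\hat{y} = w x + b$ to toy data with the MSE loss, computing the gradients by hand. The data, learning rate, and step count are hypothetical choices that happen to converge for this small example.

```python
def gradient_descent(xs, ys, lr=0.05, steps=2000):
    """Fit y ≈ w*x + b by minimizing MSE with full-batch gradient descent."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        # Gradients of L = (1/n) * sum (w*x + b - y)^2 with respect to w and b
        errors = [w * x + b - y for x, y in zip(xs, ys)]
        dL_dw = (2.0 / n) * sum(e * x for e, x in zip(errors, xs))
        dL_db = (2.0 / n) * sum(errors)
        # Update rule: parameter <- parameter - eta * dL/dparameter
        w -= lr * dL_dw
        b -= lr * dL_db
    return w, b

# Toy data generated from y = 2x + 1 with no noise
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [2.0 * x + 1.0 for x in xs]
w, b = gradient_descent(xs, ys)
print(round(w, 3), round(b, 3))
```

The learning rate matters: if $\eta$ is too large the updates overshoot and the parameters diverge, while a very small $\eta$ needs many more steps to converge.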

5. Backpropagation

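Backpropagation is the method for calculating the gradients of the loss function with respect to the model's parameters; gradient descent then uses these gradients to update the parameters. It applies the chain rule of calculus, propagating gradients backward from the output layer toward the input layer.

Chain rule (for a single neuron with $z = \sum_i w_i x_i + b$ and $y = f(z)$):

$$\frac{\partial L}{\partial w_i} = \frac{\partial L}{\partial y} \cdot \frac{\partial y}{\partial z} \cdot \frac{\partial z}{\partial w_i} = \frac{\partial L}{\partial y} \, f'(z) \, x_i$$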
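Here is the chain rule applied by hand to one neuron. This sketch assumes a tanh activation and a squared-error loss $L = (y - t)^2$ on a single example with target $t$. The helper names are hypothetical, and the finite-difference check exists only to confirm that the analytic gradients are correct.

```python
import math

def forward(x, w, b):
    z = sum(wi * xi for wi, xi in zip(w, x)) + b  # pre-activation z
    return z, math.tanh(z)                        # output y = tanh(z)

def loss(y, target):
    return (y - target) ** 2

def backward(x, w, b, target):
    """Gradients of L = (tanh(w·x + b) - target)^2 via the chain rule."""
    z, y = forward(x, w, b)
    dL_dy = 2.0 * (y - target)         # dL/dy
    dy_dz = 1.0 - y ** 2               # tanh'(z) = 1 - tanh(z)^2
    dL_dz = dL_dy * dy_dz              # chain rule: dL/dz = dL/dy * dy/dz
    grad_w = [dL_dz * xi for xi in x]  # dz/dw_i = x_i
    grad_b = dL_dz                     # dz/db = 1
    return grad_w, grad_b

def numerical_grad_w(x, w, b, target, i, eps=1e-6):
    # Central finite difference on weight i, used only as a sanity check
    w_plus, w_minus = list(w), list(w)
    w_plus[i] += eps
    w_minus[i] -= eps
    l_plus = loss(forward(x, w_plus, b)[1], target)
    l_minus = loss(forward(x, w_minus, b)[1], target)
    return (l_plus - l_minus) / (2 * eps)

x, w, b, target = [0.5, -1.0], [0.3, 0.8], 0.1, 1.0
grad_w, grad_b = backward(x, w, b, target)
for i in range(len(w)):
    print(grad_w[i], numerical_grad_w(x, w, b, target, i))
```

In a multi-layer network, the same idea repeats layer by layer: each layer receives $\partial L / \partial(\text{its output})$ from the layer above and passes $\partial L / \partial(\text{its input})$ to the layer below.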

6. Neural Network Layers

Neural networks are composed of multiple layers:

- Input Layer: Receives the input features.

- Hidden Layers: Intermediate layers where computations are performed. These can be fully connected layers, convolutional layers, and so on.

- Output Layer: Produces the final output.
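Stacking fully connected layers gives a small multi-layer network. The sketch below is plain Python; the layer sizes (3 inputs, 4 hidden units, 1 output), the seeded uniform initialization, and the helper names `dense` and `init_layer` are all hypothetical choices for illustration.

```python
import random

def dense(inputs, weights, biases, activation=None):
    """Fully connected layer: output j = activation(sum_i W[j][i] * x_i + b_j)."""
    outputs = []
    for w_row, b in zip(weights, biases):
        z = sum(w * x for w, x in zip(w_row, inputs)) + b
        outputs.append(activation(z) if activation else z)
    return outputs

def relu(z):
    return max(0.0, z)

def init_layer(n_in, n_out, rng):
    # Small random weights and zero biases; this scheme is illustrative only
    weights = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_out)]
    biases = [0.0] * n_out
    return weights, biases

rng = random.Random(0)
W1, b1 = init_layer(3, 4, rng)  # input layer (3 features) -> hidden layer (4 neurons)
W2, b2 = init_layer(4, 1, rng)  # hidden layer -> output layer (1 neuron)

x = [1.0, 2.0, -1.0]            # input layer: the raw features
h = dense(x, W1, b1, relu)      # hidden layer with ReLU activation
y = dense(h, W2, b2)            # output layer, left linear (e.g. for regression)
print(len(h), len(y))
```

The input layer performs no computation; it only supplies the features. Each later layer is the single-neuron formula applied once per neuron.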

7. Convolutional Neural Networks (CNNs)

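CNNs are a type of neural network that is particularly effective for image data. They use convolutional layers, which slide small learned filters (kernels) across the input, to detect spatial hierarchies of features in the data.

Convolution operation, where $x$ is the input and $w$ is the kernel:

$$s(t) = (x * w)(t) = \int_{-\infty}^{\infty} x(a)\, w(t - a)\, da$$

In discrete terms for images, with image $I$ and kernel $K$:

$$S(i, j) = (I * K)(i, j) = \sum_{m} \sum_{n} I(m, n)\, K(i - m, j - n)$$

In practice, most deep learning libraries implement the closely related cross-correlation, $S(i, j) = \sum_{m} \sum_{n} I(i + m, j + n)\, K(m, n)$, which is the same operation with the kernel flipped.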
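The sketch below computes a "valid" 2D cross-correlation (no padding, stride 1) on nested lists, and obtains true convolution by flipping the kernel in both axes. The image, the kernel, and the function names are hypothetical; the valid convolution output equals the discrete formula up to an index offset.

```python
def cross_correlate2d(image, kernel):
    """'Valid' 2D cross-correlation: S(i, j) = sum_m sum_n I(i+m, j+n) * K(m, n)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    out = [[0.0] * out_w for _ in range(out_h)]
    for i in range(out_h):
        for j in range(out_w):
            # Slide the kernel over the image and sum the element-wise products
            out[i][j] = sum(
                image[i + m][j + n] * kernel[m][n]
                for m in range(kh)
                for n in range(kw)
            )
    return out

def convolve2d(image, kernel):
    """True 2D convolution: cross-correlation with the kernel flipped in both axes."""
    flipped = [row[::-1] for row in kernel[::-1]]
    return cross_correlate2d(image, flipped)

image = [
    [1, 2, 3, 0],
    [4, 5, 6, 1],
    [7, 8, 9, 2],
]
kernel = [
    [1, 0],
    [0, -1],
]
print(cross_correlate2d(image, kernel))  # → [[-4, -4, 2], [-4, -4, 4]]
print(convolve2d(image, kernel))         # → [[4, 4, -2], [4, 4, -4]]
```

In a CNN, the kernel values are not fixed by hand as in this example; they are learned weights, updated by backpropagation like any other parameter.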

8. Recurrent Neural Networks (RNNs)

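RNNs are designed for sequential data. They maintain a hidden state that is updated at each time step, so information from earlier inputs can influence later outputs.

Hidden state update:

$$h_t = \tanh\left(W_{xh}\, x_t + W_{hh}\, h_{t-1} + b_h\right)$$

Where $x_t$ is the input at time step $t$, $h_{t-1}$ is the previous hidden state, $W_{xh}$ and $W_{hh}$ are weight matrices shared across all time steps, and $b_h$ is a bias vector.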
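The update can be unrolled over a short sequence in plain Python. This is a sketch of a basic (Elman-style) RNN with a tanh activation; the sizes (1 input feature, 2 hidden units), the weight values, and the helper names are hypothetical.

```python
import math

def matvec(M, v):
    # Matrix-vector product for nested lists
    return [sum(m * x for m, x in zip(row, v)) for row in M]

def rnn_step(x_t, h_prev, W_xh, W_hh, b_h):
    """One step: h_t = tanh(W_xh x_t + W_hh h_{t-1} + b_h)."""
    a = matvec(W_xh, x_t)
    r = matvec(W_hh, h_prev)
    return [math.tanh(ai + ri + bi) for ai, ri, bi in zip(a, r, b_h)]

def rnn_forward(xs, h0, W_xh, W_hh, b_h):
    # The same weights are reused at every time step; only the hidden state changes
    h = h0
    states = []
    for x_t in xs:
        h = rnn_step(x_t, h, W_xh, W_hh, b_h)
        states.append(h)
    return states

# Hypothetical weights: 1 input feature, 2 hidden units
W_xh = [[0.5], [-0.3]]
W_hh = [[0.1, 0.2], [0.0, 0.4]]
b_h = [0.0, 0.1]
sequence = [[1.0], [0.5], [-1.0]]
states = rnn_forward(sequence, [0.0, 0.0], W_xh, W_hh, b_h)
for t, h in enumerate(states, start=1):
    print(t, [round(v, 4) for v in h])
```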

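9. Long Short-Term Memory (LSTM)

LSTMs are a type of RNN designed to better capture long-term dependencies. They use gates to control the flow of information into, out of, and within a cell state.

LSTM cell equations:

$$
\begin{aligned}
f_t &= \sigma\left(W_f [h_{t-1}, x_t] + b_f\right) \\
i_t &= \sigma\left(W_i [h_{t-1}, x_t] + b_i\right) \\
\tilde{C}_t &= \tanh\left(W_C [h_{t-1}, x_t] + b_C\right) \\
C_t &= f_t \odot C_{t-1} + i_t \odot \tilde{C}_t \\
o_t &= \sigma\left(W_o [h_{t-1}, x_t] + b_o\right) \\
h_t &= o_t \odot \tanh(C_t)
\end{aligned}
$$

Where $f_t$, $i_t$, and $o_t$ are the forget, input, and output gates, $\tilde{C}_t$ is the candidate cell state, $C_t$ is the cell state, $\sigma$ is the sigmoid function, $[h_{t-1}, x_t]$ denotes concatenation, and $\odot$ is element-wise multiplication.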
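A single LSTM step can be sketched in plain Python by computing each gate from the concatenated vector $[h_{t-1}, x_t]$. The `params` dictionary layout (keys such as `"W_f"` and `"b_f"`, with `"W_c"`/`"b_c"` for the candidate state), the weight values, and the hidden and input sizes of 1 are hypothetical choices made to keep the example short.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def matvec(M, v):
    # Matrix-vector product for nested lists
    return [sum(m * x for m, x in zip(row, v)) for row in M]

def lstm_step(x_t, h_prev, c_prev, params):
    """One LSTM step; params is a hypothetical dict of per-gate weights and biases."""
    concat = h_prev + x_t  # [h_{t-1}, x_t] as a single list

    def gate(name, act):
        # act(W_name [h_{t-1}, x_t] + b_name), applied element-wise
        W, b = params["W_" + name], params["b_" + name]
        return [act(z + bi) for z, bi in zip(matvec(W, concat), b)]

    f = gate("f", sigmoid)          # forget gate: how much of C_{t-1} to keep
    i = gate("i", sigmoid)          # input gate: how much of the candidate to add
    c_tilde = gate("c", math.tanh)  # candidate cell state
    o = gate("o", sigmoid)          # output gate
    # C_t = f_t * C_{t-1} + i_t * C~_t ; h_t = o_t * tanh(C_t), all element-wise
    c = [ft * cp + it * ct for ft, cp, it, ct in zip(f, c_prev, i, c_tilde)]
    h = [ot * math.tanh(ct) for ot, ct in zip(o, c)]
    return h, c

# Hypothetical weights for hidden size 1 and input size 1 (each W is 1 x 2)
params = {
    "W_f": [[0.5, 0.5]], "b_f": [1.0],
    "W_i": [[0.4, 0.6]], "b_i": [0.0],
    "W_c": [[0.3, 0.9]], "b_c": [0.0],
    "W_o": [[0.2, 0.7]], "b_o": [0.0],
}
h, c = [0.0], [0.0]
for x_t in ([1.0], [0.5], [-1.0]):
    h, c = lstm_step(x_t, h, c, params)
    print([round(v, 4) for v in h], [round(v, 4) for v in c])
```

Because the cell state is updated additively ($f_t \odot C_{t-1} + \dots$) rather than being squashed through a nonlinearity at every step, information can persist across many time steps when the forget gate stays close to 1.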

Deep learning has transformed the landscape of artificial intelligence, revolutionizing how machines perceive and interact with data. Its ability to automatically learn intricate patterns and representations from vast amounts of data has fueled breakthroughs in image and speech recognition, natural language processing, and many other domains. As the technology continues to advance, deep learning promises to further reshape industries, improve decision-making processes, and unlock new frontiers in scientific discovery and societal impact. Embracing its potential while addressing challenges such as interpretability and ethical concerns will be pivotal in guiding its future applications responsibly and effectively.