“Attention Is All You Need” is a landmark research paper published in 2017 (7 years ago!) by Vaswani et al. at Google.

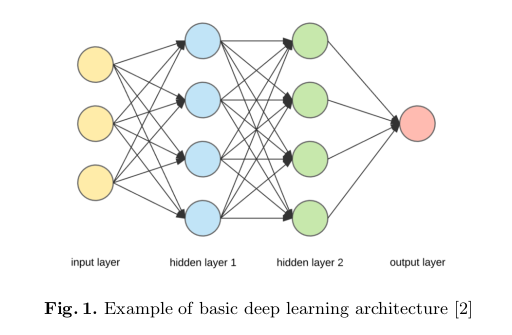

It introduced a new deep learning architecture called the Transformer, a type of neural network that relies entirely on attention mechanisms. The Transformer achieved state-of-the-art results in natural language processing (NLP) tasks such as machine translation.

What problem did it set out to address?

Before Transformers, recurrent neural networks (RNNs) were commonly used for NLP tasks. RNNs process data sequentially, one element at a time, which suits tasks where the order of the text matters. However, they struggle to capture long-range dependencies between words in a sentence, which limits their ability to understand more complex sentences.
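
To make “one element at a time” concrete, here is a minimal sketch of a toy RNN with a single scalar hidden state. The update rule `h_t = tanh(w_x * x_t + w_h * h_{t-1} + b)` and the weight values are illustrative assumptions, not taken from any particular model. Each step depends on the previous hidden state, so the loop cannot be parallelised, and in this toy setting the signal from the first input shrinks at every step.

```python
import math

def rnn_forward(inputs, w_x, w_h, b):
    """Run a toy scalar RNN over a sequence, one element at a time.

    Update rule (an illustrative assumption): h_t = tanh(w_x * x_t + w_h * h_{t-1} + b)
    """
    h = 0.0  # initial hidden state
    states = []
    for x in inputs:  # strictly sequential: step t needs h from step t-1
        h = math.tanh(w_x * x + w_h * h + b)
        states.append(h)
    return states

# Only the first element carries information; later elements are zero.
states = rnn_forward([1.0, 0.0, 0.0, 0.0, 0.0], w_x=1.0, w_h=0.5, b=0.0)
for t, h in enumerate(states):
    print(t, round(h, 4))  # the first input's influence fades with distance
```

Real RNNs use vectors and weight matrices (and variants such as LSTMs were designed to ease this fading), but the sequential dependency between steps is the same.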

Solution

Attention mechanisms had already been used alongside RNNs, but the Transformer made attention its central building block. Attention allows the model to focus on the parts of the input sentence that are most relevant to the current processing step.
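
The paper defines scaled dot-product attention as softmax(QKᵀ / √d_k)·V, where Q, K and V are the query, key and value matrices and d_k is the key dimension. Below is a minimal pure-Python sketch of that formula. As a simplification, it uses the token vectors directly as Q, K and V; the real Transformer first multiplies them by learned projection matrices and runs several attention “heads” in parallel. The tokens and 2-dimensional vectors are hypothetical.

```python
import math

def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def attention(Q, K, V):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V.

    Q, K, V are lists of row vectors, one row per token.
    Returns (outputs, weights); weights[i][j] is how much token i attends to token j.
    """
    d_k = len(K[0])
    outputs, weights = [], []
    for q in Q:
        # Relevance score of this query against every key, scaled by sqrt(d_k)
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in K]
        w = softmax(scores)
        weights.append(w)
        # Output is the attention-weighted sum of the value vectors
        out = [sum(w_j * v[c] for w_j, v in zip(w, V)) for c in range(len(V[0]))]
        outputs.append(out)
    return outputs, weights

tokens = ["the", "cat", "sat"]                # hypothetical 3-token sentence
X = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]      # hypothetical 2-d token vectors
outputs, weights = attention(X, X, X)         # self-attention, no learned projections
for tok, row in zip(tokens, weights):
    print(tok, [round(w, 3) for w in row])
```

Each row of `weights` sums to 1, so every output is a blend of all value vectors, weighted by relevance.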

Transformers use an encoder-decoder structure. The encoder processes the input sentence, and the decoder generates the output sequence. Self-attention allows the model to attend to all parts of the input sentence to understand the relationships between them, while masked self-attention is used in the decoder to prevent the model from referencing future words during generation.
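
The masking in the decoder can be sketched as follows: before the softmax, scores for future positions are set to negative infinity, so they receive exactly zero weight. This is a minimal illustration with hypothetical raw scores; `causal_mask` and `masked_softmax` are names chosen here for clarity, not functions from a specific library.

```python
import math

def causal_mask(n):
    """mask[i][j] is True when position i may attend to position j (j <= i)."""
    return [[j <= i for j in range(n)] for i in range(n)]

def masked_softmax(scores, allowed):
    # Future positions get -inf, so exp() maps them to exactly 0.
    masked = [s if ok else float("-inf") for s, ok in zip(scores, allowed)]
    m = max(masked)  # the diagonal is always allowed, so m is finite
    exps = [math.exp(s - m) for s in masked]
    total = sum(exps)
    return [e / total for e in exps]

scores = [[0.5, 1.0, 2.0],
          [0.3, 0.1, 0.9],
          [1.2, 0.4, 0.0]]  # hypothetical raw attention scores
mask = causal_mask(3)
weights = [masked_softmax(row, allowed) for row, allowed in zip(scores, mask)]
for row in weights:
    print([round(w, 3) for w in row])  # upper triangle is all zeros
```

The first token can only attend to itself, the second to the first two, and so on, which is what lets the decoder generate text left to right without “seeing the answer”.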

Transformers can attend to all parts of an input sentence simultaneously, allowing them to capture relationships between words regardless of their distance in the sequence, which makes them more powerful for complex language tasks. Because they do not process tokens one after another, the Transformer architecture also allows data to be processed in parallel, making it faster to train than RNNs.

How does the Transformer work?

Training a Transformer follows the same essential steps as other neural networks: the forward pass, loss calculation, backpropagation, and weight update.

Training data is prepared first, depending on the specific NLP task the model is being trained for. For instance, training a model for translation requires a dataset of paired sentences in two languages (source and target). Training a model to answer questions requires a dataset of questions, their corresponding answers, and the relevant passages where the answers can be found.

The data is then preprocessed (e.g. punctuation may be removed, text may be converted to lowercase, and the text is tokenised and mapped to numerical representations). Text is split into individual units called ‘tokens’ (whole words or sub-word pieces) so that the Transformer can process information in smaller, more manageable units. Each token is converted into a numerical representation called a ‘token embedding’. The embedding acts like a code that captures the meaning of the token, and the model’s layers then refine it with context from the surrounding tokens. The Transformer uses these embeddings in the attention mechanism to compute relevance scores that capture the relationships between different parts of the sentence.
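
A toy version of this pipeline (lowercasing, punctuation removal, tokenisation, ID lookup, embedding lookup) might look like the sketch below. It uses simple whitespace word tokens for readability; the original paper used sub-word vocabularies instead. The embedding values here are random placeholders, whereas in a real model they are learned during training, and `d_model=4` is an arbitrary illustrative size.

```python
import random
import string

def preprocess(text):
    """Lowercase, strip punctuation, and split on whitespace (word-level tokens)."""
    text = text.lower().translate(str.maketrans("", "", string.punctuation))
    return text.split()

def build_vocab(token_lists):
    """Assign each distinct token an integer ID in order of first appearance."""
    vocab = {}
    for tokens in token_lists:
        for tok in tokens:
            if tok not in vocab:
                vocab[tok] = len(vocab)
    return vocab

def make_embeddings(vocab_size, d_model, seed=0):
    """Placeholder embedding table: one random vector per token ID.
    In a trained model these vectors are learned parameters."""
    rng = random.Random(seed)
    return [[rng.uniform(-0.1, 0.1) for _ in range(d_model)] for _ in range(vocab_size)]

sentences = ["The cat sat on the mat.", "The dog chased the cat!"]
token_lists = [preprocess(s) for s in sentences]
vocab = build_vocab(token_lists)
ids = [vocab[t] for t in token_lists[0]]        # tokens -> integer IDs
embeddings = make_embeddings(len(vocab), d_model=4)
vectors = [embeddings[i] for i in ids]          # IDs -> embedding vectors
print(token_lists[0])
print(ids)
```

Note that both occurrences of “the” map to the same ID and therefore the same embedding; distinguishing them by context is the job of the attention layers.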

As with other neural networks, information flows through the encoder and decoder layers of the Transformer during the forward pass. Each layer performs its calculations, and a prediction is generated.

The model’s prediction is compared with the desired output, and the difference between them is measured by a loss function, which quantifies the error.
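
For language models that predict one token at a time, a common choice of loss is cross-entropy: the negative log of the probability the model assigned to the correct token. The sketch below shows plain cross-entropy on hypothetical scores (“logits”) over a 3-token vocabulary; the original paper additionally applied label smoothing, which is omitted here.

```python
import math

def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def cross_entropy(logits, target_id):
    """Negative log-probability the model assigns to the correct token."""
    probs = softmax(logits)
    return -math.log(probs[target_id])

logits = [2.0, 0.5, -1.0]  # hypothetical model scores for a 3-token vocabulary
print(cross_entropy(logits, target_id=0))  # correct token was the top guess: small loss
print(cross_entropy(logits, target_id=2))  # correct token scored lowest: large loss
```

The loss is 0 only when the model assigns probability 1 to the correct token, and it grows the less confident the model is in the right answer.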

Next, the error is propagated backwards through the decoder layers. Backpropagation calculates gradients, which indicate how much each weight or bias in the network should be adjusted to reduce the error on future predictions.
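
Backpropagation is the chain rule applied layer by layer. As a deliberately tiny stand-in for a full network, the sketch below uses a single linear “neuron” (`w * x + b`) with squared-error loss, computes its gradients analytically, and checks them against finite differences. The model and the numbers are illustrative assumptions; a Transformer applies the same principle across millions of parameters.

```python
def forward(w, b, x):
    return w * x + b  # a single linear "neuron" standing in for the network

def loss(w, b, x, y):
    return (forward(w, b, x) - y) ** 2  # squared error

def gradients(w, b, x, y):
    """Backpropagation by the chain rule:
    dL/dy_hat = 2 * (y_hat - y), dy_hat/dw = x, dy_hat/db = 1."""
    err = forward(w, b, x) - y
    return 2 * err * x, 2 * err

def numerical_gradients(w, b, x, y, eps=1e-6):
    """Central finite differences, used only to check the analytic gradients."""
    dw = (loss(w + eps, b, x, y) - loss(w - eps, b, x, y)) / (2 * eps)
    db = (loss(w, b + eps, x, y) - loss(w, b - eps, x, y)) / (2 * eps)
    return dw, db

w, b, x, y = 0.5, 0.1, 2.0, 3.0  # hypothetical weight, bias, input, target
print(gradients(w, b, x, y))
print(numerical_gradients(w, b, x, y))
```

Both gradients come out negative here, meaning that increasing `w` or `b` would reduce the loss for this example.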

Once the gradients for the decoder have been calculated, the error is propagated further back through the encoder layers. As with the decoder, the gradients for the encoder’s weights and biases reflect how much each one contributed to the overall error.

Optimisation algorithms (the original paper used Adam) then adjust the weights and biases based on these gradients. This process repeats for many iterations until the model reaches the desired level of performance.
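
The full loop (forward pass, loss, gradients, weight update, repeat until the loss is low enough) can be sketched on a tiny linear model. For simplicity this uses plain full-batch gradient descent rather than Adam, and the learning rate, stopping threshold and data points are all illustrative assumptions.

```python
def train(data, lr=0.05, target_loss=1e-6, max_steps=10_000):
    """Plain full-batch gradient descent on a one-weight, one-bias linear model.

    Repeats forward pass -> loss -> gradients -> update until the mean loss
    falls below target_loss (the "desired level of performance")
    or max_steps is exhausted.
    """
    w, b = 0.0, 0.0
    for step in range(max_steps):
        grad_w = grad_b = 0.0
        total = 0.0
        for x, y in data:
            err = (w * x + b) - y   # forward pass and error
            total += err ** 2       # squared-error loss
            grad_w += 2 * err * x   # gradients via the chain rule
            grad_b += 2 * err
        n = len(data)
        if total / n < target_loss:
            break                   # good enough: stop training
        w -= lr * grad_w / n        # weight update (step against the gradient)
        b -= lr * grad_b / n
    return w, b, step

data = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0)]  # hypothetical points on y = 2x + 1
w, b, steps = train(data)
print(round(w, 3), round(b, 3), steps)
```

Optimisers such as Adam follow the same loop but adapt the step size for each parameter, which tends to work better for large models.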

An example to understand the process

- You’re training a dog to fetch a ball.

- You throw the ball (forward pass), and the dog chases something else (wrong prediction).

- You gently guide the dog back to the ball (backpropagation).

- With repeated throws and gentle corrections, the dog learns to fetch the ball consistently (improved performance).

As the model is exposed to more data and the backpropagation process continues, it can keep refining its predictions and improving its performance. However, backpropagation can be computationally expensive, especially for very large neural networks.

Challenges faced by Transformers (and other deep learning models)

Since Transformers depend on data for training and fine-tuning, the quality, quantity, and neutrality of that data directly affect the model’s outputs. There are also concerns about data privacy and security throughout the AI lifecycle, since the training data may include personal information. As AI systems become more complex and more integrated into society, there is a risk of losing transparency and control over how these systems operate.