Once a model is developed, the next phase is to measure its performance using evaluation metrics. There are many classification metrics out there, but this article covers only the confusion matrix and the metrics derived from it.

It focuses on the following points:

- What is a confusion matrix?

- The four outputs in a confusion matrix

- Advanced classification metrics

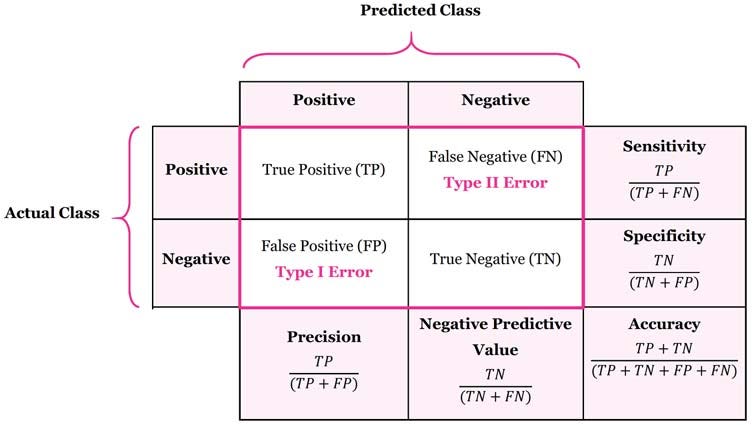

A confusion matrix is a tool for determining the performance of a classifier. It contains information about actual and predicted classifications. The table below shows the confusion matrix of a two-class classifier that separates spam from non-spam.

Let’s understand the four outputs in a confusion matrix.

1. True Positive (TP) is the number of correct predictions that an example is positive, which means a positive example correctly identified as positive.

Example: The given class is spam and the classifier has correctly predicted it as spam.

2. False Negative (FN) is the number of incorrect predictions that an example is negative, which means a positive example incorrectly identified as negative.

Example: The given class is spam; however, the classifier has incorrectly predicted it as non-spam.

3. False Positive (FP) is the number of incorrect predictions that an example is positive, which means a negative example incorrectly identified as positive.

Example: The given class is non-spam; however, the classifier has incorrectly predicted it as spam.

4. True Negative (TN) is the number of correct predictions that an example is negative, which means a negative example correctly identified as negative.

Example: The given class is non-spam and the classifier has correctly predicted it as non-spam.
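The four outcomes above can be counted directly from paired lists of actual and predicted labels. The following is a minimal sketch, assuming the labels are the strings `"spam"` and `"non-spam"` and that spam is the positive class. The function name `confusion_counts` and the five-email sample are hypothetical and are not part of the article's dataset.

```python
def confusion_counts(actual, predicted, positive="spam"):
    """Count TP, FN, FP and TN for a two-class problem.

    `positive` names the label treated as the positive class
    (an assumption made for this illustration).
    """
    if len(actual) != len(predicted):
        raise ValueError("actual and predicted must have the same length")

    tp = fn = fp = tn = 0
    for a, p in zip(actual, predicted):
        if a == positive and p == positive:
            tp += 1  # spam correctly predicted as spam
        elif a == positive:
            fn += 1  # spam incorrectly predicted as non-spam
        elif p == positive:
            fp += 1  # non-spam incorrectly predicted as spam
        else:
            tn += 1  # non-spam correctly predicted as non-spam
    return {"TP": tp, "FN": fn, "FP": fp, "TN": tn}


# Hypothetical toy data, not the article's 100-email dataset.
actual = ["spam", "spam", "non-spam", "non-spam", "spam"]
predicted = ["spam", "non-spam", "spam", "non-spam", "spam"]

counts = confusion_counts(actual, predicted)
print(counts)  # {'TP': 2, 'FN': 1, 'FP': 1, 'TN': 1}
```

Each email falls into exactly one of the four cells, so the four counts always add up to the number of examples.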

Now, let’s look at some advanced classification metrics based on the confusion matrix. These metrics are expressed mathematically in Table 1, with an email classification example shown in Table 2. The classification problem has spam and non-spam classes, and the dataset contains 100 examples: 65 are spam and 35 are non-spam.
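As a quick sanity check, the counts used in this article's worked calculations (TP = 45, FN = 20, FP = 5, TN = 30) can be laid out as a 2x2 matrix and compared with the dataset description. This is only a sketch: putting actual classes in rows and predicted classes in columns is an assumed layout, and the variable names are illustrative.

```python
# Counts taken from the worked calculations in this article.
TP, FN, FP, TN = 45, 20, 5, 30

# Assumed layout: rows are actual classes, columns are predicted classes.
matrix = [
    [TP, FN],  # actual spam:     predicted spam, predicted non-spam
    [FP, TN],  # actual non-spam: predicted spam, predicted non-spam
]

actual_spam = sum(matrix[0])      # all emails whose true class is spam
actual_non_spam = sum(matrix[1])  # all emails whose true class is non-spam
total = actual_spam + actual_non_spam

print(actual_spam, actual_non_spam, total)  # 65 35 100
```

The row totals match the 65 spam and 35 non-spam emails described above.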

Sensitivity is also referred to as True Positive Rate or Recall. It measures the proportion of positive examples that the classifier labels as positive, and higher is better. In this example, it is the proportion of all spam emails that the classifier identifies as spam.

Sensitivity = TP/(TP+FN) = 45/(45+20) = 69.23%

That is, 69.23% of the spam emails are correctly classified as spam and kept out of the non-spam emails.

Specificity is also known as True Negative Rate. It measures the proportion of negative examples that the classifier labels as negative, and it should also be high. In this example, it is the proportion of all non-spam emails that the classifier identifies as non-spam.

Specificity = TN/(TN+FP) = 30/(30+5) = 85.71%

That is, 85.71% of the non-spam emails are correctly classified as non-spam and kept out of the spam emails.
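The two rates above can be reproduced with a few lines of Python. This is a minimal sketch using the article's counts; the function names are illustrative, and the functions do not guard against a zero denominator.

```python
def sensitivity(tp, fn):
    """True positive rate (recall): TP / (TP + FN)."""
    return tp / (tp + fn)


def specificity(tn, fp):
    """True negative rate: TN / (TN + FP)."""
    return tn / (tn + fp)


sens = sensitivity(tp=45, fn=20)
spec = specificity(tn=30, fp=5)

print(f"Sensitivity: {sens:.2%}")  # Sensitivity: 69.23%
print(f"Specificity: {spec:.2%}")  # Specificity: 85.71%
```

Sensitivity only looks at the actual-spam row of the matrix and specificity only at the actual-non-spam row, which is why neither one alone describes the whole classifier.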

Precision is the ratio of the number of correctly classified positive examples to the total number of predicted positive examples. It shows how correct the positive predictions are.

Precision = TP/(TP+FP) = 45/(45+5) = 90%

That is, 90% of the examples classified as spam are actually spam.

Accuracy is the proportion of all predictions that are correct.

Accuracy = (TP+TN)/(TP+FN+FP+TN) = (45+30)/(45+20+5+30) = 75%

That is, 75% of the examples are correctly classified by the classifier.
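Precision and accuracy follow the same pattern. The sketch below uses the article's counts; the function names are illustrative, and zero denominators are not handled.

```python
def precision(tp, fp):
    """Share of predicted positives that are truly positive: TP / (TP + FP)."""
    return tp / (tp + fp)


def accuracy(tp, fn, fp, tn):
    """Share of all predictions that are correct: (TP + TN) / total."""
    return (tp + tn) / (tp + fn + fp + tn)


prec = precision(tp=45, fp=5)
acc = accuracy(tp=45, fn=20, fp=5, tn=30)

print(f"Precision: {prec:.2%}")  # Precision: 90.00%
print(f"Accuracy: {acc:.2%}")    # Accuracy: 75.00%
```

Note that precision is computed over a column of the matrix (everything predicted as spam), while accuracy uses all four cells.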

F1 score is the harmonic mean of recall (sensitivity) and precision. It can be a good choice when you want a balance between precision and recall.

It combines recall and precision in a single number, which makes it easier to compare models that have low recall and high precision, or vice versa.
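The article does not work out F1 for the email example, so the following sketch applies the standard formula, F1 = 2 × Precision × Recall / (Precision + Recall), to the precision and recall computed earlier. The function name `f1_score` is illustrative, and the case where both inputs are zero is not handled.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)


# Values from the article's example: TP = 45, FN = 20, FP = 5.
recall = 45 / (45 + 20)     # sensitivity, about 69.23%
precision = 45 / (45 + 5)   # 90%

f1 = f1_score(precision, recall)
print(f"F1 score: {f1:.2%}")  # F1 score: 78.26%
```

The result sits closer to the lower of the two inputs (recall) than a plain average would, which is how the harmonic mean penalizes a model that is strong on one metric and weak on the other.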