RankNet, introduced by Microsoft researchers in their paper [1], is a machine learning algorithm developed for the essential task of “learning to rank” in information retrieval systems.

RankNet was one of the first neural network-based approaches to tackle the ranking problem, paving the way for more advanced learning-to-rank algorithms (LambdaRank, LambdaMART, etc. [4]). Its key contribution was framing the ranking task as a pairwise comparison problem, using a probabilistic approach to learn the relative order of items.

This same approach can apply to various ranking scenarios, such as:

- Ranking search results in web search engines

- Ordering product recommendations in e-commerce platforms

- Prioritizing news articles on a news website

- Sorting job listings on a job search platform

- Top-k suggestions during auto-complete

In this post, I’ll give an easy-to-understand overview of the RankNet architecture and share a simplified implementation using PyTorch.

If you want to learn more about the different loss functions used in “learning to rank” problems, then please visit my other post on this topic.

To understand how RankNet works, consider a simple example of ranking movie search results on a streaming service. Imagine a user searching for “sci-fi action” on a movie streaming platform. The service needs to rank the search results to show the most relevant movies first.

For each movie, we have features like relevance_to_genre, user_rating, cast, etc. Let’s consider these 2 movies:

Movie A: “The Matrix” (Sci-fi: 9, Action: 8, Romance: 1, Rating: 4.5)

Movie B: “Titanic” (Sci-fi: 1, Action: 5, Romance: 7, Rating: 4.8)

In our training data, we know that users typically prefer “The Matrix” for “sci-fi action” searches.

Step 1: Generate the feature set for each movie and feed the two feature vectors into RankNet as a pair of inputs.

Input 1: [9, 8, 1, 4.5]

Input 2: [1, 5, 7, 4.8]

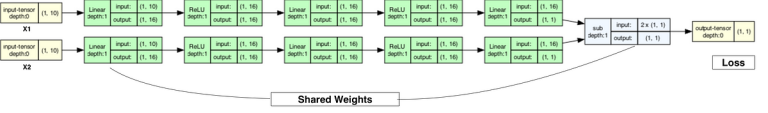

Step 2: Process the input pair of movies through the RankNet model via its hidden layers. The last layer predicts a score for each movie.

Let’s say the network outputs the following (ideally, we want “Titanic” to receive a much lower score than “The Matrix”):

Score for “The Matrix”: 0.7

Score for “Titanic”: 0.6
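As a rough illustration of Steps 1 and 2, here is a minimal PyTorch sketch of a scoring network. The class name `RankNetScorer`, the single hidden layer, and its size of 16 are assumptions made for this example, not details from the paper. The important point is that the same network (with the same weights) scores both movies in the pair. Since this model is untrained, its scores will not match the 0.7 and 0.6 used in the walkthrough.

```python
import torch
import torch.nn as nn


class RankNetScorer(nn.Module):
    """Maps one item's feature vector to a single relevance score.

    Hypothetical architecture: one hidden layer of 16 units.
    """

    def __init__(self, num_features: int, hidden_size: int = 16):
        super().__init__()
        self.net = nn.Sequential(
            nn.Linear(num_features, hidden_size),
            nn.ReLU(),
            nn.Linear(hidden_size, 1),  # one unbounded score per item
        )

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        # x has shape (batch, num_features); return shape (batch,)
        return self.net(x).squeeze(-1)


torch.manual_seed(0)
model = RankNetScorer(num_features=4)

# Feature order: [sci-fi, action, romance, rating]
matrix = torch.tensor([[9.0, 8.0, 1.0, 4.5]])
titanic = torch.tensor([[1.0, 5.0, 7.0, 4.8]])

# The same model scores both items of the pair.
score_a = model(matrix)
score_b = model(titanic)
print(score_a.shape, score_b.shape)
```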

Step 3: RankNet uses the logistic function (more about it in the next section) to compute the probability that “The Matrix” (A) should be ranked higher than “Titanic” (B).

P_ij(A > B) = 1 / (1 + e^-(score_A - score_B))

= 1 / (1 + e^-(0.7 - 0.6)) ≈ 0.524

This shows a 52.4% probability that ‘The Matrix’ should be ranked higher than ‘Titanic’ for this search query. However, according to our training data, users actually prefer ‘The Matrix’ for this query, so a probability barely above 50% means our model isn’t yet predicting the scores accurately.
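The Step 3 calculation can be reproduced with a few lines of plain Python. The function name `pairwise_probability` is just a label chosen for this sketch. With the scores above it returns roughly 0.52, and swapping the arguments gives the complementary probability that “Titanic” should be ranked higher.

```python
import math


def pairwise_probability(score_a: float, score_b: float) -> float:
    """Probability that item A should rank above item B (logistic of the score gap)."""
    return 1.0 / (1.0 + math.exp(-(score_a - score_b)))


p_matrix_over_titanic = pairwise_probability(0.7, 0.6)
p_titanic_over_matrix = pairwise_probability(0.6, 0.7)

print(p_matrix_over_titanic)
print(p_titanic_over_matrix)  # complements the value above: the two sum to 1
```

Only the difference between the two scores matters here: equal scores always give a probability of 0.5, whatever their absolute values.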

Step 4: RankNet uses binary cross-entropy loss, which measures the difference between the predicted probability distribution and the true probability distribution. For a pair of items (i, j), the loss (L) is:

L = -P̄_ij log(P_ij) - (1 - P̄_ij) log(1 - P_ij)

The place:

- P̄_ij: The target probability (1 if i should be ranked higher than j, 0 if j should be ranked higher than i)

- P_ij: The predicted probability that i should be ranked higher than j

In Step 3, we computed P_ij as 0.524. And we know “The Matrix” is the preferred result for the search query, so P̄_ij is equal to 1.

L = -P̄_ij log(P_ij) - (1 - P̄_ij) log(1 - P_ij)

= -1 * log(0.524) - 0 * log(1 - 0.524) ≈ 0.646
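Here is the same loss calculation as a small Python sketch, taking log as the natural logarithm, which is what yields the 0.646 above. The helper name `pairwise_bce_loss` is made up for this example. The second call shows how the loss would shrink if the model were more confident in the correct order.

```python
import math


def pairwise_bce_loss(p_pred: float, p_target: float) -> float:
    """Binary cross-entropy for one pair.

    p_pred must lie strictly between 0 and 1, otherwise log() is undefined.
    """
    return -(p_target * math.log(p_pred)
             + (1.0 - p_target) * math.log(1.0 - p_pred))


# Values from the walkthrough: predicted 0.524, target 1 ("The Matrix" preferred).
loss = pairwise_bce_loss(0.524, 1.0)

# A hypothetical, more confident (and correct) prediction gives a smaller loss.
confident_loss = pairwise_bce_loss(0.9, 1.0)

print(round(loss, 3), round(confident_loss, 3))
```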

Step 5: The model uses this loss to update its weights through backpropagation (i.e., computing gradients w.r.t. this loss and propagating them back through all the layers in the model), aiming to minimize the difference between its predictions and the ground truth. For more information about backpropagation, please refer to this video:

https://www.3blue1brown.com/lessons/backpropagation-calculus

In practice, this process is repeated over many training samples (potentially millions) until the model’s performance improves.
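To make Step 5 concrete, the sketch below runs a few update steps on the single movie pair from the example. Everything about the setup is an assumption for illustration: the small `nn.Sequential` network, plain SGD with a learning rate of 0.01, and 20 steps. It uses `nn.BCEWithLogitsLoss` on the score difference, which applies the sigmoid and the binary cross-entropy from Steps 3 and 4 in one numerically stable call.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Hypothetical scoring network: 4 features in, 1 score out.
scorer = nn.Sequential(nn.Linear(4, 16), nn.ReLU(), nn.Linear(16, 1))
optimizer = torch.optim.SGD(scorer.parameters(), lr=0.01)
loss_fn = nn.BCEWithLogitsLoss()  # sigmoid + binary cross-entropy

preferred = torch.tensor([[9.0, 8.0, 1.0, 4.5]])  # "The Matrix"
other = torch.tensor([[1.0, 5.0, 7.0, 4.8]])      # "Titanic"
target = torch.ones(1, 1)  # 1 means the first item should rank higher

losses = []
for step in range(20):
    optimizer.zero_grad()
    # Score both items with the same network and compare them.
    score_diff = scorer(preferred) - scorer(other)
    loss = loss_fn(score_diff, target)
    loss.backward()   # backpropagate gradients to every layer
    optimizer.step()  # update the weights
    losses.append(loss.item())

print(f"first loss: {losses[0]:.3f}, last loss: {losses[-1]:.3f}")
```

A real training loop would iterate over batches of many different pairs rather than repeating one pair, but the zero-grad, forward, backward, step pattern stays the same.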

How are gradients computed?

For simplicity, let’s denote P̄_ij as y, P_ij as P, and s_i - s_j as s. To compute the gradient, we first take the derivative of the loss (L) w.r.t. P.

Note: we will make use of the chain rule to compute the derivative. If you are unfamiliar with this topic, then please refer to this video that clearly explains this concept.

dL/dP = d/dP[-y * log(P) - (1-y) * log(1-P)]

= -y * (1/P) + (1-y) * (1/(1-P))

= (-y * (1-P) + (1-y) * P) / (P(1-P))

= (P - y) / (P(1-P))

But here, P represents a sigmoid function.

P = sigmoid(s) = 1 / (1 + e^(-s))

To get the gradient w.r.t. s, we use the chain rule:

dL/ds = dL/dP * dP/ds

We already have dL/dP = (P - y) / (P(1-P)).

The derivative of the sigmoid function is:

dP/ds = P(1-P)

Substituting them:

dL/ds = [(P - y) / (P(1-P))] * [P(1-P)]

= P - y

Since we’re using a neural network architecture, we will backpropagate this gradient to each layer within the network. If P is close to y, this gradient will be small, showing that our prediction is good. If P is far from y, the gradient will be larger, leading to bigger updates to our network parameters (i.e., weights).
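If you want to sanity-check the result dL/ds = P - y, one option is to compare it against a numerical derivative. The sketch below uses a central finite difference in plain Python (not autograd), with the score difference s = 0.1 and target y = 1 from the movie example. The function names are chosen for this illustration only.

```python
import math


def sigmoid(s: float) -> float:
    return 1.0 / (1.0 + math.exp(-s))


def loss_from_score_diff(s: float, y: float) -> float:
    """Binary cross-entropy as a function of the score difference s."""
    p = sigmoid(s)
    return -(y * math.log(p) + (1.0 - y) * math.log(1.0 - p))


def numerical_gradient(s: float, y: float, eps: float = 1e-6) -> float:
    """Central finite-difference estimate of dL/ds."""
    return (loss_from_score_diff(s + eps, y)
            - loss_from_score_diff(s - eps, y)) / (2.0 * eps)


s, y = 0.1, 1.0                  # s = 0.7 - 0.6, "The Matrix" preferred
analytic = sigmoid(s) - y        # the closed form derived above: P - y
numeric = numerical_gradient(s, y)
print(analytic, numeric)

# When P is already close to y, the gradient is much smaller in magnitude.
small_gradient = sigmoid(5.0) - 1.0
print(small_gradient)
```

The two printed gradients should agree to several decimal places, and the last value illustrates the point above: a confident, correct prediction produces only a tiny update.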