Align your LLM with an approach that is more memory- and speed-efficient than DPO.

Aligning LLMs for optimal performance typically begins with Supervised Fine-Tuning (SFT). The standard practice involves loading the model in 4-bit mode and applying a LoRA (Low-Rank Adaptation) training configuration. Direct Preference Optimization (DPO) is another prominent technique for optimizing models at lower cost. SFT is commonly coupled with DPO to further improve model performance, but this two-stage pipeline can be costly. Odds Ratio Preference Optimization (ORPO) replaces SFT+DPO with a single step that improves performance by adding an odds-ratio-based penalty to the conventional negative log-likelihood (NLL) loss, which differentiates the generation styles of favored and disfavored responses.

Another technique for more stable training and improved performance is CPO-SimPO. It aims to counter SFT’s dependency on training data quality, DPO’s memory and speed inefficiency (when dealing with both a parametrized policy and a reference policy), and the generation of long but low-quality sequences. In this blog, I’ll introduce this approach in detail and further train Phi3-Mini-4K-Instruct with CPO-SimPO.

CPO-SimPO combines two preference optimization methods: CPO and SimPO.

Introduced by Haoran Xu et al., 2024, the CPO objective is an approximation of the DPO objective obtained by dropping the reference policy term from the original DPO loss. In addition, a behavior cloning (BC) regularizer is included to ensure the model does not deviate from the preferred data distribution.
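As a reading aid, the CPO objective described above can be written out as follows. This is my transcription of the usual presentation, not a quotation from the paper: $\pi_\theta$ is the policy being trained, $y_w$ and $y_l$ are the chosen and rejected responses for prompt $x$, $\beta$ is a scaling hyperparameter, and $\sigma$ is the sigmoid function. The first term is the preference term with the reference policy removed, and the second is the BC (NLL) regularizer on the chosen responses.

```latex
\mathcal{L}_{\text{CPO}}
  = -\,\mathbb{E}_{(x, y_w, y_l) \sim \mathcal{D}}
      \Big[ \log \sigma\big( \beta \log \pi_\theta(y_w \mid x)
                           - \beta \log \pi_\theta(y_l \mid x) \big) \Big]
    \;-\; \mathbb{E}_{(x, y_w) \sim \mathcal{D}}
      \big[ \log \pi_\theta(y_w \mid x) \big]
```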

CPO requires a high-quality preference dataset (format: prompt, chosen, rejected) so that the model learns to avoid even minor flaws in its output.
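To make the (prompt, chosen, rejected) format concrete, here is a minimal sketch of one preference record and a small validity check. The example text and the helper name `is_valid_preference_record` are made up for illustration; real datasets may store `chosen` and `rejected` as lists of chat messages rather than plain strings.

```python
REQUIRED_FIELDS = ("prompt", "chosen", "rejected")

def is_valid_preference_record(record):
    """Return True if the record has non-empty prompt/chosen/rejected strings
    and the chosen and rejected responses actually differ."""
    for field in REQUIRED_FIELDS:
        value = record.get(field)
        if not isinstance(value, str) or not value.strip():
            return False
    return record["chosen"] != record["rejected"]

# Hypothetical record, for illustration only.
example = {
    "prompt": "Solve 2x + 3 = 7.",
    "chosen": "Subtract 3 from both sides to get 2x = 4, so x = 2.",
    "rejected": "x = 5.",
}

print(is_valid_preference_record(example))
```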

Introduced by Yu Meng et al., 2024, SimPO eliminates the need for a reference model, in contrast to regular DPO, by using a length-normalized reward: the average log probability of all tokens in a response under the policy model itself, rather than DPO’s reward, which is defined relative to a reference model. Secondly, it introduces a target reward margin γ to ensure that the reward difference between chosen and rejected responses exceeds this margin.
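The two ideas above can be sketched in a few lines of plain Python. This is an illustrative sketch, not the reference implementation: the function names are hypothetical, the per-token log probabilities are made-up numbers, and the defaults for `beta` and `gamma` simply mirror the values used in the training config later in this post.

```python
def simpo_reward(token_logps, beta=10.0):
    """Length-normalized reward: beta times the mean per-token log probability."""
    return beta * sum(token_logps) / len(token_logps)

def margin_satisfied(chosen_logps, rejected_logps, beta=10.0, gamma=5.4):
    """True if the chosen reward exceeds the rejected reward by more than gamma."""
    gap = simpo_reward(chosen_logps, beta) - simpo_reward(rejected_logps, beta)
    return gap > gamma

# Made-up per-token log probabilities for one preference pair.
chosen = [-0.2, -0.1, -0.3]          # mean log prob: -0.2
rejected = [-1.0, -1.2, -0.8, -1.0]  # mean log prob: -1.0

print(margin_satisfied(chosen, rejected))
```

Because the reward is an average rather than a sum, a response does not gain or lose reward simply by being longer, which is the property that discourages long but low-quality outputs.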

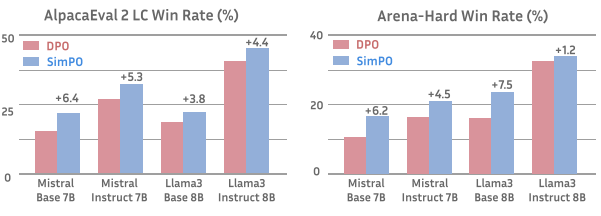

SimPO is more memory- and compute-efficient than DPO because it does not use a reference model, and its length-normalized reward discourages longer but lower-quality sequences. It is reported to outperform DPO on AlpacaEval 2 and Arena-Hard.

Combining both objectives gives the CPO-SimPO loss, which draws on the benefits of both preference optimization methods.
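A minimal sketch of how the combined loss could look for a single preference pair is given below. It is written in plain Python for readability and is not the repository's implementation: the function names are hypothetical, and using the length-averaged log probability in the BC term is an assumption on my part (some formulations use the summed log probability instead). The defaults for `beta`, `gamma`, and `alpha` mirror `beta`, `simpo_gamma`, and `cpo_alpha` in the training config used later in this post.

```python
import math

def neg_log_sigmoid(z):
    """Numerically stable -log(sigmoid(z))."""
    return max(-z, 0.0) + math.log1p(math.exp(-abs(z)))

def cpo_simpo_loss(chosen_logps, rejected_logps, beta=10.0, gamma=5.4, alpha=0.05):
    """SimPO preference term plus an alpha-weighted BC (NLL) term on the chosen response."""
    avg_chosen = sum(chosen_logps) / len(chosen_logps)
    avg_rejected = sum(rejected_logps) / len(rejected_logps)
    # SimPO term: length-normalized reward gap, shifted by the target margin gamma.
    preference_term = neg_log_sigmoid(beta * (avg_chosen - avg_rejected) - gamma)
    # BC regularizer (assumption: averaged over tokens rather than summed).
    bc_term = -alpha * avg_chosen
    return preference_term + bc_term

# Made-up per-token log probabilities.
well_separated = cpo_simpo_loss([-0.2, -0.1, -0.3], [-1.0, -1.2, -0.8])
not_separated = cpo_simpo_loss([-1.0, -1.0, -1.0], [-1.0, -1.0, -1.0, -1.0, -1.0])
print(well_separated < not_separated)
```

Setting `alpha` to zero in this sketch leaves only the SimPO term, which is why the config below needs a non-zero `cpo_alpha` to get the combined behavior.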

We can perform CPO-SimPO training of any Hugging Face model using the official GitHub repository.

We will need to create a Python environment using conda, so if you don’t have conda installed, here’s how you can install it:

mkdir -p ~/miniconda3

wget https://repo.anaconda.com/miniconda/Miniconda3-latest-Linux-x86_64.sh -O ~/miniconda3/miniconda.sh

bash ~/miniconda3/miniconda.sh -b -u -p ~/miniconda3

rm -rf ~/miniconda3/miniconda.sh

~/miniconda3/bin/conda init bash

~/miniconda3/bin/conda init zsh

You’ll need to open a new terminal for the changes to take effect. Now create a Python virtual environment.

conda create -n handbook python=3.10 && conda activate handbook

Then you need to install PyTorch v2.2.2; the installation method depends on your system.

conda install pytorch==2.2.2 torchvision==0.17.2 torchaudio==2.2.2 pytorch-cuda=11.8 -c pytorch -c nvidia

This codebase is built upon the alignment-handbook repo, so we install its package dependencies.

git clone https://github.com/huggingface/alignment-handbook.git

cd ./alignment-handbook/

python -m pip install .

cd ..

You will also need Flash Attention 2 installed:

python -m pip install flash-attn --no-build-isolation
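Before moving on, it can help to confirm that the packages installed so far are visible in the active environment. The sketch below uses only the standard library; the helper name `installed_versions` is hypothetical, and the distribution names `torch`, `flash-attn`, and `alignment-handbook` are my assumption about how these packages register themselves.

```python
from importlib.metadata import PackageNotFoundError, version

def installed_versions(packages):
    """Map each distribution name to its installed version, or None if it is missing."""
    found = {}
    for name in packages:
        try:
            found[name] = version(name)
        except PackageNotFoundError:
            found[name] = None
    return found

# Distribution names are assumptions; adjust them if your environment differs.
report = installed_versions(["torch", "flash-attn", "alignment-handbook"])
for name, ver in report.items():
    print(f"{name}: {ver or 'not installed'}")
```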

Now let’s clone the CPO_SimPO repository:

git clone https://github.com/fe1ixxu/CPO_SIMPO.git

cd CPO_SIMPO

You need to create a .yaml config file to specify the training arguments. Adjust per_device_train_batch_size and max_length according to your GPU specs. Note that we set loss_type: simpo and cpo_alpha to a non-zero value.

# Model arguments

model_name_or_path: microsoft/Phi-3-mini-4k-instruct

torch_dtype: null

use_flash_attention_2: false

# Data training arguments

dataset_mixer:

  princeton-nlp/llama3-ultrafeedback: 1.0

dataset_splits:

- train

- test

preprocessing_num_workers: 12

# CPOTrainer arguments

bf16: true

beta: 10

simpo_gamma: 5.4

cpo_alpha: 0.05

loss_type: simpo

do_eval: true

evaluation_strategy: steps

eval_steps: 400

gradient_accumulation_steps: 4

gradient_checkpointing: true

gradient_checkpointing_kwargs:

  use_reentrant: False

hub_model_id: cpo-simpo-exps

learning_rate: 1.0e-6

log_level: info

logging_steps: 5

lr_scheduler_type: cosine

max_length: 2048

max_prompt_length: 1800

num_train_epochs: 1

optim: adamw_torch

output_dir: outputs/phi3mini4k-cpo-simpo

run_name: phi3mini4k-cpo-simpo

per_device_train_batch_size: 2

per_device_eval_batch_size: 2

push_to_hub: false

save_strategy: "steps"

save_steps: 1000000

report_to:

- none

save_total_limit: 20

seed: 42

warmup_ratio: 0.1
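When adjusting the batch and length settings above for your GPU, two derived quantities are worth keeping an eye on: the effective batch size and the number of tokens left for the response when a prompt hits its limit. The sketch below just does that arithmetic with the values from this config; the helper names are hypothetical, and `num_processes=1` is taken from the accelerate config used in this post.

```python
def effective_batch_size(per_device_train_batch_size, gradient_accumulation_steps, num_processes):
    """Number of preference pairs that contribute to each optimizer step."""
    return per_device_train_batch_size * gradient_accumulation_steps * num_processes

def response_token_budget(max_length, max_prompt_length):
    """Tokens left for the response when the prompt uses its full allowance."""
    if max_prompt_length >= max_length:
        raise ValueError("max_prompt_length must be smaller than max_length")
    return max_length - max_prompt_length

# Values copied from the training config above; one GPU assumed.
print(effective_batch_size(2, 4, num_processes=1))  # 8
print(response_token_budget(2048, 1800))            # 248
```

If you halve per_device_train_batch_size to fit a smaller GPU, doubling gradient_accumulation_steps keeps the effective batch size unchanged.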

Next, we need to specify the hardware configuration. We will use the deepspeed_zero3.yaml config provided in the repository under the accelerate_configs directory. Set num_processes to the number of GPUs you have available. You may need A100 GPUs to avoid CUDA errors.

compute_environment: LOCAL_MACHINE

debug: false

deepspeed_config:

  deepspeed_multinode_launcher: standard

  offload_optimizer_device: none

  offload_param_device: none

  zero3_init_flag: true

  zero3_save_16bit_model: true

  zero_stage: 3

distributed_type: DEEPSPEED

downcast_bf16: 'no'

machine_rank: 0

main_training_function: main

mixed_precision: bf16

num_machines: 1

num_processes: 1

rdzv_backend: static

same_network: true

tpu_env: []

tpu_use_cluster: false

tpu_use_sudo: false

use_cpu: false
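If you are unsure what to put in num_processes, one way to check is to ask PyTorch how many CUDA devices it can see. This is a small convenience sketch, not part of the repository; the helper names are hypothetical, and it falls back to 0 GPUs when PyTorch is not importable.

```python
def detect_gpu_count():
    """Return the number of CUDA devices visible to PyTorch (0 if PyTorch is unavailable)."""
    try:
        import torch
    except ImportError:
        return 0
    return torch.cuda.device_count()

def suggested_num_processes():
    """One process per visible GPU, with a floor of 1."""
    return max(1, detect_gpu_count())

print(f"num_processes: {suggested_num_processes()}")
```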

Provide the paths to your training and accelerate config files and start training. Your final model will be available in output_dir, as specified in the training arguments.

ACCELERATE_LOG_LEVEL=info accelerate launch --config_file accelerate_configs/deepspeed_zero3.yaml scripts/run_cpo.py training_configs/phi3-mini4k-instruct-cpo-simpo.yaml

After uploading the model to your Hugging Face account, you can perform inference as follows:

import torch

from transformers import AutoModelForCausalLM, AutoTokenizer, pipeline

torch.random.manual_seed(0)

model = AutoModelForCausalLM.from_pretrained(

    "abideen/Phi-3-mini-4K-instruct-cpo-simpo",

    device_map="cuda",

    torch_dtype="auto",

    trust_remote_code=True,

)

tokenizer = AutoTokenizer.from_pretrained("abideen/Phi-3-mini-4K-instruct-cpo-simpo")

messages = [

    {"role": "user", "content": "Can you provide ways to eat combinations of bananas and dragonfruits?"},

    {"role": "assistant", "content": "Sure! Here are some ways to eat bananas and dragonfruits together: 1. Banana and dragonfruit smoothie: Blend bananas and dragonfruits together with some milk and honey. 2. Banana and dragonfruit salad: Mix sliced bananas and dragonfruits together with some lemon juice and honey."},

    {"role": "user", "content": "What about solving an 2x + 3 = 7 equation?"},

]

pipe = pipeline(

    "text-generation",

    model=model,

    tokenizer=tokenizer,

)

generation_args = {

"max_new_tokens": 500,

"return_full_text": False,

"temperature": 0.0,

"do_sample": False,

}

output = pipe(messages, **generation_args)

print(output[0]['generated_text'])