Did you know that the AlexNet architecture is among the most influential architectures in deep learning? It is a deep convolutional neural network (CNN) proposed by Alex Krizhevsky and his research group at the University of Toronto.

AlexNet won the ImageNet Large Scale Visual Recognition Challenge (ILSVRC) in 2012. It outperformed the previous state-of-the-art model by a large margin, achieving a top-5 error rate of 15.3%, which was 10.8 percentage points lower than the error rate of the runner-up.

The invention of AlexNet is regarded as one of the breakthroughs in computer vision. It demonstrated the great potential of deep learning by popularizing many key ideas, such as the rectified linear unit (ReLU) activation function, data augmentation, dropout, and overlapping max pooling. The success of AlexNet inspired many convolutional neural network architectures such as VGG, ResNet, and Inception.

If you want to learn more about the AlexNet architecture, you have come to the right place. In this blog post, we will explain the architecture of AlexNet and why it uses ReLU, overlapping max pooling, dropout, and data augmentation. So, let us dig into the article.

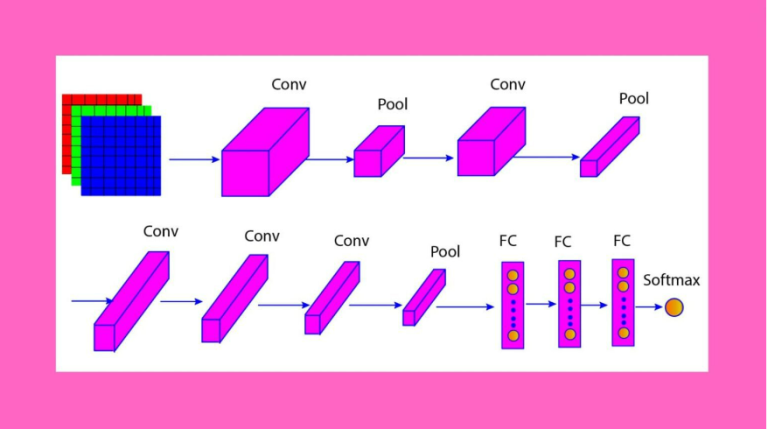

The architecture of AlexNet comprises five convolutional layers, three fully connected layers, and one softmax output layer. The input is an RGB image of size 227×227×3, and the output is the probability of the image belonging to each of the 1000 object categories.
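To make the output layer concrete, here is a minimal sketch of how a softmax turns raw class scores into probabilities. The four scores are hypothetical values chosen for illustration (AlexNet itself produces 1000), and the function is a plain-Python stand-in rather than the original implementation.

```python
import math


def softmax(logits):
    """Convert raw class scores into probabilities that sum to 1."""
    shift = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - shift) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical scores for 4 classes; AlexNet would output 1000 of these.
scores = [2.0, 1.0, 0.1, -1.0]
probs = softmax(scores)

# The predicted class is the index with the highest probability.
predicted_class = max(range(len(probs)), key=probs.__getitem__)
```

The class with the largest score always receives the largest probability, so softmax does not change the prediction; it only makes the scores interpretable as probabilities.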

The figure below shows the various layers of the AlexNet architecture. In the table below, we further list the filter size, number of filters, stride, padding, output size, and activation for each layer. We will discuss these layers in the section below.

There are five convolutional layers in the AlexNet architecture. These layers apply filters that slide across the image to detect features. The first convolutional layer uses filters of size 11×11 to capture broader, low-level features like edges and textures. The filters in the subsequent convolutional layers are smaller (5×5 or 3×3), as they focus on finer details within the features detected earlier.

The max pooling layers help reduce the spatial dimensions of the data while retaining important features. There are three max pooling layers in AlexNet, each with a 3×3 window and a stride of 2.
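The effect of these layers on the spatial size can be traced with the standard output-size formula, floor((n + 2p − k) / s) + 1. The sketch below assumes the commonly cited single-tower configuration from the original paper: the filter counts (96, 256, 384, 384, 256), the stride of 4 in the first convolution, and the padding values are assumptions, since this article only states the kernel sizes, the pooling window, and the 227×227×3 input.

```python
def out_size(n, kernel, stride, padding=0):
    """Spatial output size of a convolution or pooling layer."""
    return (n + 2 * padding - kernel) // stride + 1


# (name, kernel, stride, padding, filters); filters is None for pooling.
# Strides, paddings, and filter counts are assumed, not taken from this article.
LAYERS = [
    ("conv1", 11, 4, 0, 96),
    ("pool1", 3, 2, 0, None),
    ("conv2", 5, 1, 2, 256),
    ("pool2", 3, 2, 0, None),
    ("conv3", 3, 1, 1, 384),
    ("conv4", 3, 1, 1, 384),
    ("conv5", 3, 1, 1, 256),
    ("pool3", 3, 2, 0, None),
]


def trace_shapes(size=227, channels=3):
    """Return (name, height, width, channels) after every layer."""
    shapes = []
    for name, kernel, stride, padding, filters in LAYERS:
        size = out_size(size, kernel, stride, padding)
        if filters is not None:
            channels = filters  # a convolution sets the channel count
        shapes.append((name, size, size, channels))
    return shapes


for name, h, w, c in trace_shapes():
    print(f"{name}: {h}x{w}x{c}")
```

Under these assumptions, the feature map shrinks from 227×227 at the input to 6×6 after the last pooling layer, while the number of channels grows.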

In the AlexNet architecture, two local response normalization (LRN) layers are used after the first and second convolutional layers to normalize the outputs. This helps the network learn better and differentiate between important features in the image while suppressing less relevant activations.
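As a rough illustration, LRN divides each activation by a term that grows with the squared activations of neighboring channels at the same spatial position. The sketch below applies this to a single position; the hyperparameter defaults (k = 2, n = 5, α = 1e-4, β = 0.75) are the values reported in the original paper and are not stated in this article.

```python
def local_response_norm(a, k=2.0, n=5, alpha=1e-4, beta=0.75):
    """Normalize one activation per channel at a single spatial position.

    a: list of activations, one per channel.
    Each activation is divided by (k + alpha * sum of squares of its
    n nearest channels) ** beta. Default values are assumed from the paper.
    """
    num_channels = len(a)
    out = []
    for i in range(num_channels):
        lo = max(0, i - n // 2)
        hi = min(num_channels - 1, i + n // 2)
        sq_sum = sum(a[j] ** 2 for j in range(lo, hi + 1))
        out.append(a[i] / (k + alpha * sq_sum) ** beta)
    return out


# A small activation sitting next to a very large one is damped more
# strongly than the same activation with quiet neighbors.
next_to_strong = local_response_norm([1.0, 100.0])[0]
next_to_quiet = local_response_norm([1.0, 0.0])[0]
```

This competition between adjacent channels is what lets strongly responding features stand out relative to weaker ones.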

There are three fully connected (dense) layers in the AlexNet network, and they are responsible for learning high-level features from the output of the preceding convolutional and max pooling layers. The first two dense layers have 4096 neurons each, while the third dense layer has 1000 neurons corresponding to the 1000 classes in the ImageNet dataset.
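A quick calculation shows how large these dense layers are. The sketch assumes that the last pooling layer produces a 6×6×256 feature map (9216 values once flattened), which follows from the commonly cited configuration but is not stated in this article; the 4096 and 1000 neuron counts come from the text above.

```python
def dense_params(inputs, outputs):
    """Weights plus biases of one fully connected layer."""
    return inputs * outputs + outputs


# Assumption: the flattened input to the first dense layer is 6 * 6 * 256.
flattened = 6 * 6 * 256
sizes = [flattened, 4096, 4096, 1000]

# Pair each layer's input size with its output size.
per_layer = [dense_params(i, o) for i, o in zip(sizes, sizes[1:])]
total_dense = sum(per_layer)

for index, count in enumerate(per_layer, start=1):
    print(f"dense layer {index}: {count:,} parameters")
print(f"total: {total_dense:,} parameters")
```

Under that assumption, the three dense layers alone hold roughly 58.6 million parameters, with the first one accounting for about 37.8 million of them.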

Some of the important features of the AlexNet architecture that made it successful are:

Before the development of the AlexNet architecture, deep convolutional neural networks faced a significant challenge known as the vanishing gradient problem due to the use of sigmoid and tanh functions. These functions tend to saturate at large positive or negative inputs, leading to gradients approaching zero during backpropagation.

AlexNet significantly mitigated the impact of the vanishing gradient problem by using the ReLU activation function. ReLU is non-saturating for positive inputs, allowing gradients to flow freely through the network. This property makes deep CNNs faster to train and more accurate while reducing the vanishing gradient problem.
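The difference can be seen by multiplying the local derivatives of each activation across several layers, which is what backpropagation does through the chain rule. The sketch below is a deliberate simplification: it ignores the weights and evaluates every layer at the same hypothetical pre-activation value x = 2.0, with a hypothetical depth of 8.

```python
import math


def sigmoid_grad(x):
    """Derivative of the sigmoid; at most 0.25, reached at x = 0."""
    s = 1.0 / (1.0 + math.exp(-x))
    return s * (1.0 - s)


def tanh_grad(x):
    """Derivative of tanh; at most 1, and close to 0 for large |x|."""
    return 1.0 - math.tanh(x) ** 2


def relu_grad(x):
    """Derivative of ReLU: 1 for positive inputs, 0 otherwise."""
    return 1.0 if x > 0 else 0.0


def chained_grad(grad_fn, x, depth):
    """Product of `depth` local derivatives, all evaluated at x."""
    return grad_fn(x) ** depth


x, depth = 2.0, 8  # illustrative values, not taken from AlexNet
sigmoid_chain = chained_grad(sigmoid_grad, x, depth)
tanh_chain = chained_grad(tanh_grad, x, depth)
relu_chain = chained_grad(relu_grad, x, depth)
```

With these values, the sigmoid and tanh products shrink to well below one millionth, while the ReLU product stays at exactly 1. Note that `relu_grad` returns 0 for negative inputs, so ReLU units can still block gradients when they are inactive.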

We want to mention that ReLU does not eliminate the vanishing gradient problem completely. We often need other techniques in addition to ReLU to develop efficient and accurate deep learning models while avoiding vanishing gradient problems.

AlexNet uses max pooling to reduce the size and complexity of the feature maps generated by the convolutional layers. It performs a max operation on small regions of the feature map, such as 2×2 or 3×3, and outputs the maximum value. This approach helps preserve the important features while discarding redundant or noisy ones.

From the figure of the AlexNet architecture above, we observe that there are max pooling layers after the first, second, and fifth convolutional layers. Because the 3×3 pooling windows move with a stride of 2, neighboring windows overlap on the feature map. This helps retain more important information and reduces error rates compared to non-overlapping max pooling.
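The following sketch compares overlapping pooling (3×3 window, stride 2) with non-overlapping pooling (2×2 window, stride 2) on a small feature map. The 5×5 values are made up for illustration, and the function is a plain-Python sketch rather than an optimized implementation.

```python
def max_pool_2d(feature_map, size, stride):
    """Max pooling over a 2D list, without padding."""
    rows, cols = len(feature_map), len(feature_map[0])
    pooled = []
    for r in range(0, rows - size + 1, stride):
        row_out = []
        for c in range(0, cols - size + 1, stride):
            window = [
                feature_map[r + i][c + j]
                for i in range(size)
                for j in range(size)
            ]
            row_out.append(max(window))
        pooled.append(row_out)
    return pooled


# Hypothetical 5x5 feature map.
fmap = [
    [1, 3, 2, 0, 1],
    [4, 6, 5, 1, 0],
    [7, 2, 3, 3, 2],
    [0, 1, 4, 8, 5],
    [3, 2, 1, 6, 7],
]

# Window larger than stride: neighboring windows share a row or column.
overlapping = max_pool_2d(fmap, size=3, stride=2)

# Window equal to stride: every value belongs to at most one window.
non_overlapping = max_pool_2d(fmap, size=2, stride=2)
```

Both settings produce a 2×2 output here, but with the 3×3 window the value 7 in the third row falls inside two different windows, whereas with the 2×2 window each value is seen at most once and the last row and column are not covered at all.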

Training deep convolutional networks with a limited dataset can be challenging due to overfitting. To overcome this, AlexNet used data augmentation to increase the size of the training dataset artificially. Data augmentation creates new images by randomly altering the original images. Alterations include cropping, flipping, rotating, adding noise, and changing the colors of the original images.

Data augmentation significantly increases the size and diversity of the training dataset in the AlexNet model, leading to better generalization, improved accuracy, and reduced overfitting.
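Two of the alterations mentioned above, random cropping and horizontal flipping, can be sketched in a few lines. The toy 6×6 "image" of integers, the 4×4 crop size, and the 50% flip probability are illustrative assumptions; this is not the exact augmentation pipeline used to train AlexNet.

```python
import random


def random_crop(image, crop_h, crop_w, rng):
    """Cut a crop_h x crop_w patch from a random position."""
    top = rng.randint(0, len(image) - crop_h)
    left = rng.randint(0, len(image[0]) - crop_w)
    return [row[left:left + crop_w] for row in image[top:top + crop_h]]


def horizontal_flip(image):
    """Mirror the image left to right."""
    return [row[::-1] for row in image]


def augment(image, crop_h, crop_w, rng):
    """Random crop followed by a horizontal flip with probability 0.5."""
    patch = random_crop(image, crop_h, crop_w, rng)
    if rng.random() < 0.5:
        patch = horizontal_flip(patch)
    return patch


rng = random.Random(0)  # fixed seed so the sketch is repeatable
image = [[r * 6 + c for c in range(6)] for r in range(6)]  # toy 6x6 image
samples = [augment(image, 4, 4, rng) for _ in range(5)]
```

Each call to `augment` yields a slightly different 4×4 view of the same source image, which is how a single labeled image can contribute many distinct training examples.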

Dropout is a regularization method for neural networks that helps avoid overfitting. It randomly deactivates some neurons in a layer during the training process.

Dropout forces the network to rely on different combinations of neurons in each iteration, discouraging reliance on specific features in the training data. Consequently, it helps avoid overfitting and increases the generalization ability of the model.

In the AlexNet architecture, dropout is applied in the first two fully connected layers. Both dense layers are prone to overfitting because they use a very high number of neurons (4096 each).

A dropout rate of 50% is used in AlexNet, which means that, on average, half of the neurons in each of these layers are randomly dropped during training. This high rate highlights the importance of preventing overfitting in these large layers.
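A minimal sketch of dropout on one 4096-unit layer is shown below. It uses the "inverted" variant common in modern frameworks, where surviving activations are scaled up during training; this is an assumption made for illustration, as the original AlexNet instead scaled the outputs at test time. The all-ones activations are placeholder values.

```python
import random


def dropout(activations, rate, rng, training=True):
    """Inverted dropout: zero each unit with probability `rate` during
    training and scale the survivors by 1 / (1 - rate)."""
    if not training or rate == 0.0:
        return list(activations)  # dropout is disabled at inference time
    keep = 1.0 - rate
    return [a / keep if rng.random() < keep else 0.0 for a in activations]


rng = random.Random(42)  # fixed seed so the sketch is repeatable
layer_output = [1.0] * 4096  # placeholder activations of one dense layer
dropped = dropout(layer_output, rate=0.5, rng=rng)

# Fraction of units that survived this training step.
active_fraction = sum(1 for a in dropped if a != 0.0) / len(dropped)
```

Roughly half of the 4096 units are zeroed on each call, and a different random subset is chosen every time, so the layer cannot depend on any single neuron being present.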

- Image Classification: AlexNet can be used to identify and categorize objects in images, such as animals, plants, vehicles, etc.

- Object Detection: We can use AlexNet to detect and identify various objects in an image, such as faces, pedestrians, and cars.

- Face Recognition: With the help of AlexNet, we can recognize and differentiate facial attributes of individuals, such as identity, expression, age, and gender.

- Scene Understanding: AlexNet can be used to analyze images and interpret their context and semantics, such as indoor/outdoor, day/night, and weather.

- Image Generation: AlexNet can also be used as a component of systems that generate realistic images, such as faces, landscapes, and artwork, from text descriptions, sketches, or other images.

AlexNet is a convolutional neural network that won the ImageNet challenge in 2012 and sparked a revolution in the field of deep learning and computer vision.

The AlexNet architecture consists of eight layers with learnable parameters. It brought together several techniques that were novel at the time, such as ReLU activation, dropout, data augmentation, and GPU parallelism.

AlexNet demonstrated that deep CNNs can achieve great results on large-scale image recognition tasks using purely supervised learning. It set a new standard for CNN models and inspired many subsequent research works in the field of deep learning and computer vision.

About The Author

Dr. Partha Majumder is a distinguished researcher specializing in deep learning, artificial intelligence, and AI-driven groundwater modeling. With a prolific track record, his work has been featured in numerous prestigious international journals and conferences. Detailed information about his research can be found on his ResearchGate profile. In addition to his academic achievements, Dr. Majumder is the founder of Paravision Lab, a pioneering startup at the forefront of AI innovation.

Read the original article here: How AlexNet Architecture Revolutionized Deep Learning