Deep learning has revolutionized the field of artificial intelligence, enabling breakthroughs in areas like computer vision, natural language processing, and more. However, training deep neural networks is not without its challenges. One significant issue that can impede the learning process is the vanishing gradient problem.

The vanishing gradient problem occurs when the gradients of the loss function with respect to the parameters (weights) become very small as they are propagated back through the layers of the network during training. This issue is particularly prevalent in deep networks with many layers. When the gradients are too small, the weights are updated very slowly, which can effectively halt the training process and prevent the network from learning.

Why Does the Vanishing Gradient Problem Occur?

To understand why the vanishing gradient problem occurs, we need to look at how gradients are computed during backpropagation. During the backward pass, gradients are calculated using the chain rule of calculus. For a deep neural network, the gradient of the loss function with respect to a weight in an earlier layer involves the product of many derivatives from subsequent layers. Mathematically, this can be expressed as:
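
One common way to write this product, assuming a chain of $n$ layers where $a_k$ denotes the output of layer $k$, $w_1$ is a weight in the first layer, and $\mathcal{L}$ is the loss (this notation is introduced here for illustration only):

$$
\frac{\partial \mathcal{L}}{\partial w_1}
= \frac{\partial \mathcal{L}}{\partial a_n}
\cdot \frac{\partial a_n}{\partial a_{n-1}}
\cdots
\frac{\partial a_2}{\partial a_1}
\cdot \frac{\partial a_1}{\partial w_1}
$$

Each factor $\partial a_k / \partial a_{k-1}$ includes the derivative of that layer's activation function, so the number of factors grows with the depth of the network.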

If these derivatives are small (less than 1), their product can decrease exponentially as the number of layers increases. Consequently, the gradients for the earlier layers can become vanishingly small, making it difficult for the network to learn effectively.

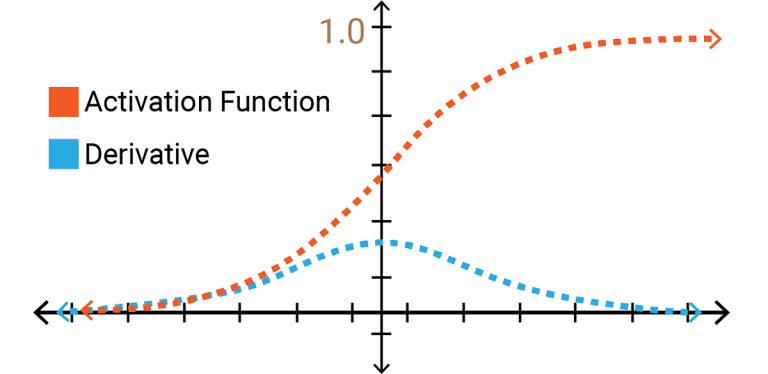

The vanishing gradient problem is especially pronounced when we use the sigmoid activation function.

During forward propagation we use the sigmoid activation function, whose value ranges from 0 to 1, as denoted by the pink line.

During backward propagation we use the derivative of the sigmoid activation function, whose value ranges from 0 to 0.25, as denoted by the blue line.

Because each derivative is at most 0.25, the product of these derivatives keeps getting smaller as the number of layers increases. In the end, the new and old weights are practically the same, so no meaningful weight update occurs and the accuracy of the model does not improve.
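
The shrinking product can be illustrated with a short Python sketch. It assumes the simplest possible case: every layer contributes exactly one sigmoid derivative, all evaluated at the same input, and the weights are ignored. The helper names `sigmoid_derivative` and `gradient_scale` are hypothetical and chosen only for this illustration.

```python
import math


def sigmoid(x):
    """Sigmoid activation: squashes any real input into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def sigmoid_derivative(x):
    """Derivative of the sigmoid; it peaks at 0.25 when x = 0."""
    s = sigmoid(x)
    return s * (1.0 - s)


def gradient_scale(num_layers, pre_activation=0.0):
    """Product of sigmoid derivatives across num_layers layers.

    Simplifying assumption: every layer sees the same pre-activation
    value and the weight terms of the chain rule are left out.
    """
    return sigmoid_derivative(pre_activation) ** num_layers


if __name__ == "__main__":
    # Even in the best case (derivative = 0.25), the factor shrinks fast.
    for depth in (1, 5, 10, 20):
        print(f"{depth:2d} layers -> gradient factor {gradient_scale(depth):.3e}")
```

Even with the derivative at its maximum of 0.25, ten layers already scale the gradient by less than one millionth; away from zero the derivative is smaller still, so the decay is faster.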

The primary consequence of the vanishing gradient problem is that it slows down or completely halts the training of deep neural networks. When the gradients are too small, the weights in the earlier layers receive negligible updates, which means these layers learn very slowly or not at all. This can lead to:

- Poor Convergence: The network may take an excessively long time to converge to a good solution, or it may never converge.

- Suboptimal Performance: The network may end up with a suboptimal set of weights, resulting in poor performance on the task at hand.

Several techniques have been developed to mitigate the vanishing gradient problem. These include architectural changes, activation function choices, and initialization strategies. Here are some of the most effective solutions:

1. ReLU Activation Function: The Rectified Linear Unit (ReLU) activation function has become the default choice for many deep learning models. Unlike traditional activation functions like the sigmoid and tanh, which squash their inputs to a small range (leading to small gradients), ReLU outputs the input directly if it is positive, or zero otherwise. Its derivative is exactly 1 for positive inputs, which helps maintain larger gradients during backpropagation.

2. Batch Normalization: Batch normalization normalizes the inputs of each layer to have a mean of zero and a variance of one. By reducing internal covariate shift, batch normalization helps maintain healthy gradient magnitudes and accelerates training.

3. Gradient Clipping: Gradient clipping involves setting a threshold value, and if the gradients exceed this threshold, they are scaled down. This technique is primarily used to address exploding gradients and does not enlarge small gradients. At most it helps indirectly, by preventing drastic updates that could push the network into regions where gradient magnitudes diminish further.
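
The three techniques above can be sketched in a few lines of plain Python. This is a minimal illustration under stated assumptions, not how any particular framework implements them: the function names are hypothetical, the batch normalization omits the learnable scale and shift parameters used in practice, and the clipping shown is the norm-based variant.

```python
import math


def relu_derivative(x):
    """Derivative of ReLU: 1 for positive inputs, 0 otherwise.

    The value at exactly x = 0 is set to 0 here by convention.
    """
    return 1.0 if x > 0 else 0.0


def batch_normalize(values, eps=1e-5):
    """Normalize a batch of scalars to roughly zero mean and unit variance.

    Simplification: no learnable scale/shift and no running statistics.
    """
    mean = sum(values) / len(values)
    variance = sum((v - mean) ** 2 for v in values) / len(values)
    return [(v - mean) / math.sqrt(variance + eps) for v in values]


def clip_by_norm(grads, max_norm):
    """Scale the gradient vector down if its L2 norm exceeds max_norm."""
    norm = math.sqrt(sum(g * g for g in grads))
    if norm <= max_norm:
        return list(grads)  # small gradients are left untouched
    scale = max_norm / norm
    return [g * scale for g in grads]


if __name__ == "__main__":
    # For positive inputs, ReLU passes the gradient through 20 layers unchanged.
    print(relu_derivative(2.0) ** 20)
    print(batch_normalize([1.0, 2.0, 3.0]))
    print(clip_by_norm([3.0, 4.0], max_norm=1.0))
```

Note that `clip_by_norm` returns small gradients unchanged, which is why clipping on its own cannot undo a gradient that has already vanished.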

The vanishing gradient problem is a significant challenge in training deep neural networks, and understanding its causes and solutions is essential for building effective models. Techniques such as using ReLU activation functions, He initialization, batch normalization, and residual networks can help mitigate this issue, enabling the successful training of deeper and more complex neural networks. By addressing the vanishing gradient problem, we can unlock the full potential of deep learning and continue to push the boundaries of artificial intelligence.