Introduction

The rise of Retrieval-Augmented Generation (RAG) and Knowledge Graphs has changed how we interact with complex datasets by offering a structured, interconnected representation of information. Knowledge Graphs, such as those used in Neo4j, facilitate the querying and visualization of relationships within data. However, translating natural language into structured query languages like Cypher remains a challenging task. This guide aims to bridge this gap by detailing the fine-tuning of the Phi-3 Medium model to generate Cypher queries from natural language inputs. By leveraging the compact yet powerful capabilities of the Phi-3 Medium model, even small-scale developers can efficiently convert text to Cypher queries, enhancing the accessibility and usability of Knowledge Graphs.

Learning Objectives

- Understand the importance of Cypher query generation from natural language for developer efficiency.

- Learn about Microsoft’s Phi 3 Medium and its role in transforming English queries into code.

- Explore Unsloth’s efficiency improvements and memory management for Large Language Models.

- Set up the environment for fine-tuning Phi 3 Medium with Unsloth efficiently.

- Prepare datasets compatible with Phi 3 Medium and Unsloth for effective fine-tuning.

- Master fine-tuning Phi 3 Medium with specific training arguments using SFTTrainer.

This article was published as a part of the Data Science Blogathon.

What is Phi 3 Medium?

The Phi family of Large Language Models was introduced by Microsoft to show that even small language models can perform well and may be on par with larger models. Microsoft has trained this family of small models on different types of datasets, making them good at different tasks including entity extraction, summarization, chatbots, roleplay, and more.

Microsoft released these models with the idea that their small size lets even small developers work with them and train them on their own datasets, opening up many different applications. Recently, Microsoft announced the third generation of the Phi family, called the Phi 3 series of Large Language Models.

In the Phi 3 series, the context length was brought from 4k tokens up to 128k tokens, allowing more context to fit in. The Phi 3 family comes in different sizes, starting from the smallest 3.8 billion parameter model called Phi 3 Mini, followed by Phi 3 Small, a 7B parameter model, and finally Phi 3 Medium, a 14 billion parameter model, which is the one we’ll train in this guide. All of these models have a long-context version extending the context length to 128k tokens.

What is Unsloth?

Developed by Daniel and Michael Han, Unsloth has emerged as one of the best optimized frameworks designed to improve the fine-tuning process for large language models (LLMs). Known for its speed and memory efficiency, Unsloth can increase training speeds by up to 30 times while reducing memory usage by 60%. These capabilities make it a good fit for developers aiming to fine-tune LLMs with accuracy and speed.

Unsloth supports different types of hardware configurations, from NVIDIA GPUs like the Tesla T4 and H100 to AMD and Intel GPUs. It also employs techniques like intelligent weight upcasting, which minimizes the need for weight upscaling during QLoRA, thereby optimizing memory use.

As an open-source tool under the Apache 2.0 license, Unsloth integrates seamlessly into the fine-tuning of prominent LLMs like Mistral 7B, Llama, and Gemma, achieving up to a 5x increase in fine-tuning speed while simultaneously reducing memory usage by 60%. Furthermore, it is compatible with techniques like Flash Attention 2, which speeds up not only inference but also the fine-tuning process.

Environment Creation

We’ll first create our environment. For this we’ll install Unsloth for Google Colab.

!pip install "unsloth[colab] @ git+https://github.com/unslothai/unsloth.git"

Then we’ll create some default Unsloth values for training. These are:

from unsloth import FastLanguageModel

import torch

sequence_length_maximum = 2048

weights_data_type = None

quantize_to_4bit = True

We start by importing the FastLanguageModel class from the Unsloth library. Then we define some variables to be used throughout the guide:

- sequence_length_maximum: The maximum sequence length that the model will handle. We give it a value of 2048.

- weights_data_type: Here we specify what data type the model weights should be. We gave it None, which auto-selects the data type.

- quantize_to_4bit: Here, we give it a value of True. This tells Unsloth to load the model in 4 bits, so that it can fit in the Colab GPU.
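To see why 4-bit loading matters here, a rough back-of-the-envelope estimate helps. The sketch below only counts the bytes needed to store the weights of a 14-billion-parameter model. It ignores activations, optimizer state, and quantization metadata, and the helper name `weight_memory_gb` is made up for illustration.

```python
def weight_memory_gb(num_params: int, bits_per_weight: int) -> float:
    """Approximate memory needed to hold the weights alone, in GB (10^9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


# 14B parameters, the size of Phi 3 Medium as stated in this guide.
PHI3_MEDIUM_PARAMS = 14_000_000_000

fp16_gb = weight_memory_gb(PHI3_MEDIUM_PARAMS, 16)  # 16-bit weights
int4_gb = weight_memory_gb(PHI3_MEDIUM_PARAMS, 4)   # 4-bit quantized weights

print(f"16-bit weights: ~{fp16_gb:.0f} GB")
print(f"4-bit weights:  ~{int4_gb:.0f} GB")
```

Under these simplifying assumptions, the 16-bit weights alone would exceed the roughly 16 GB of a Colab T4, while the 4-bit weights leave headroom for the adapters and activations.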

Downloading Model and Creating LoRA Adapters

Here, we’ll download the Phi 3 Medium model. We’ll do this with Unsloth’s FastLanguageModel class.

mannequin, tokenizer = FastLanguageModel.from_pretrained(

model_name = "unsloth/Phi-3-medium-4k-instruct",

max_seq_length = sequence_length_maximum,

dtype = weights_data_type,

load_in_4bit = quantize_to_4bit,

token = "YOUR_HF_TOKEN"

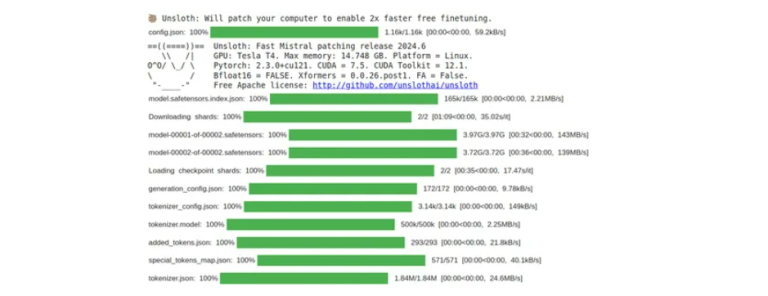

)After we run the code, the output generated will be seen within the pic above. Each the Phi 3 Medium mannequin and its tokenizer will likely be downloaded to the Colab atmosphere by fetching it from the HuggingFace Repository.

We can’t fine-tune the whole Phi 3 Medium model, so we train only a small number of weights. For this, we work with LoRA (Low-Rank Adaptation), which keeps the original weights frozen and trains only a small set of added low-rank parameters. We need to create a LoRA config and get the Parameter-Efficient Fine-Tuned model (PEFT model) from this LoRA config. The code for this will be:

model = FastLanguageModel.get_peft_model(
    model,
    r = 16,
    target_modules = ["q_proj", "k_proj", "down_proj", "v_proj", "o_proj",
                      "up_proj", "gate_proj"],
    lora_alpha = 16,
    bias = "none",
    lora_dropout = 0,
    random_state = 3407,
    use_gradient_checkpointing = True,
)

- Here “r” is the rank of the LoRA matrices. A higher rank means more parameters to train, and a lower rank means fewer. We set this to a value of 16.

- lora_alpha is the scaling factor applied to the LoRA update. It is often kept the same as the rank.

- Dropout randomly zeroes some of the values in the LoRA path during training. We have kept it at 0, which increases the training speed and has little impact on performance.

- We can have a bias parameter for the LoRA layers, but setting it to "none" further improves memory efficiency and decreases the training time.

After running this code, the LoRA adapters for Phi 3 Medium will be created. Now we can work with this PEFT model and fine-tune it with a dataset of our choice.
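To make the effect of the rank concrete, here is a small arithmetic sketch of how many trainable parameters LoRA adds to a single weight matrix. The layer size is a hypothetical value chosen only for illustration (it is not read from the model), and `lora_param_count` is a made-up helper name. The sketch assumes the standard LoRA formulation, where the update is the product of two small matrices scaled by alpha / r.

```python
def lora_param_count(d_in: int, d_out: int, rank: int) -> int:
    """Trainable parameters LoRA adds to one (d_out x d_in) weight matrix.

    LoRA learns two small matrices, A (rank x d_in) and B (d_out x rank),
    while the original weight stays frozen.
    """
    return rank * d_in + d_out * rank


# Hypothetical layer size, chosen only for illustration.
d_in, d_out = 5120, 5120
rank, lora_alpha = 16, 16  # the values used in this guide

full = d_in * d_out
lora = lora_param_count(d_in, d_out, rank)
scaling = lora_alpha / rank  # factor applied to the low-rank update B @ A

print(f"full matrix: {full:,} parameters")
print(f"LoRA update: {lora:,} parameters ({100 * lora / full:.3f}% of full)")
print(f"scaling factor alpha / r = {scaling}")
```

Doubling the rank doubles the number of trainable parameters, which is the trade-off the first bullet above describes.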

Preparing the Dataset for Fine-tuning

Here, we will be training the Phi 3 Medium Large Language Model with a dataset that allows the model to generate Cypher queries, which are needed for querying Knowledge Graph databases like Neo4j. For this, we’ll download the dataset provided in a GitHub repository. The command for this will be:

!wget https://raw.githubusercontent.com/neo4j-labs/text2cypher/main/datasets/synthetic_gpt4turbo_demodbs/text2cypher_gpt4turbo.csv

The above command will download a CSV file. This CSV file contains the dataset that we’ll be working with to train the Phi 3 Medium LLM. Before that, we need to do some preprocessing. We’re only taking a subset of the dataset. The code for this will be:

import pandas as pd

df = pd.read_csv('/content/text2cypher_gpt4turbo.csv')

# Keep only error-free rows from the recommendations database
df = df[(df['database'] == 'recommendations') &
        (df['syntax_error'] == False) & (df['timeout'] == False)]

# Keep the two columns we train on and rename them
df = df[['question','cypher']]
df = df.rename(columns={'question': 'input', 'cypher': 'output'})
df = df.reset_index(drop=True)

Here, we filter the data. We need the data coming from the recommendations database, and only those rows that have no syntax error and no timeout. This is necessary because we need Phi 3 to give us syntax-error-free Cypher queries when asked.

The dataset contains many columns, but only the question and the cypher columns are the ones we need. We also renamed these columns to input and output, where the question column is the input and the cypher column is the output that needs to be generated by the Large Language Model.

In the output, we can see the first five rows of the dataset. It contains only two columns, input and output. The database we’re working with for the training data has a schema.
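The filtering logic can be tried out without downloading anything. The following sketch applies the same three conditions to a tiny in-memory CSV using only the standard library. The sample rows are made up for illustration, and because the `csv` module returns every field as a string, the flags are compared against the text "False" rather than a boolean as in the pandas version.

```python
import csv
import io

# Tiny made-up sample that mimics the columns used in this guide.
sample_csv = """question,cypher,database,syntax_error,timeout
Which movies were released in 1995?,MATCH (m:Movie) WHERE m.year = 1995 RETURN m.title,recommendations,False,False
List all genres.,MATCH (g:Genre RETURN g.name,recommendations,True,False
Who follows whom?,MATCH (a)-[:FOLLOWS]->(b) RETURN a.name,twitter,False,False
"""

rows = []
for row in csv.DictReader(io.StringIO(sample_csv)):
    keep = (
        row["database"] == "recommendations"
        and row["syntax_error"] == "False"
        and row["timeout"] == "False"
    )
    if keep:
        # Keep only the two columns we train on, under their new names.
        rows.append({"input": row["question"], "output": row["cypher"]})

print(rows)
```

Only the first sample row survives: the second is flagged with a syntax error and the third belongs to a different database.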

Schema for this Database

graph_schema = """
Node properties:
- **Movie**
  - `url`: STRING Example: "https://themoviedb.org/movie/862"
  - `runtime`: INTEGER Min: 1, Max: 915
  - `revenue`: INTEGER Min: 1, Max: 2787965087
  - `budget`: INTEGER Min: 1, Max: 380000000
  - `imdbRating`: FLOAT Min: 1.6, Max: 9.6
  - `released`: STRING Example: "1995-11-22"
  - `countries`: LIST Min Size: 1, Max Size: 16
  - `languages`: LIST Min Size: 1, Max Size: 19
  - `imdbVotes`: INTEGER Min: 13, Max: 1626900
  - `imdbId`: STRING Example: "0114709"
  - `year`: INTEGER Min: 1902, Max: 2016
  - `poster`: STRING Example: "https://image.tmdb.org/t/p/w440_and_h660_face/uXDf"
  - `movieId`: STRING Example: "1"
  - `tmdbId`: STRING Example: "862"
  - `title`: STRING Example: "Toy Story"
- **Genre**
  - `name`: STRING Example: "Adventure"
- **User**
  - `userId`: STRING Example: "1"
  - `name`: STRING Example: "Omar Huffman"
- **Actor**
  - `url`: STRING Example: "https://themoviedb.org/person/1271225"
  - `bornIn`: STRING Example: "France"
  - `bio`: STRING Example: "From Wikipedia, the free encyclopedia Lillian Di"
  - `died`: DATE Example: "1954-01-01"
  - `born`: DATE Example: "1877-02-04"
  - `imdbId`: STRING Example: "2083046"
  - `name`: STRING Example: "François Lallement"
  - `poster`: STRING Example: "https://image.tmdb.org/t/p/w440_and_h660_face/6DCW"
  - `tmdbId`: STRING Example: "1271225"
- **Director**
  - `url`: STRING Example: "https://themoviedb.org/person/88953"
  - `bornIn`: STRING Example: "Burchard, Nebraska, USA"
  - `bio`: STRING Example: "Harold Lloyd has been called the cinema’s “first m"
  - `died`: DATE Min: 1930-08-26, Max: 2976-09-29
  - `born`: DATE Min: 1861-12-08, Max: 2018-05-01
  - `imdbId`: STRING Example: "0516001"
  - `name`: STRING Example: "Harold Lloyd"
  - `poster`: STRING Example: "https://image.tmdb.org/t/p/w440_and_h660_face/er4Z"
  - `tmdbId`: STRING Example: "88953"
- **Person**
  - `url`: STRING Example: "https://themoviedb.org/person/1271225"
  - `bornIn`: STRING Example: "France"
  - `bio`: STRING Example: "From Wikipedia, the free encyclopedia Lillian Di"
  - `died`: DATE Example: "1954-01-01"
  - `born`: DATE Example: "1877-02-04"
  - `imdbId`: STRING Example: "2083046"
  - `name`: STRING Example: "François Lallement"
  - `poster`: STRING Example: "https://image.tmdb.org/t/p/w440_and_h660_face/6DCW"
  - `tmdbId`: STRING Example: "1271225"
Relationship properties:
- **RATED**
  - `rating: FLOAT` Example: "2.0"
  - `timestamp: INTEGER` Example: "1260759108"
- **ACTED_IN**
  - `role: STRING` Example: "Officer of the Marines (uncredited)"
- **DIRECTED**
  - `role: STRING`
The relationships:
(:Movie)-[:IN_GENRE]->(:Genre)
(:User)-[:RATED]->(:Movie)
(:Actor)-[:ACTED_IN]->(:Movie)
(:Actor)-[:DIRECTED]->(:Movie)
(:Director)-[:DIRECTED]->(:Movie)
(:Director)-[:ACTED_IN]->(:Movie)
(:Person)-[:ACTED_IN]->(:Movie)
(:Person)-[:DIRECTED]->(:Movie)
"""

The schema contains all the node properties and the relationships between the nodes that are present in the recommendations graph database. Now, we’ll convert these to an instruction format, so the model outputs a Cypher query only when it has been instructed to do so. The function for this will be:

prompt = """Given is the instruction below, with an input
that provides further context.

### Instruction:
{}

### Input:
{}

### Response:
{}"""

token_eos = tokenizer.eos_token

def format_prompt(columns):
    # The same instruction (including the schema) is used for every row
    instructions = ("Use the below text to generate a cypher query. "
                    f"The schema is given below:\n{graph_schema}")
    inps = columns["input"]
    outs = columns["output"]
    text_list = []
    for inp, out in zip(inps, outs):
        # Fill the template and append EOS so the model learns where to stop
        text = prompt.format(instructions, inp, out) + token_eos
        text_list.append(text)
    return { "text" : text_list, }

- Here we first define our prompt template. In this template, we start with the instruction, followed by the input, and finally the output.

- Then we create a function called format_prompt(). It takes in the data and extracts the input and output columns from it.

- Then we iterate through each row in the input and output columns and fit them into the prompt template.

- Along with that, we also append the end-of-sequence token, stored in token_eos, to the prompt, which tells the model where generation should stop.

- We finally return the list containing all these prompts in a dictionary format.
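The steps above can be tried in isolation, without a tokenizer or the full schema. In this minimal sketch, `EOS` is a stand-in string for `tokenizer.eos_token`, `SCHEMA` is a trimmed-down placeholder, and `format_batch` is a hypothetical name for a function that mirrors the logic described in the bullets.

```python
PROMPT = """Given is the instruction below, with an input that provides further context.

### Instruction:
{}

### Input:
{}

### Response:
{}"""

EOS = "<eos>"  # stand-in; the real value comes from tokenizer.eos_token
SCHEMA = "(:Movie)-[:IN_GENRE]->(:Genre)"  # trimmed-down placeholder schema


def format_batch(columns: dict) -> dict:
    """Turn parallel 'input' / 'output' lists into a list of training prompts."""
    instruction = (
        "Use the below text to generate a cypher query. "
        f"The schema is given below:\n{SCHEMA}"
    )
    texts = [
        PROMPT.format(instruction, question, cypher) + EOS
        for question, cypher in zip(columns["input"], columns["output"])
    ]
    return {"text": texts}


batch = {
    "input": ["List all genres."],
    "output": ["MATCH (g:Genre) RETURN g.name"],
}
result = format_batch(batch)
print(result["text"][0])
```

Printing one formatted example like this before training is a cheap way to confirm that the three slots are filled in the intended order and that the text ends with the stop token.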

The format_prompt() function will be passed to our dataset to create the final column. The code for this will be:

from datasets import Dataset

dataset = Dataset.from_pandas(df)

dataset = dataset.map(format_prompt, batched = True)

- Here, we start by importing the Dataset class from the datasets library.

- Then we convert our dataset, which is of type DataFrame, to the Dataset type by calling the .from_pandas() method and passing it the DataFrame.

- Now, we map the function that we created over the dataset to build our final dataset for training.

Running the code will create a new column called “text”, which contains the prompts that we defined in the format_prompt() function. There are a total of 700+ rows of data in our dataset and three columns: text, input, and output. With this, we have our data ready for fine-tuning.
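With batched = True, the mapped function receives a dictionary of column lists rather than a single row, and the columns it returns are added to the dataset. The toy function below imitates that behaviour on plain Python dictionaries to show the data flow. It is only an illustration under that assumption, not how the datasets library is implemented, and `map_batched` and `add_text` are hypothetical names.

```python
def map_batched(columns: dict, fn, batch_size: int = 2) -> dict:
    """Toy imitation of a batched map: call fn on slices of the columns
    and collect the new columns it returns next to the originals."""
    n_rows = len(next(iter(columns.values())))
    out = {name: list(values) for name, values in columns.items()}
    for start in range(0, n_rows, batch_size):
        batch = {
            name: values[start:start + batch_size]
            for name, values in columns.items()
        }
        for name, values in fn(batch).items():
            out.setdefault(name, []).extend(values)
    return out


def add_text(batch: dict) -> dict:
    # Receives lists (one entry per row in the batch) and returns a new column.
    return {"text": [f"{q} -> {c}" for q, c in zip(batch["input"], batch["output"])]}


data = {"input": ["q1", "q2", "q3"], "output": ["c1", "c2", "c3"]}
mapped = map_batched(data, add_text)
print(list(mapped.keys()))
print(mapped["text"])
```

This is why format_prompt() loops over zipped lists and returns a dictionary whose value is a list with one prompt per row.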

Fine-tuning Phi 3 Medium for Text2Cypher Query

We are now ready to fine-tune Phi 3 Medium on the Cypher query dataset. In this section, we start by creating our Trainer and the corresponding TrainingArguments that we need to train our model on the dataset we have prepared. The code for this will be:

from trl import SFTTrainer

from transformers import TrainingArguments

trainer = SFTTrainer(
    model = model,
    tokenizer = tokenizer,
    train_dataset = dataset,
    dataset_text_field = "text",
    max_seq_length = sequence_length_maximum,
    dataset_num_proc = 2,
    packing = False,
    args = TrainingArguments(
        per_device_train_batch_size = 2,
        gradient_accumulation_steps = 4,
        warmup_steps = 5,
        num_train_epochs = 1,
        learning_rate = 2e-4,
        fp16 = True,
        logging_steps = 1,
        optim = "adamw_8bit",
        weight_decay = 0.02,
        lr_scheduler_type = "linear",
        output_dir = "outputs",
    ),
)

- We start by importing the SFTTrainer from the trl library, which we’ll work with to perform Supervised Fine-Tuning.

- We also import the TrainingArguments class from the transformers library to set the training config for training the model.

- Then we create an instance of SFTTrainer with various parameters and store it in the trainer variable.

- model = model: Specifies the pre-trained model to be fine-tuned.

- tokenizer = tokenizer: Specifies the tokenizer associated with the model.

- train_dataset = dataset: Sets the dataset that we have prepared for training the model.

- dataset_text_field = “text”: Indicates the field in the dataset that contains the text data.

- max_seq_length = sequence_length_maximum: Here, we provide the maximum sequence length for the model.

- dataset_num_proc = 2: Number of processes to use for preprocessing the dataset.

- packing = False: Disables packing of sequences. Enabling packing can speed up training when sequences are short.

While training a Large Language Model or any Deep Learning model, we must set many different hyperparameters, which together determine how well the model performs. These include the following.

Different Parameters

- We send two examples at a time to the GPU, so we select a batch size of two.

- We accumulate gradients over four steps before each weight update, so we set the gradient accumulation steps to 4.

- We have set the warmup steps to 5, so the learning rate ramps up to its full value over the first five steps.

- We want to run the training over the whole dataset once, so we set the number of epochs to one.

- We want to print the metrics after every step, so we set logging_steps to 1 to log training metrics such as the training loss at each step.

- The optimizer uses the gradients to update the weights so that the loss decreases. Here we go with the 8-bit AdamW optimizer (adamw_8bit).

- Weight decay keeps the weights from growing to extreme values. We gave it a decay value of 0.02.

- The learning rate scheduler changes the learning rate while training is in progress. Here we want it to change linearly, so we gave it the option called “linear”.
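The interaction between warmup steps and a linear scheduler can be sketched in a few lines. The function below assumes the common behaviour of a linear schedule with warmup: the rate rises linearly from zero during warmup and then decays linearly to zero by the last step. The function name and the total of 95 steps are assumptions made for illustration, so the exact values in a real run may differ.

```python
def linear_schedule_lr(step: int, peak_lr: float, warmup_steps: int, total_steps: int) -> float:
    """Learning rate at a given optimizer step: linear warmup, then linear decay to zero."""
    if step < warmup_steps:
        return peak_lr * step / max(1, warmup_steps)
    remaining = max(0, total_steps - step)
    return peak_lr * remaining / max(1, total_steps - warmup_steps)


PEAK_LR = 2e-4     # learning_rate from the training arguments
WARMUP_STEPS = 5   # warmup_steps from the training arguments
TOTAL_STEPS = 95   # assumed number of optimizer steps in one epoch

for step in (0, 1, 5, 50, 95):
    lr = linear_schedule_lr(step, PEAK_LR, WARMUP_STEPS, TOTAL_STEPS)
    print(f"step {step:>2}: lr = {lr:.2e}")
```

The rate is zero at step 0, reaches the configured 2e-4 once warmup ends, and is back at zero on the final step.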

We are now done defining our Trainer and the TrainingArguments for training our quantized Phi 3 Medium 14-billion-parameter Large Language Model. Running trainer.train() will start the training.

trainer_stats = trainer.train()

Running the above will start the training process. In Google Colab, working with the free T4 GPU, it takes around 1 hour and 40 minutes to go through 1 epoch of the training data. It took around 95 steps to complete one epoch. Finally, the training is completed.
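The number of optimizer steps follows from the dataset size and the batch settings. The sketch below shows the arithmetic, assuming a single GPU. The row count of 760 is a hypothetical value consistent with the "700+ rows" mentioned earlier, and `steps_per_epoch` is a made-up helper; the exact rounding of a partial final batch can differ between library versions.

```python
import math


def steps_per_epoch(num_rows: int, per_device_batch: int, grad_accum: int, num_devices: int = 1) -> int:
    """Approximate optimizer steps needed to see every row once."""
    examples_per_update = per_device_batch * grad_accum * num_devices
    return math.ceil(num_rows / examples_per_update)


NUM_ROWS = 760  # hypothetical row count, consistent with "700+ rows"

steps = steps_per_epoch(NUM_ROWS, per_device_batch=2, grad_accum=4)
print(f"examples per weight update: {2 * 4}")
print(f"optimizer steps per epoch:  {steps}")
```

With a batch size of 2 and 4 accumulation steps, each weight update sees 8 examples, which is why a dataset of this size needs only a few dozen updates per epoch.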

Generating Cypher Query with Phi 3 Medium

We have now finished training the model. Next, we’ll test the model to check how well it generates Cypher queries given a text.

FastLanguageModel.for_inference(model)

inputs = tokenizer(
[
    prompt.format(
        f"Convert text to cypher query based on this schema: \n{graph_schema}",
        "What are the top 5 movies with a runtime greater than 120 minutes",
        "",  # leave the response slot empty so the model fills it in
    )
], return_tensors = "pt").to("cuda")

outputs = model.generate(**inputs, max_new_tokens = 128)
print(tokenizer.decode(outputs[0], skip_special_tokens = True))

- We start by preparing the trained model for inference by passing it to the for_inference() method of the FastLanguageModel class.

- Then we call the tokenizer and give it the input prompt. We work with the same prompt template that we defined earlier and give it the question “What are the top 5 movies with a runtime greater than 120 minutes”.

- These tokens are then given to the model to produce the output tokens. We set the max new tokens to 128 and store the generated result in the outputs variable.

- Finally, we decode the output tokens and print them.

We can see the results of running this code in the picture above. The Cypher query generated by the model matches the ground-truth Cypher query. Let us test with some more examples to see the performance of the fine-tuned Phi 3 Medium for Cypher query generation.

inputs = tokenizer(
[
    prompt.format(
        f"Convert text to cypher query based on this schema: \n{graph_schema}",
        "Which 3 directors have the longest bios in the database?",
        "",
    )
], return_tensors = "pt").to("cuda")

outputs = model.generate(**inputs, max_new_tokens = 128)
print(tokenizer.decode(outputs[0], skip_special_tokens = True))

inputs = tokenizer(
[
    prompt.format(
        f"Convert text to cypher query based on this schema: \n{graph_schema}",
        "List the genres that have movies with an imdbRating less than 4.0.",
        "",
    )
], return_tensors = "pt").to("cuda")

outputs = model.generate(**inputs, max_new_tokens = 128)
print(tokenizer.decode(outputs[0], skip_special_tokens = True))

We can see that in both examples above, the fine-tuned Phi 3 Medium model has generated the correct Cypher query for the given question. In the first example, Phi 3 Medium did provide the correct answer but took a slightly different approach. With this, we can say that fine-tuning Phi 3 Medium on the Cypher dataset has made its Cypher query generation slightly more accurate.
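Because the generated tokens are decoded together with the prompt, the printed text in the examples above contains the instruction and schema followed by the query. A small helper along the following lines could isolate just the generated Cypher. It assumes the output follows the "### Response:" template used in this guide; `extract_response` is a hypothetical name, and the decoded string is a made-up stand-in rather than real model output.

```python
RESPONSE_MARKER = "### Response:"


def extract_response(decoded: str) -> str:
    """Return only the text generated after the last response marker.

    If the marker is missing, the whole string is returned unchanged
    (apart from surrounding whitespace).
    """
    _, _, tail = decoded.rpartition(RESPONSE_MARKER)
    return tail.strip()


# Made-up stand-in for the decoded model output.
decoded_example = (
    "Given is the instruction below...\n\n"
    "### Instruction:\nConvert text to cypher query...\n\n"
    "### Input:\nWhat are the top 5 movies with a runtime greater than 120 minutes\n\n"
    "### Response:\nMATCH (m:Movie) WHERE m.runtime > 120 RETURN m.title LIMIT 5"
)

print(extract_response(decoded_example))
```

In the inference snippets, this would be applied to the string returned by `tokenizer.decode(...)` before the query is sent to the database.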

Conclusion

This guide has detailed the fine-tuning process of the Phi 3 Medium model for generating Cypher queries from natural language inputs, aimed at enhancing accessibility to Knowledge Graphs like Neo4j. By leveraging tools like Unsloth for efficient model training and techniques such as LoRA adapters to reduce the number of trainable parameters, developers can effectively translate complex data questions into structured Cypher commands.

Key Takeaways

- The Phi 3 family of models developed by Microsoft allows small developers to train these models on their own datasets for different scenarios.

- Unsloth, a Python library, is a great tool for fine-tuning small language models, improving training speed and memory efficiency.

- Creating the environment involves installing the necessary libraries and configuring parameters like the sequence length and data type.

- LoRA is a method that lets us train only a small number of parameters instead of the whole Large Language Model, allowing us to train on consumer hardware.

- Text to Cypher query generation will allow developers to let Large Language Models access graph databases to provide more accurate responses.

Frequently Asked Questions

A. Phi-3 Medium is a compact and powerful LLM, making it suitable for developers with limited resources. Fine-tuning allows it to specialize in Cypher query generation, improving accuracy and efficiency.

A. Unsloth is a framework specifically designed to optimize the fine-tuning process for large language models. It offers significant speed and memory usage improvements compared to traditional methods.

A. The guide uses a dataset containing pairs of natural language questions and their corresponding Cypher queries. This dataset helps the model learn the relationship between text and the structured query language.

A. The guide outlines steps for setting up the training environment, downloading the pre-trained model, and preparing the dataset. It then details how to fine-tune the model using Unsloth and a specific training configuration.

A. Once trained, the model can be used to generate Cypher queries from text. The guide provides an example of how to structure the input and decode the generated query.

The media shown in this article is not owned by Analytics Vidhya and is used at the Author’s discretion.