The benefits of using MLOps software for data science have been discussed and acknowledged for years, no matter what type of AI/ML the engineers are working on. It has reached the point where it has gradually become a standard that frees data scientists from the burden of manually tracking their experiments, manually versioning their assets (datasets, models), maintaining the codebase for serving the models, and so on.

Usually, after recognizing the theoretical advantages of MLOps, an inexperienced data scientist who wants to use it in everyday work turns to small open-source tools that have wide community support and, on top of that, can be used in local environments or inside notebooks. Typically this is mainly for personal purposes.

One such MLOps tool that has been extremely popular for the last 5–6 years is MLflow. It goes beyond solving the previously mentioned pain points of data scientists' work by providing model evaluation, model packaging and model deployment as well. For example, one popular way of automatically making almost any model compatible with another MLOps service from AWS (SageMaker), which the data science teams at Intertec have exploited, is to build a SageMaker-compatible image, with an option to push it to ECR (Elastic Container Registry), and then deploy it on SageMaker by creating an endpoint.

Here is an example of our team converting a trained Xception CNN model for clothes classification, whose artifacts are stored in an S3 location, into a SageMaker-compatible model and deploying it with just a few lines of code:

# mlflow models build-docker --model-uri {S3_URI} --name "mlflow-sagemaker-xception:latest"

# mlflow sagemaker build-and-push-container --build --push -c mlflow-sagemaker-xception

mlflow deployments create --target sagemaker --name mlflow-sagemaker-xception --model-uri {S3_URI} -C region_name={AWS_REGION} -C image_url={ECR_URI} -C execution_role_arn={SAGEMAKER_ARN}
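The same deployment can also be driven from Python through MLflow's deployments client, which may be more convenient inside a notebook or a pipeline. The following is a minimal sketch rather than our production code: the helper names `sagemaker_deploy_config` and `deploy_to_sagemaker` are hypothetical, and the region, image URL and role ARN in the usage comment are placeholders. The three config keys mirror the `-C` options of the CLI call.

```python
def sagemaker_deploy_config(region_name, image_url, execution_role_arn):
    """Collect the three -C options of the CLI call into one config dict."""
    return {
        "region_name": region_name,
        "image_url": image_url,
        "execution_role_arn": execution_role_arn,
    }


def deploy_to_sagemaker(name, model_uri, config):
    """Create a SageMaker endpoint through MLflow's deployments client."""
    # Imported lazily so the config helper works without mlflow installed.
    from mlflow.deployments import get_deploy_client

    client = get_deploy_client("sagemaker")
    return client.create_deployment(name=name, model_uri=model_uri, config=config)


# Usage (all values are placeholders, not real resources):
# config = sagemaker_deploy_config(
#     "eu-west-1",
#     "123456789012.dkr.ecr.eu-west-1.amazonaws.com/mlflow-sagemaker-xception:latest",
#     "arn:aws:iam::123456789012:role/sagemaker-execution-role",
# )
# deploy_to_sagemaker("mlflow-sagemaker-xception", "s3://my-bucket/path/to/model", config)
```

Calling `deploy_to_sagemaker` requires `mlflow` and AWS credentials with permission to create SageMaker endpoints.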

If you are interested in more examples of MLflow usage for AWS, and specifically SageMaker, I encourage you to check out the 2nd edition of Julien Simon's book "Learn Amazon SageMaker".

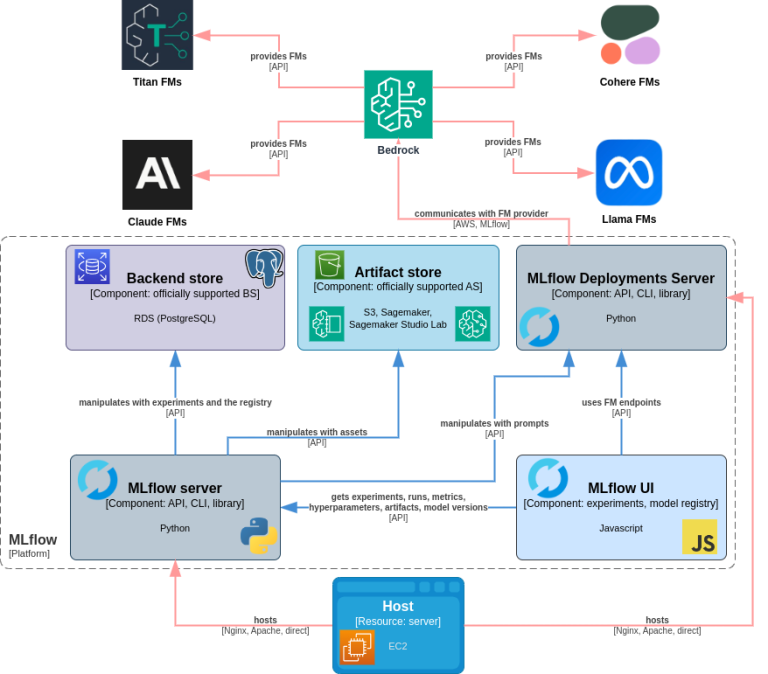

MLflow's compatibility extends to more services within the AWS ecosystem. Since MLflow is not just an ordinary library, but a server with a UI that connects to different storage systems for its artifact store and different databases for its backend store, there are several options to choose from. Yet data scientists usually start by running the server locally without customizing the setup, choosing the local or the notebook's file system for both the artifact store and the backend store. In some cases they configure MLflow to use a local database (SQLite, MySQL, MSSQL, PostgreSQL) as the backend store or MinIO as the artifact store.
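To make the local variant concrete, here is a small sketch of how such a personal setup is typically started, assuming a SQLite file as the backend store and a local folder as the artifact store. The helper names, the experiment name and the logged values are hypothetical and serve only as illustration.

```python
import shlex


def local_server_command(backend_store_uri="sqlite:///mlflow.db",
                         artifact_root="./mlruns",
                         host="127.0.0.1",
                         port=5000):
    """Build the argument list for a local `mlflow server` process."""
    return [
        "mlflow", "server",
        "--backend-store-uri", backend_store_uri,
        "--default-artifact-root", artifact_root,
        "--host", host,
        "--port", str(port),
    ]


def log_demo_run(tracking_uri="http://127.0.0.1:5000"):
    """Log one illustrative run against the local server (requires mlflow)."""
    import mlflow  # lazy import: only needed when a run is actually logged

    mlflow.set_tracking_uri(tracking_uri)
    mlflow.set_experiment("local-demo")  # hypothetical experiment name
    with mlflow.start_run():
        mlflow.log_param("learning_rate", 0.001)  # placeholder value
        mlflow.log_metric("val_accuracy", 0.9)    # placeholder value


print(shlex.join(local_server_command()))
# prints: mlflow server --backend-store-uri sqlite:///mlflow.db --default-artifact-root ./mlruns --host 127.0.0.1 --port 5000
```

The printed command can be pasted into a terminal, or the list can be passed to `subprocess.Popen` to start the server from a script.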

This setup works perfectly for them on a personal basis, but problems arise when they want to expose their progress or work within a team.

A team, or even a whole company that provides AI/ML services and consists of several teams, such as Intertec, cannot keep relying on personal MLOps usage by every data scientist for a prolonged period of time. For individuals who are members of different teams, the consequence is that assets are not reusable, experiments have to be shared manually, and certain permissions need to be set on every platform in order to access each other's progress details. As for data scientists within the same team, if there is no centralized MLOps setup, the efforts are dispersed and one team member cannot continue from where a colleague finished.

Since most of the data scientists at Intertec already had experience with MLflow, making them switch to another MLOps tool would have caused a long adjustment period. Therefore MLflow had to be kept as the MLOps platform of choice. In addition, the company's primary cloud provider is AWS, which offers several infrastructure options as services that can serve as the building blocks of a centralized MLflow platform. Even with AWS services that are not officially supported by MLflow a successful setup can be achieved, for example by implementing the backend store with the DynamoDB NoSQL database.

Some of Intertec's partners and clients whose infrastructure relies on AWS at some point recognized the necessity and benefits of having a centralized MLOps platform by following Intertec's example, although they came up with their own requirements regarding the services used for hosting MLflow. Intertec achieved a successful implementation in both the in-house and the clients' cases.

With a DevOps team experienced in AWS and solid knowledge of MLflow's functionality already in place, the cloud setup went smoothly. Over the maintenance period there were some minor challenges, such as needing to upgrade the backend store after the MLflow version was updated. AWS offers a variety of resources that are compatible with MLflow's setup requirements, but here are our recommendations:

- rely on a smaller instance to run the MLflow server: MLflow is lightweight and does not need a large-capacity instance to run;

- choose RDS (either MySQL or PostgreSQL) for the backend store: no matter how many experiments you log, you will rarely reach the amount of data for which you would need a faster database option. It simply does not pay off to do all the workarounds needed to use a NoSQL database instead, and on top of that you may have to intervene manually to make certain changes, for which SQL is more suitable;

- use S3 as the artifact store: even if under some circumstances you want to avoid having MLflow load the artifacts (i.e. assets) for you, you can still access them directly from S3.
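As an illustration of the last recommendation, the sketch below reads an artifact straight from S3, bypassing MLflow. It assumes you already know the artifact's `s3://` URI (for example from the run page in the MLflow UI); the helper names and the example URI are hypothetical.

```python
from urllib.parse import urlparse


def split_s3_uri(uri):
    """Split an s3://bucket/key URI into its (bucket, key) parts."""
    parsed = urlparse(uri)
    if parsed.scheme != "s3" or not parsed.netloc:
        raise ValueError(f"not an S3 URI: {uri!r}")
    return parsed.netloc, parsed.path.lstrip("/")


def download_artifact(uri, local_path):
    """Download a single artifact file directly from S3 (requires boto3)."""
    import boto3  # lazy import: only needed for the actual download

    bucket, key = split_s3_uri(uri)
    boto3.client("s3").download_file(bucket, key, local_path)


# Usage with a placeholder URI:
# download_artifact("s3://my-bucket/mlflow/1/abc123/artifacts/model/MLmodel", "MLmodel")
```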

With this in mind, Intertec's setup of MLflow on AWS for in-house projects and research is relatively simple, consisting of RDS (PostgreSQL) for the backend store, S3 for the artifact store and a small EC2 instance for the server itself.
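A setup of this shape boils down to two values passed to `mlflow server`: a database URI for the backend store and an S3 location for the artifact root. The sketch below shows one way to assemble them; it is an illustration under assumptions, not our exact configuration. The helper names, credentials, host and bucket are hypothetical, and the password is URL-encoded because special characters would otherwise break the URI.

```python
from urllib.parse import quote_plus


def postgres_backend_uri(user, password, host, database, port=5432):
    """Build a PostgreSQL backend store URI with URL-encoded credentials."""
    return (
        f"postgresql://{quote_plus(user)}:{quote_plus(password)}"
        f"@{host}:{port}/{database}"
    )


def aws_server_command(backend_uri, bucket, prefix="mlflow",
                       host="0.0.0.0", port=5000):
    """Build the `mlflow server` arguments for an RDS + S3 setup."""
    return [
        "mlflow", "server",
        "--backend-store-uri", backend_uri,
        "--default-artifact-root", f"s3://{bucket}/{prefix}",
        "--host", host,
        "--port", str(port),
    ]


# Placeholder values, not real resources:
uri = postgres_backend_uri("mlflow", "p@ss:word/1",
                           "mlflow-db.example.internal", "backendstore")
print(uri)
# prints: postgresql://mlflow:p%40ss%3Aword%2F1@mlflow-db.example.internal:5432/backendstore
```

The server host needs a PostgreSQL driver such as `psycopg2` and `boto3` installed alongside MLflow. Team members then point their clients at the shared server by setting the `MLFLOW_TRACKING_URI` environment variable or calling `mlflow.set_tracking_uri`.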

As a viable alternative, Intertec also prepared an MLflow setup on Kubernetes. Even though this setup is meant to be cloud-agnostic, it was deployed on EKS using MinIO as the artifact store and SQLite as the backend store, along with additional ML-related software such as Label Studio and JupyterHub, creating a more complete MLOps platform.

For our client, the MLflow setup was required to be part of the already dedicated ECS cluster for all the AI/ML-related services. Therefore the MLflow server was meant to be part of it, but only in the production AWS account, since MLflow already takes care of all the environments internally. As a consequence, cross-account resource access was enabled using IAM in order to make communication possible between the various services in other AWS accounts and the MLflow server. Access to the MLflow UI is achieved using an ALB with dynamic host port mapping for the ECS task, while the networking in the task definition itself is set to bridge mode.
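The networking part of this setup can be sketched as an ECS task definition built in Python. This is a simplified illustration, not the client's actual definition: the family name, container name, image and memory size are hypothetical. The two relevant details are `networkMode` set to `bridge` and `hostPort` set to `0`, which tells ECS to pick a free host port dynamically so that the ALB target group can route to it.

```python
def mlflow_task_definition(image, container_port=5000, memory_mib=1024):
    """Build a minimal ECS task definition using bridge networking."""
    return {
        "family": "mlflow-server",  # hypothetical family name
        "networkMode": "bridge",
        "containerDefinitions": [
            {
                "name": "mlflow",  # hypothetical container name
                "image": image,
                "memory": memory_mib,
                "essential": True,
                "portMappings": [
                    {
                        "containerPort": container_port,
                        # hostPort 0 requests a dynamically assigned host port
                        "hostPort": 0,
                        "protocol": "tcp",
                    }
                ],
            }
        ],
    }


def register_task_definition(task_definition):
    """Register the task definition with ECS (requires boto3 and credentials)."""
    import boto3  # lazy import: only needed for the real AWS call

    return boto3.client("ecs").register_task_definition(**task_definition)
```

With dynamic port mapping, several copies of the task can run on the same container instance without port conflicts, since each one receives its own host port.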

The other parts of the MLflow setup rely on S3 for the artifact store and RDS (MySQL) for the backend store. Automatic maintenance of the backend store is done within the MLflow Dockerfile itself.

FROM python:$PYTHON_BASE_IMAGE-slim

RUN pip install mlflow==$MLFLOW_VERSION

RUN pip install pymysql==$PYMYSQL_VERSION

RUN pip install boto3==$BOTO3_VERSION

EXPOSE $MLFLOW_INTERNAL_PORT

CMD mlflow db upgrade mysql+pymysql://$MLFLOW_RDS_MYSQL_USER:$MLFLOW_RDS_MYSQL_PASS@$MLFLOW_RDS_MYSQL_HOST:3306/backendstore && mlflow server --default-artifact-root s3://$MLFLOW_S3_BUCKET/mlflow --backend-store-uri mysql+pymysql://$MLFLOW_RDS_MYSQL_USER:$MLFLOW_RDS_MYSQL_PASS@$MLFLOW_RDS_MYSQL_HOST:3306/backendstore --host $MLFLOW_INTERNAL_HOST

Intertec has been working with the first setup of MLflow on AWS for over 5 years, and the client has been using the third setup successfully for almost 4. In this period the company neither experienced major problems nor had to put a lot of effort into maintaining MLflow on AWS. The teams benefited from increased operationalization, which indirectly decreased the time to market for several projects.

With the emergence of GenAI concepts and technology, MLOps software, engineers and cloud providers had to adjust and are still adapting. MLOps platforms are improving their GenAI capabilities, engineers are slowly upskilling towards prompt engineering, and cloud providers are releasing services through which they offer a variety of emerging foundation models.

MLflow first enabled support for logging prompts as traditional ML models, then soon released the Deployments Server as an addition for managing large GenAI models with a playground, and finally added integration with RAG frameworks/providers. In the meantime AWS released Bedrock, a service for handling foundation models that supports fine-tuning and RAG. As a GenAI and RAG provider, Bedrock is integrated with MLflow, a combination which makes it highly suitable for maintenance. Here is my working example of a Dockerfile for deploying the Deployments Server on EC2 together with the already existing MLflow server.

FROM python:$PYTHON_BASE_IMAGE-slim

RUN pip install mlflow[genai]==$MLFLOW_VERSION

EXPOSE $MLFLOW_INTERNAL_PORT

CMD mlflow deployments start-server --port $MLFLOW_INTERNAL_PORT --host $MLFLOW_INTERNAL_HOST --workers $NUMBER_OF_WORKERS
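Once the Deployments Server is running, applications can query it through MLflow's deployments client. The sketch below is only an illustration: it assumes that an endpoint named `chat` of chat type has been defined in the server's configuration, and the helper names, server URL and prompt are hypothetical.

```python
def chat_payload(prompt, max_tokens=256, temperature=0.0):
    """Build the request body for a chat-type endpoint."""
    return {
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
        "temperature": temperature,
    }


def ask(server_url, endpoint, prompt):
    """Send a prompt to an endpoint of the Deployments Server (requires mlflow)."""
    from mlflow.deployments import get_deploy_client  # lazy import

    client = get_deploy_client(server_url)
    return client.predict(endpoint=endpoint, inputs=chat_payload(prompt))


# Usage with placeholder values:
# answer = ask("http://mlflow-deployments.example.internal:5000", "chat",
#              "Summarize the onboarding document in two sentences.")
```

Keeping the payload construction separate from the network call makes it easy to unit-test prompts without a running server.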

Expecting that the Deployments Server and other new GenAI-related features will soon "leave" the experimental state on MLflow's side, and that Bedrock will expand its availability throughout AWS, Intertec has been preparing for their implementation and production usage. Since the company has already developed RAG applications over the past year, such as Confluence documentation search, a general product entity extractor, a tabular content metadata extractor and a company data onboarder, the new MLOps functionalities should be exploited. I personally expect even more use cases to come that will require not only proper development but also increased operationalization, which AWS can offer.