In both Statistics and Machine Learning, the Bias-Variance Tradeoff is a fundamental concept that describes the relationship between a model's complexity, the accuracy of its predictions, and how well it can make predictions on previously unseen data (data that was not used while training the model).

In Machine Learning, we divide any dataset into 2 parts:

- Training Data

- Testing Data

The data can be divided in any ratio, such as 70:30 or 80:20. By default, common Python tooling (for example, scikit-learn's `train_test_split`) uses a 75:25 split.

We use the training data to train our model, and the testing data to test it.
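To make the split concrete, here is a minimal sketch using only the Python standard library. The helper name `split_dataset`, the seed, and the 100-row stand-in dataset are assumptions made for illustration; in practice you would more likely reach for a library helper such as scikit-learn's `train_test_split`.

```python
import random

def split_dataset(records, test_ratio=0.25, seed=42):
    """Shuffle a copy of `records` and split it into (train, test) lists."""
    shuffled = list(records)               # copy so the caller's list is untouched
    random.Random(seed).shuffle(shuffled)  # seeded shuffle for reproducibility
    test_size = round(len(shuffled) * test_ratio)
    return shuffled[test_size:], shuffled[:test_size]

records = list(range(100))                 # hypothetical stand-in for 100 rows

train, test = split_dataset(records)                          # 75:25 split
train_80, test_20 = split_dataset(records, test_ratio=0.20)   # 80:20 split

print(len(train), len(test))
print(len(train_80), len(test_20))
```

Shuffling before splitting matters when the rows are stored in some order (for example, sorted by price), because otherwise the test set would not look like the training set.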

Before diving further, we need to understand the terms “Bias” and “Variance”.

In ML, when a model predicts wrong values, we call it a prediction error. These prediction errors are commonly known as “Bias” and “Variance”.

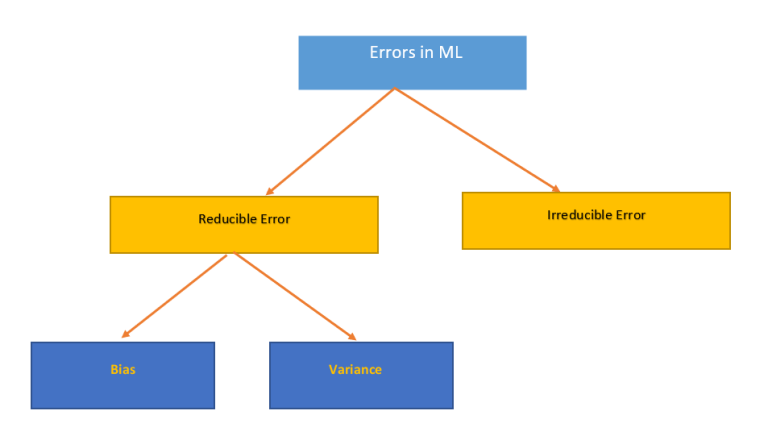

Error is a measure of how accurately an algorithm can make predictions on a previously unseen dataset. We have 2 types of error in ML: bias and variance.

Bias refers to the error, or difference, between the value predicted by the model and the actual value.

What Is Meant by High Bias

When a model has high bias, it means the model is too simplistic relative to the true underlying relationships in the dataset. This often results in the model consistently missing relevant relationships between the input features and the target variable.

Our goal is to reduce bias, which means reducing the difference between the actual value (y) and the predicted value (ŷ).

E.g.: Suppose we have a linear regression model which predicts housing prices based on the number of bedrooms only. This model has high bias because it is not considering other factors like location, amenities, size, etc., which may play an important role in housing prices. Therefore, the model's predictions may consistently underestimate or overestimate actual housing prices due to its simplicity.
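The bedrooms-only example can be sketched in a few lines of plain Python. Everything here is an assumption made for illustration: the toy data, the `location_score` feature, and the pricing rule used to build the data (40 × bedrooms + 30 × location score, in thousands). The point is only to show that a model that ignores a relevant feature is wrong even on the data it was trained on.

```python
# Hypothetical toy data: (bedrooms, location_score, price in thousands).
# Prices were built as 40 * bedrooms + 30 * location_score for illustration.
houses = [
    (1, 1, 70), (1, 5, 190), (2, 2, 140), (2, 4, 200),
    (3, 1, 150), (3, 5, 270), (4, 2, 220), (4, 4, 280),
]

def fit_line(xs, ys):
    """Ordinary least squares for y = intercept + slope * x."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope

bedrooms = [h[0] for h in houses]
prices = [h[2] for h in houses]

# The model only ever sees the number of bedrooms.
intercept, slope = fit_line(bedrooms, prices)

# Residual = actual - predicted, measured on the training data itself.
residuals = [p - (intercept + slope * b) for b, p in zip(bedrooms, prices)]
mae = sum(abs(r) for r in residuals) / len(residuals)

for (b, loc, p), r in zip(houses, residuals):
    print(f"bedrooms={b} location={loc} actual={p} residual={r:+.1f}")
print(f"training MAE: {mae:.1f}")
```

On this toy data the residuals are negative for every house with a low location score and positive for every house with a high one, so the model overestimates the first group and underestimates the second. That systematic miss is what high bias looks like.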

To summarize, bias measures how far the predictions of a model are from the actual values, and reflects the model's ability to capture the true relationship between the variables in the data.

Variance refers to how much the model changes when it is given a different portion of the training or testing dataset.
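One rough way to see this is to fit the same kind of model on several different portions of the data and check how much its prediction for one fixed input moves around. The sketch below assumes a synthetic dataset (y = 3x plus random noise), a simple straight-line model, and an arbitrary query point of x = 20; none of these come from a real housing dataset.

```python
import random
import statistics

rng = random.Random(0)

# Hypothetical noisy data: y = 3x + Gaussian noise.
data = [(x, 3 * x + rng.gauss(0, 2)) for x in range(1, 41)]

def fit_line(points):
    """Ordinary least squares for y = intercept + slope * x."""
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in points)
    sxx = sum((x - mean_x) ** 2 for x, _ in points)
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope

# Train on 5 different random portions and predict the same input each time.
predictions = []
for _ in range(5):
    subset = rng.sample(data, 10)
    intercept, slope = fit_line(subset)
    predictions.append(intercept + slope * 20)

# The spread of these predictions is a rough picture of the model's variance.
spread = statistics.pstdev(predictions)
print([round(p, 2) for p in predictions])
print(f"spread of predictions at x=20: {spread:.2f}")
```

A small spread means the model is stable across different portions of the data (low variance); a large spread means it depends heavily on which rows it happened to see (high variance).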

Now let's discuss the Bias-Variance Tradeoff:

Assume we have a housing prices dataset of 10,000 records, which we divide into a training dataset (80%) and a testing dataset (20%).

Example: I would pass the training data to the model for predictions. If it gives good accuracy, we call it “Low Bias”, and if it gives bad accuracy, it is called “High Bias”.

Similarly, once the model is developed, we pass the test data to the model for predictions. If it gives good accuracy, we call it “Low Variance”, and if it gives bad accuracy, it is called “High Variance”.

So, in summary, bias is judged on the training dataset and variance is judged on the testing dataset. Balancing bias and variance to get good accuracy on both is called the Bias-Variance Tradeoff.
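The rule of thumb above can be written as a tiny helper. This is only a sketch of the labeling described in this article: the function name `diagnose`, the 0.80 cut-off for "good accuracy", and the accuracy values passed in are all hypothetical, and what counts as good accuracy depends on your problem.

```python
def diagnose(train_accuracy, test_accuracy, threshold=0.80):
    """Label a model from its accuracy on the training and testing data.

    `threshold` is an assumed cut-off for "good accuracy"; pick one that
    makes sense for your own problem.
    """
    bias = "Low Bias" if train_accuracy >= threshold else "High Bias"
    variance = "Low Variance" if test_accuracy >= threshold else "High Variance"
    return bias, variance

# Hypothetical accuracy values, purely for illustration.
print(diagnose(0.95, 0.60))  # good on training data, bad on testing data
print(diagnose(0.55, 0.50))  # bad on both
print(diagnose(0.90, 0.88))  # good on both
```

A model that does well on the training data but badly on the testing data has usually memorized the training set (overfitting), while one that does badly on both is too simple (underfitting). The model we want is the one that does well on both.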

Assume we have 3 models as below, and passing the training and testing datasets through them gives the following results.

I hope you have enjoyed reading this article. Thank you.