Machine Learning (ML) projects are part of software engineering solutions, but they have unique characteristics compared to front-end or back-end projects. In terms of Quality Assurance (QA), ML projects have two main concerns: code style and unit testing. In this article, I'll show you how to apply QA successfully in your ML projects with Kedro.

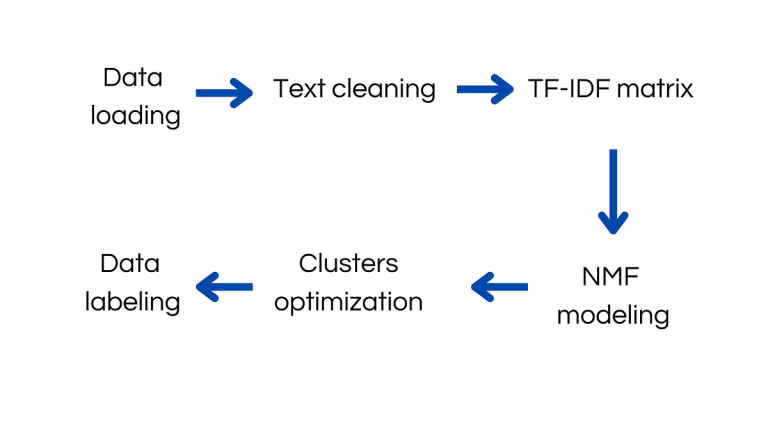

We will develop an unsupervised model to label texts. In some scenarios, we do not have enough time or money to label data manually. Hence, a possible solution is to use Main Topic Identification (MTI). I won't cover the details of this model; I'm assuming you have expertise in ML and want to take your projects to the next level. The data comes from a Kaggle repository that contains the titles and abstracts of research articles. The pipeline created in Kedro reads the data, cleans the text, creates a TF-IDF matrix, and models it using the Non-Negative Matrix Factorization (NMF) technique. MTI is performed on the titles and abstracts of the articles. A summary of this pipeline can be found below.

I remember my first day as a data scientist: the whole morning was spent on a project handover. This project was for the automotive industry and was developed in R. After a few weeks, the code failed, and the client asked us to improve it. At that point, guess what? When the lead data scientist and I reviewed the code, neither of us could understand what was going on. The previous data scientist hadn't followed code style practices, and everything was a complete mess. It was so hard to read and maintain that we decided to redo the project from scratch in Python.

As you just read, not following a code style makes projects extremely hard to maintain, and ML projects are no exception. So, how can you avoid this in your project? You might find various resources on the internet, but from my personal experience, the best approach in the ML context is to work with Kedro.

Kedro includes the "ruff" package. When you create a Kedro project, you can enable it by selecting the linting option. In this case, I will select options 1–5 and 7.

With ruff, you can quickly check and format your code style. To see which files need reformatting, run the following command in the root folder of your project:

```bash
ruff format --check
```

This tells you which files should be changed to follow the established code style. In my case, the files to be reformatted are nodes.py and pipeline.py.

To apply the formatting, run the following command, which automatically adjusts the code style of your ML project:

```bash
ruff format
```

For instance, here is a piece of code before reformatting:

```python
import pandas as pd
from sklearn.feature_extraction.text import TfidfVectorizer


def calculate_tf_idf_matrix(df: pd.DataFrame, col_target: str):
    """
    This function receives a DataFrame and a column name and returns the TF-IDF matrix.

    Args:
        df (pd.DataFrame): a DataFrame to be transformed
        col_target (str): the name of the column to use

    Returns:
        matrix: the TF-IDF matrix
        vectorizer: the vectorizer used to transform the matrix
    """
    vectorizer = TfidfVectorizer(max_df = 0.99, min_df = 0.005)
    X = vectorizer.fit_transform( df[col_target] )
    X = pd.DataFrame(X.toarray(),
                     columns = vectorizer.get_feature_names_out())
    return X, vectorizer
```

Immediately after running ruff format, the code is:

```python
import pandas as pd
from sklearn.feature_extraction.text import TfidfVectorizer


def calculate_tf_idf_matrix(df: pd.DataFrame, col_target: str):
    """
    This function receives a DataFrame and a column name and returns the TF-IDF matrix.

    Args:
        df (pd.DataFrame): a DataFrame to be transformed
        col_target (str): the name of the column to use

    Returns:
        matrix: the TF-IDF matrix
        vectorizer: the vectorizer used to transform the matrix
    """
    vectorizer = TfidfVectorizer(max_df=0.99, min_df=0.005)
    X = vectorizer.fit_transform(df[col_target])
    X = pd.DataFrame(X.toarray(), columns=vectorizer.get_feature_names_out())
    return X, vectorizer
```
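`ruff format` only handles layout; the linter, `ruff check`, reads its rules from `pyproject.toml`. Here is a minimal sketch of such a configuration, assuming a ruff version that supports the `[tool.ruff.lint]` table. The rule selection is an example, not necessarily what Kedro's project template generates.

```toml
[tool.ruff]
# Maximum line length used by both `ruff format` and `ruff check`
line-length = 88

[tool.ruff.lint]
# Example rule families: pycodestyle errors (E), Pyflakes (F),
# pycodestyle warnings (W) and import sorting (I)
select = ["E", "F", "W", "I"]
```

With this in place, `ruff check` reports rule violations and `ruff check --fix` applies the automatic fixes it can.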

Now you have no reason not to follow the best code style practices. Kedro and ruff will make your life easier. However, running extra commands by hand is not the most convenient part of the software development process. But don't worry, be happy! You can automate code style review with the "pre-commit" library.

"Pre-commit" runs automatic linting and formatting on your project every time you make a commit. To enable it, first install the pre-commit library by running:

```bash
pip install pre-commit
```

After that, add a new file called .pre-commit-config.yaml to the root folder of your project. In this file, you define the hooks. A hook is simply an instruction for ruff to execute, and hooks run sequentially in the order they are listed. You can find more information in the ruff-pre-commit repository. To make your life easier, below is a configuration that runs linting and code formatting on all your Python files, including Jupyter notebooks and scripts. You just need to set `rev` to your version of ruff.

```yaml
repos:
  - repo: https://github.com/astral-sh/ruff-pre-commit
    rev: v0.1.15
    hooks:
      - id: ruff
        types_or: [python, pyi, jupyter]
        args: [--fix]
      - id: ruff-format
        types_or: [python, pyi, jupyter]
```

After saving the file, run `pre-commit install` once in the project root so that git triggers these hooks on every commit. To show you the magic, I added a node without proper code style. This is how it looked before linting and formatting:

```python
node(inputs=["F_abstracts"],outputs="results_abstracts", name = "predictions_abstracts")
```

Now, I'll make a new commit that adds this additional step to the pipeline. Because the hooks modify the file, pre-commit stops that first commit attempt; after staging the reformatted file and committing again, this is the final result:

```python
node(
    inputs=["F_abstracts"],
    outputs="results_abstracts",
    name="predictions_abstracts",
)
```

As I mentioned before, ruff and pre-commit reduce the chance of making code style mistakes. Once you have configured this step in your Kedro project, everything will be easier.

Unit testing is used in programming to verify whether a piece of code behaves as expected. It can be applied at many stages of ML project development, such as data ingestion, feature engineering, and modeling. To highlight the importance of testing, I want to share a story about developing a model for client X. This client had not built ETLs to save the data periodically in a database following good data standards. Instead, the client downloaded the data from an external SaaS and then uploaded it into a bucket. What was the problem? Sometimes the client changed the configuration of how the data was exported. Many times, I received complaints from the Project Manager (PM) that my code had failed. However, the root of the problem was that the data changed in size and even in variable types. What a mess!

To be honest, I remember spending many hours locating where the problem was. As a junior data scientist, I didn't realize the importance of unit testing and how it could have saved me some headaches. Imagine how easy it would have been to tell my PM that the problem was at this point because the client changed feature A in the dataset. So, to make your life easier, I'll cover how to do this in Kedro and save you many future complaints as a data scientist.
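To make the idea concrete before the Kedro-specific setup, here is what a unit test for a single feature-engineering step can look like. It is a sketch: `clean_text` is a hypothetical cleaning node, not the function from the author's repository, and the test class follows pytest's naming conventions (a `Test…` class with `test…` methods).

```python
import re


def clean_text(text: str) -> str:
    """Hypothetical cleaning node: lowercase, drop non-letters, collapse whitespace."""
    text = text.lower()
    # Replace digits and punctuation with spaces (note: this also drops accented letters)
    text = re.sub(r"[^a-z\s]", " ", text)
    # Collapse runs of whitespace into single spaces and trim the ends
    return re.sub(r"\s+", " ", text).strip()


class TestCleanText:
    def test_removes_punctuation_and_case(self):
        assert clean_text("Deep Learning, for NLP!") == "deep learning for nlp"

    def test_empty_string_stays_empty(self):
        assert clean_text("") == ""
```

If a later change to `clean_text` stops stripping punctuation, this test fails and points at the exact step, instead of a confusing error further down the pipeline.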

How do you perform unit testing? Well, first of all, you need to make sure you selected this option when you created the Kedro project. Do you remember? I selected options 1 to 5 and then 7. Therefore, we can proceed.

To define tests, you need to create files inside the tests folder at the root of your project. Kedro uses pytest to run the unit tests. Inside your tests folder, the file names must start with "test_" (pytest also accepts names ending in "_test.py"); otherwise, pytest will not recognize them as unit tests. For example, you can see how I created two tests.

As I mentioned earlier, I want to create tests to check the data structure and types. To check the data structure, I will use the file "test_data_shape.py". Inside this file, I created a function with the "fixture" decorator, which makes the data it returns available to any test that requests it by name. After that, I created a class with a method that will run as a test. The class name must start with "Test" and the method name with "test". In my case, I want to ensure that the dataset has exactly 9 columns.

```python
import pytest
from pathlib import Path

from kedro.framework.startup import bootstrap_project
from kedro.framework.session import KedroSession

# This is needed to start the Kedro project (run pytest from the project root)
bootstrap_project(Path.cwd())


@pytest.fixture()
def data():
    # The Kedro session is opened inside a `with` block so it is closed after use
    with KedroSession.create() as session:
        context = session.load_context()
        df = context.catalog.load("train")
    return df


class TestDataQuality:
    def test_data_shape(self, data):
        df = data
        # The dataset is expected to have exactly 9 columns
        assert df.shape[1] == 9
```
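The test above covers the data structure; the data-type half of the check can follow the same pattern. Here is a minimal sketch, assuming pandas. The column names and expected types are hypothetical placeholders, and the small sample frame stands in for what the Kedro-catalog fixture shown above would load.

```python
from collections.abc import Callable

import pandas as pd
from pandas.api.types import is_integer_dtype, is_string_dtype

# Hypothetical schema: replace these columns and checks with the ones of your dataset
EXPECTED_TYPES: dict[str, Callable[[pd.Series], bool]] = {
    "title": is_string_dtype,
    "abstract": is_string_dtype,
    "year": is_integer_dtype,
}


def schema_mismatches(
    df: pd.DataFrame, expected: dict[str, Callable[[pd.Series], bool]]
) -> list[str]:
    """Describe every expected column that is missing or has an unexpected dtype."""
    problems = []
    for column, has_expected_type in expected.items():
        if column not in df.columns:
            problems.append(f"missing column: {column}")
        elif not has_expected_type(df[column]):
            problems.append(f"{column}: unexpected dtype {df[column].dtype}")
    return problems


class TestDataTypes:
    def test_types_match_schema(self):
        # Stand-in sample; in the Kedro project, request the catalog fixture instead
        df = pd.DataFrame(
            {"title": ["A title"], "abstract": ["An abstract"], "year": [2020]}
        )
        problems = schema_mismatches(df, EXPECTED_TYPES)
        assert problems == [], problems
```

Because the assertion message lists the offending columns, a failing run points straight at what changed in the exported data, for example a column that arrives as text instead of integers.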

To run the tests, you need to be in the root folder of your project. You can run them with the command:

```bash
pytest
```

This executes every test file you have created and prints a report. With coverage enabled through the pytest-cov plugin, the report also shows which parts of your code are covered by the tests and which are not.
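Plain pytest does not measure coverage by itself; that comes from the pytest-cov plugin. If your project is not already configured for it, here is a minimal sketch of the `pyproject.toml` settings, assuming pytest-cov is installed. `your_package` is a hypothetical placeholder for your package name under `src/`.

```toml
[tool.pytest.ini_options]
# Measure coverage of the package and list the line numbers no test executed
addopts = "--cov=src/your_package --cov-report=term-missing"
```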

Finally, that's everything. If you reached this point, you have learned how to improve the quality of your machine learning projects. I hope this reduces the headaches caused by bad software engineering practices.

Thank you very much for reading. For more information or questions, you can follow me on LinkedIn.

The code is available in the GitHub repository sebassaras02/qa_ml_project.