Naive Bayes is a family of simple but highly effective classification algorithms based on Bayes’ Theorem with the “naive” assumption that every pair of features is independent given the class. Despite its simplicity, Naive Bayes is known for its efficiency and performance in a variety of applications, especially text classification.

To understand Naive Bayes, we first need to understand a bit of probability.

What are independent events?

Two events are said to be independent if the occurrence of one event does not affect the probability of the occurrence of the other event. In other words, the outcome of one event has no influence on the outcome of the other.

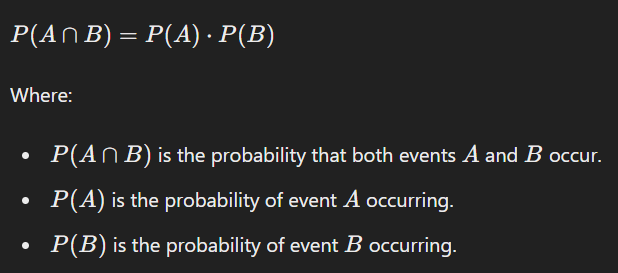

Events A and B are independent if and only if:

P(A ∩ B) = P(A) × P(B)

E.g. tossing a coin and rolling a die: the result of the coin toss does not change the probabilities of the die roll.
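As a quick check of the coin and die example, the minimal sketch below enumerates every equally likely (coin, die) outcome and compares the joint probability with the product of the individual probabilities. The choice of events (heads, rolling a six) and the helper name `probability` are illustrative assumptions.

```python
from fractions import Fraction
from itertools import product

# Sample space: every (coin, die) pair, assumed equally likely.
coin = ["H", "T"]
die = [1, 2, 3, 4, 5, 6]
sample_space = list(product(coin, die))


def probability(event):
    """Exact probability of an event, given as a predicate over outcomes."""
    favourable = sum(1 for outcome in sample_space if event(outcome))
    return Fraction(favourable, len(sample_space))


def is_heads(outcome):
    # Event A: the coin shows heads.
    return outcome[0] == "H"


def is_six(outcome):
    # Event B: the die shows a six.
    return outcome[1] == 6


p_a = probability(is_heads)
p_b = probability(is_six)
p_a_and_b = probability(lambda o: is_heads(o) and is_six(o))

print("P(A) =", p_a, " P(B) =", p_b, " P(A and B) =", p_a_and_b)
print("Independent:", p_a_and_b == p_a * p_b)
```

Using `Fraction` keeps the arithmetic exact, so the equality test is not affected by floating-point rounding.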

What are dependent events?

Two events are said to be dependent if the occurrence of one event affects the probability of the occurrence of the other event. In other words, the outcome of one event influences the outcome of the other.

Events A and B are dependent if:

P(A ∩ B) ≠ P(A) × P(B)

E.g. selecting marbles from a bag without replacement: suppose you have a bag with 3 red marbles and 2 blue marbles. If you draw one marble and do not replace it, the probability of drawing a red marble on the second draw depends on the outcome of the first draw. If a red marble is drawn first, the probability of drawing another red marble is 2/4.
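The marble example can be worked through with exact fractions. This is a small sketch, not a general-purpose routine; the variable names are illustrative. It shows that the chance of red on the second draw changes with the first draw, and that the joint probability of two reds differs from the product of the individual probabilities.

```python
from fractions import Fraction

red, blue = 3, 2
total = red + blue

# First draw.
p_red_first = Fraction(red, total)
p_blue_first = Fraction(blue, total)

# Second draw without replacement: one marble is already gone.
p_red_second_given_red_first = Fraction(red - 1, total - 1)   # 2/4
p_red_second_given_blue_first = Fraction(red, total - 1)      # 3/4

# Overall chance of red on the second draw (law of total probability).
p_red_second = (p_red_first * p_red_second_given_red_first
                + p_blue_first * p_red_second_given_blue_first)

# Joint probability of red on both draws versus the product of the marginals.
p_both_red = p_red_first * p_red_second_given_red_first
print("P(red, red) =", p_both_red)
print("P(red first) * P(red second) =", p_red_first * p_red_second)
print("Dependent:", p_both_red != p_red_first * p_red_second)
```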

What is conditional probability?

Conditional probability is a fundamental concept in probability theory that measures the likelihood of an event occurring given that another event has already occurred.
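Conditional probability is usually written P(A | B) and, when P(B) > 0, computed as P(A ∩ B) / P(B). The sketch below applies that definition to a single roll of a fair die; the two events (an even roll, a roll greater than 3) are assumptions chosen only for illustration.

```python
from fractions import Fraction

outcomes = {1, 2, 3, 4, 5, 6}              # one roll of a fair die
A = {o for o in outcomes if o % 2 == 0}    # event A: the roll is even
B = {o for o in outcomes if o > 3}         # event B: the roll is greater than 3


def prob(event):
    """Exact probability of an event, given as a set of outcomes."""
    return Fraction(len(event), len(outcomes))


# P(A | B) = P(A and B) / P(B), valid because P(B) > 0 here.
p_a_given_b = prob(A & B) / prob(B)

print("P(A) =", prob(A))
print("P(A | B) =", p_a_given_b)
```

Knowing that the roll is greater than 3 raises the chance of an even roll from 1/2 to 2/3, because two of the three remaining outcomes (4 and 6) are even.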

What is Bayes’ Theorem?

Bayes’ Theorem describes the probability of an event based on prior knowledge of conditions that might be related to the event.
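In symbols, Bayes’ Theorem states that P(A | B) = P(B | A) × P(A) / P(B). The sketch below applies it to a made-up spam filter: all three input probabilities are hypothetical numbers chosen for illustration, not measurements from any dataset.

```python
from fractions import Fraction

# Hypothetical inputs (assumptions for illustration only).
p_spam = Fraction(1, 5)               # prior: P(spam)
p_word_given_spam = Fraction(3, 5)    # P(word appears | spam)
p_word_given_ham = Fraction(1, 20)    # P(word appears | not spam)

p_ham = 1 - p_spam

# Evidence: P(word) via the law of total probability.
p_word = p_word_given_spam * p_spam + p_word_given_ham * p_ham

# Bayes' Theorem: P(spam | word) = P(word | spam) * P(spam) / P(word).
p_spam_given_word = p_word_given_spam * p_spam / p_word

print("P(word) =", p_word)
print("P(spam | word) =", p_spam_given_word)
```

With these assumed numbers, seeing the word moves the probability of spam from the prior of 1/5 to a posterior of 3/4.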

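In a real dataset, we want to predict the value of the dependent variable given that the independent variables have already been observed. Applying Bayes’ Theorem:

P(y | x1, x2, x3) = P(x1, x2, x3 | y) × P(y) / P(x1, x2, x3)

Here x1, x2, x3 are the independent variables (features) and y is the dependent variable (target). Under the naive assumption, the features are independent given y, so P(x1, x2, x3 | y) = P(x1 | y) × P(x2 | y) × P(x3 | y).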
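To make this concrete, here is a minimal from-scratch sketch of a Naive Bayes classifier for categorical features. It multiplies the class prior P(y) by each per-feature probability P(xi | y) and then normalises across classes. The toy weather data, the feature meanings, and the function name `posterior` are all hypothetical, and the sketch uses plain frequency counts with no smoothing, so it is not how a production library would handle unseen feature values.

```python
from collections import Counter, defaultdict
from fractions import Fraction

# Hypothetical toy data: features are (outlook, windy), the label is "play".
data = [
    (("sunny", "no"), "yes"),
    (("sunny", "yes"), "yes"),
    (("rainy", "no"), "yes"),
    (("sunny", "no"), "yes"),
    (("rainy", "yes"), "no"),
    (("rainy", "no"), "no"),
    (("sunny", "yes"), "no"),
    (("rainy", "yes"), "no"),
]

# Count how often each class occurs.
class_counts = Counter(label for _, label in data)

# feature_counts[(i, label)][value] = rows of class `label` whose
# i-th feature equals `value`.
feature_counts = defaultdict(Counter)
for features, label in data:
    for i, value in enumerate(features):
        feature_counts[(i, label)][value] += 1


def posterior(features):
    """Return P(y | features) for every class y under the naive assumption."""
    scores = {}
    for label, count in class_counts.items():
        score = Fraction(count, len(data))  # prior P(y)
        for i, value in enumerate(features):
            # Likelihood P(xi | y), estimated by relative frequency.
            score *= Fraction(feature_counts[(i, label)][value], count)
        scores[label] = score
    # The evidence is the sum of the unnormalised scores.
    # It would be zero, and this would fail, for a combination never seen in any class.
    evidence = sum(scores.values())
    return {label: score / evidence for label, score in scores.items()}


result = posterior(("sunny", "no"))
print(result)
print("Predicted class:", max(result, key=result.get))
```

For a sunny, non-windy day this toy model assigns probability 9/10 to "yes" and 1/10 to "no". For continuous measurements, each P(xi | y) is typically modelled with a distribution such as a Gaussian instead of a frequency table.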

Practical Implementation of Naive Bayes

Let’s use the Naive Bayes classifier to predict the species in the well-known Iris dataset, loaded through the seaborn library. The Iris dataset contains measurements of three different species of iris flowers (setosa, versicolor, and virginica).

# Step 1: Import necessary libraries

import seaborn as sns

import pandas as pd

from sklearn.model_selection import train_test_split

from sklearn.naive_bayes import GaussianNB

from sklearn.metrics import accuracy_score, confusion_matrix, ConfusionMatrixDisplay

import matplotlib.pyplot as plt

# Step 2: Load and explore the dataset

iris = sns.load_dataset('iris')

print(iris.head())

print(iris.describe())

print(iris['species'].value_counts())

# Step 3: Preprocess the data

X = iris.drop(columns='species')

y = iris['species']

# Split the data into training and testing sets

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, random_state=42)

# Step 4: Train the Naive Bayes classifier

model = GaussianNB()

model.fit(X_train, y_train)

# Step 5: Evaluate the model

y_pred = model.predict(X_test)

accuracy = accuracy_score(y_test, y_pred)

print(f'Accuracy: {accuracy * 100:.2f}%')

# Step 6: Visualize the results

conf_matrix = confusion_matrix(y_test, y_pred, labels=model.classes_)

disp = ConfusionMatrixDisplay(confusion_matrix=conf_matrix, display_labels=model.classes_)

disp.plot()

plt.title('Confusion Matrix')

plt.show()

Output