We have already covered all of these methods for regression on tabular cross-sectional data; now we simply apply them to image data.

1.b.1. AutoEncoders (AE) :

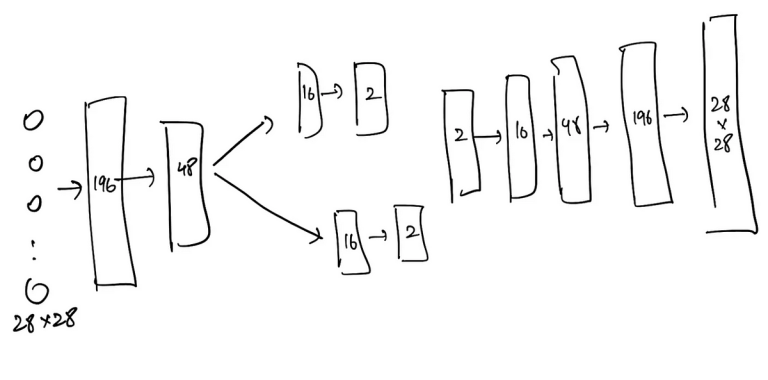

- AE with FFNN

- AE with CNN (works better for image data)

1.b.2. Variational AutoEncoders (VAE) :

- VAE with FFNN

- VAE with CNN (works better for images)
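Whichever encoder we pick (FFNN or CNN), the part that makes a VAE "variational" is the same: the encoder outputs a mean and a log-variance, we sample the latent with the reparameterization trick, and we add a KL penalty to the reconstruction loss. Below is a minimal, dependency-free sketch of those two pieces. The function names and the toy values for `mu` and `log_var` are assumptions for illustration; a real model would compute them with a deep learning framework.

```python
import math
import random


def reparameterize(mu, log_var, rng):
    """Sample z = mu + sigma * eps with eps ~ N(0, I) (reparameterization trick)."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0)
            for m, lv in zip(mu, log_var)]


def kl_to_standard_normal(mu, log_var):
    """KL(N(mu, diag(sigma^2)) || N(0, I)), summed over latent dimensions."""
    return -0.5 * sum(1.0 + lv - m * m - math.exp(lv)
                      for m, lv in zip(mu, log_var))


# Hypothetical encoder outputs for one image with a 2-dimensional latent.
rng = random.Random(0)
mu = [0.5, -1.0]
log_var = [0.0, -2.0]

z = reparameterize(mu, log_var, rng)      # latent sample fed to the decoder
kl = kl_to_standard_normal(mu, log_var)   # regularizer added to the reconstruction loss
```

The KL term is zero only when the encoder outputs exactly a standard normal (`mu = 0`, `log_var = 0`), which is what pulls the latent space toward the prior.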

1.b.3. Vector Quantized — Variational Autoencoders (VQ-VAE) :

- VQ-VAE with FFNN

- VQ-VAE with CNN (works better for images)
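The step that distinguishes a VQ-VAE from a plain autoencoder is the quantization of each encoder output to its nearest codebook vector. Here is a small sketch of that lookup only, in plain Python. The `quantize` helper and the toy codebook are assumptions for illustration; training details such as the straight-through gradient and the commitment loss are deliberately left out.

```python
def quantize(z, codebook):
    """Return (index, code) of the codebook vector closest to z in squared L2 distance."""
    def sq_dist(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))

    index = min(range(len(codebook)), key=lambda k: sq_dist(z, codebook[k]))
    return index, codebook[index]


# Toy codebook with three 2-dimensional codes (hypothetical values).
codebook = [[0.0, 0.0], [1.0, 1.0], [-1.0, 2.0]]

# One encoder output vector; with a CNN encoder there is one such vector per spatial position.
index, z_q = quantize([0.9, 1.2], codebook)
```

The decoder then receives `z_q` instead of the continuous encoder output, so every image is represented by a grid of discrete code indices.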

1.b.4. Generative Adversarial Networks (GANs) :

- GAN with FFNN

- GAN with CNN (works better for images)

- Latent GAN with FFNN

- Latent GAN with CNN (works better for images)
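For all of the GAN variants above, the two networks are trained against each other with a pair of losses. As a rough sketch, assuming the discriminator outputs a probability in (0, 1) and using the commonly used non-saturating generator loss, the per-sample losses could look like this. The function names and the small `eps` constant are assumptions for illustration.

```python
import math


def discriminator_loss(d_real, d_fake, eps=1e-12):
    """-[log D(x) + log(1 - D(G(z)))] for one real and one generated sample."""
    return -(math.log(d_real + eps) + math.log(1.0 - d_fake + eps))


def generator_loss(d_fake, eps=1e-12):
    """Non-saturating generator loss: -log D(G(z))."""
    return -math.log(d_fake + eps)


# d_real and d_fake are discriminator outputs (probabilities of "real").
loss_d = discriminator_loss(d_real=0.9, d_fake=0.2)
loss_g = generator_loss(d_fake=0.2)
```

The discriminator loss falls as it scores real images high and generated images low; the generator loss falls as the discriminator is fooled into scoring generated images high.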

1.b.5. VAE — GAN :

- VAE-GAN with FFNN

- VAE-GAN with CNN (works better for images)

- Latent VAE-GAN with FFNN

- Latent VAE-GAN with CNN (works better for images)

1.b.6. VQ — GAN :

- VQ-GAN with FFNN

- VQ-GAN with CNN (works better for images)

- Latent VQ-GAN with FFNN

- Latent VQ-GAN with CNN (works better for images)

1.b.7. Denoising Diffusion Probabilistic Models (DDPMs) :

- DDPM with FFNN

- DDPM with CNN (works better for images)

- Latent DDPM with FFNN

- Latent DDPM with CNN (works better for images)
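Independently of whether the denoiser is an FFNN or a CNN, a DDPM is trained on noised versions of the data produced by the forward process, which has the closed form x_t = sqrt(ᾱ_t) · x_0 + sqrt(1 − ᾱ_t) · ε. The sketch below shows that forward step with a linear beta schedule, treating an image as a flat list of pixel values. The helper names and the schedule endpoints (1e-4 to 0.02 over 1000 steps) are assumptions chosen for illustration.

```python
import math
import random


def linear_beta_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Linearly spaced noise variances beta_1 .. beta_T."""
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]


def cumulative_alphas(betas):
    """alpha_bar_t = product over s <= t of (1 - beta_s)."""
    alpha_bars, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars


def q_sample(x0, t, alpha_bars, rng):
    """Draw x_t ~ q(x_t | x_0) in one shot; also return the noise used."""
    a = alpha_bars[t]
    noise = [rng.gauss(0.0, 1.0) for _ in x0]
    x_t = [math.sqrt(a) * x + math.sqrt(1.0 - a) * e for x, e in zip(x0, noise)]
    return x_t, noise


rng = random.Random(0)
alpha_bars = cumulative_alphas(linear_beta_schedule(1000))
x0 = [0.2, -0.5, 0.9, 0.0]                 # toy "image" with four pixels
x_t, noise = q_sample(x0, 500, alpha_bars, rng)
```

During training, the network receives `x_t` and `t` and is asked to predict `noise`; the latent variants do the same thing on encoder outputs instead of raw pixels.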

1.b.8. Denoising Diffusion Implicit Models (DDIMs) :

- DDIM with FFNN

- DDIM with CNN (works better for images)

- Latent DDIM with FFNN

- Latent DDIM with CNN (works better for images)
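A DDIM can reuse a network trained as a DDPM and changes only the sampling rule. Below is a minimal sketch of the deterministic update (the eta = 0 case): first estimate x_0 from the predicted noise, then jump to an earlier, possibly non-adjacent, timestep. The function name `ddim_step` and the toy numbers are assumptions; in practice `eps_pred` would come from the trained denoiser.

```python
import math


def ddim_step(x_t, eps_pred, alpha_bar_t, alpha_bar_prev):
    """One deterministic DDIM update (eta = 0) from noise level t to an earlier level."""
    # Estimate the clean sample implied by the predicted noise.
    x0_pred = [(x - math.sqrt(1.0 - alpha_bar_t) * e) / math.sqrt(alpha_bar_t)
               for x, e in zip(x_t, eps_pred)]
    # Re-noise that estimate to the earlier level using the same predicted noise.
    return [math.sqrt(alpha_bar_prev) * x0 + math.sqrt(1.0 - alpha_bar_prev) * e
            for x0, e in zip(x0_pred, eps_pred)]


# Sanity check with a known noise vector: stepping to alpha_bar = 1 recovers x0.
x0 = [1.0, -2.0]
eps = [0.3, 0.7]
alpha_bar_t = 0.5
x_t = [math.sqrt(alpha_bar_t) * x + math.sqrt(1.0 - alpha_bar_t) * e
       for x, e in zip(x0, eps)]
x_prev = ddim_step(x_t, eps, alpha_bar_t, alpha_bar_prev=1.0)
```

Because no fresh noise is injected, the same starting noise always yields the same image, and far fewer steps than the training schedule are typically needed.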