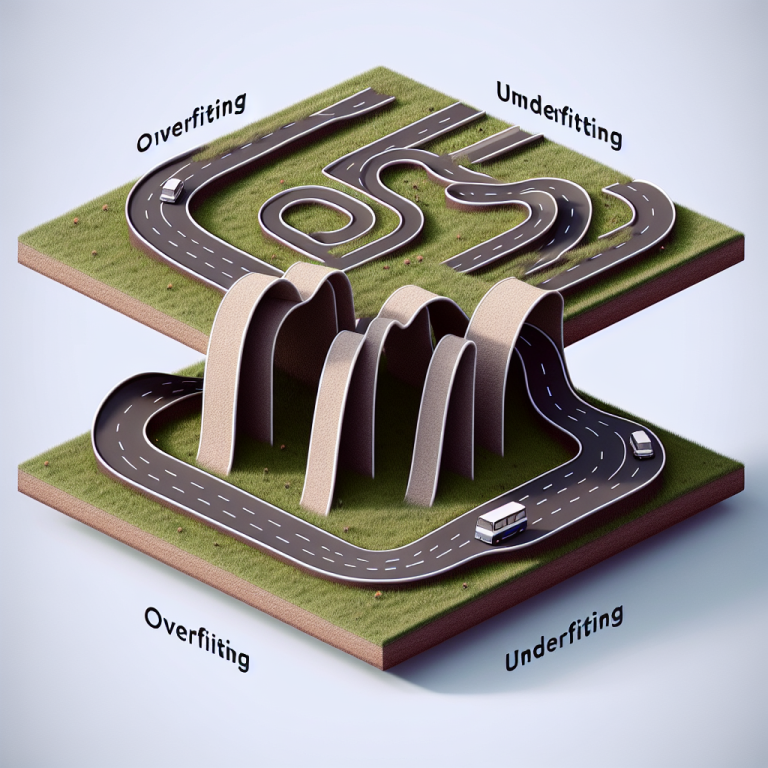

Before getting into the bias-variance tradeoff, let me clarify overfitting and underfitting, two problems that can hurt the performance of machine learning models.

Overfitting occurs when a machine learning model fits its training dataset so closely that it learns not only the underlying patterns but also the noise and random fluctuations present in the training data. This results in a model that performs well on the training data but poorly on unseen test data.

Causes of Overfitting:

- Complex Models: Using models that are too complex for the amount of training data.

- Too Many Features: Including too many irrelevant features.

- Insufficient Training Data: Not having enough data to capture the underlying trends.

Symptoms of Overfitting:

- High accuracy on the training set.

- Low accuracy on the validation or test set.
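
These symptoms can be reproduced on a toy regression problem. The sketch below is only an illustration under assumed conditions: the sine-shaped target, the noise level, and the degree-9 polynomial are all my choices, and mean squared error stands in for accuracy (lower is better).

```python
import numpy as np
from numpy.polynomial import Polynomial

rng = np.random.default_rng(0)

def true_fn(x):
    # Assumed ground-truth signal, chosen only for this illustration
    return np.sin(2 * np.pi * x)

# A small noisy training set and a larger, independent test set
x_train = np.linspace(0.0, 1.0, 10)
y_train = true_fn(x_train) + rng.normal(0.0, 0.2, x_train.size)
x_test = rng.uniform(0.0, 1.0, 200)
y_test = true_fn(x_test) + rng.normal(0.0, 0.2, x_test.size)

# Degree 9 with 10 points: enough freedom to pass through every
# training point, noise included
model = Polynomial.fit(x_train, y_train, deg=9)

train_mse = float(np.mean((model(x_train) - y_train) ** 2))
test_mse = float(np.mean((model(x_test) - y_test) ** 2))
print(f"train MSE: {train_mse:.6f}")
print(f"test MSE:  {test_mse:.6f}")
```

The training error is essentially zero because the polynomial memorizes the ten points, while the error on fresh data is much larger. That gap between the two numbers is the overfitting signature.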

Underfitting occurs when a machine learning model is too simple to capture the underlying structure of the data. This results in a model that performs poorly on both the training data and unseen test data.

Causes of Underfitting:

- Overly Simple Models: Using models that are too simple to capture the complexity of the data.

- Too Few Features: The model is not given enough relevant information, so it may miss signals that are necessary to make accurate predictions.

Symptoms of Underfitting:

- Low accuracy on both the training set and the validation or test set.
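
A minimal sketch of this symptom, again under assumed conditions (a sine-shaped target and a straight-line model, both chosen by me for illustration): the line cannot bend, so the error stays well above the noise level on the training data and the test data alike.

```python
import numpy as np

rng = np.random.default_rng(1)

def true_fn(x):
    # Assumed non-linear ground truth, chosen only for this illustration
    return np.sin(2 * np.pi * x)

noise_std = 0.1
x_train = rng.uniform(0.0, 1.0, 200)
y_train = true_fn(x_train) + rng.normal(0.0, noise_std, x_train.size)
x_test = rng.uniform(0.0, 1.0, 200)
y_test = true_fn(x_test) + rng.normal(0.0, noise_std, x_test.size)

# Fit a straight line; np.polyfit returns the highest power first
slope, intercept = np.polyfit(x_train, y_train, deg=1)

def predict(x):
    return slope * x + intercept

train_mse = float(np.mean((predict(x_train) - y_train) ** 2))
test_mse = float(np.mean((predict(x_test) - y_test) ** 2))
noise_floor = noise_std ** 2  # the best MSE any model could hope for here

print(f"noise floor: {noise_floor:.3f}")
print(f"train MSE:   {train_mse:.3f}")
print(f"test MSE:    {test_mse:.3f}")
```

Unlike the overfitting case, there is no large gap between the two errors. Both are simply bad, which is why adding more data alone rarely fixes underfitting.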

There are two types of errors that affect the performance of predictive models:

Bias

It refers to the error that occurs when an overly simple model misses important patterns during training (underfitting).

- High Bias: The model is too simple and unable to capture the complexity of the data (e.g., linear regression on non-linear data).

- Low Bias: The model can accurately capture the relationship between the input features and the target variable in the training data.

**High bias results in an underfitting problem in machine learning models.**

Variance

It refers to the error that occurs when a machine learning model learns the patterns of one particular training dataset very closely, noise included, and then fails to perform on unseen test data. A model with high variance would change noticeably if it were trained on a different sample of the same data.

- High Variance: The model is too complex, capturing noise along with the underlying patterns (e.g., a deep neural network with insufficient training data).

- Low Variance: The model is less sensitive to the noise in the training data and generalizes well to new data.

**High variance results in an overfitting problem in machine learning models.**

Definition of Bias-Variance tradeoff

The bias-variance tradeoff describes the balance between bias and variance errors in predictive models, and is usually illustrated as a plot of error against model complexity. As we increase model complexity, bias (and with it the training error) starts decreasing, while variance (and with it the gap between training and testing error) starts increasing. To minimize the total error, a model should have an optimal complexity that strikes a good balance between bias and variance.
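
One standard way to make this balance precise is the decomposition of expected squared error. The notation here is my own assumption and not taken from the text above: the data follow $y = f(x) + \varepsilon$ with zero-mean noise of variance $\sigma^2$, $\hat{f}$ is the model fitted on a random training set, and the expectation is over training sets and noise.

$$
\mathbb{E}\big[(y - \hat{f}(x))^2\big]
= \underbrace{\big(\mathbb{E}[\hat{f}(x)] - f(x)\big)^2}_{\text{bias}^2}
+ \underbrace{\mathbb{E}\big[(\hat{f}(x) - \mathbb{E}[\hat{f}(x)])^2\big]}_{\text{variance}}
+ \underbrace{\sigma^2}_{\text{irreducible noise}}
$$

Under these assumptions, the first two terms are the ones model complexity can trade against each other, while the noise term sets a floor that no model can go below.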

Higher model complexity leads to low bias and high variance, causing overfitting, while low model complexity leads to high bias and low variance, causing underfitting. A model with optimal complexity keeps both bias and variance reasonably low.
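
The trend can be checked empirically by refitting the same model on many noisy training sets and measuring how far the average prediction is from the truth (bias) and how much individual predictions scatter around that average (variance). This is a rough sketch under assumptions of my own: a sine target, fixed training inputs with only the noise redrawn, and polynomial degree as the stand-in for model complexity.

```python
import numpy as np
from numpy.polynomial import Polynomial

rng = np.random.default_rng(42)

def true_fn(x):
    # Assumed ground-truth signal, chosen only for this illustration
    return np.sin(2 * np.pi * x)

noise_std = 0.3
x_train = np.linspace(0.0, 1.0, 20)  # fixed inputs; only the noise is redrawn
x_eval = np.linspace(0.0, 1.0, 50)   # points where predictions are compared
n_trials = 200

def bias_variance(degree):
    """Estimate average squared bias and variance of a polynomial fit."""
    preds = np.empty((n_trials, x_eval.size))
    for t in range(n_trials):
        y_train = true_fn(x_train) + rng.normal(0.0, noise_std, x_train.size)
        preds[t] = Polynomial.fit(x_train, y_train, deg=degree)(x_eval)
    mean_pred = preds.mean(axis=0)
    bias_sq = float(np.mean((mean_pred - true_fn(x_eval)) ** 2))
    variance = float(np.mean(preds.var(axis=0)))
    return bias_sq, variance

results = {degree: bias_variance(degree) for degree in (1, 3, 9)}
for degree, (bias_sq, variance) in results.items():
    print(f"degree {degree}: bias^2 = {bias_sq:.4f}, variance = {variance:.4f}")
```

In this setup the straight line (degree 1) shows the largest squared bias and the smallest variance, and the degree-9 polynomial shows the opposite. The exact numbers depend on the assumed noise level and sample size.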

Solutions to Overfitting:

- Simplify the Model: Use fewer parameters or a less complex model.

- Regularization: Techniques like L1 or L2 regularization add a penalty for larger coefficients, helping to prevent overfitting.

- Cross-Validation: Use cross-validation to check that the model generalizes well.

- Pruning (for decision trees): Remove parts of the tree that contribute little predictive power.

- Data Augmentation: Increase the size of the training set by adding modified copies of existing data.

- Dropout (for neural networks): Randomly drop units during training to prevent co-adaptation.
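
To make the regularization item concrete, here is a minimal sketch of L2 (ridge) regularization using its closed-form solution. The synthetic data, the helper name `ridge_fit`, and the two penalty strengths are hypothetical choices for illustration, not a recommended configuration.

```python
import numpy as np

rng = np.random.default_rng(0)

# Assumed synthetic setup: 30 samples, 10 features, only the first two matter
n_samples, n_features = 30, 10
X = rng.normal(size=(n_samples, n_features))
true_w = np.zeros(n_features)
true_w[:2] = [3.0, -2.0]
y = X @ true_w + rng.normal(0.0, 0.5, n_samples)

def ridge_fit(X, y, lam):
    """Closed-form L2-regularized least squares: (X^T X + lam * I)^-1 X^T y."""
    d = X.shape[1]
    return np.linalg.solve(X.T @ X + lam * np.eye(d), X.T @ y)

w_weak = ridge_fit(X, y, lam=0.01)     # almost plain least squares
w_strong = ridge_fit(X, y, lam=100.0)  # heavy penalty on large coefficients

print("coefficient norm, weak penalty:  ", np.linalg.norm(w_weak))
print("coefficient norm, strong penalty:", np.linalg.norm(w_strong))
```

A larger penalty pulls the coefficients toward zero, which limits how much the model can bend to fit noise. In practice the penalty strength is usually picked with cross-validation rather than fixed by hand.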

Solutions to Underfitting:

- Increase Model Complexity: Use more complex models that can capture the underlying patterns.

- Feature Engineering: Add more relevant features to the model.

- Reduce Regularization: Reduce the strength of regularization techniques.

- Increase Training Time: Allow the model to train for a longer period.

- Use Ensemble Methods: Combine multiple models to improve performance.
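
As an illustration of the feature engineering item, the sketch below fits the same linear least-squares model twice: once on the raw input and once with a squared feature added. The quadratic target and the helper name `fit_mse` are assumptions made for this example.

```python
import numpy as np

rng = np.random.default_rng(0)

# Assumed data: the target depends on x quadratically
x = rng.uniform(-3.0, 3.0, 100)
y = 0.5 * x**2 + x + 2.0 + rng.normal(0.0, 0.5, x.size)

def fit_mse(features, y):
    """Least-squares fit; returns the mean squared error on the same data."""
    w, *_ = np.linalg.lstsq(features, y, rcond=None)
    return float(np.mean((features @ w - y) ** 2))

ones = np.ones_like(x)
linear_features = np.column_stack([ones, x])             # intercept + x
engineered_features = np.column_stack([ones, x, x**2])   # adds x squared

mse_linear = fit_mse(linear_features, y)
mse_engineered = fit_mse(engineered_features, y)

print(f"MSE with x only:     {mse_linear:.3f}")
print(f"MSE with x and x**2: {mse_engineered:.3f}")
```

The model class is unchanged (still linear in its weights), but the extra feature gives it the information it was missing, so the training error drops sharply.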