Imagine this: you’re training your dog to fetch. You patiently reward it with treats for successfully retrieving the ball, gradually shaping its behavior. Now, imagine a computer program learning a complex task in a similar way: not through explicit instructions, but through trial and error, just like your furry friend! That’s the fascinating world of reinforcement learning (RL), a branch of Artificial Intelligence (AI) where machines become masters of their own destiny (sort of).

Beyond Treats and Belly Rubs: The Mechanics of Reinforcement Learning

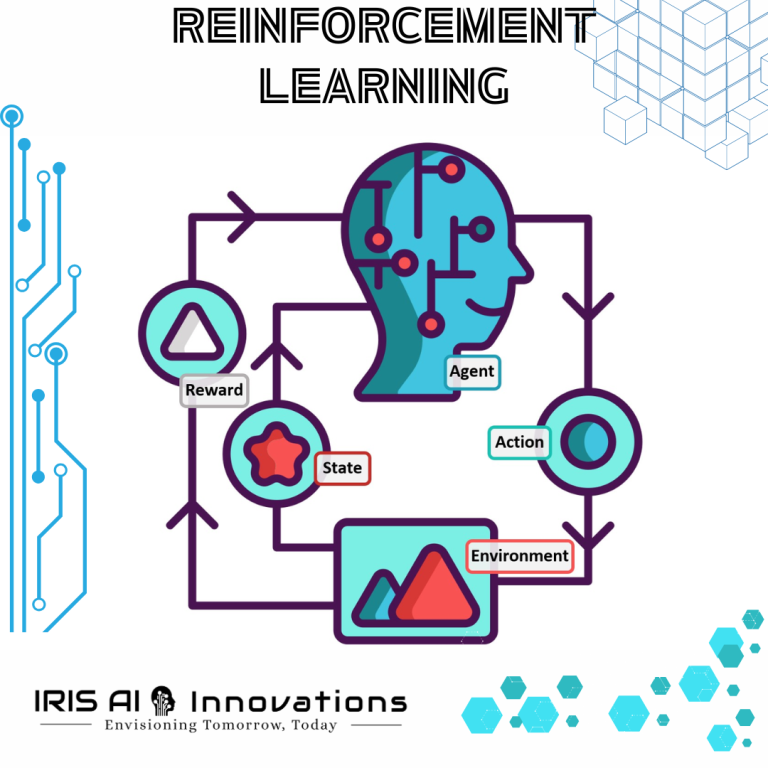

Unlike supervised learning, where data is neatly labeled like flashcards (“cat” for a picture of a feline, “dog” for a canine), RL drops an agent (the learning program) into an environment. This environment could be a simulated maze, a virtual game world, or even the real world for a robot. The agent interacts with this environment, taking actions and receiving rewards (positive outcomes) or penalties (negative outcomes) in return.
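For the curious, here’s roughly what that agent-environment loop looks like in code. This is a minimal Python sketch under our own assumptions: `CorridorEnv` is a hypothetical toy environment (a five-cell hallway with the goal at the far end), and the `reset`/`step` method names only loosely echo common RL toolkits; they are not any particular library’s API.

```python
import random


class CorridorEnv:
    """Hypothetical toy environment: a 1-D corridor of cells 0..length-1.
    The agent starts at cell 0; reaching the last cell ends the episode."""

    def __init__(self, length=5):
        self.length = length
        self.position = 0

    def reset(self):
        # Start a new episode at the left end of the corridor
        self.position = 0
        return self.position

    def step(self, action):
        # action 0 = move left, action 1 = move right (walls stop the agent)
        move = 1 if action == 1 else -1
        self.position = max(0, min(self.length - 1, self.position + move))
        done = self.position == self.length - 1
        # Reward: +1.0 for reaching the goal, a small penalty for every other step
        reward = 1.0 if done else -0.1
        return self.position, reward, done


def run_episode(env, policy, max_steps=100):
    """Observe the state, pick an action, collect the reward, repeat."""
    state = env.reset()
    total_reward = 0.0
    for _ in range(max_steps):
        action = policy(state)
        state, reward, done = env.step(action)
        total_reward += reward
        if done:
            break
    return total_reward


# Example: compare a purposeful policy with a random one
print(run_episode(CorridorEnv(), lambda s: 1))
print(run_episode(CorridorEnv(), lambda s: random.choice([0, 1])))
```

A policy that always moves right reaches the goal in four steps and earns 1.0 minus three 0.1 penalties, about 0.7; a random policy usually wanders back and forth and scores lower. The environment only hands out rewards and penalties; it never tells the agent which move was “correct.”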

Think of it like playing a video game: the agent experiments with different moves, learning which ones lead to higher scores (rewards) and avoiding those that get it stuck or killed (penalties). The key difference from supervised learning? Nobody hands the agent a pre-programmed winning strategy. It figures one out through trial and error, constantly refining its approach based on the rewards and penalties it receives.
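To make “trial and error” a bit more concrete, here’s a minimal sketch of tabular Q-learning, one classic RL algorithm. The post doesn’t name a specific algorithm, so this choice is ours, as are the hypothetical names `corridor_step`, `q_learning`, and `greedy_policy` and the illustrative settings (`alpha`, `gamma`, `epsilon`). The agent keeps a table of how good each action seems in each spot of a tiny corridor, mostly picks the best-looking action, occasionally explores at random, and nudges its estimates after every reward or penalty.

```python
import random


def corridor_step(position, action, length=5):
    """Hypothetical toy environment: move left (0) or right (1) along a corridor.
    Returns (next_position, reward, done); the goal is the last cell."""
    move = 1 if action == 1 else -1
    next_position = max(0, min(length - 1, position + move))
    done = next_position == length - 1
    reward = 1.0 if done else -0.1
    return next_position, reward, done


def q_learning(episodes=200, length=5, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    # Q-table: estimated long-term value of each (state, action) pair, initially zero
    q = [[0.0, 0.0] for _ in range(length)]
    for _ in range(episodes):
        state, done, steps = 0, False, 0
        while not done and steps < 100:
            # Explore with probability epsilon (or when both actions look equal);
            # otherwise exploit the action with the higher estimate
            if rng.random() < epsilon or q[state][0] == q[state][1]:
                action = rng.choice([0, 1])
            else:
                action = 0 if q[state][0] > q[state][1] else 1
            next_state, reward, done = corridor_step(state, action, length)
            # Target: immediate reward plus discounted best estimate for the next state
            target = reward if done else reward + gamma * max(q[next_state])
            # Move the old estimate a fraction alpha toward the target
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
            steps += 1
    return q


def greedy_policy(q):
    """Best-looking action per state after learning (1 = right, 0 = left)."""
    return [0 if left > right else 1 for left, right in q]


q = q_learning()
print(greedy_policy(q)[:4])
```

After training, the learned policy should point right in every non-goal cell. The small per-step penalty is what pushes the agent toward the shortest route: it discovers that the goal lies to the right purely from the rewards it collects.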

The Power of Trial and Error: What Can RL Actually Do?

The possibilities of RL are vast and constantly evolving. Here are a few mind-boggling examples:

- Mastering Games: From classic Atari titles like Pong and Space Invaders to complex strategy games like StarCraft II, RL agents are pushing the boundaries of AI gaming prowess. AlphaGo, a program developed by DeepMind, famously defeated Lee Sedol, one of the world’s top Go players, in 2016, showcasing the power of RL in complex decision-making scenarios.

- Optimizing Robots: Imagine robots that can navigate warehouses with superhuman efficiency, or even perform delicate surgery with unmatched precision. RL helps train robots to adapt to dynamic environments, constantly learning and improving their actions based on real-time feedback.

- Self-Driving Cars: While we’re not quite ready for fully autonomous vehicles on the road, RL is one of the techniques being used to teach self-driving cars to make split-second decisions in complex traffic situations. By simulating millions of driving scenarios and rewarding safe, efficient behavior, RL helps train these vehicles to navigate the real world with (hopefully) minimal fender benders.

But Wait, Are These RL Agents Getting a Little Too Smart?

As RL continues to evolve, ethical considerations become paramount. Here are some questions to ponder:

- Bias in the System: What happens if the rewards and penalties an agent receives are biased? For example, if an RL-powered hiring algorithm is trained on historical data that favors certain demographics, it could perpetuate discriminatory practices. We need to ensure fairness and inclusivity in the design and training of RL algorithms.

- The Black Box Problem: With complex RL algorithms, it can be difficult to understand how they arrive at their decisions. This lack of transparency can be unsettling. How can we trust an RL-powered medical diagnosis system if we don’t fully understand its reasoning? Explainability and transparency become crucial as RL grows more sophisticated.

- Superintelligence? Is RL a stepping stone to robots becoming self-aware and potentially a threat to humanity? (Cue dramatic movie music.) While this is a common trope in science fiction, the reality is likely less sensational. Still, it’s important to have open discussions about the responsible development and deployment of advanced AI systems, including those trained with RL.

The Future of RL: A Balancing Act

The future of reinforcement learning is bright, with the potential to revolutionize many industries and solve complex problems. However, it’s not without its challenges. By addressing issues like bias, transparency, and responsible development, we can make sure RL becomes a force for good, helping us create a future where humans and machines live together as awesome roommates, not overlords and underlings.

What are your thoughts on the potential and the ethical considerations of reinforcement learning? Share your ideas in the comments below! Let’s keep the conversation going.