The MIT Deep Learning community has significantly influenced our understanding and the discussion on neural networks 🙂 For a deeper dive, see MIT’s original sources [1][4].

We see AI everywhere, and Deep Learning has certainly revolutionized many fields. In autonomous vehicles, it helps cars perceive and navigate. In healthcare, it aids in diagnosing diseases and personalizing treatments. Reinforcement learning allows AI to excel at decision-making in gaming and robotics, learning complex tasks. Generative modeling allows generating realistic images, music, and text. The list of applications goes on and on, including natural language processing and security.

So far, we believe we have gained a clear understanding of how these algorithms drive advancements. Neural networks transform input data (signals, images, sensor measurements) into decisions (predictions, classifications, actions in reinforcement learning). They can also work the other way around and generate new data from desired outcomes, as seen in generative modeling. Essentially, all the algorithm is trying to do is estimate a function that maps some inputs to some outputs, building up an internal representation of that mapping.
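
To make the "inputs to decisions" idea concrete, here is a minimal sketch of a forward pass through a tiny network. The layer sizes, the weights `W1`, `b1`, `W2`, `b2`, and the helper names are all hypothetical and hand-picked for illustration; nothing here is trained or taken from the cited sources.

```python
import math

def dense(inputs, weights, biases):
    """One fully connected layer: weights[j] holds the input weights of unit j."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]

def relu(values):
    return [max(0.0, v) for v in values]

def softmax(values):
    peak = max(values)  # subtract the max for numerical stability
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical, hand-picked parameters (not trained):
# 3 inputs -> 2 hidden units -> 2 classes.
W1 = [[0.5, -0.2, 0.1], [-0.3, 0.8, 0.4]]
b1 = [0.0, 0.1]
W2 = [[1.0, -1.0], [-1.0, 1.0]]
b2 = [0.0, 0.0]

def predict(sensor_reading):
    """Map raw input measurements to class probabilities and a decision."""
    hidden = relu(dense(sensor_reading, W1, b1))
    probs = softmax(dense(hidden, W2, b2))
    decision = max(range(len(probs)), key=probs.__getitem__)
    return probs, decision

probs, decision = predict([1.0, 0.5, -1.0])
print(probs, decision)
```

In a real system the weights would be learned from data instead of written by hand, but the mapping from measurements to a decision has the same shape.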

Back in 1989, the Universal Approximation Theorem [7][8] posited that a neural network with enough neurons could approximate any continuous function mapping input to output. This theorem highlighted neural networks’ theoretical potential to solve diverse problems by learning from data [2][4]. However, it didn’t address practical challenges like defining the network architecture, finding optimal weights, or ensuring generalization to new tasks [2][5]. Partly, this may have led to overlooking these challenges and overestimating neural networks’ capabilities on real-world problems.
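
One common way to state the single-hidden-layer version of the theorem is sketched below. The notation ($N$, $v_i$, $w_i$, $b_i$, $\sigma$, $K$) is our own and is an assumption for illustration, not taken from the cited sources.

$$
\forall \varepsilon > 0 \;\; \exists N \in \mathbb{N},\; v_i, b_i \in \mathbb{R},\; w_i \in \mathbb{R}^n : \quad \sup_{x \in K} \left| f(x) - \sum_{i=1}^{N} v_i \, \sigma\!\left(w_i^{\top} x + b_i\right) \right| < \varepsilon
$$

Here $f$ is any continuous function on a compact set $K \subset \mathbb{R}^n$, and $\sigma$ is a suitable activation such as a sigmoid. The statement only says that such parameters exist: it gives no bound on the number of neurons $N$.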

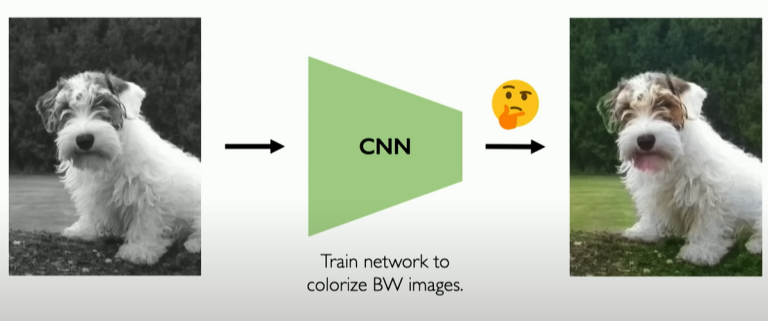

One harmless, simple example is this: suppose a Convolutional Neural Network (CNN) attempts to colorize a black-and-white image of a dog but ends up giving the dog a subtly green-tinted ear and a pink chin with the tongue sticking out (Figure 1). This could occur because the training data likely included many images of dogs with their tongues out or with grass backgrounds, causing the CNN to misinterpret these features. This highlights how heavily deep learning models rely on their training data, which often leads to issues like algorithmic bias and potential failures in critical applications.

Another example is the safety-critical scenario of autonomous driving. Cars on autopilot can sometimes crash or perform nonsensical maneuvers, sometimes with fatal consequences. These failures usually occur when neural networks encounter situations they haven’t been carefully trained on, leading to high uncertainty and ineffective handling of these scenarios.

There are tons of examples of failure modes [2][5], and the list below is far from exhaustive. Still, these are some limitations of neural networks we commonly encounter today:

- Very data hungry (often millions of examples)

- Computationally intensive to train and deploy (requires GPUs)

- Easily fooled by adversarial examples

- Can be subject to algorithmic bias

- Poor at representing uncertainty (how do you know what the model knows?)

- Uninterpretable black boxes, difficult to trust

- Often require expert knowledge to design and fine-tune architectures

- Difficult to encode structure and prior knowledge during learning

- Struggle with extrapolation (going beyond data)
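
The extrapolation limitation can be illustrated with a small toy sketch. The assumptions are loud ones: the one-hidden-layer ReLU network below is constructed by hand to interpolate `math.sin` on a "training" interval rather than learned from data, and the helper names (`build_relu_network`, `max_error`) and the chosen intervals are hypothetical. It only shows the qualitative effect: accuracy inside the range seen during fitting says little about behavior outside it.

```python
import math

def build_relu_network(f, lo, hi, n_units):
    """Construct (not train) a one-hidden-layer ReLU network that linearly
    interpolates f at n_units + 1 evenly spaced points on [lo, hi]."""
    step = (hi - lo) / n_units
    knots = [lo + i * step for i in range(n_units + 1)]
    slopes = [(f(knots[i + 1]) - f(knots[i])) / step for i in range(n_units)]
    # The output weight of unit i is the change in slope at knot i.
    out_weights = [slopes[0]] + [slopes[i] - slopes[i - 1]
                                 for i in range(1, n_units)]
    bias = f(lo)

    def network(x):
        hidden = [max(0.0, x - k) for k in knots[:-1]]  # ReLU(x - knot_i)
        return bias + sum(w * h for w, h in zip(out_weights, hidden))

    return network

def max_error(model, f, lo, hi, samples=1000):
    """Largest absolute error of model against f on an even grid over [lo, hi]."""
    xs = [lo + (hi - lo) * i / samples for i in range(samples + 1)]
    return max(abs(model(x) - f(x)) for x in xs)

TRAIN_LO, TRAIN_HI = 0.0, math.pi  # the range the network was fitted on
net = build_relu_network(math.sin, TRAIN_LO, TRAIN_HI, n_units=32)

in_range_error = max_error(net, math.sin, TRAIN_LO, TRAIN_HI)
out_of_range_error = max_error(net, math.sin, TRAIN_HI, 3 * math.pi)
print(in_range_error, out_of_range_error)
```

Inside the fitted interval the error is small and shrinks as `n_units` grows. Beyond it, the network simply continues along its last linear segment while the sine keeps oscillating, so the error becomes large.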

These are the open problems we see in AI and Deep Learning research today. We hope this list serves as both an invitation and a motivation for further innovation, and that addressing these problems will advance the field.

References

- [1] Moitra, Ankur. “18.408 Theoretical Foundations for Deep Learning, Spring 202.” People.csail.mit.edu, Feb. 2021, people.csail.mit.edu/moitra/408c.html. Accessed 23 June 2024.

- [2] Thompson, Neil, et al. The Computational Limits of Deep Learning. 2020.

- [3] Talaei Khoei, Tala, et al. “Deep Learning: Systematic Review, Models, Challenges, and Research Directions.” Neural Computing and Applications, vol. 35, 7 Sept. 2023, https://doi.org/10.1007/s00521-023-08957-4.

- [4] MIT Deep Learning 6.S191. introtodeeplearning.com/.

- [5] Raissi, Maziar. Open Problems in Applied Deep Learning. 2023.

- [6] Nielsen, Michael A. “Neural Networks and Deep Learning.” Neuralnetworksanddeeplearning.com, Determination Press, 2019, neuralnetworksanddeeplearning.com/chap4.html.

- [7] Zhou, Ding-Xuan. “Universality of Deep Convolutional Neural Networks.” Applied and Computational Harmonic Analysis, vol. 48, no. 2, June 2019, https://doi.org/10.1016/j.acha.2019.06.004.

- [8] Schäfer, Anton Maximilian, and Hans Georg Zimmermann. “Recurrent Neural Networks Are Universal Approximators.” Artificial Neural Networks – ICANN 2006, 2006, pp. 632–640, https://doi.org/10.1007/11840817_66.