Overfitting and underfitting are two of the most important yet most misunderstood topics for beginners in machine learning. While beginners may understand these concepts in theory, applying them in practice often proves challenging. This article aims to address these issues and make the practical implications easier to understand.

Bias:

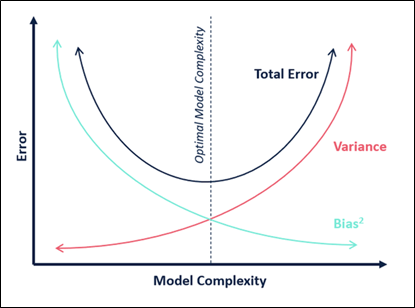

Bias refers to the assumptions a model makes to simplify the process of learning the target function. It can be seen as an inherent error that persists even with infinite training data. This occurs because the model is biased toward a particular kind of solution. If a model makes the same mistake repeatedly, it is considered biased.

- Low bias: Indicates fewer assumptions (e.g., KNN, decision tree)

- High bias: Indicates more assumptions (e.g., linear regression, logistic regression)

A model with high bias pays little attention to the training data, leading to underfitting.
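To make the idea of high bias concrete, here is a minimal sketch. It assumes scikit-learn and NumPy are installed and uses synthetic quadratic data that is not from this article; the variable names are only illustrative. A straight line cannot represent a curve, so the linear model scores poorly even on the data it was trained on, while a fully grown decision tree (low bias) fits the same data almost perfectly.

```python
import numpy as np
from sklearn.linear_model import LinearRegression
from sklearn.tree import DecisionTreeRegressor

# Synthetic data (an assumption for illustration): y depends on x squared.
rng = np.random.default_rng(0)
X = rng.uniform(-3, 3, size=(200, 1))
y = X[:, 0] ** 2 + rng.normal(scale=0.1, size=200)

# High-bias model: assumes a straight-line relationship.
linear = LinearRegression().fit(X, y)
# Low-bias model: makes almost no assumptions about the shape.
tree = DecisionTreeRegressor(random_state=0).fit(X, y)

# R-squared on the training data itself.
linear_train_r2 = linear.score(X, y)
tree_train_r2 = tree.score(X, y)
print(f"Linear regression train R^2: {linear_train_r2:.2f}")
print(f"Decision tree train R^2:     {tree_train_r2:.2f}")
```

A poor score on the training data is the typical signature of underfitting: more data would not help, because the limitation is in the model's assumptions.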

Variance:

Variance measures the model's sensitivity to changes in the training data. It indicates how much the model's predictions would change if it were trained on different datasets. High variance means the model pays too much attention to the training data, leading to overfitting and difficulty generalizing to new data.

- Low variance: Small changes in predictions with different datasets

- High variance: Large changes in predictions with different datasets
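One rough way to see variance directly is to retrain the same model on several resampled versions of the data and check how much its predictions move. The sketch below assumes scikit-learn and NumPy, uses synthetic data, and relies on a hypothetical helper named `prediction_variance`; it is an illustration of the idea, not a standard library routine.

```python
import numpy as np
from sklearn.linear_model import LinearRegression
from sklearn.tree import DecisionTreeRegressor

# Synthetic noisy data (an assumption for illustration).
rng = np.random.default_rng(0)
X = rng.uniform(-3, 3, size=(300, 1))
y = np.sin(X[:, 0]) + rng.normal(scale=0.3, size=300)
X_query = np.linspace(-2.5, 2.5, 50).reshape(-1, 1)  # fixed points to predict

def prediction_variance(make_model, n_rounds=30):
    """Average variance of predictions across bootstrap-resampled training sets."""
    preds = []
    for _ in range(n_rounds):
        idx = rng.integers(0, len(X), size=len(X))  # sample rows with replacement
        model = make_model().fit(X[idx], y[idx])
        preds.append(model.predict(X_query))
    # Variance across rounds at each query point, then averaged over points.
    return float(np.mean(np.var(np.array(preds), axis=0)))

var_linear = prediction_variance(LinearRegression)
var_tree = prediction_variance(lambda: DecisionTreeRegressor(random_state=0))
print(f"Linear regression prediction variance: {var_linear:.4f}")
print(f"Decision tree prediction variance:     {var_tree:.4f}")
```

The unpruned tree's predictions should shift far more from one resampled dataset to the next than the linear model's, which is what high variance means in practice.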

Case 1:

Consider the R-squared value of a model:

- R-squared on training data: 0.29

- R-squared on test data: 0.02

Because the gap between the R-squared values on the training and test data is large, the model exhibits high variance. Note that a training R-squared of only 0.29 also suggests the model does not fit the training data well.
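The comparison in this case can be sketched in a few lines. This example assumes scikit-learn and uses a synthetic regression dataset with an unpruned decision tree, so the numbers it prints will differ from the 0.29 and 0.02 quoted above; only the train-versus-test comparison is the point.

```python
from sklearn.datasets import make_regression
from sklearn.metrics import r2_score
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeRegressor

# Synthetic noisy regression data (an assumption for illustration).
X, y = make_regression(n_samples=300, n_features=10, noise=50.0, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=0
)

# An unpruned tree memorizes the training set.
model = DecisionTreeRegressor(random_state=0).fit(X_train, y_train)

train_r2 = r2_score(y_train, model.predict(X_train))
test_r2 = r2_score(y_test, model.predict(X_test))
gap = train_r2 - test_r2
print(f"Train R^2: {train_r2:.2f}, test R^2: {test_r2:.2f}, gap: {gap:.2f}")
```

A large positive gap points to high variance; a low score on both sets points to high bias instead.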

Case 2:

Consider polynomial regression of degrees 1, 2, and 3 with the following R-squared values:

The degree 3 model shows the largest change in R-squared (from 1 to 0.07), indicating the highest variance.
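A comparison like this one can be reproduced with a short loop. The sketch below assumes scikit-learn and NumPy and uses a small synthetic dataset, so its output will not match the values quoted above. It fits polynomial models of degrees 1 to 3 and reports the R-squared on the training and test splits for each.

```python
import numpy as np
from sklearn.linear_model import LinearRegression
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import PolynomialFeatures

# Small synthetic dataset (an assumption): a linear trend plus noise.
rng = np.random.default_rng(1)
X = rng.uniform(-2, 2, size=(40, 1))
y = 1.5 * X[:, 0] + rng.normal(scale=1.0, size=40)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.5, random_state=1
)

results = {}  # degree -> (train R^2, test R^2)
for degree in (1, 2, 3):
    model = make_pipeline(
        PolynomialFeatures(degree, include_bias=False), LinearRegression()
    )
    model.fit(X_train, y_train)
    results[degree] = (
        model.score(X_train, y_train),
        model.score(X_test, y_test),
    )
    train_r2, test_r2 = results[degree]
    print(f"degree={degree}: train R^2={train_r2:.2f}, test R^2={test_r2:.2f}")
```

The training R-squared can only stay the same or rise as the degree grows, so the quantity to watch is how far the test score falls behind it.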

Case 3: Random Forest Classifier

    from sklearn.ensemble import RandomForestClassifier

    # Create a Random Forest classifier
    rf_model = RandomForestClassifier(n_estimators=200, max_depth=10, random_state=42)

- Decreasing the number of trees reduces model complexity and, with too few trees, can lead to underfitting.

- Increasing the number of trees generally improves performance and reduces variance without causing overfitting.

- Decreasing the depth of the trees simplifies the model, which can lead to underfitting.

- Increasing the depth of the trees makes the model more complex, which can lead to overfitting.
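The effect of these two hyperparameters can be checked empirically. The following sketch assumes scikit-learn and a synthetic classification dataset with some label noise; the candidate values for `max_depth` and `n_estimators` are arbitrary choices for illustration, not recommendations.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import train_test_split

# Synthetic data with 10% randomly reassigned labels (an assumption).
X, y = make_classification(
    n_samples=500, n_features=20, n_informative=5, flip_y=0.1, random_state=42
)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=42
)

def fit_and_score(**params):
    """Fit a forest and return (train accuracy, test accuracy)."""
    rf_model = RandomForestClassifier(random_state=42, **params)
    rf_model.fit(X_train, y_train)
    return rf_model.score(X_train, y_train), rf_model.score(X_test, y_test)

# Vary tree depth with the number of trees held fixed.
depth_scores = {}
for max_depth in (2, 10, None):  # None lets each tree grow fully
    depth_scores[max_depth] = fit_and_score(n_estimators=100, max_depth=max_depth)
    print(f"max_depth={max_depth}: train/test = {depth_scores[max_depth]}")

# Vary the number of trees with depth held fixed.
tree_scores = {}
for n_estimators in (5, 50, 200):
    tree_scores[n_estimators] = fit_and_score(n_estimators=n_estimators, max_depth=10)
    print(f"n_estimators={n_estimators}: train/test = {tree_scores[n_estimators]}")
```

Very shallow trees tend to score lower on both splits, while fully grown trees push training accuracy toward 1.0 and widen the gap to the test score.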

Case 4: K-Nearest Neighbors (KNN)

    from sklearn.neighbors import KNeighborsClassifier

    # Define the number of neighbors for KNN
    k = 2
    # Initialize the KNN classifier
    knn_classifier = KNeighborsClassifier(n_neighbors=k, metric='euclidean')

Increasing the value of 'k' increases bias and makes the decision boundary smoother, while a very small 'k' makes the model sensitive to individual training points. An optimal value of 'k' can be chosen through cross-validation.
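Choosing 'k' by cross-validation might look like the sketch below. It assumes scikit-learn and a synthetic dataset; the list of candidate values is an arbitrary choice for illustration.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import cross_val_score
from sklearn.neighbors import KNeighborsClassifier

# Synthetic classification data (an assumption for illustration).
X, y = make_classification(
    n_samples=400, n_features=10, n_informative=5, random_state=0
)

candidate_ks = [1, 3, 5, 7, 9, 15, 25]
cv_scores = {}
for k in candidate_ks:
    knn_classifier = KNeighborsClassifier(n_neighbors=k, metric="euclidean")
    # Mean accuracy over 5 cross-validation folds.
    cv_scores[k] = cross_val_score(knn_classifier, X, y, cv=5).mean()
    print(f"k={k}: mean CV accuracy = {cv_scores[k]:.3f}")

# Pick the k with the highest mean cross-validated accuracy.
best_k = max(cv_scores, key=cv_scores.get)
print(f"Best k: {best_k}")
```

Because KNN relies on distances, scaling the features before fitting usually matters on real data; that step is omitted here to keep the sketch short.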

Case 5: Naive Bayes Classifier

    from sklearn.naive_bayes import GaussianNB

    # Initialize the Naive Bayes classifier
    nb_model = GaussianNB(var_smoothing=1e-9)

var_smoothing is a hyperparameter that adds a small value (a fraction of the largest feature variance) to the variance of every feature, which avoids division by zero and improves numerical stability. Increasing var_smoothing makes the model less sensitive to small variations in the data, and setting it too high can lead to underfitting.
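The influence of var_smoothing can be explored with a simple sweep. The sketch below assumes scikit-learn and a synthetic dataset, and the candidate values are arbitrary. It also reads the fitted `epsilon_` attribute, which holds the absolute amount added to each variance.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import train_test_split
from sklearn.naive_bayes import GaussianNB

# Synthetic classification data (an assumption for illustration).
X, y = make_classification(
    n_samples=500, n_features=10, n_informative=5, random_state=0
)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=0
)

smoothing_results = {}
for var_smoothing in (1e-9, 1e-3, 1.0, 100.0):
    nb_model = GaussianNB(var_smoothing=var_smoothing).fit(X_train, y_train)
    smoothing_results[var_smoothing] = {
        # Absolute value added to every feature variance after fitting.
        "epsilon": float(nb_model.epsilon_),
        "train_acc": nb_model.score(X_train, y_train),
        "test_acc": nb_model.score(X_test, y_test),
    }
    print(var_smoothing, smoothing_results[var_smoothing])
```

As the added value grows, the per-feature variances matter less and the classifier behaves more like one that only compares distances to the class means, so it is worth watching whether both accuracies drop at the largest settings.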