A Support Vector Machine (SVM) is a supervised learning algorithm used for classification and regression tasks. It works by finding the best boundary (hyperplane) that separates the different classes in the data. SVM tries to maximize the margin between this boundary and the closest data points (support vectors) from each class. It can handle both linear and non-linear data by using kernel functions. SVMs are effective in high-dimensional spaces and are versatile in terms of classification complexity.

- High-Dimensional Effectiveness: Performs well with many features.

- Kernel Versatility: Handles linear and non-linear data.

- Overfitting Robustness: Focuses on the most critical points (the support vectors), reducing overfitting.

- High-Dimensional Data: When the number of features is large relative to the number of samples.

- Non-linear Boundaries: When the decision boundary between classes is not linear.

- Small to Medium-Sized Datasets: When dealing with datasets where overfitting is a concern but computational resources are limited.
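To make the point about non-linear boundaries concrete, here is a minimal sketch that compares a linear kernel with an RBF kernel. The synthetic two-ring dataset from `make_circles` and every parameter value are assumptions chosen for illustration; none of this comes from the breast cancer notebook.

```python
from sklearn.datasets import make_circles
from sklearn.model_selection import train_test_split
from sklearn.svm import SVC

# Two concentric rings: no straight line can separate them.
X, y = make_circles(n_samples=400, factor=0.3, noise=0.05, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0
)

# Fit the same classifier with two different kernels and compare test accuracy.
scores = {}
for kernel in ("linear", "rbf"):
    clf = SVC(kernel=kernel).fit(X_train, y_train)
    scores[kernel] = clf.score(X_test, y_test)
    print(f"{kernel:>6} kernel test accuracy: {scores[kernel]:.2f}")
```

On data like this the RBF kernel should score clearly higher, because it can bend the boundary around the inner ring while the linear kernel cannot.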

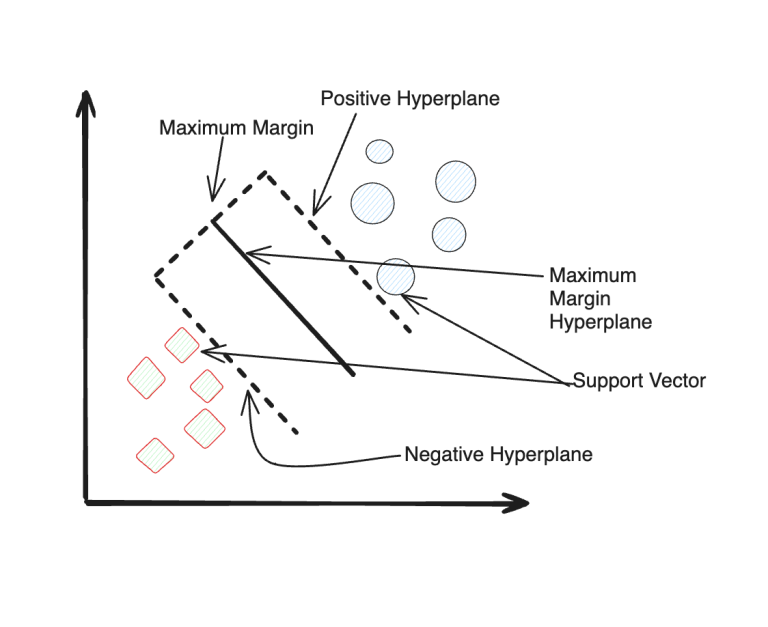

1. Hyperplane:

A hyperplane is used to separate the different classes of data. In a 2-dimensional space it is a line; in higher dimensions it becomes a hyperplane. The goal is to find the hyperplane that maximizes the margin between the classes.

2. Maximizing the Margin:

The SVM algorithm seeks the hyperplane that has the largest distance (margin) to the nearest points from any class, which are called support vectors. This maximized margin helps improve the generalization ability of the classifier.

3. Support Vectors:

These are the data points that are closest to the hyperplane and influence its position and orientation. The hyperplane is defined based on these support vectors, rather than the entire dataset.
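The three ideas above can be inspected directly on a toy problem. This is a small sketch, assuming six hand-made 2-D points and a very large `C` to approximate a hard margin; for a linear SVM with weight vector `w`, the margin width is `2 / ||w||`.

```python
import numpy as np
from sklearn.svm import SVC

# Six hand-made, linearly separable 2-D points (illustrative only).
X = np.array([[1.0, 1.0], [2.0, 1.0], [1.0, 2.0],
              [4.0, 4.0], [5.0, 4.0], [4.0, 5.0]])
y = np.array([0, 0, 0, 1, 1, 1])

# A very large C approximates a hard-margin SVM.
clf = SVC(kernel="linear", C=1e6).fit(X, y)

w = clf.coef_[0]        # normal vector of the separating hyperplane
b = clf.intercept_[0]   # offset of the hyperplane
margin_width = 2.0 / np.linalg.norm(w)

print("hyperplane: w =", w, "b =", b)
print("margin width:", margin_width)
print("support vectors:\n", clf.support_vectors_)
```

Only the points listed in `support_vectors_` determine the boundary. Moving any other point (without crossing the margin) leaves the hyperplane unchanged.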

- Data Preprocessing: Prepare your dataset by cleaning, transforming, and scaling as necessary.

- Split Data: Divide the dataset into training and testing sets for evaluation.

- Choose Kernel: Select a kernel type (e.g., linear, polynomial, RBF) and tune its parameters if necessary.

- Instantiate SVM: Create an SVM classifier object with the chosen parameters and kernel.

- Train the Model: Fit the SVM classifier to the training data.

- Predictions: Use the trained SVM model to predict outcomes for new data.

- Evaluate Performance: Assess the model’s accuracy and other metrics using the test set.
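The steps above can be sketched in a few lines of scikit-learn. As an assumption to keep the example self-contained, it uses scikit-learn's bundled breast cancer dataset as a stand-in for the Kaggle CSV, and the kernel, `C`, `gamma`, and split sizes are illustrative defaults rather than the notebook's settings.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

# Load features and labels (stand-in for the Kaggle CSV).
X, y = load_breast_cancer(return_X_y=True)

# Split data into training and testing sets.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42, stratify=y
)

# Preprocess: fit the scaler on the training set only, then apply to both.
scaler = StandardScaler().fit(X_train)
X_train_s = scaler.transform(X_train)
X_test_s = scaler.transform(X_test)

# Choose a kernel and instantiate the classifier.
model = SVC(kernel="rbf", C=1.0, gamma="scale")

# Train, predict, and evaluate.
model.fit(X_train_s, y_train)
y_pred = model.predict(X_test_s)
accuracy = accuracy_score(y_test, y_pred)
print(f"Test accuracy: {accuracy:.3f}")
```

Scaling matters for SVMs because the margin is measured in feature space, so a feature with a large numeric range would otherwise dominate the distance calculation.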

- Accuracy: The proportion of correctly classified instances out of the total instances evaluated.

- Precision: The ratio of true positive predictions to the total predicted positives. It measures the accuracy of positive predictions.

- Recall (Sensitivity): The ratio of true positive predictions to the total actual positives. It measures the ability of the model to identify all positive instances.

- F1 Score: The harmonic mean of precision and recall. It provides a single metric that balances both precision and recall.

- Confusion Matrix: A table that summarizes the number of true positives, true negatives, false positives, and false negatives. It’s useful for understanding where the model is making errors.

- ROC Curve (Receiver Operating Characteristic Curve): A graphical plot that illustrates the performance of a binary classifier as its discrimination threshold is varied. It plots the true positive rate (TPR) against the false positive rate (FPR).

- AUC (Area Under the ROC Curve): The area under the ROC curve. It provides an aggregate measure of performance across all possible classification thresholds.

- Precision-Recall Curve: A graphical plot that shows the trade-off between precision and recall for different threshold values.
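Here is a small sketch of how most of these metrics can be computed with `sklearn.metrics`. The eight labels and scores below are made up for illustration, and the predictions are simply the scores thresholded at 0.5.

```python
from sklearn.metrics import (
    accuracy_score,
    confusion_matrix,
    f1_score,
    precision_score,
    recall_score,
    roc_auc_score,
)

# Made-up ground truth, classifier scores, and thresholded predictions.
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_score = [0.9, 0.2, 0.4, 0.8, 0.1, 0.6, 0.7, 0.3]
y_pred = [1 if s >= 0.5 else 0 for s in y_score]

accuracy = accuracy_score(y_true, y_pred)
precision = precision_score(y_true, y_pred)
recall = recall_score(y_true, y_pred)
f1 = f1_score(y_true, y_pred)

# For binary labels, ravel() returns counts in the order tn, fp, fn, tp.
tn, fp, fn, tp = confusion_matrix(y_true, y_pred).ravel()

# AUC is computed from the continuous scores, not the thresholded predictions.
auc = roc_auc_score(y_true, y_score)

print(f"accuracy={accuracy:.2f} precision={precision:.2f} "
      f"recall={recall:.2f} f1={f1:.2f} auc={auc:.4f}")
print(f"tn={tn} fp={fp} fn={fn} tp={tp}")
```

To get scores like `y_score` from an `SVC`, one option is its `decision_function` method, which returns the signed distance of each sample from the hyperplane.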

- Image Classification and Object Recognition: SVMs excel at accurately categorizing images into predefined classes, making them valuable in applications like autonomous vehicles for identifying road signs and pedestrians.

- Biomedical Applications: In bioinformatics, SVMs are crucial for analyzing gene expression data and predicting protein function, aiding in disease diagnosis and drug discovery efforts.

Check out the implementation of SVM using the breast cancer dataset:

Dataset Link: https://www.kaggle.com/datasets/krupadharamshi/breast-cancer-dataset/data

Notebook Link: https://www.kaggle.com/code/krupadharamshi/svm-model-krupa

Here’s a brief description of each column in the Breast Cancer dataset:

- id: Unique identification number.

- diagnosis: Malignant (M) or benign (B).

- radius_mean: Mean radius of tumor.

- texture_mean: Mean texture of tumor.

- perimeter_mean: Mean perimeter of tumor.

- area_mean: Mean area of tumor.

- smoothness_mean: Mean smoothness of tumor.

- compactness_mean: Mean compactness of tumor.

- concavity_mean: Mean concavity of tumor.

- concave points_mean: Mean number of concave portions.

- symmetry_mean: Mean symmetry of tumor.

- fractal_dimension_mean: Mean fractal dimension of tumor.

- radius_se: Standard error of radius.

- texture_se: Standard error of texture.

- perimeter_se: Standard error of perimeter.

- area_se: Standard error of area.

- smoothness_se: Standard error of smoothness.

- compactness_se: Standard error of compactness.

- concavity_se: Standard error of concavity.

- concave points_se: Standard error of concave points.

- symmetry_se: Standard error of symmetry.

- fractal_dimension_se: Standard error of fractal dimension.

- radius_worst: Worst (largest) radius of tumor.

- texture_worst: Worst (largest) texture of tumor.

- perimeter_worst: Worst (largest) perimeter of tumor.

- area_worst: Worst (largest) area of tumor.

- smoothness_worst: Worst (largest) smoothness of tumor.

- compactness_worst: Worst (largest) compactness of tumor.

- concavity_worst: Worst (largest) concavity of tumor.

- concave points_worst: Worst (largest) number of concave portions.

- symmetry_worst: Worst (largest) symmetry of tumor.

- fractal_dimension_worst: Worst (largest) fractal dimension of tumor.

- Unnamed: 32: Additional unspecified column (likely NaN values).
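Given the columns listed above, a typical cleanup might look like the sketch below. To keep it runnable without downloading anything, it builds a tiny stand-in DataFrame with three made-up rows and only a few of the columns. With the real file you would start from `pd.read_csv(...)` instead. The 1/0 encoding for malignant/benign is also an assumption.

```python
import numpy as np
import pandas as pd

# Three made-up rows standing in for the real CSV; only a few columns shown.
df = pd.DataFrame({
    "id": [101, 102, 103],
    "diagnosis": ["M", "B", "M"],
    "radius_mean": [17.9, 12.4, 20.1],
    "texture_mean": [10.4, 15.7, 21.3],
    "Unnamed: 32": [np.nan, np.nan, np.nan],
})

# Drop the identifier and the empty trailing column: neither carries signal.
df = df.drop(columns=["id", "Unnamed: 32"])

# Encode the target as numbers: malignant -> 1, benign -> 0 (assumed encoding).
df["diagnosis"] = df["diagnosis"].map({"M": 1, "B": 0})

# Separate features from the target.
X = df.drop(columns=["diagnosis"])
y = df["diagnosis"]

print(X.columns.tolist())
print(y.tolist())
```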

The code we implemented demonstrates building an SVM model using the given dataset. Here’s a summary of the steps:

- Data Preparation: Load, clean, and preprocess the dataset for analysis.

- Visualization: Explore data distributions and correlations visually.

- Feature Selection: Choose relevant features for the SVM model.

- Model Training: Split the data, scale the features, and train the SVM model.

- Prediction: Generate predictions on the test data.

- Evaluation: Assess model performance using metrics like the confusion matrix and classification report.

- Visualization of Results: Visualize the model evaluation results.

- Conclusion: Summarize findings and propose next steps for refinement or application.

Overall, the code demonstrates a basic workflow for building and evaluating an SVM model using Python and scikit-learn.

About me (Krupa Dharamshi)

Hey there! I’m Krupa, a tech enthusiast with an insatiable curiosity for all things tech. Join me on this exciting journey as we explore the latest innovations, embrace the digital age, and dive into the world of artificial intelligence and cutting-edge gadgets. I hope you all like this blog. See you all in the next blog! Let’s unravel the marvels of technology together!