The Skip-gram model works by predicting the context words given a target word. For example, in the sentence "I love machine learning," if "love" is the target word, the model will try to predict "I" and "machine" as the context words.
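The training data for Skip-gram is a list of (target, context) pairs. Below is a minimal sketch of how such pairs could be generated. The function name `skipgram_pairs` and its `window` parameter are illustrative. A window of one word on each side is an assumption chosen because it reproduces the example above.

```python
def skipgram_pairs(tokens, window=1):
    """Return (target, context) pairs for every position in `tokens`.

    `window` is how many words on each side count as context (assumed value;
    window=1 reproduces the "love" -> "I", "machine" example).
    """
    pairs = []
    for i, target in enumerate(tokens):
        # Visit up to `window` neighbours on each side, clipped to the sentence.
        for j in range(max(0, i - window), min(len(tokens), i + window + 1)):
            if j != i:  # a word is not its own context
                pairs.append((target, tokens[j]))
    return pairs


sentence = ["I", "love", "machine", "learning"]
for target, context in skipgram_pairs(sentence, window=1):
    print(target, "->", context)
```

With `window=1`, "love" yields the pairs ("love", "I") and ("love", "machine"). A larger window simply adds more distant neighbours as context words.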

How Skip-gram Works

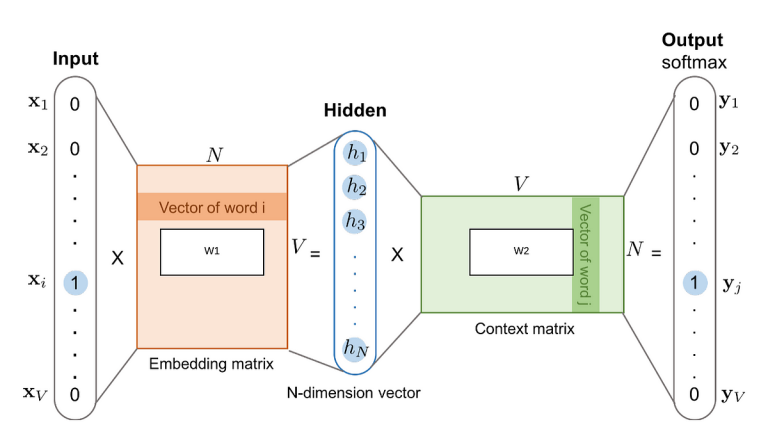

- Input Layer: The input to the model is the one-hot encoded vector of the target word. For example, if the target word is "love," the input row vector is [0, 1, 0, 0], with dimension 4 (one entry per vocabulary word). As a matrix, it is 1×4 (1 row and 4 columns).

- Hidden Layer: The input vector is multiplied by a weight matrix to produce the hidden layer. This weight matrix is what we are trying to learn. If our embedding dimension is 2, the weight matrix might look like this (randomly initialized):

$$
\begin{bmatrix}
0.8 & 0.1 \\
0.9 & 0.2 \\
0.4 & 0.7 \\
0.3 & 0.8
\end{bmatrix}
$$

It is a 4×2 matrix (4 rows and 2 columns). So the result, the hidden layer, is a 1×2 matrix (1 row and 2 columns), i.e., a row vector with dimension 2. Because the input is one-hot, this product simply selects the row for "love": [0.9, 0.2].

- Output Layer: The hidden layer is then multiplied by another weight matrix (here 2×4), called the context matrix, which I'll call the "second matrix," to produce the output: after a softmax, a vector of probabilities for every word in the vocabulary. In other words, for each word in a sentence (the target word), the model tries to predict the words that are likely to appear near it (the context words).
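The forward pass through these three layers can be sketched in plain Python. This is a minimal illustration, not word2vec's actual implementation. `W` holds the toy values from this example. The 2×4 second matrix `C` contains made-up numbers, and the softmax step that turns scores into probabilities is assumed here.

```python
import math

vocab = ["I", "love", "machine", "learning"]

# Input -> hidden weights from the example (4 words x 2 dimensions).
W = [[0.8, 0.1],
     [0.9, 0.2],
     [0.4, 0.7],
     [0.3, 0.8]]

# Hidden -> output ("second") weights, 2 dimensions x 4 words.
# Hypothetical values for illustration; in practice they start random.
C = [[0.2, 0.5, 0.1, 0.4],
     [0.6, 0.1, 0.3, 0.2]]

def one_hot(word):
    """1 x V row vector with a 1 at the word's vocabulary index."""
    return [1 if w == word else 0 for w in vocab]

def matvec(row, matrix):
    """Multiply a 1 x n row vector by an n x m matrix, giving a 1 x m row."""
    return [sum(row[i] * matrix[i][j] for i in range(len(row)))
            for j in range(len(matrix[0]))]

def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

x = one_hot("love")      # [0, 1, 0, 0]
h = matvec(x, W)         # hidden layer: the "love" row of W
scores = matvec(h, C)    # one raw score per vocabulary word
probs = softmax(scores)  # probability of each word appearing near "love"

print("hidden:", h)      # hidden: [0.9, 0.2]
for word, p in zip(vocab, probs):
    print(f"{word:>9}: {p:.3f}")
```

The hidden layer is exactly the "love" row of `W`. This is why the rows of that matrix end up serving as the word vectors.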

Learning the Vectors

As the model is trained, it gradually adjusts its internal parameters (the weight matrices) to improve prediction accuracy. This process happens as follows:

- Training iterations: The model repeatedly makes predictions, compares them with the actual data, and updates its weights. Through thousands or even millions of such iterations, the model converges toward weight values that minimize the prediction error over the training data.

- Understanding context: During training, the model begins to recognize patterns in the data. It notices which words frequently appear together and adjusts its weights to better predict those words in the future. Words that often appear in similar contexts receive similar numerical representations (vectors). For example, the words "king" and "queen" might frequently appear alongside words like "throne," "castle," and "royal." Therefore, their vectors will be similar, because the model learns to predict the same surrounding words from each of them.
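Here is a toy trainer that makes this loop concrete, using plain stochastic gradient descent and a full softmax over the four-word vocabulary. It is a sketch under stated assumptions. The function name `train_skipgram`, the learning rate, the epoch count, and the initialization range are all illustrative choices. The original word2vec tool replaces the full softmax with negative sampling or hierarchical softmax to scale to large vocabularies.

```python
import math
import random

def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def train_skipgram(pairs, vocab, dim=2, lr=0.1, epochs=200, seed=0):
    """Toy skip-gram: plain SGD, full softmax (hypothetical helper).

    Returns (W, C, losses): input->hidden weights (V x dim), hidden->output
    weights (dim x V), and the average cross-entropy loss per epoch.
    """
    rng = random.Random(seed)
    idx = {w: i for i, w in enumerate(vocab)}
    V = len(vocab)
    # Small random initial weights (assumed range).
    W = [[rng.uniform(-0.5, 0.5) for _ in range(dim)] for _ in range(V)]
    C = [[rng.uniform(-0.5, 0.5) for _ in range(V)] for _ in range(dim)]
    losses = []
    for _ in range(epochs):
        total = 0.0
        for target, context in pairs:
            t, c = idx[target], idx[context]
            h = W[t]  # hidden layer = row of W for the target word
            scores = [sum(h[k] * C[k][j] for k in range(dim)) for j in range(V)]
            probs = softmax(scores)
            total += -math.log(probs[c])  # loss for the true context word
            # Gradient w.r.t. each score: predicted minus actual probability.
            err = [p - (1.0 if j == c else 0.0) for j, p in enumerate(probs)]
            # Gradient w.r.t. the hidden layer, taken before C changes.
            grad_h = [sum(C[k][j] * err[j] for j in range(V)) for k in range(dim)]
            for k in range(dim):
                for j in range(V):
                    C[k][j] -= lr * h[k] * err[j]
            for k in range(dim):
                W[t][k] -= lr * grad_h[k]
        losses.append(total / len(pairs))
    return W, C, losses


vocab = ["I", "love", "machine", "learning"]
pairs = [("I", "love"), ("love", "I"), ("love", "machine"),
         ("machine", "love"), ("machine", "learning"), ("learning", "machine")]
W, C, losses = train_skipgram(pairs, vocab)
print(f"average loss: {losses[0]:.3f} -> {losses[-1]:.3f}")
```

On this tiny corpus the average loss should fall across epochs. After training, each row of `W` is the learned vector for the corresponding word in `vocab`.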

Extracting the Vectors

After training, the rows of the weight matrix between the input layer and the hidden layer become the word vectors. For example, if our weight matrix is:

$$
\begin{bmatrix}
0.8 & 0.1 \\
0.9 & 0.2 \\
0.4 & 0.7 \\
0.3 & 0.8
\end{bmatrix}
$$

The word vectors are:

- “I”: [0.8, 0.1]

- “love”: [0.9, 0.2]

- “machine”: [0.4, 0.7]

- "learning": [0.3, 0.8]

Note: Each row directly corresponds to a word in the vocabulary.
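Extracting the vectors amounts to reading off the rows. Here is a minimal sketch using the example matrix. The names `word_vectors`, `one_hot`, and `hidden` are illustrative. The final check shows why the rows are the word vectors: multiplying a one-hot input by the matrix selects exactly one row.

```python
vocab = ["I", "love", "machine", "learning"]

# Trained input -> hidden weights (the example values).
W = [[0.8, 0.1],
     [0.9, 0.2],
     [0.4, 0.7],
     [0.3, 0.8]]

# Row i of W is the vector for vocab[i].
word_vectors = {word: row for word, row in zip(vocab, W)}
print(word_vectors["love"])  # [0.9, 0.2]

# Same result via the forward pass: one-hot input times W.
def one_hot(word):
    return [1 if w == word else 0 for w in vocab]

x = one_hot("machine")
hidden = [sum(x[i] * W[i][j] for i in range(len(vocab))) for j in range(2)]
assert hidden == word_vectors["machine"]  # [0.4, 0.7]
```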

These vectors are dense representations of the words, capturing their meanings based on the contexts in which they appear. The dense vectors are not just about direct word co-occurrence; they also capture deeper semantic similarities. For instance, "king" and "queen" might have similar vectors because they both relate to royalty and so appear in similar contexts, even if they don't always appear together in text.
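One way to quantify "similar vectors" is cosine similarity, a common choice assumed here since the text does not name a specific measure. Below it is applied to the toy vectors from this example. These vectors are illustrative values, not the output of real training, so the numbers only demonstrate the calculation.

```python
import math

word_vectors = {
    "I": [0.8, 0.1],
    "love": [0.9, 0.2],
    "machine": [0.4, 0.7],
    "learning": [0.3, 0.8],
}

def cosine(u, v):
    """Cosine of the angle between u and v: 1.0 means same direction."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

print(f"{cosine(word_vectors['machine'], word_vectors['learning']):.3f}")  # 0.987
print(f"{cosine(word_vectors['I'], word_vectors['learning']):.3f}")        # 0.465
```

Cosine similarity ignores vector length and compares only direction, which is why it is often preferred over raw dot products for comparing embeddings.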

Note: While the weight matrix from the input layer to the hidden layer, as described above, is typically used for the embeddings, the weight matrix from the hidden layer to the output layer can also be used to obtain word embeddings, and some studies have explored combining both matrices to improve the quality of the embeddings. Each column of the second weight matrix can be interpreted as an embedding, but these columns represent words in their role as context words to be predicted rather than serving as direct word embeddings. This is a more indirect approach.
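Below is a minimal sketch of reading a word's vector from each matrix and combining the two. Averaging is only one simple option assumed here; summing or concatenating are other possibilities. The 2×4 second matrix `C` contains hypothetical values, and the helper names are illustrative.

```python
vocab = ["I", "love", "machine", "learning"]

# Input -> hidden weights: row i is the input vector of vocab[i].
W = [[0.8, 0.1],
     [0.9, 0.2],
     [0.4, 0.7],
     [0.3, 0.8]]

# Hidden -> output ("second") weights, 2 x 4: column j is the context
# vector of vocab[j]. Hypothetical values, for illustration only.
C = [[0.2, 0.5, 0.1, 0.4],
     [0.6, 0.1, 0.3, 0.2]]

def input_vector(word):
    """Row of W for `word`."""
    return W[vocab.index(word)]

def context_vector(word):
    """Column of C for `word`."""
    j = vocab.index(word)
    return [C[k][j] for k in range(len(C))]

def combined_vector(word):
    """Average of the input and context vectors (one assumed combination)."""
    return [(a + b) / 2 for a, b in zip(input_vector(word), context_vector(word))]

print(context_vector("love"))   # [0.5, 0.1]
print(combined_vector("love"))  # roughly [0.7, 0.15]
```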

Word2Vec Summary

The Skip-gram model is trained to predict the context words given a target word. By doing so, it learns to capture the relationships between words based on their co-occurrence patterns in the corpus. This predictive task is what drives the learning of meaningful word embeddings, which can then be used for various natural language processing tasks.

In general, to extract embeddings from a deep learning network, the network usually needs to be trained on a task that involves learning meaningful representations of the input data. Examples include classification (assigning input data to one of several predefined categories), language modeling (predicting the next word in a sequence given the previous words), autoencoding (reconstructing the input data from a compressed representation), and more. As we saw above, the weights of different layers of a neural network can be used as embeddings.