Statistics and Mathematics

- Foundation for Understanding Data: Statistics and mathematics supply the basic framework required to understand and analyze data.

- Concepts in Mathematics:

Linear Algebra

Calculus

Probability Theory

- Statistical Methods:

Hypothesis Testing

Regression Analysis

Bayesian Inference

- Algorithm and Model Development:

Quantifying Relationships and Uncertainty

The interdisciplinary nature of data science is a major strength, allowing it to tackle complex problems by combining diverse skills and knowledge from various fields. This approach makes it possible to apply data science to a wide range of sectors, which opens up new application areas and produces more complete solutions. For example, a healthcare data scientist can forecast disease risks by drawing on computer science, statistics, and medical knowledge. Data science also promotes collaboration and integrates different viewpoints, which speeds up innovation. Ultimately, its adaptability and effectiveness result from its ability to draw on multiple disciplines.

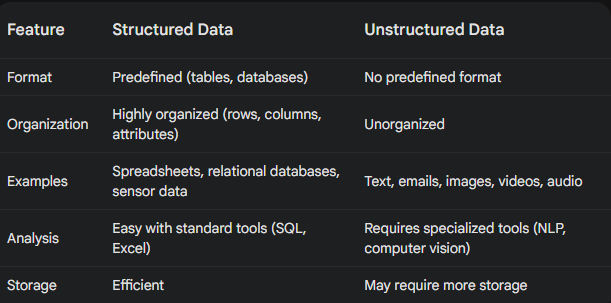

It is important to understand the distinction between structured and unstructured data in order to manage and analyze information efficiently in a wide range of sectors, such as web design and application development.

Structured data is well organized and fits readily into preset formats, such as databases or tables. It is arranged in rows and columns, with each column denoting a different property or attribute of the data.

Customer Database: Has columns with information such as name, address, and customer ID.

Financial Data: Sales data organized by product, region, and date.

Sensor Data: Temperature measurements obtained from a sensor at regular intervals.

Advantages

Easy to Search, Query, and Analyze: Standard tools can be used to retrieve and manipulate data efficiently.

Efficient Storage and Retrieval: Well suited to relational database storage.

Fit for Machine Learning and Statistical Analysis: Structured formats make it straightforward to apply algorithms.
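To make the benefits above concrete, here is a minimal sketch using Python's built-in `sqlite3` module. The table name `sales`, its columns, and every value in it are hypothetical and chosen only for illustration. The point is that once every row shares the same columns, a single query can filter and aggregate the data.

```python
import sqlite3

# Hypothetical sales rows: (product, region, sale_date, amount).
rows = [
    ("widget", "north", "2024-01-05", 120.0),
    ("widget", "south", "2024-01-06", 80.0),
    ("gadget", "north", "2024-01-06", 200.0),
    ("gadget", "south", "2024-01-07", 50.0),
]

# An in-memory relational database; nothing is written to disk.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE sales (product TEXT, region TEXT, sale_date TEXT, amount REAL)"
)
conn.executemany("INSERT INTO sales VALUES (?, ?, ?, ?)", rows)

# Because the schema is fixed, one declarative query groups and sums the rows.
totals = dict(
    conn.execute("SELECT region, SUM(amount) FROM sales GROUP BY region").fetchall()
)
conn.close()

print(totals)
```

In day-to-day data science work the same grouping would more commonly be done with a Pandas DataFrame; the standard library is used here only to keep the sketch dependency-free.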

Unstructured data does not follow a predetermined format or schema. It is frequently composed mainly of text, but it may also contain images, videos, and audio files.

Text and email messages

Social media posts

News articles

Medical imaging scans

Surveillance footage

Challenges

Difficult to Search and Analyze: Needs specialized tools or techniques to extract meaningful insights.

Considerable Preprocessing Is Required: Before analysis, data must be organized and processed.

Storage Requirements: Due to its complexity, unstructured data frequently needs more storage space than structured data.
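As a rough illustration of the preprocessing step, the sketch below turns free text into word counts using only the standard library. The helper name `tokenize` and the sample messages are assumptions made for this example; real pipelines usually involve more steps, such as stop-word removal or stemming.

```python
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphabetic word tokens."""
    return re.findall(r"[a-z']+", text.lower())


# Hypothetical free-text messages; no fixed schema describes their content.
messages = [
    "Great product, fast delivery!",
    "Delivery was late. Product is fine.",
]

# After tokenizing, the text becomes a structured table of word -> count.
word_counts = Counter(token for msg in messages for token in tokenize(msg))

print(word_counts.most_common(2))
```

Once the text has been reduced to counts like these, it can be stored and queried in the same way as structured data.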

Python reigns supreme in data science due to its readability and extensive libraries like Pandas (data manipulation) and NumPy (numerical computing). R is another popular choice, particularly for statistical analysis.

Python: The Crown Jewel of Data Science

Readability: Python’s clear, concise syntax makes it easy to learn and understand, even for those without a strong programming background. This leads to faster development and easier collaboration.

Vast Libraries: Python’s rich ecosystem of libraries tailored for data science includes:

Pandas: Essential for data manipulation and analysis, with intuitive data structures and tools.

NumPy: The backbone of numerical computing, offering arrays, matrices, and mathematical functions.

Matplotlib and Seaborn: Widely used for creating informative and visually appealing data visualizations.

Scikit-learn: Comprehensive machine learning library with algorithms for various tasks like classification, regression, and clustering.

Versatility: As a general-purpose language, Python can be used beyond data science, in web development, automation, and scripting, making it useful for any data professional.

R is a popular choice for people with a strong background in statistics because it was created specifically for statistical computing and graphics. It excels in the following areas:

Statistical Focus: R is designed with data analysis and visualization in mind.

Specialized Libraries: Provides a wide range of packages for tasks like regression analysis, hypothesis testing, and time series analysis.

Data Visualization: The ggplot2 library is well known for producing beautiful, customizable, publication-quality plots and graphs.

Introduction to Machine Learning

Machine learning (ML) is a subset of artificial intelligence that lets computers learn from experience and improve without explicit programming. It involves creating algorithms that examine data, spot trends, and make decisions or predictions.

Machine Learning Objectives

Prediction: Using past data to make well-informed forecasts about what will happen in the future.

Classification: Sorting data into predefined groups or classes.

Clustering: Grouping related data points according to shared characteristics.

Anomaly Detection: Finding odd or unexpected patterns in data.

Generation: Producing new instances of data that resemble existing data.

Conventional programming involves writing precise instructions, or code, to process particular inputs and generate predictable outputs according to predetermined rules.

Machine learning is the process of training algorithms to recognize patterns and relationships using large datasets. The algorithm then applies what it has learned to make predictions or decisions about previously unseen data. The output is not explicitly programmed; rather, it is based on patterns that are learned.
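The contrast between the two approaches can be sketched with a toy spam filter. Everything here is an assumption made for illustration: the single feature (number of links in a message), the labeled examples, and the function names. The first function hard-codes a rule chosen by a person, while the second derives its rule from the data.

```python
def rule_based_is_spam(num_links):
    """Conventional programming: a person chose and hard-coded the threshold."""
    return num_links > 3


def learn_threshold(examples):
    """Pick the threshold that misclassifies the fewest labeled examples.

    examples: list of (num_links, is_spam) pairs.
    """
    candidates = sorted({n for n, _ in examples})

    def errors(threshold):
        return sum((n > threshold) != label for n, label in examples)

    return min(candidates, key=errors)


# Hypothetical labeled data: (number of links in the message, is it spam?)
training = [(0, False), (1, False), (2, True), (4, True), (5, True), (1, False)]
threshold = learn_threshold(training)


def learned_is_spam(num_links):
    """Machine learning: the threshold came from the training examples."""
    return num_links > threshold


print(threshold, rule_based_is_spam(2), learned_is_spam(2))
```

On this toy data the learned rule flags a message with two links, which the hand-written rule misses. Real models learn many parameters at once, but the principle is the same.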

1. Classification: Assigning data points to predefined groups.

Examples:

- Image recognition

- Medical diagnosis

- Email spam detection

2. Regression: Predicting a continuous numerical value.

Examples:

- Weather forecasting

- Stock market trends

- House prices

3. Clustering: Grouping similar data points without predefined categories.

Examples:

- Anomaly detection

- Social network analysis

- Customer segmentation

4. Dimensionality Reduction: Reducing the number of features in a dataset without sacrificing its essential information.

Examples:

- Data visualization using t-SNE

- Image compression with Principal Component Analysis (PCA)

5. Reinforcement Learning: Training an agent to behave in a way that maximizes a reward signal.

Examples:

- Robotics control

- AI that plays games (e.g., AlphaGo)

Algorithms for Supervised Learning (Learning from Labeled Data)

Linear Regression

Goal: Predicts a continuous output variable from input features.

Example Use Case: Forecasting sales or stock prices.

Key Concept: Minimizes the sum of squared errors to find the best-fitting line.
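For a single input feature, the line that minimizes the sum of squared errors has a closed-form solution: the slope is the covariance of x and y divided by the variance of x, and the intercept follows from the means. The sketch below applies that formula to made-up data; the function name `fit_line` and the sample values are assumptions for this example.

```python
def fit_line(xs, ys):
    """Return (slope, intercept) of the least-squares line for 1-D inputs."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Sum of cross-deviations and sum of squared x-deviations.
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical data lying exactly on the line y = 2x + 1.
xs = [1.0, 2.0, 3.0, 4.0]
ys = [3.0, 5.0, 7.0, 9.0]

slope, intercept = fit_line(xs, ys)
print(slope, intercept)
```

With several input features the same idea is usually solved with matrix operations, for example through NumPy or Scikit-learn.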

Logistic Regression

Goal: Predicts a categorical outcome, such as yes or no.

Example Use Case: Detecting spam or diagnosing diseases.

Key Concept: Models the probability of class membership using a logistic function.
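The logistic function squashes any real number into the range 0 to 1, which is why it can be read as a probability. The sketch below shows only the prediction step. The weights and bias are hypothetical values picked by hand rather than learned from data, and the function names are assumptions for this example.

```python
import math


def sigmoid(z):
    """Logistic function: maps any real number to the interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def predict_proba(features, weights, bias):
    """Probability of the positive class for one example."""
    z = bias + sum(w * x for w, x in zip(weights, features))
    return sigmoid(z)


def predict(features, weights, bias, threshold=0.5):
    """Turn the probability into a yes/no decision."""
    return predict_proba(features, weights, bias) >= threshold


# Hypothetical, hand-picked parameters (not the result of training).
weights, bias = [1.0], -2.0

print(predict_proba([2.0], weights, bias), predict([3.0], weights, bias))
```

Training a logistic regression model means finding the weights and bias that make these probabilities match the labeled examples as closely as possible.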

Decision Trees

Goal: Models decisions and their outcomes as a tree.

Example Use Case: Fraud detection or loan approval.

Key Concept: Nodes represent features, and branches represent decision rules.
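A decision tree can be read as a set of nested if/else rules. The sketch below hard-codes a tiny tree for a loan decision and walks it from the root to a leaf. The features (`income`, `debt`), the thresholds, and the outcomes are all hypothetical; in practice an algorithm learns the splits from labeled data.

```python
# A hand-written (not learned) tree. Internal nodes test one feature against
# a threshold; leaves carry the final decision.
tree = {
    "feature": "income",
    "threshold": 50000,
    "left": {"label": "deny"},            # income <= 50000
    "right": {                            # income > 50000
        "feature": "debt",
        "threshold": 10000,
        "left": {"label": "approve"},     # debt <= 10000
        "right": {"label": "deny"},       # debt > 10000
    },
}


def classify(node, sample):
    """Follow the branch chosen by each decision rule until a leaf is reached."""
    while "label" not in node:
        go_left = sample[node["feature"]] <= node["threshold"]
        node = node["left"] if go_left else node["right"]
    return node["label"]


print(classify(tree, {"income": 60000, "debt": 5000}))
```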

Support Vector Machines (SVMs)

Goal: Identifies the best hyperplane for separating classes.

Example Use Case: High-dimensional classification and regression problems.

Random Forests

Goal: Combines multiple decision trees to improve prediction accuracy.

Example Use Case: Estimating credit risk.

Key Concept: Averaging the output of multiple trees reduces overfitting.

Neural Networks

Goal: Models complex patterns in data.

Example Use Case: Natural language processing and image recognition.

Key Concept: Layers of interconnected neurons apply linear transformations followed by non-linear activations.
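The forward pass of a small network can be sketched in a few lines. The layer sizes, the weights and biases (`W1`, `B1`, `W2`, `B2`), and the choice of ReLU as the activation are assumptions for this example. The values are hand-picked rather than trained.

```python
def dense(inputs, weights, biases):
    """Linear transformation: one weighted sum plus bias per output neuron."""
    return [
        sum(w * x for w, x in zip(row, inputs)) + b
        for row, b in zip(weights, biases)
    ]


def relu(values):
    """Non-linear activation: negative values become zero."""
    return [max(0.0, v) for v in values]


# Hypothetical parameters: 2 inputs -> 2 hidden neurons -> 1 output.
W1 = [[1.0, -1.0], [0.5, 0.5]]
B1 = [0.0, -1.0]
W2 = [[1.0, 2.0]]
B2 = [0.5]


def forward(x):
    """Apply a hidden layer with ReLU, then a linear output layer."""
    hidden = relu(dense(x, W1, B1))
    return dense(hidden, W2, B2)


print(forward([2.0, 1.0]))
```

Training adjusts the weights and biases so that the outputs match the labels; that step is not shown here.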

1. Hierarchical Clustering

- Purpose: Builds a hierarchical tree of clusters.

- Example Use Case: Gene expression analysis.

- Key Concept: Uses agglomerative or divisive approaches to form clusters of various sizes.
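The agglomerative (bottom-up) approach can be sketched as follows: start with every point in its own cluster and repeatedly merge the two closest clusters. This version uses single linkage (distance between the closest members) on one-dimensional points. The function name, the linkage choice, and the sample values are assumptions for this example.

```python
def agglomerative(points, num_clusters):
    """Single-linkage agglomerative clustering of 1-D points."""
    clusters = [[p] for p in points]  # every point starts as its own cluster
    while len(clusters) > num_clusters:
        best = None  # (distance, index_i, index_j) of the closest pair so far
        for i in range(len(clusters)):
            for j in range(i + 1, len(clusters)):
                # Single linkage: distance between the closest members.
                d = min(abs(a - b) for a in clusters[i] for b in clusters[j])
                if best is None or d < best[0]:
                    best = (d, i, j)
        _, i, j = best
        clusters[i] = clusters[i] + clusters[j]  # merge cluster j into i
        del clusters[j]
    return [sorted(c) for c in clusters]


# Hypothetical measurements.
points = [1.0, 1.5, 5.0, 5.2, 9.0]
result = agglomerative(points, 3)
print(result)
```

Recording the order of the merges, rather than stopping at a fixed number of clusters, yields the full tree (dendrogram).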

2. Principal Component Analysis (PCA)

- Purpose: Reduces data dimensionality while preserving most information.

- Example Use Case: Data visualization and algorithm performance improvement.

- Key Concept: Transforms data into orthogonal components capturing maximum variance.
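For two-dimensional data, the first principal component can be found by centering the data, computing the covariance matrix, and extracting its dominant eigenvector. The sketch below uses power iteration for that last step, which is a simplification chosen to avoid external libraries. The function name, the starting vector, and the data are assumptions; libraries such as NumPy or Scikit-learn would normally do this.

```python
import math


def first_principal_component(data, iterations=100):
    """Return the top principal direction and the 1-D projections of 2-D data.

    Assumes the data has non-zero variance and that the starting vector
    (1, 0) is not orthogonal to the dominant direction.
    """
    n = len(data)
    mean_x = sum(x for x, _ in data) / n
    mean_y = sum(y for _, y in data) / n
    centered = [(x - mean_x, y - mean_y) for x, y in data]

    # Entries of the 2x2 sample covariance matrix.
    cxx = sum(x * x for x, _ in centered) / (n - 1)
    cyy = sum(y * y for _, y in centered) / (n - 1)
    cxy = sum(x * y for x, y in centered) / (n - 1)

    # Power iteration: repeatedly multiply by the covariance matrix and
    # normalize; the vector converges to the direction of maximum variance.
    vx, vy = 1.0, 0.0
    for _ in range(iterations):
        wx = cxx * vx + cxy * vy
        wy = cxy * vx + cyy * vy
        norm = math.hypot(wx, wy)
        vx, vy = wx / norm, wy / norm

    projections = [x * vx + y * vy for x, y in centered]
    return (vx, vy), projections


# Hypothetical points lying on the line y = x.
direction, projections = first_principal_component([(1.0, 1.0), (2.0, 2.0), (3.0, 3.0)])
print(direction, projections)
```

Here two features collapse into a single coordinate along the diagonal with no loss of information, because the sample points vary along only one direction.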

3. Association Rule Mining

- Purpose: Finds interesting relationships between items in datasets.

- Example Use Case: Market basket analysis.

- Key Concept: Identifies frequent item co-occurrences.
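Co-occurrence is usually quantified with two measures: support (how often a pair of items appears together) and confidence (how often the second item appears given the first). The sketch below counts both over a handful of invented shopping baskets. The data and function names are assumptions for this example, and full algorithms such as Apriori add pruning steps not shown here.

```python
from collections import Counter
from itertools import combinations

# Hypothetical shopping baskets.
transactions = [
    {"bread", "milk"},
    {"bread", "butter"},
    {"bread", "milk", "butter"},
    {"milk"},
]

item_counts = Counter()
pair_counts = Counter()
for basket in transactions:
    item_counts.update(basket)
    # Sort so each pair is always stored in the same order.
    pair_counts.update(combinations(sorted(basket), 2))


def support(item_a, item_b):
    """Fraction of all baskets that contain both items."""
    pair = tuple(sorted((item_a, item_b)))
    return pair_counts[pair] / len(transactions)


def confidence(antecedent, consequent):
    """Of the baskets containing the antecedent, the fraction that also
    contain the consequent."""
    pair = tuple(sorted((antecedent, consequent)))
    return pair_counts[pair] / item_counts[antecedent]


print(support("bread", "milk"), confidence("butter", "bread"))
```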

4. Reinforcement Learning (Agent learns by interacting with an environment)

- Example Use Case: Robotics, game playing (e.g., AlphaGo), and autonomous systems.

- Key Concept: Agents receive feedback as rewards or penalties and learn actions that maximize cumulative rewards.
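One common way to learn from rewards is tabular Q-learning, sketched below on a toy corridor. The environment (five positions, with a reward of 1 for reaching the rightmost one), the learning rate, the discount factor, and the purely random exploration are all assumptions made for this example. Systems such as AlphaGo rely on far more elaborate methods.

```python
import random

N_STATES = 5          # positions 0..4 in a corridor; position 4 is the goal
ACTIONS = (-1, +1)    # index 0 moves left, index 1 moves right
ALPHA, GAMMA = 0.5, 0.9


def step(state, action):
    """Move within the corridor; reaching the goal yields a reward of 1."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    reward = 1.0 if next_state == N_STATES - 1 else 0.0
    return next_state, reward


def train(episodes=200, seed=0):
    """Learn action values from experience gathered by random exploration."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state = 0
        while state != N_STATES - 1:
            a = rng.randrange(2)  # explore uniformly at random
            next_state, reward = step(state, ACTIONS[a])
            # Q-learning update: move the estimate toward the reward plus the
            # discounted value of the best action in the next state.
            target = reward + GAMMA * max(q[next_state])
            q[state][a] += ALPHA * (target - q[state][a])
            state = next_state
    return q


q_table = train()
# Greedy policy for the non-goal states: pick the action with the higher value.
policy = ["left" if row[0] > row[1] else "right" for row in q_table[:-1]]
print(policy)
```

The agent is never told that moving right is correct; it infers this from the reward it receives at the goal, which propagates backward through the value estimates.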

- Features: Input variables used for predictions.

- Labels: Target values to predict.

- Training Data: Used to train the model.

- Testing Data: Used to evaluate model performance.

- Overfitting: When the model performs well on training data but poorly on new data.

- Underfitting: When the model is too simple to capture data patterns.

- Bias-Variance Tradeoff:

- Bias: Error due to simplistic assumptions, leading to underfitting.

- Variance: Error due to sensitivity to data fluctuations, leading to overfitting.

- Tradeoff: Balancing a model flexible enough to capture real patterns (low bias) against one that is not overly sensitive to the particular training data (low variance) for better model performance.
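These terms can be tied together with a small experiment: fit two deliberately extreme models on training data and compare their errors on held-out test data. The data points and both models are hypothetical. The "memorizer" stands in for a high-variance model and the constant "mean model" for a high-bias one.

```python
def mse(model, data):
    """Mean squared error of a model over (feature, label) pairs."""
    return sum((model(x) - y) ** 2 for x, y in data) / len(data)


# Hypothetical (feature, label) pairs, split into training and testing data.
train_data = [(1.0, 2.3), (2.0, 3.8), (3.0, 6.4), (4.0, 7.7)]
test_data = [(1.4, 2.8), (3.6, 7.2)]


def memorizer(x):
    """High variance: returns the label of the closest training point."""
    _, nearest_y = min(train_data, key=lambda pair: abs(pair[0] - x))
    return nearest_y


mean_y = sum(y for _, y in train_data) / len(train_data)


def mean_model(x):
    """High bias: ignores the feature and always predicts the average label."""
    return mean_y


for name, model in [("memorizer", memorizer), ("mean model", mean_model)]:
    print(name, mse(model, train_data), mse(model, test_data))
```

The memorizer scores a perfect zero on the training data but not on the test data, which is the signature of overfitting. The mean model is poor on both, which is the signature of underfitting. A well-chosen model sits between the two.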

This overview of machine learning, data science, and artificial intelligence has hopefully illuminated the foundational concepts that underpin these rapidly growing fields. Armed with this knowledge, you can begin to identify opportunities to leverage these powerful tools in your own work or personal projects, ultimately contributing to the continuing wave of innovation driven by data and intelligent algorithms.