Day 1 notes from "An Introduction to Statistical Learning: with Applications in Python" by Hastie et al., as part of my Data Science learning documentation.

Here, I'm combining my prior knowledge of Python and machine learning to help visualize the concepts and make the narrative applicable in code.

Machine (statistical) learning (ML) refers to a vast set of tools for understanding data.

It's mainly categorized into:

- Supervised learning: building a (statistical) model for predicting or estimating an output based on one or more inputs.

- Unsupervised learning: building a system (model or algorithm) to learn relationships and structure from data, where there are inputs but no supervising output as in supervised learning.

The difference between supervised and unsupervised learning lies in the availability of output data (synonyms: dependent variable, target variable, or outcome, usually denoted as "y").
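In pandas terms, the difference comes down to whether we split off a `y` column. The helper below, `split_features_target`, is a hypothetical illustration (not part of ISLP or pandas), and the three-row table is made up purely to show the shapes.

```python
import pandas as pd


def split_features_target(df, target=None):
    """Hypothetical helper: return (X, y) for supervised learning,
    or (X, None) when there is no output column (unsupervised learning)."""
    if target is None:
        # Unsupervised: every column is an input and there is no y
        return df, None
    # Supervised: the target column becomes y, the remaining columns are X
    X = df.drop(columns=[target])
    y = df[target]
    return X, y


# Tiny made-up table, only to show the shapes
toy = pd.DataFrame({"age": [25, 40, 58], "year": [2003, 2006, 2009], "wage": [80.0, 120.5, 110.2]})

X_sup, y_sup = split_features_target(toy, target="wage")
X_unsup, y_unsup = split_features_target(toy)
print(X_sup.columns.tolist(), y_sup.name)  # ['age', 'year'] wage
print(X_unsup.shape, y_unsup)              # (3, 3) None
```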

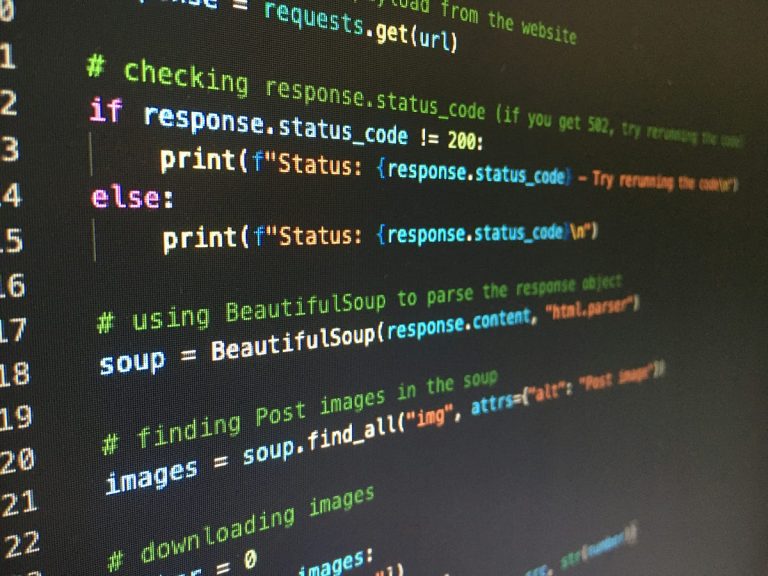

Let's prepare our Python code first by importing the essential libraries.

# Import libraries

import pandas as pd

import numpy as np

import matplotlib.pyplot as plt

import seaborn as sns

from ISLP import load_data  # ISLP is provided by the authors of the book

Here are some examples of problems in machine learning:

Let's import the data.

# Import wage data from ISLP

df_wage = load_data('Wage')

df_wage

This data can be used to examine factors related to wages for a group of men from the Atlantic region of the United States. Our output variable is wage, and the input variables are the remaining variables in our data.

Wages in our data are quantitative values, which means we face a regression problem. In other words, we are interested in predicting a continuous or quantitative output.
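Whether a problem is regression or classification follows from the type of the output variable. As a rough heuristic only (a hypothetical helper, not a library function), we could check the dtype of `y`: numeric outputs suggest regression, while strings, categories, or booleans suggest classification. Numerically coded categories, such as 0/1 labels, would fool this check, so treat it as a sanity check rather than a rule.

```python
import pandas as pd
from pandas.api.types import is_bool_dtype, is_numeric_dtype


def problem_type(y: pd.Series) -> str:
    """Rough heuristic (hypothetical helper): numeric outputs -> regression,
    everything else (strings, categories, booleans) -> classification."""
    if is_numeric_dtype(y) and not is_bool_dtype(y):
        return "regression"
    return "classification"


wage = pd.Series([75.0, 120.3, 98.6])         # quantitative output
direction = pd.Series(["Up", "Down", "Up"])    # qualitative output

print(problem_type(wage))       # regression
print(problem_type(direction))  # classification
```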

Suppose we want to understand the association of an employee's age, education, and calendar year with his wage. We can do this by visualizing the relationship between each input variable and wage using scatterplots and a boxplot.

# Setup the matplotlib subplots

fig, ax = plt.subplots(1, 3, figsize=(15, 4))

# Fig 1: wage vs. age with a LOWESS smoother (lowess=True requires statsmodels)

sns.regplot(data=df_wage, x='age', y='wage', lowess=True, ax=ax[0], scatter_kws={'edgecolor': 'gray', 'facecolor': 'none', 'alpha': 0.5}, line_kws={'color': 'red'})

# Fig 2: wage vs. calendar year

sns.regplot(data=df_wage, x='year', y='wage', lowess=True, ax=ax[1], scatter_kws={'edgecolor': 'gray', 'facecolor': 'none', 'alpha': 0.5}, line_kws={'color': 'red'})

# Fig 3: wage by education level (one column of wages per level)

temp = {}

for i in df_wage['education'].unique():

    temp[i] = df_wage[df_wage['education'] == i]['wage'].reset_index(drop=True)

temp = pd.DataFrame(temp)

# Keep only the level number (1-5) at the start of each education label

temp.columns = temp.columns.str.extract(r'(\d)')[0].astype(int)

temp = temp[[i for i in range(1, 6)]]

temp.plot(kind='box', ax=ax[2], color='black', xlabel='education level', ylabel='wage')

plt.show()

Based on the graphs above, we can see that:

- Wages increase with age until about 40 years old, then decrease slightly and slowly after that.

- Wages increased in a roughly linear (straight-line) fashion between 2003 and 2009, though this rise is very slight relative to the variability in the data.

- Wages are also typically higher for individuals with higher education levels: men with the lowest education level (1) tend to have substantially lower wages than those with the highest education level (5).
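The second and third bullets can be backed with a couple of numbers instead of relying on the plots alone. This sketch assumes the column names used above (`year`, `wage`, `education`); the function names are hypothetical. It computes the least-squares slope of wage on year next to the standard deviation of wage, and the median wage per education level.

```python
import numpy as np
import pandas as pd


def yearly_slope_vs_spread(df):
    """Least-squares slope of wage on year, next to the wage standard deviation."""
    slope, _intercept = np.polyfit(df["year"], df["wage"], deg=1)
    return slope, df["wage"].std()


def median_wage_by_education(df):
    """Median wage within each education level, sorted by level."""
    return df.groupby("education", observed=True)["wage"].median().sort_index()
```

Calling `yearly_slope_vs_spread(df_wage)` and `median_wage_by_education(df_wage)` gives a numeric counterpart to the middle and right panels: a small slope relative to the spread matches "very slight", and rising medians match the boxplot.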

Of course, it's possible that we can get more accurate predictions by combining age, education, and year rather than using each input variable separately. This can be done by fitting a machine learning model to predict a man's wage from his age, education, and calendar year.
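One simple way to combine the three inputs is a linear regression with education one-hot encoded. The sketch below is an assumption on my part rather than the model from the book: it fits ordinary least squares with `numpy.linalg.lstsq`, uses the first education level as the baseline category, and the function name `fit_linear_wage_model` is hypothetical.

```python
import numpy as np
import pandas as pd


def fit_linear_wage_model(df):
    """Least-squares fit of wage on age, year, and one-hot encoded education.

    Assumes df has columns 'age', 'year', 'education', and 'wage'
    (the names used in the ISLP Wage data above)."""
    # One-hot encode education, dropping the first level as the baseline
    X = pd.get_dummies(df[["age", "year", "education"]], columns=["education"],
                       drop_first=True, dtype=float)
    X.insert(0, "intercept", 1.0)
    y = df["wage"].to_numpy(dtype=float)
    # Ordinary least squares: minimize ||y - X b||^2
    coef, *_ = np.linalg.lstsq(X.to_numpy(dtype=float), y, rcond=None)
    return pd.Series(coef, index=X.columns)
```

On the ISLP data, `fit_linear_wage_model(df_wage)` returns one coefficient per column: intercept, age, year, and one offset per non-baseline education level. A straight-line age term cannot capture the rise-then-fall pattern in the left panel, so this is a starting point rather than a final model.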

Let's import the data.

# Import stock market data from ISLP

df_smarket = load_data('Smarket')

df_smarket

This data contains the daily movements in the Standard & Poor's 500 (S&P) stock index over a 5-year period between 2001 and 2005. In this case, we are interested in predicting a qualitative or categorical output, i.e., today's stock market direction. This type of problem is called a classification problem. A model that could accurately predict the direction in which the market will move would be very useful!

Let's get to know the data a little by checking whether the lag1, lag2, and lag3 percentage changes in the S&P differ between days on which the market goes up and days on which it goes down. We can do it by creating boxplots as follows:

# Setup the matplotlib subplots

fig, ax = plt.subplots(1, 3, figsize=(14, 4))

# Fig 1: yesterday's percentage change (Lag1), split by today's direction

temp = {}

for i in df_smarket['Direction'].unique():

    temp[i] = df_smarket[df_smarket['Direction'] == i]['Lag1'].reset_index(drop=True)

temp = pd.DataFrame(temp)

temp.plot(kind='box', color='black', ax=ax[0], xlabel="Today's Direction", ylabel='Percentage Change in S&P', title='Yesterday')

# Fig 2: percentage change two days earlier (Lag2)

temp = {}

for i in df_smarket['Direction'].unique():

    temp[i] = df_smarket[df_smarket['Direction'] == i]['Lag2'].reset_index(drop=True)

temp = pd.DataFrame(temp)

temp.plot(kind='box', color='black', ax=ax[1], xlabel="Today's Direction", ylabel='Percentage Change in S&P', title='Two Days Previous')

# Fig 3: percentage change three days earlier (Lag3)

temp = {}

for i in df_smarket['Direction'].unique():

    temp[i] = df_smarket[df_smarket['Direction'] == i]['Lag3'].reset_index(drop=True)

temp = pd.DataFrame(temp)

temp.plot(kind='box', color='black', ax=ax[2], xlabel="Today's Direction", ylabel='Percentage Change in S&P', title='Three Days Previous')

plt.show()

Based on the graphs above, there is no visible difference in the percentage changes in the S&P between days on which today's direction is up and days on which it is down, whether for lag1 (yesterday), lag2 (two days earlier), or lag3 (three days earlier). This suggests that there is no simple strategy for predicting how the market will move based on these 3 variables. If the pattern were that simple, anyone could adopt a simple trading strategy to generate profits from the market. Instead, this is the kind of problem where we turn to a machine learning model to try to predict today's market direction, keeping in mind that the signal in these lag variables appears weak.
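A useful yardstick for "accurately" is the accuracy of a rule that ignores the inputs entirely and always predicts the most common direction. The sketch below computes that baseline; the function name is hypothetical and the five labels are made up just to show the arithmetic (3 of 5 are "Up", so the baseline is 0.6).

```python
import pandas as pd


def majority_baseline_accuracy(direction: pd.Series) -> float:
    """Accuracy of always predicting the most frequent class."""
    counts = direction.value_counts()  # sorted from most to least frequent
    return float(counts.iloc[0] / counts.sum())


# Made-up labels, only to show the calculation
labels = pd.Series(["Up", "Down", "Up", "Up", "Down"])
print(majority_baseline_accuracy(labels))  # 0.6
```

Applied to `df_smarket['Direction']`, it gives the bar that any classifier for this data should clear before we call it useful.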

Another important class of problems in machine learning involves situations in which we only observe input variables, with no corresponding output; this is called unsupervised learning. Unlike in the previous examples, here we are not trying to predict an output variable.

Let's look at an example dataset of gene expression measurements:

# Import gene expression data

df_gen = load_data('NCI60')

df_gen

This data consists of 6,830 gene expression measurements for each of 64 cancer cell lines. Instead of predicting a particular output variable, we are interested in determining whether there are groups, or clusters, among the cell lines based on their gene expression measurements. This is a difficult question to address, in part because there are thousands of gene expression measurements per cell line, making it hard to visualize the data.

Here, we can use unsupervised learning methods such as dimensionality reduction and clustering to better understand the patterns in our data.
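To see what dimensionality reduction with PCA actually computes, here is a from-scratch NumPy sketch (the function name `pca_scores` is hypothetical, not part of ISLP or scikit-learn). It centers each column and takes a singular value decomposition; scikit-learn's `PCA` documentation describes the same idea (input centered but not scaled, then an SVD), though the signs of the components may differ from scikit-learn's output because each component is only defined up to a sign flip. It also returns the share of variance each component explains, one way to quantify how much information a two-dimensional summary keeps.

```python
import numpy as np


def pca_scores(X, n_components=2):
    """PCA via SVD: center the columns, then project onto the top right-singular vectors.

    Returns the component scores (n_samples x n_components) and the
    fraction of total variance each returned component explains."""
    X = np.asarray(X, dtype=float)
    Xc = X - X.mean(axis=0)                  # center each feature
    U, S, Vt = np.linalg.svd(Xc, full_matrices=False)
    scores = Xc @ Vt[:n_components].T        # equals U[:, :k] * S[:k]
    explained = S**2 / np.sum(S**2)          # variance share per component
    return scores, explained[:n_components]
```

With the gene expression data, `pca_scores(df_gen['data'], 2)` should give two columns comparable to `Z` below, possibly with flipped signs, plus the fraction of variance those two components retain.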

Let’s code!

# PCA for the first two components

from sklearn.decomposition import PCA

pca = PCA(n_components=2)

Z = pca.fit_transform(df_gen['data'])

# K-Means with 4 clusters, just for illustration (fixed seed for reproducible labels)

from sklearn.cluster import KMeans

kmeans = KMeans(n_clusters=4, n_init=10, random_state=0)

kmeans.fit(Z)

# Visualize the results

fig, ax = plt.subplots(1, 2, figsize=(10, 4))

# Fig 1

ax[0].scatter(x=Z[:,0], y=Z[:,1], edgecolors='black', facecolor='none')

ax[0].set_xlabel('Z1')

ax[0].set_ylabel('Z2')

# Fig 2

scatter = ax[1].scatter(x=Z[:,0], y=Z[:,1], c=kmeans.labels_)

legendc = ax[1].legend(*scatter.legend_elements(prop='colors'), loc="upper left", title="Cluster")

ax[1].set_xlabel('Z1')

ax[1].set_ylabel('Z2')

plt.show()

We're using the first two principal components of the data, which summarize the 6,830 expression measurements for each cell line down to two numbers, or dimensions. While it is likely that this dimension reduction has resulted in some loss of information, it is now possible to visually examine the data for evidence of clustering. Selecting the number of clusters is often a difficult problem. In the graph above, we use 4 clusters for the sake of illustration. Based on this graph, there is evidence that cell lines with similar characteristics tend to be located near each other in this two-dimensional representation.
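For intuition about what `KMeans` does under the hood, here is a minimal NumPy sketch of Lloyd's algorithm, the classic k-means procedure: alternate between assigning each point to its nearest centroid and moving each centroid to the mean of its points. It is a simplified stand-in, not scikit-learn's implementation, which uses k-means++ initialization and several restarts (`n_init`); the function names here are hypothetical. It also returns the within-cluster sum of squares (WCSS), the quantity k-means tries to minimize.

```python
import numpy as np


def _assign(X, centroids):
    # Index of the nearest centroid (squared Euclidean distance) for each row
    d2 = ((X[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
    return d2.argmin(axis=1)


def kmeans_lloyd(X, k, n_iter=100, seed=0):
    """Minimal Lloyd's algorithm: returns (labels, centroids, within-cluster SS)."""
    X = np.asarray(X, dtype=float)
    rng = np.random.default_rng(seed)
    # Random initialization: k distinct rows of X
    centroids = X[rng.choice(len(X), size=k, replace=False)]
    for _ in range(n_iter):
        labels = _assign(X, centroids)
        # Update step: each centroid moves to the mean of its assigned points
        # (an empty cluster keeps its previous centroid)
        new_centroids = np.array([
            X[labels == j].mean(axis=0) if np.any(labels == j) else centroids[j]
            for j in range(k)
        ])
        if np.allclose(new_centroids, centroids):
            break
        centroids = new_centroids
    labels = _assign(X, centroids)
    wcss = float(((X - centroids[labels]) ** 2).sum())
    return labels, centroids, wcss
```

To get a feel for the choice of k, one could run `kmeans_lloyd(Z, k)` for k = 1 to 6 and plot the WCSS values (the "elbow" heuristic). The best achievable WCSS never increases as k grows, so the curve alone cannot settle the question, which is part of why choosing the number of clusters is hard.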

To be continued…